4.2: Cofactor Expansions


- Learn to recognize which methods are best suited to compute the determinant of a given matrix.

- Recipes: the determinant of a \(3\times 3\) matrix, compute the determinant using cofactor expansions.

- Vocabulary words: minor, cofactor.

In this section, we give a recursive formula for the determinant of a matrix, called a cofactor expansion. The formula is recursive in that we will compute the determinant of an \(n\times n\) matrix assuming we already know how to compute the determinant of an \((n-1)\times(n-1)\) matrix.

At the end is a supplementary subsection on Cramer’s rule and a cofactor formula for the inverse of a matrix.

Cofactor Expansions

A recursive formula must have a starting point. For cofactor expansions, the starting point is the case of \(1\times 1\) matrices. The definition of determinant directly implies that

\[ \det\left(\begin{array}{c}a\end{array}\right)=a. \nonumber \]

To describe cofactor expansions, we need to introduce some notation.

Let \(A\) be an \(n\times n\) matrix.

- The \((i,j)\) minor, denoted \(A_{ij}\text{,}\) is the \((n-1)\times (n-1)\) matrix obtained from \(A\) by deleting the \(i\)th row and the \(j\)th column.

- The \((i,j)\) cofactor \(C_{ij}\) is defined in terms of the minor by \[C_{ij}=(-1)^{i+j}\det(A_{ij}).\nonumber\]

Note that the signs of the cofactors follow a “checkerboard pattern.” Namely, \((-1)^{i+j}\) is pictured in this matrix:

\[\left(\begin{array}{cccc}\color{Green}{+}&\color{blue}{-}&\color{Green}{+}&\color{blue}{-} \\ \color{blue}{-}&\color{Green}{+}&\color{blue}{-}&\color{Green}{+} \\ \color{Green}{+}&\color{blue}{-}&\color{Green}{+}&\color{blue}{-} \\ \color{blue}{-}&\color{Green}{+}&\color{blue}{-}&\color{Green}{+}\end{array}\right).\nonumber\]

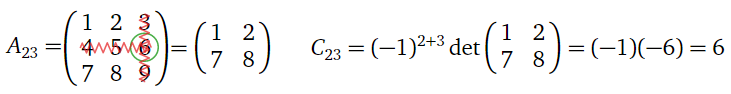

For

\[ A= \left(\begin{array}{ccc}1&2&3\\4&5&6\\7&8&9\end{array}\right), \nonumber \]

compute \(A_{23}\) and \(C_{23}.\)

Solution

Figure \(\PageIndex{1}\)
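The minor and cofactor asked for in this example can also be checked with a few lines of Python. This is only a minimal sketch: the helper names `minor`, `det2`, and `cofactor3` are illustrative choices, not notation from the text, and rows and columns are numbered from 1 to match \(A_{ij}\) and \(C_{ij}\).

```python
def minor(A, i, j):
    """Return the (i, j) minor: delete row i and column j (1-indexed)."""
    return [row[:j - 1] + row[j:] for k, row in enumerate(A, start=1) if k != i]


def det2(M):
    """Determinant of a 2x2 matrix, ad - bc."""
    (a, b), (c, d) = M
    return a * d - b * c


def cofactor3(A, i, j):
    """(i, j) cofactor of a 3x3 matrix: (-1)^(i+j) times det of the minor."""
    return (-1) ** (i + j) * det2(minor(A, i, j))


A = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
print(minor(A, 2, 3))      # [[1, 2], [7, 8]]
print(cofactor3(A, 2, 3))  # 6
```

Here \(A_{23}\) keeps rows 1 and 3 and columns 1 and 2, and \(C_{23} = (-1)^{2+3}(1\cdot 8 - 2\cdot 7) = 6\).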

The cofactors \(C_{ij}\) of an \(n\times n\) matrix are determinants of \((n-1)\times(n-1)\) submatrices. Hence the following theorem is in fact a recursive procedure for computing the determinant.

Let \(A\) be an \(n\times n\) matrix with entries \(a_{ij}\).

- For any \(i = 1,2,\ldots,n\text{,}\) we have \[ \det(A) = \sum_{j=1}^n a_{ij}C_{ij} = a_{i1}C_{i1} + a_{i2}C_{i2} + \cdots + a_{in}C_{in}. \nonumber \] This is called cofactor expansion along the \(i\)th row.

- For any \(j = 1,2,\ldots,n\text{,}\) we have \[ \det(A) = \sum_{i=1}^n a_{ij}C_{ij} = a_{1j}C_{1j} + a_{2j}C_{2j} + \cdots + a_{nj}C_{nj}. \nonumber \] This is called cofactor expansion along the \(j\)th column.

Proof

First we will prove that cofactor expansion along the first column computes the determinant. Define a function \(d\colon\{n\times n\text{ matrices}\}\to\mathbb{R}\) by

\[ d(A) = \sum_{i=1}^n (-1)^{i+1} a_{i1}\det(A_{i1}). \nonumber \]

We want to show that \(d(A) = \det(A)\). Instead of showing that \(d\) satisfies the four defining properties of the determinant, Definition 4.1.1, in Section 4.1, we will prove that it satisfies the three alternative defining properties, Remark: Alternative defining properties, in Section 4.1, which were shown to be equivalent.

- We claim that \(d\) is multilinear in the rows of \(A\). Let \(A\) be the matrix with rows \(v_1,v_2,\ldots,v_{i-1},v+w,v_{i+1},\ldots,v_n\text{:}\) \[A=\left(\begin{array}{ccc}a_{11}&a_{12}&a_{13} \\ b_1+c_1 &b_2+c_2&b_3+c_3 \\ a_{31}&a_{32}&a_{33}\end{array}\right).\nonumber\] Here we let \(b_k\) and \(c_k\) be the entries of \(v\) and \(w\text{,}\) respectively. Let \(B\) and \(C\) be the matrices with rows \(v_1,v_2,\ldots,v_{i-1},v,v_{i+1},\ldots,v_n\) and \(v_1,v_2,\ldots,v_{i-1},w,v_{i+1},\ldots,v_n\text{,}\) respectively: \[B=\left(\begin{array}{ccc}a_{11}&a_{12}&a_{13}\\b_1&b_2&b_3\\a_{31}&a_{32}&a_{33}\end{array}\right)\quad C=\left(\begin{array}{ccc}a_{11}&a_{12}&a_{13}\\c_1&c_2&c_3\\a_{31}&a_{32}&a_{33}\end{array}\right).\nonumber\] We wish to show \(d(A) = d(B) + d(C)\). For \(i'\neq i\text{,}\) the \((i',1)\)-cofactor of \(A\) is the sum of the \((i',1)\)-cofactors of \(B\) and \(C\text{,}\) by multilinearity of the determinants of \((n-1)\times(n-1)\) matrices: \[ \begin{split} (-1)^{3+1}\det(A_{31}) \amp= (-1)^{3+1}\det\left(\begin{array}{cc}a_{12}&a_{13}\\b_2+c_2&b_3+c_3\end{array}\right) \\ \amp= (-1)^{3+1}\det\left(\begin{array}{cc}a_{12}&a_{13}\\b_2&b_3\end{array}\right) + (-1)^{3+1}\det\left(\begin{array}{cc}a_{12}&a_{13}\\c_2&c_3\end{array}\right) \\ \amp= (-1)^{3+1}\det(B_{31}) + (-1)^{3+1}\det(C_{31}). \end{split} \nonumber \] On the other hand, the \((i,1)\)-cofactors of \(A,B,\) and \(C\) are all the same: \[ \begin{split} (-1)^{2+1} \det(A_{21}) \amp= (-1)^{2+1} \det\left(\begin{array}{cc}a_{12}&a_{13}\\a_{32}&a_{33}\end{array}\right) \\ \amp= (-1)^{2+1} \det(B_{21}) = (-1)^{2+1} \det(C_{21}). \end{split} \nonumber \] Now we compute \[ \begin{split} d(A) \amp= (-1)^{i+1} (b_1 + c_1)\det(A_{i1}) + \sum_{i'\neq i} (-1)^{i'+1} a_{i'1}\det(A_{i'1}) \\ \amp= (-1)^{i+1} b_1\det(B_{i1}) + (-1)^{i+1} c_1\det(C_{i1}) \\ \amp\qquad\qquad+ \sum_{i'\neq i} (-1)^{i'+1} a_{i'1}\bigl(\det(B_{i'1}) + \det(C_{i'1})\bigr) \\ \amp= \left[(-1)^{i+1} b_1\det(B_{i1}) + \sum_{i'\neq i} (-1)^{i'+1} a_{i'1}\det(B_{i'1})\right] \\ \amp\qquad\qquad+ \left[(-1)^{i+1} c_1\det(C_{i1}) + \sum_{i'\neq i} (-1)^{i'+1} a_{i'1}\det(C_{i'1})\right] \\ \amp= d(B) + d(C), \end{split} \nonumber \] as desired. This shows that \(d(A)\) satisfies the first defining property in the rows of \(A\).

We still have to show that \(d(A)\) satisfies the second defining property in the rows of \(A\). Let \(B\) be the matrix obtained by scaling the \(i\)th row of \(A\) by a factor of \(c\text{:}\)

\[A=\left(\begin{array}{ccc}a_{11}&a_{12}&a_{13}\\a_{21}&a_{22}&a_{23}\\a_{31}&a_{32}&a_{33}\end{array}\right)\quad B=\left(\begin{array}{ccc}a_{11}&a_{12}&a_{13}\\ca_{21}&ca_{22}&ca_{23}\\a_{31}&a_{32}&a_{33}\end{array}\right).\nonumber\] We wish to show that \(d(B) = c\,d(A)\). For \(i'\neq i\text{,}\) the \((i',1)\)-cofactor of \(B\) is \(c\) times the \((i',1)\)-cofactor of \(A\text{,}\) by multilinearity of the determinants of \((n-1)\times(n-1)\)-matrices: \[ \begin{split} (-1)^{3+1}\det(B_{31}) \amp= (-1)^{3+1}\det\left(\begin{array}{cc}a_{12}&a_{13}\\ca_{22}&ca_{23}\end{array}\right) \\ \amp= (-1)^{3+1}\cdot c\det\left(\begin{array}{cc}a_{12}&a_{13}\\a_{22}&a_{23}\end{array}\right) = (-1)^{3+1}\cdot c\det(A_{31}). \end{split} \nonumber \] On the other hand, the \((i,1)\)-cofactors of \(A\) and \(B\) are the same: \[ (-1)^{2+1} \det(B_{21}) = (-1)^{2+1} \det\left(\begin{array}{cc}a_{12}&a_{13}\\a_{32}&a_{33}\end{array}\right) = (-1)^{2+1} \det(A_{21}). \nonumber \] Now we compute \[ \begin{split} d(B) \amp= (-1)^{i+1}ca_{i1}\det(B_{i1}) + \sum_{i'\neq i}(-1)^{i'+1} a_{i'1}\det(B_{i'1}) \\ \amp= (-1)^{i+1}ca_{i1}\det(A_{i1}) + \sum_{i'\neq i}(-1)^{i'+1} a_{i'1}\cdot c\det(A_{i'1}) \\ \amp= c\left[(-1)^{i+1}a_{i1}\det(A_{i1}) + \sum_{i'\neq i}(-1)^{i'+1} a_{i'1} \det(A_{i'1})\right] \\ \amp= c\,d(A), \end{split} \nonumber \] as desired. This completes the proof that \(d(A)\) is multilinear in the rows of \(A\).

- Now we show that \(d(A) = 0\) if \(A\) has two identical rows. Suppose that rows \(i_1,i_2\) of \(A\) are identical, with \(i_1 \lt i_2\text{:}\) \[A=\left(\begin{array}{cccc}a_{11}&a_{12}&a_{13}&a_{14}\\a_{21}&a_{22}&a_{23}&a_{24}\\a_{31}&a_{32}&a_{33}&a_{34}\\a_{11}&a_{12}&a_{13}&a_{14}\end{array}\right).\nonumber\] If \(i\neq i_1,i_2\) then the \((i,1)\)-cofactor of \(A\) is equal to zero, since \(A_{i1}\) is an \((n-1)\times(n-1)\) matrix with identical rows: \[ (-1)^{2+1}\det(A_{21}) = (-1)^{2+1} \det\left(\begin{array}{ccc}a_{12}&a_{13}&a_{14}\\a_{32}&a_{33}&a_{34}\\a_{12}&a_{13}&a_{14}\end{array}\right) = 0. \nonumber \] The \((i_1,1)\)-minor can be transformed into the \((i_2,1)\)-minor using \(i_2 - i_1 - 1\) row swaps:

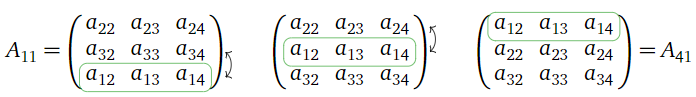

Figure \(\PageIndex{2}\)

Therefore, \[ (-1)^{i_1+1}\det(A_{i_11}) = (-1)^{i_1+1}\cdot(-1)^{i_2 - i_1 - 1}\det(A_{i_21}) = -(-1)^{i_2+1}\det(A_{i_21}). \nonumber \] The two remaining cofactors cancel out, so \(d(A) = 0\text{,}\) as desired.

- It remains to show that \(d(I_n) = 1\). The first term is the only nonzero term in the cofactor expansion of the identity: \[ d(I_n) = 1\cdot(-1)^{1+1}\det(I_{n-1}) = 1. \nonumber \]

This proves that \(\det(A) = d(A)\text{,}\) i.e., that cofactor expansion along the first column computes the determinant.

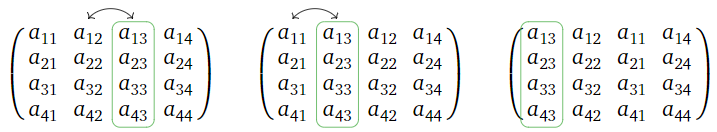

Now we show that cofactor expansion along the \(j\)th column also computes the determinant. By performing \(j-1\) column swaps, one can move the \(j\)th column of a matrix to the first column, keeping the other columns in order. For example, here we move the third column to the first, using two column swaps:

Figure \(\PageIndex{3}\)

Let \(B\) be the matrix obtained by moving the \(j\)th column of \(A\) to the first column in this way. Then the \((i,j)\) minor \(A_{ij}\) is equal to the \((i,1)\) minor \(B_{i1}\text{,}\) since deleting the \(j\)th column of \(A\) is the same as deleting the first column of \(B\). By construction, the \((i,j)\)-entry \(a_{ij}\) of \(A\) is equal to the \((i,1)\)-entry \(b_{i1}\) of \(B\). Since we know that we can compute determinants by expanding along the first column, we have

\[ \det(B) = \sum_{i=1}^n (-1)^{i+1} b_{i1}\det(B_{i1}) = \sum_{i=1}^n (-1)^{i+1} a_{ij}\det(A_{ij}). \nonumber \]

Since \(B\) was obtained from \(A\) by performing \(j-1\) column swaps, we have

\[ \begin{split} \det(A) = (-1)^{j-1}\det(B) \amp= (-1)^{j-1}\sum_{i=1}^n (-1)^{i+1} a_{ij}\det(A_{ij}) \\ \amp= \sum_{i=1}^n (-1)^{i+j} a_{ij}\det(A_{ij}). \end{split} \nonumber \]

This proves that cofactor expansion along the \(j\)th column computes the determinant of \(A\).

By the transpose property, Proposition 4.1.4 in Section 4.1, the cofactor expansion along the \(i\)th row of \(A\) is the same as the cofactor expansion along the \(i\)th column of \(A^T\). Again by the transpose property, we have \(\det(A)=\det(A^T)\text{,}\) so expanding cofactors along a row also computes the determinant.


Note that the theorem actually gives \(2n\) different formulas for the determinant: one for each row and one for each column. For instance, the formula for cofactor expansion along the first column is

\[ \begin{split} \det(A) = \sum_{i=1}^n a_{i1}C_{i1} \amp= a_{11}C_{11} + a_{21}C_{21} + \cdots + a_{n1}C_{n1} \\ \amp= a_{11}\det(A_{11}) - a_{21}\det(A_{21}) + a_{31}\det(A_{31}) - \cdots \pm a_{n1}\det(A_{n1}). \end{split} \nonumber \]

Remember, the determinant of a matrix is just a number, defined by the four defining properties, Definition 4.1.1 in Section 4.1, so to be clear:

You obtain the same number by expanding cofactors along any row or column.
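The claim that all \(2n\) expansions agree can be tried out numerically. The following is a minimal sketch, assuming matrices are stored as lists of rows with indices starting at 0 (so the sign \((-1)^{i+j}\) is unchanged); the function names and the sample matrix are illustrative choices only.

```python
def minor(A, i, j):
    """Delete row i and column j (0-indexed)."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(A) if k != i]


def det_row(A, i=0):
    """Cofactor expansion along row i; minors are expanded along their first row."""
    n = len(A)
    if n == 1:
        return A[0][0]  # base case: det of a 1x1 matrix is its entry
    return sum((-1) ** (i + j) * A[i][j] * det_row(minor(A, i, j)) for j in range(n))


def det_col(A, j=0):
    """Cofactor expansion along column j."""
    n = len(A)
    if n == 1:
        return A[0][0]
    return sum((-1) ** (i + j) * A[i][j] * det_row(minor(A, i, j)) for i in range(n))


A = [[2, 1, 3], [-1, 2, 1], [-2, 2, 3]]
values = [det_row(A, i) for i in range(3)] + [det_col(A, j) for j in range(3)]
print(values)  # [15, 15, 15, 15, 15, 15]
```

Each of the three row expansions and three column expansions returns the same number, as the theorem predicts.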

Now that we have a recursive formula for the determinant, we can finally prove the existence theorem, Theorem 4.1.1 in Section 4.1.

Let us review what we actually proved in Section 4.1. We showed that if \(\det\colon\{n\times n\text{ matrices}\}\to\mathbb{R}\) is any function satisfying the four defining properties of the determinant, Definition 4.1.1 in Section 4.1 (or the three alternative defining properties, Remark: Alternative defining properties), then it also satisfies all of the wonderful properties proved in that section. In particular, since \(\det\) can be computed using row reduction by Recipe: Computing Determinants by Row Reducing, it is uniquely characterized by the defining properties. What we did not prove was the existence of such a function, since we did not know that two different row reduction procedures would always compute the same answer.

Consider the function \(d\) defined by cofactor expansion along the first column:

\[ d(A) = \sum_{i=1}^n (-1)^{i+1} a_{i1}\det(A_{i1}). \nonumber \]

If we assume that the determinant exists for \((n-1)\times(n-1)\) matrices, then there is no question that the function \(d\) exists, since we gave a formula for it. Moreover, we showed in the proof of Theorem \(\PageIndex{1}\) above that \(d\) satisfies the three alternative defining properties of the determinant, again only assuming that the determinant exists for \((n-1)\times(n-1)\) matrices. This proves the existence of the determinant for \(n\times n\) matrices!

This is an example of a proof by mathematical induction. We start by noticing that \(\det\left(\begin{array}{c}a\end{array}\right) = a\) satisfies the four defining properties of the determinant of a \(1\times 1\) matrix. Then we showed that the determinant of \(n\times n\) matrices exists, assuming the determinant of \((n-1)\times(n-1)\) matrices exists. This implies that all determinants exist, by the following chain of logic:

\[ 1\times 1\text{ exists} \;\implies\; 2\times 2\text{ exists} \;\implies\; 3\times 3\text{ exists} \;\implies\; \cdots. \nonumber \]

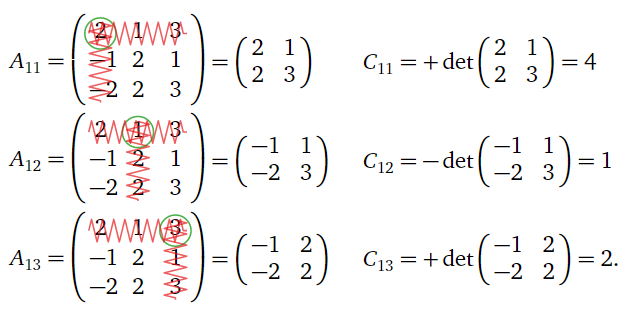

Find the determinant of

\[ A= \left(\begin{array}{ccc}2&1&3\\-1&2&1\\-2&2&3\end{array}\right). \nonumber \]

Solution

We make the somewhat arbitrary choice to expand along the first row. The minors and cofactors are

Figure \(\PageIndex{4}\)

Thus,

\[ \det(A)=a_{11}C_{11}+a_{12}C_{12}+a_{13}C_{13} =(2)(4)+(1)(1)+(3)(2)=15. \nonumber \]

Let us compute (again) the determinant of a general \(2\times2\) matrix

\[ A=\left(\begin{array}{cc}a&b\\c&d\end{array}\right). \nonumber \]

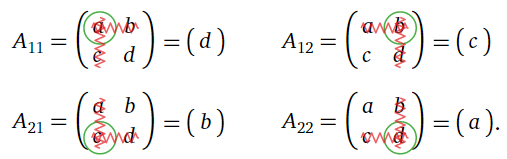

The minors are

Figure \(\PageIndex{5}\)

The minors are all \(1\times 1\) matrices. As we have seen that the determinant of a \(1\times1\) matrix is just the number inside of it, the cofactors are therefore

\begin{align*} C_{11} &= {+\det(A_{11}) = d} & C_{12} &= {-\det(A_{12}) = -c}\\ C_{21} &= {-\det(A_{21}) = -b} & C_{22} &= {+\det(A_{22}) = a} \end{align*}

Expanding cofactors along the first column, we find that

\[ \det(A)=aC_{11}+cC_{21} = ad - bc, \nonumber \]

which agrees with the formulas in Definition 3.5.2 in Section 3.5 and Example 4.1.6 in Section 4.1.

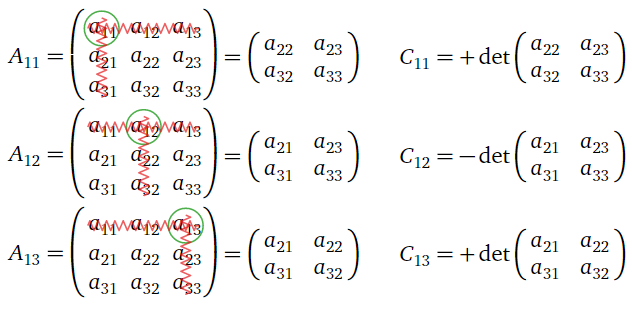

We can also use cofactor expansions to find a formula for the determinant of a \(3\times 3\) matrix. Let us compute the determinant of

\[ A = \left(\begin{array}{ccc}a_{11}&a_{12}&a_{13}\\a_{21}&a_{22}&a_{23}\\a_{31}&a_{32}&a_{33}\end{array}\right) \nonumber \]

by expanding along the first row. The minors and cofactors are:

Figure \(\PageIndex{6}\)

The determinant is:

\begin{align*} \det(A) \amp= a_{11}C_{11} + a_{12}C_{12} + a_{13}C_{13}\\ \amp= a_{11}\det\left(\begin{array}{cc}a_{22}&a_{23}\\a_{32}&a_{33}\end{array}\right) - a_{12}\det\left(\begin{array}{cc}a_{21}&a_{23}\\a_{31}&a_{33}\end{array}\right) + a_{13}\det\left(\begin{array}{cc}a_{21}&a_{22}\\a_{31}&a_{32}\end{array}\right) \\ \amp= a_{11}(a_{22}a_{33}-a_{23}a_{32}) - a_{12}(a_{21}a_{33}-a_{23}a_{31}) + a_{13}(a_{21}a_{32}-a_{22}a_{31})\\ \amp= a_{11}a_{22}a_{33} + a_{12}a_{23}a_{31} + a_{13}a_{21}a_{32} -a_{13}a_{22}a_{31} - a_{11}a_{23}a_{32} - a_{12}a_{21}a_{33}. \end{align*}

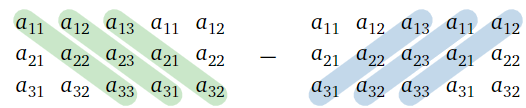

The formula for the determinant of a \(3\times 3\) matrix looks too complicated to memorize outright. Fortunately, there is the following mnemonic device.

To compute the determinant of a \(3\times 3\) matrix, first draw a larger matrix with the first two columns repeated on the right. Then add the products of the downward diagonals together, and subtract the products of the upward diagonals:

\[\det\left(\begin{array}{ccc}a_{11}&a_{12}&a_{13}\\a_{21}&a_{22}&a_{23}\\a_{31}&a_{32}&a_{33}\end{array}\right)=\begin{array}{l} \color{Green}{a_{11}a_{22}a_{33}+a_{12}a_{23}a_{31}+a_{13}a_{21}a_{32}} \\ \color{blue}{\quad -a_{13}a_{22}a_{31}-a_{11}a_{23}a_{32}-a_{12}a_{21}a_{33}}\end{array} \nonumber\]

Figure \(\PageIndex{7}\)

Alternatively, it is not necessary to repeat the first two columns if you allow your diagonals to “wrap around” the sides of a matrix, like in Pac-Man or Asteroids.

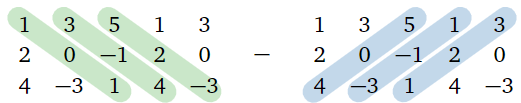

Find the determinant of \(A=\left(\begin{array}{ccc}1&3&5\\2&0&-1\\4&-3&1\end{array}\right)\).

Solution

We repeat the first two columns on the right, then add the products of the downward diagonals and subtract the products of the upward diagonals:

Figure \(\PageIndex{8}\)

\[\det\left(\begin{array}{ccc}1&3&5\\2&0&-1\\4&-3&1\end{array}\right)=\begin{array}{l}\color{Green}{(1)(0)(1)+(3)(-1)(4)+(5)(2)(-3)} \\ \color{blue}{\quad -(5)(0)(4)-(1)(-1)(-3)-(3)(2)(1)}\end{array} =-51.\nonumber\]
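The diagonal mnemonic translates directly into code if the diagonals are allowed to wrap around, which is what the modulus does below. This is a sketch under the assumption that the matrix is a list of three rows; `det3_diagonals` is a made-up name.

```python
def det3_diagonals(A):
    """3x3 determinant: products of downward diagonals minus upward diagonals."""
    # Downward diagonals start in row 1 and move right, wrapping around.
    down = sum(A[0][k] * A[1][(k + 1) % 3] * A[2][(k + 2) % 3] for k in range(3))
    # Upward diagonals start in row 3 and move right, wrapping around.
    up = sum(A[2][k] * A[1][(k + 1) % 3] * A[0][(k + 2) % 3] for k in range(3))
    return down - up


print(det3_diagonals([[1, 3, 5], [2, 0, -1], [4, -3, 1]]))  # -51
```

This shortcut is specific to \(3\times 3\) matrices; the analogous diagonal pattern does not compute the determinant of a \(4\times 4\) matrix.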

Cofactor expansions are most useful when computing the determinant of a matrix that has a row or column with several zero entries. Indeed, if the \((i,j)\) entry of \(A\) is zero, then there is no reason to compute the \((i,j)\) cofactor. In the following example we compute the determinant of a matrix with two zeros in the fourth column by expanding cofactors along the fourth column.

Find the determinant of

\[ A= \left(\begin{array}{cccc}2&5&-3&-2\\-2&-3&2&-5\\1&3&-2&0\\-1&6&4&0\end{array}\right). \nonumber \]

Solution

The fourth column has two zero entries. We expand along the fourth column to find

\[ \begin{split} \det(A) \amp= 2\det\left(\begin{array}{ccc}-2&-3&2\\1&3&-2\\-1&6&4\end{array}\right) -5 \det \left(\begin{array}{ccc}2&5&-3\\1&3&-2\\-1&6&4\end{array}\right) \\ \amp\qquad - 0\det(\text{don't care}) + 0\det(\text{don't care}). \end{split} \nonumber \]

We only have to compute two cofactors. We can find these determinants using any method we wish; for the sake of illustration, we will expand cofactors on one and use the formula for the \(3\times 3\) determinant on the other.

Expanding along the first column, we compute

\begin{align*} & \det \left(\begin{array}{ccc}-2&-3&2\\1&3&-2\\-1&6&4\end{array}\right) \\ & \quad= -2 \det \left(\begin{array}{cc}3&-2\\6&4\end{array}\right) -\det \left(\begin{array}{cc}-3&2\\6&4\end{array}\right) -\det \left(\begin{array}{cc}-3&2\\3&-2\end{array}\right) \\ & \quad= -2 (24) -(-24) -0=-48+24+0=-24. \end{align*}

Using the formula for the \(3\times 3\) determinant, we have

\[\det\left(\begin{array}{ccc}2&5&-3\\1&3&-2\\-1&6&4\end{array}\right)=\begin{array}{l}\color{Green}{(2)(3)(4) + (5)(-2)(-1)+(-3)(1)(6)} \\ \color{blue}{\quad -(2)(-2)(6)-(5)(1)(4)-(-3)(3)(-1)}\end{array} =11.\nonumber\]

Thus, we find that

\[ \det(A)= 2(-24)-5(11)=-103. \nonumber \]
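The strategy of this example, expanding along whichever row or column has the most zeros and skipping the zero entries, can be sketched as follows. The selection rule and the names are assumptions made for illustration; any row or column would give the same answer.

```python
def minor(A, i, j):
    """Delete row i and column j (0-indexed)."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(A) if k != i]


def det(A):
    """Cofactor expansion along the row or column containing the most zeros."""
    n = len(A)
    if n == 1:
        return A[0][0]
    # Score every row and column by its number of zero entries.
    rows = [("row", i, sum(x == 0 for x in A[i])) for i in range(n)]
    cols = [("col", j, sum(A[i][j] == 0 for i in range(n))) for j in range(n)]
    kind, idx, _ = max(rows + cols, key=lambda t: t[2])
    total = 0
    for k in range(n):
        i, j = (idx, k) if kind == "row" else (k, idx)
        if A[i][j] != 0:  # a zero entry contributes nothing, so skip its cofactor
            total += (-1) ** (i + j) * A[i][j] * det(minor(A, i, j))
    return total


A = [[2, 5, -3, -2], [-2, -3, 2, -5], [1, 3, -2, 0], [-1, 6, 4, 0]]
print(det(A))  # -103
```

For this matrix the fourth column is selected, so only two \(3\times 3\) cofactors are ever computed.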

Cofactor expansions are also very useful when computing the determinant of a matrix with unknown entries. Indeed, it is inconvenient to row reduce in this case, because one cannot be sure whether an entry containing an unknown is a pivot or not.

Compute the determinant of this matrix containing the unknown \(\lambda\text{:}\)

\[A=\left(\begin{array}{cccc}-\lambda&2&7&12\\3&1-\lambda&2&-4\\0&1&-\lambda&7\\0&0&0&2-\lambda\end{array}\right).\nonumber\]

Solution

First we expand cofactors along the fourth row:

\[ \begin{split} \det(A) \amp= 0\det\left(\begin{array}{c}\cdots\end{array}\right) + 0\det\left(\begin{array}{c}\cdots\end{array}\right) + 0\det\left(\begin{array}{c}\cdots\end{array}\right) \\ \amp\qquad+ (2-\lambda)\det\left(\begin{array}{ccc}-\lambda&2&7\\3&1-\lambda &2\\0&1&-\lambda\end{array}\right). \end{split} \nonumber \]

We only have to compute one cofactor. To do so, first we clear the \((3,3)\)-entry by performing the column replacement \(C_3 = C_3 + \lambda C_2\text{,}\) which does not change the determinant:

\[ \det\left(\begin{array}{ccc}-\lambda&2&7\\3&1-\lambda &2\\0&1&-\lambda\end{array}\right) = \det\left(\begin{array}{ccc}-\lambda&2&7+2\lambda \\ 3&1-\lambda&2+\lambda(1-\lambda) \\ 0&1&0\end{array}\right). \nonumber \]

Now we expand cofactors along the third row to find

\[ \begin{split} \det\left(\begin{array}{ccc}-\lambda&2&7+2\lambda \\ 3&1-\lambda&2+\lambda(1-\lambda) \\ 0&1&0\end{array}\right) \amp= (-1)^{2+3}\det\left(\begin{array}{cc}-\lambda&7+2\lambda \\ 3&2+\lambda(1-\lambda)\end{array}\right) \\ \amp= -\biggl(-\lambda\bigl(2+\lambda(1-\lambda)\bigr) - 3(7+2\lambda) \biggr) \\ \amp= -\lambda^3 + \lambda^2 + 8\lambda + 21. \end{split} \nonumber \]

Therefore, we have

\[ \det(A) = (2-\lambda)(-\lambda^3 + \lambda^2 + 8\lambda + 21) = \lambda^4 - 3\lambda^3 - 6\lambda^2 - 5\lambda + 42. \nonumber \]
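One way to sanity-check a symbolic answer like this without a computer algebra system is to compare both sides at several integer values of \(\lambda\): two polynomials of degree at most 4 that agree at five or more points are equal. The sketch below does this; the helper names `matrix_at` and `p` are illustrative.

```python
def minor(A, i, j):
    """Delete row i and column j (0-indexed)."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(A) if k != i]


def det(A):
    """Cofactor expansion along the first row, with the 1x1 base case."""
    if len(A) == 1:
        return A[0][0]
    return sum((-1) ** j * A[0][j] * det(minor(A, 0, j)) for j in range(len(A)))


def matrix_at(lam):
    """The matrix of this example with a number substituted for lambda."""
    return [[-lam, 2, 7, 12], [3, 1 - lam, 2, -4], [0, 1, -lam, 7], [0, 0, 0, 2 - lam]]


def p(lam):
    """The claimed determinant, as a polynomial in lambda."""
    return lam**4 - 3 * lam**3 - 6 * lam**2 - 5 * lam + 42


# Seven sample points are more than enough to pin down a quartic.
print(all(det(matrix_at(lam)) == p(lam) for lam in range(-3, 4)))  # True
```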

It is often most efficient to use a combination of several techniques when computing the determinant of a matrix. Indeed, when expanding cofactors on a matrix, one can compute the determinants of the cofactors in whatever way is most convenient. Or, one can perform row and column operations to clear some entries of a matrix before expanding cofactors, as in the previous example.

We have several ways of computing determinants:

- Special formulas for \(2\times 2\) and \(3\times 3\) matrices. This is usually the best way to compute the determinant of a small matrix, except for a \(3\times 3\) matrix with several zero entries.

- Cofactor expansion. This is usually most efficient when there is a row or column with several zero entries, or if the matrix has unknown entries.

- Row and column operations. This is generally the fastest when presented with a large matrix which does not have a row or column with a lot of zeros in it.

- Any combination of the above. Cofactor expansion is recursive, but one can compute the determinants of the minors using whatever method is most convenient. Or, you can perform row and column operations to clear some entries of a matrix before expanding cofactors.

Remember, all methods for computing the determinant yield the same number.
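To illustrate that different methods give the same number, here is a sketch of the row-reduction method using exact fractions, applied to the \(4\times 4\) matrix whose determinant was found above by cofactor expansion. The function name and the pivoting choice (first nonzero entry in the column) are assumptions of this sketch.

```python
from fractions import Fraction


def det_row_reduce(A):
    """Determinant via row reduction to upper-triangular form."""
    M = [[Fraction(x) for x in row] for row in A]
    n = len(M)
    result = Fraction(1)
    for c in range(n):
        # Find a row at or below the diagonal with a nonzero entry in column c.
        p = next((r for r in range(c, n) if M[r][c] != 0), None)
        if p is None:
            return Fraction(0)  # no pivot in this column: determinant is zero
        if p != c:
            M[c], M[p] = M[p], M[c]
            result = -result  # a row swap negates the determinant
        result *= M[c][c]  # accumulate the diagonal entry
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            # Row replacement does not change the determinant.
            M[r] = [a - f * b for a, b in zip(M[r], M[c])]
    return result


A = [[2, 5, -3, -2], [-2, -3, 2, -5], [1, 3, -2, 0], [-1, 6, 4, 0]]
print(det_row_reduce(A))  # -103
```

Row reduction takes on the order of \(n^3\) arithmetic operations, whereas a full cofactor expansion with no zero entries involves on the order of \(n!\) products, which is why row operations win for large matrices.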

Cramer’s Rule and Matrix Inverses

Recall from Proposition 3.5.1 in Section 3.5 that one can compute the inverse of a \(2\times 2\) matrix using the rule

\[ A = \left(\begin{array}{cc}a&b\\c&d\end{array}\right) \quad\implies\quad A^{-1} = \frac 1{\det(A)}\left(\begin{array}{cc}d&-b\\-c&a\end{array}\right). \nonumber \]

We computed the cofactors of a \(2\times 2\) matrix in Example \(\PageIndex{3}\); using \(C_{11}=d,\,C_{12}=-c,\,C_{21}=-b,\,C_{22}=a\text{,}\) we can rewrite the above formula as

\[ A^{-1} = \frac 1{\det(A)}\left(\begin{array}{cc}C_{11}&C_{21}\\C_{12}&C_{22}\end{array}\right). \nonumber \]

It turns out that this formula generalizes to \(n\times n\) matrices.

Let \(A\) be an invertible \(n\times n\) matrix, with cofactors \(C_{ij}\). Then

\[\label{eq:1}A^{-1}=\frac{1}{\det (A)}\left(\begin{array}{ccccc}C_{11}&C_{21}&\cdots&C_{n-1,1}&C_{n1} \\ C_{12}&C_{22}&\cdots &C_{n-1,2}&C_{n2} \\ \vdots&\vdots &\ddots&\vdots&\vdots \\ C_{1,n-1}&C_{2,n-1}&\cdots &C_{n-1,n-1}&C_{n,n-1} \\ C_{1n}&C_{2n}&\cdots &C_{n-1,n}&C_{nn}\end{array}\right).\]

The matrix of cofactors is sometimes called the adjugate matrix of \(A\text{,}\) and is denoted \(\text{adj}(A)\text{:}\)

\[\text{adj}(A)=\left(\begin{array}{ccccc}C_{11}&C_{21}&\cdots &C_{n-1,1}&C_{n1} \\ C_{12}&C_{22}&\cdots &C_{n-1,2}&C_{n2} \\ \vdots&\vdots&\ddots&\vdots&\vdots \\ C_{1,n-1}&C_{2,n-1}&\cdots &C_{n-1,n-1}&C_{n,n-1} \\ C_{1n}&C_{2n}&\cdots &C_{n-1,n}&C_{nn}\end{array}\right).\nonumber\]

Note that the \((i,j)\) cofactor \(C_{ij}\) goes in the \((j,i)\) entry of the adjugate matrix, not the \((i,j)\) entry: the adjugate matrix is the transpose of the cofactor matrix.

In fact, one always has \(A\cdot\text{adj}(A) = \text{adj}(A)\cdot A = \det(A)I_n,\) whether or not \(A\) is invertible.

Use Theorem \(\PageIndex{2}\) to compute \(A^{-1}\text{,}\) where

\[ A = \left(\begin{array}{ccc}1&0&1\\0&1&1\\1&1&0\end{array}\right). \nonumber \]

Solution

The minors are:

\[\begin{array}{lllll}A_{11}=\left(\begin{array}{cc}1&1\\1&0\end{array}\right)&\quad&A_{12}=\left(\begin{array}{cc}0&1\\1&0\end{array}\right)&\quad&A_{13}=\left(\begin{array}{cc}0&1\\1&1\end{array}\right) \\ A_{21}=\left(\begin{array}{cc}0&1\\1&0\end{array}\right)&\quad&A_{22}=\left(\begin{array}{cc}1&1\\1&0\end{array}\right)&\quad&A_{23}=\left(\begin{array}{cc}1&0\\1&1\end{array}\right) \\ A_{31}=\left(\begin{array}{cc}0&1\\1&1\end{array}\right)&\quad&A_{32}=\left(\begin{array}{cc}1&1\\0&1\end{array}\right)&\quad&A_{33}=\left(\begin{array}{cc}1&0\\0&1\end{array}\right)\end{array}\nonumber\]

The cofactors are:

\[\begin{array}{lllll}C_{11}=-1&\quad&C_{12}=1&\quad&C_{13}=-1 \\ C_{21}=1&\quad&C_{22}=-1&\quad&C_{23}=-1 \\ C_{31}=-1&\quad&C_{32}=-1&\quad&C_{33}=1\end{array}\nonumber\]

Expanding along the first row, we compute the determinant to be

\[ \det(A) = 1\cdot C_{11} + 0\cdot C_{12} + 1\cdot C_{13} = -2. \nonumber \]

Therefore, the inverse is

\[ A^{-1} = \frac 1{\det(A)} \left(\begin{array}{ccc}C_{11}&C_{21}&C_{31}\\C_{12}&C_{22}&C_{32}\\C_{13}&C_{23}&C_{33}\end{array}\right) = -\frac12\left(\begin{array}{ccc}-1&1&-1\\1&-1&-1\\-1&-1&1\end{array}\right). \nonumber \]
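The computation in this example can be reproduced with a short sketch of the adjugate formula. Exact fractions are used so that \(A\,A^{-1}\) can be compared with the identity exactly; the function names are illustrative, and the code assumes \(n\geq 2\).

```python
from fractions import Fraction


def minor(A, i, j):
    """Delete row i and column j (0-indexed)."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(A) if k != i]


def det(A):
    """Cofactor expansion along the first row, with the 1x1 base case."""
    if len(A) == 1:
        return A[0][0]
    return sum((-1) ** j * A[0][j] * det(minor(A, 0, j)) for j in range(len(A)))


def adjugate(A):
    """Entry (i, j) of adj(A) is the cofactor C_ji: note the transpose."""
    n = len(A)
    return [[(-1) ** (i + j) * det(minor(A, j, i)) for j in range(n)] for i in range(n)]


def inverse(A):
    """A^{-1} = adj(A) / det(A), valid only when det(A) is nonzero."""
    d = det(A)
    if d == 0:
        raise ValueError("matrix is not invertible")
    return [[Fraction(c, d) for c in row] for row in adjugate(A)]


A = [[1, 0, 1], [0, 1, 1], [1, 1, 0]]
print(det(A))       # -2
print(adjugate(A))  # [[-1, 1, -1], [1, -1, -1], [-1, -1, 1]]
```

Multiplying `A` by `inverse(A)` entry by entry gives the identity matrix, which is a quick way to confirm the result.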

It is clear from the previous example that \(\eqref{eq:1}\) is a very inefficient way of computing the inverse of a matrix, compared to augmenting by the identity matrix and row reducing, as in Subsection Computing the Inverse Matrix in Section 3.5. However, it has its uses.

- If a matrix has unknown entries, then it is difficult to compute its inverse using row reduction, for the same reason it is difficult to compute the determinant that way: one cannot be sure whether an entry containing an unknown is a pivot or not.

- This formula is useful for theoretical purposes. Notice that the only denominators in \(\eqref{eq:1}\) occur when dividing by the determinant: computing cofactors only involves multiplication and addition, never division. This means, for instance, that if the determinant is very small, then any measurement error in the entries of the matrix is greatly magnified when computing the inverse. In this way, \(\eqref{eq:1}\) is useful in error analysis.

The proof of Theorem \(\PageIndex{2}\) uses an interesting trick called Cramer’s Rule, which gives a formula for the entries of the solution of an invertible matrix equation.

Let \(x = (x_1,x_2,\ldots,x_n)\) be the solution of \(Ax=b\text{,}\) where \(A\) is an invertible \(n\times n\) matrix and \(b\) is a vector in \(\mathbb{R}^n \). Let \(A_i\) be the matrix obtained from \(A\) by replacing the \(i\)th column by \(b\). Then

\[ x_i = \frac{\det(A_i)}{\det(A)}. \nonumber \]

Proof

First suppose that \(A\) is the identity matrix, so that \(x = b\). Then the matrix \(A_i\) looks like this:

\[ \left(\begin{array}{cccc}1&0&b_1&0\\0&1&b_2&0\\0&0&b_3&0\\0&0&b_4&1\end{array}\right). \nonumber \]

Expanding cofactors along the \(i\)th row, we see that \(\det(A_i)=b_i\text{,}\) so in this case,

\[ x_i = b_i = \det(A_i) = \frac{\det(A_i)}{\det(A)}. \nonumber \]

Now let \(A\) be a general \(n\times n\) matrix. One way to solve \(Ax=b\) is to row reduce the augmented matrix \((\,A\mid b\,)\text{;}\) the result is \((\,I_n\mid x\,).\) By the case we handled above, it is enough to check that the quantity \(\det(A_i)/\det(A)\) does not change when we do a row operation to \((\,A\mid b\,)\text{,}\) since \(\det(A_i)/\det(A) = x_i\) when \(A = I_n\).

- Doing a row replacement on \((\,A\mid b\,)\) does the same row replacement on \(A\) and on \(A_i\text{:}\)

\[\begin{aligned}\left(\begin{array}{ccc|c}a_{11}&a_{12}&a_{13}&b_1 \\ a_{21}&a_{22}&a_{23}&b_2\\a_{31}&a_{32}&a_{33}&b_3\end{array}\right)\quad\xrightarrow{R_2=R_2-2R_3}\quad&\left(\begin{array}{lll|l}a_{11}&a_{12}&a_{13}&b_1\\ a_{21}-2a_{31}&a_{22}-2a_{32}&a_{23}-2a_{33}&b_2-2b_3 \\ a_{31}&a_{32}&a_{33}&b_3 \end{array}\right) \\ \left(\begin{array}{ccc}a_{11}&a_{12}&a_{13}\\a_{21}&a_{22}&a_{23}\\a_{31}&a_{32}&a_{33}\end{array}\right)\quad \xrightarrow{R_2=R_2-2R_3}\quad&\left(\begin{array}{ccc}a_{11}&a_{12}&a_{13} \\ a_{21}-2a_{31}&a_{22}-2a_{32}&a_{23}-2a_{33} \\ a_{31}&a_{32}&a_{33}\end{array}\right) \\ \left(\begin{array}{ccc}a_{11}&b_{1}&a_{13}\\a_{21}&b_{2}&a_{23}\\a_{31}&b_{3}&a_{33}\end{array}\right)\quad \xrightarrow{R_2=R_2-2R_3}\quad&\left(\begin{array}{ccc}a_{11}&b_{1}&a_{13} \\ a_{21}-2a_{31}&b_{2}-2b_{3}&a_{23}-2a_{33} \\ a_{31}&b_{3}&a_{33}\end{array}\right).\end{aligned}\]

In particular, \(\det(A)\) and \(\det(A_i)\) are unchanged, so \(\det(A_i)/\det(A)\) is unchanged.

- Scaling a row of \((\,A\mid b\,)\) by a factor of \(c\) scales the same row of \(A\) and of \(A_i\) by the same factor:

\[\begin{aligned}\left(\begin{array}{ccc|c}a_{11}&a_{12}&a_{13}&b_1 \\ a_{21}&a_{22}&a_{23}&b_2\\a_{31}&a_{32}&a_{33}&b_3\end{array}\right)\quad\xrightarrow{R_2=cR_2}\quad&\left(\begin{array}{ccc|c}a_{11}&a_{12}&a_{13}&b_1 \\ ca_{21}&ca_{22}&ca_{23}&cb_2\\a_{31}&a_{32}&a_{33}&b_3\end{array}\right) \\ \left(\begin{array}{ccc}a_{11}&a_{12}&a_{13}\\a_{21}&a_{22}&a_{23}\\a_{31}&a_{32}&a_{33}\end{array}\right)\quad\xrightarrow{R_2=cR_2}\quad&\left(\begin{array}{ccc}a_{11}&a_{12}&a_{13}\\ca_{21}&ca_{22}&ca_{23} \\ a_{31}&a_{32}&a_{33}\end{array}\right) \\ \left(\begin{array}{ccc}a_{11}&b_{1}&a_{13}\\a_{21}&b_{2}&a_{23}\\a_{31}&b_{3}&a_{33}\end{array}\right)\quad\xrightarrow{R_2=cR_2}\quad&\left(\begin{array}{ccc}a_{11}&b_{1}&a_{13}\\ca_{21}&cb_{2}&ca_{23} \\ a_{31}&b_{3}&a_{33}\end{array}\right).\end{aligned}\]

In particular, \(\det(A)\) and \(\det(A_i)\) are both scaled by a factor of \(c\text{,}\) so \(\det(A_i)/\det(A)\) is unchanged.

- Swapping two rows of \((\,A\mid b\,)\) swaps the same rows of \(A\) and of \(A_i\text{:}\)

\[\begin{aligned}\left(\begin{array}{ccc|c}a_{11}&a_{12}&a_{13}&b_1 \\ a_{21}&a_{22}&a_{23}&b_2\\a_{31}&a_{32}&a_{33}&b_3\end{array}\right)\quad\xrightarrow{R_1\longleftrightarrow R_2}\quad&\left(\begin{array}{ccc|c}a_{21}&a_{22}&a_{23}&b_2\\a_{11}&a_{12}&a_{13}&b_1\\a_{31}&a_{32}&a_{33}&b_3\end{array}\right) \\ \left(\begin{array}{ccc}a_{11}&a_{12}&a_{13}\\a_{21}&a_{22}&a_{23}\\a_{31}&a_{32}&a_{33}\end{array}\right)\quad\xrightarrow{R_1\longleftrightarrow R_2}\quad&\left(\begin{array}{ccc}a_{21}&a_{22}&a_{23}\\a_{11}&a_{12}&a_{13}\\a_{31}&a_{32}&a_{33}\end{array}\right) \\ \left(\begin{array}{ccc}a_{11}&b_{1}&a_{13}\\a_{21}&b_{2}&a_{23}\\a_{31}&b_{3}&a_{33}\end{array}\right)\quad\xrightarrow{R_1\longleftrightarrow R_2}\quad&\left(\begin{array}{ccc}a_{21}&b_2&a_{23} \\ a_{11}&b_1&a_{13}\\a_{31}&b_3&a_{33}\end{array}\right).\end{aligned}\]

In particular, \(\det(A)\) and \(\det(A_i)\) are both negated, so \(\det(A_i)/\det(A)\) is unchanged.


Compute the solution of \(Ax=b\) using Cramer’s rule, where

\[ A = \left(\begin{array}{cc}a&b\\c&d\end{array}\right) \qquad b = \left(\begin{array}{c}1\\2\end{array}\right). \nonumber \]

Here the coefficients of \(A\) are unknown, but \(A\) may be assumed invertible.

Solution

First we compute the determinants of the matrices obtained by replacing the columns of \(A\) with \(b\text{:}\)

\[\begin{array}{lll}A_1=\left(\begin{array}{cc}1&b\\2&d\end{array}\right)&\qquad&\det(A_1)=d-2b \\ A_2=\left(\begin{array}{cc}a&1\\c&2\end{array}\right)&\qquad&\det(A_2)=2a-c.\end{array}\nonumber\]

Now we compute

\[ \frac{\det(A_1)}{\det(A)} = \frac{d-2b}{ad-bc} \qquad \frac{\det(A_2)}{\det(A)} = \frac{2a-c}{ad-bc}. \nonumber \]

It follows that

\[ x = \frac 1{ad-bc}\left(\begin{array}{c}d-2b\\2a-c\end{array}\right). \nonumber \]
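For concrete numbers, Cramer's rule is easy to sketch in code. The example below plugs in the arbitrary illustrative values \(a=1,\ b=2,\ c=3,\ d=4\) for the entries of \(A\) (these values are not from the text) and the right-hand side \((1,2)\) from the example.

```python
from fractions import Fraction


def minor(A, i, j):
    """Delete row i and column j (0-indexed)."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(A) if k != i]


def det(A):
    """Cofactor expansion along the first row, with the 1x1 base case."""
    if len(A) == 1:
        return A[0][0]
    return sum((-1) ** j * A[0][j] * det(minor(A, 0, j)) for j in range(len(A)))


def cramer(A, b):
    """Solve Ax = b for invertible A via x_i = det(A_i) / det(A)."""
    d = det(A)
    if d == 0:
        raise ValueError("Cramer's rule requires an invertible matrix")
    x = []
    for i in range(len(A)):
        # A_i is A with its i-th column replaced by b.
        A_i = [row[:i] + [b[r]] + row[i + 1:] for r, row in enumerate(A)]
        x.append(Fraction(det(A_i), d))
    return x


x = cramer([[1, 2], [3, 4]], [1, 2])
print([str(v) for v in x])  # ['0', '1/2']
```

This agrees with the formula above: \((d-2b)/(ad-bc) = 0/(-2) = 0\) and \((2a-c)/(ad-bc) = (-1)/(-2) = 1/2\).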

Now we use Cramer’s rule to prove Theorem \(\PageIndex{2}\), the first theorem of this subsection.

The \(j\)th column of \(A^{-1}\) is \(x_j = A^{-1} e_j\). This vector is the solution of the matrix equation

\[ Ax = A\bigl(A^{-1} e_j\bigr) = I_ne_j = e_j. \nonumber \]

By Cramer’s rule, the \(i\)th entry of \(x_j\) is \(\det(A_i)/\det(A)\text{,}\) where \(A_i\) is the matrix obtained from \(A\) by replacing the \(i\)th column of \(A\) by \(e_j\text{:}\)

\[A_i=\left(\begin{array}{cccc}a_{11}&a_{12}&0&a_{14}\\a_{21}&a_{22}&1&a_{24}\\a_{31}&a_{32}&0&a_{34}\\a_{41}&a_{42}&0&a_{44}\end{array}\right)\quad (i=3,\:j=2).\nonumber\]

Expanding cofactors along the \(i\)th column, we see the determinant of \(A_i\) is exactly the \((j,i)\)-cofactor \(C_{ji}\) of \(A\). Therefore, the \(j\)th column of \(A^{-1}\) is

\[ x_j = \frac 1{\det(A)}\left(\begin{array}{c}C_{j1}\\C_{j2}\\ \vdots \\ C_{jn}\end{array}\right), \nonumber \]

and thus

\[ A^{-1} = \left(\begin{array}{cccc}|&|&\quad&| \\ x_1&x_2&\cdots &x_n\\ |&|&\quad &|\end{array}\right) = \frac 1{\det(A)}\left(\begin{array}{ccccc}C_{11}&C_{21}&\cdots &C_{n-1,1}&C_{n1} \\ C_{12}&C_{22}&\cdots &C_{n-1,2}&C_{n2} \\ \vdots &\vdots &\ddots &\vdots &\vdots \\ C_{1,n-1}&C_{2,n-1}&\cdots &C_{n-1,n-1}&C_{n,n-1} \\ C_{1n}&C_{2n}&\cdots &C_{n-1,n}&C_{nn}\end{array}\right). \nonumber \]