4.8: D’Alembert Solution of The Wave Equation


We have solved the wave equation by using Fourier series. But it is often more convenient to use the so-called d’Alembert solution to the wave equation.\(^{1}\) While this solution can be derived using Fourier series as well, it is really an awkward use of those concepts. It is easier and more instructive to derive this solution by making a correct change of variables to get an equation that can be solved by simple integration.

Suppose we have the wave equation

\[\label{eq:1} y_{tt}=a^2 y_{xx}. \]

We wish to solve the equation \(\eqref{eq:1}\) given the conditions

\[ \begin{align} y(0,t) &= y(L,t) = 0 & & \text{for all } t ,\nonumber \\ y(x,0) &= f(x) & & 0 < x < L , \label{eq:2} \\ y_t(x,0) &= g(x) & & 0 < x < L . \end{align} \nonumber \]

Change of Variables

We will transform the equation into a simpler form where it can be solved by simple integration. We change variables to \( \xi =x-at\), \( \eta =x+at\). The chain rule says:

\[\begin{align}\begin{aligned} \frac{\partial}{\partial x} &= \frac{\partial \xi}{\partial x} \frac{\partial}{\partial \xi}+\frac{\partial \eta}{\partial x}\frac{\partial}{\partial \eta}= \frac{\partial}{\partial \xi}+ \frac{\partial}{\partial \eta}, \\ \frac{\partial}{\partial t} &= \frac{\partial \xi}{\partial t}\frac{\partial}{\partial \xi}+\frac{\partial \eta}{\partial t}\frac{\partial}{\partial \eta}= -a \frac{\partial}{\partial \xi} + a\frac{\partial}{\partial \eta}.\end{aligned}\end{align} \nonumber \]

We compute

\[\begin{align}\begin{aligned} y_{xx} &= \frac{\partial^2 y}{\partial x^2}= \left( \frac{\partial}{\partial \xi}+ \frac{\partial}{\partial \eta} \right) \left( \frac{\partial y}{\partial \xi}+ \frac{\partial y}{\partial \eta} \right)= \frac{\partial^2 y}{\partial \xi^2}+2 \frac{\partial^2 y}{\partial \xi \partial \eta}+ \frac{\partial^2 y}{\partial \eta^2}, \\ y_{tt} &= \frac{\partial^2 y}{\partial t^2}= \left( -a \frac{\partial}{\partial \xi}+a \frac{\partial}{\partial \eta} \right) \left( -a \frac{\partial y}{\partial \xi}+ a \frac{\partial y}{\partial \eta} \right)= a^2 \frac{\partial^2 y}{\partial \xi^2}-2a^2 \frac{\partial^2 y}{\partial \xi \partial \eta}+a^2 \frac{\partial^2 y}{\partial \eta^2}. \end{aligned}\end{align} \nonumber \]

In the above computations, we used the fact from calculus that \( \frac{\partial^2 y}{\partial \xi \partial \eta}=\frac{\partial^2 y}{\partial \eta \partial \xi}\). We plug what we got into the wave equation,

\[ 0=a^2 y_{xx}-y_{tt}=4a^2 \frac{\partial^2 y}{\partial \xi \partial \eta}= 4a^2 y_{ \xi \eta}. \nonumber \]

Therefore, the wave equation \(\eqref{eq:1}\) transforms into \( y_{ \xi \eta} =0 \). It is easy to find the general solution to this equation by integrating twice. Keeping \( \xi\) constant, we integrate with respect to \( \eta\) first\(^{2}\) and notice that the constant of integration depends on \( \xi\); for each \( \xi\) we might get a different constant of integration. We get \(y _{ \xi}=C( \xi)\). Next, we integrate with respect to \( \xi\) and notice that the constant of integration must depend on \( \eta\). Thus, \( y= \int C( \xi)d \xi+B( \eta) \). The solution must, therefore, be of the following form for some functions \(A( \xi)\) and \(B( \eta ) \) :

\[ y =A( \xi)+B( \eta)= A(x-at)+B(x+at). \nonumber \]

The solution is a superposition of two functions (waves) traveling at speed \(a\) in opposite directions. The coordinates \(\xi\) and \(\eta\) are called the characteristic coordinates, and a similar technique can be applied to more complicated hyperbolic PDE. And in fact, in Section 1.9 it is used to solve first order linear PDE. Basically, to solve the wave equation (or more general hyperbolic equations) we find certain characteristic curves along which the equation is really just an ODE, or a pair of ODEs. In this case these are the curves where \(\xi\) and \(\eta\) are constant.
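As a quick numerical sanity check of this form, the following sketch picks two smooth profiles and uses centered finite differences to confirm that \(y=A(x-at)+B(x+at)\) satisfies \(y_{tt}=a^2y_{xx}\) up to discretization error. The particular `A`, `B`, the value of `a`, and the step size `h` are illustrative assumptions, not taken from the text.

```python
import math

a = 2.0  # wave speed (arbitrary illustrative value)

def A(s):
    # right-moving profile; any twice-differentiable function would do
    return math.exp(-s * s)

def B(s):
    # left-moving profile
    return math.sin(s)

def y(x, t):
    # superposition of the two traveling waves
    return A(x - a * t) + B(x + a * t)

def second_diff(func, u, h=1e-3):
    # centered second-difference approximation of func''(u)
    return (func(u + h) - 2.0 * func(u) + func(u - h)) / (h * h)

def residual(x, t):
    # y_tt - a^2 y_xx, which should vanish for an exact solution
    y_tt = second_diff(lambda s: y(x, s), t)
    y_xx = second_diff(lambda s: y(s, t), x)
    return y_tt - a * a * y_xx

worst = max(abs(residual(x, t))
            for x in (-1.0, 0.3, 2.0)
            for t in (0.0, 0.5, 1.7))
print(worst)  # small compared with the size of y_tt itself
```

The residual is not exactly zero only because the derivatives are approximated; shrinking `h` moderately should shrink it further until round-off takes over.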

D’Alembert’s Formula

We know what any solution must look like, but we need to solve for the given side conditions. We will just give the formula and see that it works. First let \( F(x)\) denote the odd periodic extension of \( f(x)\), and let \( G(x)\) denote the odd periodic extension of \( g(x)\). Define

\[ A(x)= \frac{1}{2} F(x)- \frac{1}{2a} \int^x_0 G(s) ds,\quad B(x)= \frac{1}{2} F(x)+ \frac{1}{2a} \int^x_0 G(s) ds. \nonumber \]

We claim this \( A(x)\) and \( B(x)\) give the solution. Explicitly, the solution is \(y(x,t)= A(x-at)+B(x+at)\) or in other words:

\[ \begin{align} y(x,t) &= \frac{1}{2}F(x-at)- \frac{1}{2a} \int_0^{x-at} G(s)ds+ \frac{1}{2}F(x+at)+ \frac{1}{2a} \int_0^{x+at} G(s)ds \label{eq:8} \\ &= \frac{F(x-at)+F(x+at)}{2} + \frac{1}{2a} \int_{x-at}^{x+at} G(s)ds. \end{align} \nonumber \]

Let us check that the d’Alembert formula really works.

\[ y(x,0)= \frac{1}{2}F(x)- \frac{1}{2a} \int_0^{x} G(s)ds+ \frac{1}{2}F(x)+ \frac{1}{2a} \int_0^{x} G(s)ds =F(x). \nonumber \]

So far so good. Assume for simplicity \(F\) is differentiable. And we use the first form of \(\eqref{eq:8}\) as it is easier to differentiate. By the fundamental theorem of calculus we have

\[ y_t(x,t)= \frac{-a}{2}F'(x-at)+ \frac{1}{2}G(x-at)+ \frac{a}{2} F'(x+at)+ \frac{1}{2}G(x+at). \nonumber \]

So

\[ y_t(x,0)= \frac{-a}{2}F'(x)+ \frac{1}{2}G(x)+ \frac{a}{2} F'(x)+ \frac{1}{2}G(x)=G(x). \nonumber \]

Yay! We’re smoking now. OK, now the boundary conditions. Note that \(F(x)\) and \(G(x)\) are odd. Also \( \int_0^x G(s)ds\) is an even function of \(x\) because \(G(x)\) is odd (to see this fact, do the substitution \(s=-v\)). So

\[\begin{align}\begin{aligned} y(0,t) &= \frac{1}{2}F(-at)- \frac{1}{2a} \int_0^{-at} G(s)ds+ \frac{1}{2}F(at)+ \frac{1}{2a} \int_0^{at} G(s)ds \\ &= \frac{-1}{2}F(at)- \frac{1}{2a} \int_0^{at} G(s)ds+ \frac{1}{2}F(at)+ \frac{1}{2a} \int_0^{at} G(s)ds=0 .\end{aligned}\end{align} \nonumber \]

Note that \(F(x)\) and \(G(x)\) are \(2L\) periodic. We compute

\[\begin{align}\begin{aligned} y(L,t) &= \frac{1}{2}F(L-at)- \frac{1}{2a} \int_0^{L-at} G(s)ds+ \frac{1}{2}F(L+at)+ \frac{1}{2a} \int_0^{L+at} G(s)ds \\ &= \frac{1}{2}F(-L-at)- \frac{1}{2a} \int_0^{L} G(s)ds- \frac{1}{2a} \int_0^{-at} G(s)ds \\ &\phantom{=} + \frac{1}{2}F(L+at)+ \frac{1}{2a} \int_0^{L} G(s)ds+ \frac{1}{2a} \int_0^{at} G(s)ds \\ &= \frac{-1}{2}F(L+at)- \frac{1}{2a} \int_0^{at} G(s)ds+ \frac{1}{2}F(L+at)+ \frac{1}{2a} \int_0^{at} G(s)ds=0.\end{aligned}\end{align} \nonumber \]

And voilà, it works.
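The same checks can be repeated numerically. The sketch below is one possible implementation of the d’Alembert formula, not the only one: the choices \(f(x)=x(L-x)\), \(g(x)=\sin(\pi x/L)\), \(L=1\), \(a=2\), the helper names, and the midpoint-rule integrator are all assumptions made for illustration.

```python
import math

L = 1.0   # string length (illustrative)
a = 2.0   # wave speed (illustrative)

def f(x):
    # initial position on 0 <= x <= L (illustrative choice)
    return x * (L - x)

def g(x):
    # initial velocity on 0 <= x <= L (illustrative choice)
    return math.sin(math.pi * x / L)

def odd_periodic(func, x):
    # odd 2L-periodic extension: reduce x to [-L, L), then reflect
    r = (x + L) % (2.0 * L) - L
    return func(r) if r >= 0 else -func(-r)

def F(x):
    return odd_periodic(f, x)

def G(x):
    return odd_periodic(g, x)

def integral(func, lo, hi, n=2000):
    # composite midpoint rule; the sign is handled automatically when hi < lo
    h = (hi - lo) / n
    return h * sum(func(lo + (k + 0.5) * h) for k in range(n))

def y(x, t):
    # second form of the d'Alembert formula
    return (0.5 * (F(x - a * t) + F(x + a * t))
            + integral(G, x - a * t, x + a * t) / (2.0 * a))

t = 0.37
dt = 1e-4
print(y(0.3, 0.0) - f(0.3))                           # initial position error
print((y(0.3, dt) - y(0.3, -dt)) / (2 * dt) - g(0.3))  # initial velocity error
print(y(0.0, t), y(L, t))                              # boundary values
```

All four printed numbers should be close to zero; the velocity check uses a centered difference in \(t\), so it is only accurate to roughly the square of `dt`.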

D’Alembert says that the solution is a superposition of two functions (waves) moving in opposite directions at “speed” \(a\). To get an idea of how it works, let us work out an example. Consider the simpler setup

\[\begin{align}\begin{aligned} y_{tt} &=y_{xx}, \\ y(0,t) &=y(1,t)=0, \\ y(x,0) & =f(x), \\ y_t(x,0) & =0.\end{aligned}\end{align} \nonumber \]

Here \(f(x)\) is an impulse of height 1 centered at \(x=0.5\):

\[ f(x) = \left\{ \begin{array}{ccc} 0 & {\rm{if}} & 0 \leq x < 0.45, \\ 20(x-0.45) & {\rm{if}} & 0.45 \leq x < 0.5, \\ 20(0.55-x) & {\rm{if}} & 0.5 \leq x < 0.55, \\ 0 & {\rm{if}} & 0.55 \leq x \leq 1. \end{array} \right. \nonumber \]

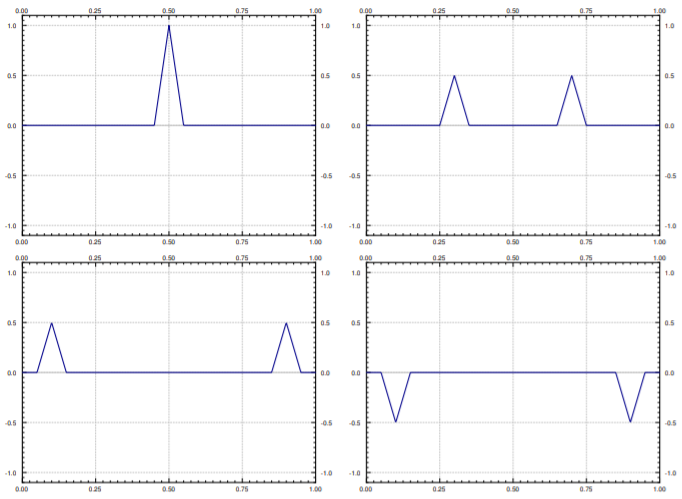

The graph of this impulse is the top left plot in Figure \(\PageIndex{1}\).

Let \(F(x)\) be the odd periodic extension of \(f(x)\). Then from \(\eqref{eq:8}\) we know that the solution is given as

\[ y(x,t)= \frac{F(x-t)+F(x+t)}{2}. \nonumber \]

It is not hard to compute specific values of \( y(x,t)\). For example, to compute \(y(0.1,0.6)\) we notice \(x-t=-0.5\) and \(x+t=0.7\). Now \(F(-0.5)=-f(0.5)=-20(0.55-0.5)=-1\) and \(F(0.7)=f(0.7)=0\). Hence \(y(0.1,0.6)= \frac{-1+0}{2}=-0.5\). As you can see the d’Alembert solution is much easier to actually compute and to plot than the Fourier series solution. See Figure \(\PageIndex{1}\) for plots of the solution \(y\) for several different \(t\).
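The hand computation above can be reproduced in a few lines. This is a minimal sketch for this particular example (\(a=1\), \(L=1\), zero initial velocity); the function names are our own.

```python
def f(x):
    # triangular impulse of height 1 centered at x = 0.5, for 0 <= x <= 1
    if 0.45 <= x < 0.5:
        return 20.0 * (x - 0.45)
    if 0.5 <= x < 0.55:
        return 20.0 * (0.55 - x)
    return 0.0

def F(x):
    # odd 2-periodic extension of f: reduce x to [-1, 1), then reflect
    r = (x + 1.0) % 2.0 - 1.0
    return f(r) if r >= 0 else -f(-r)

def y(x, t):
    # d'Alembert solution with a = 1 and zero initial velocity
    return 0.5 * (F(x - t) + F(x + t))

print(y(0.1, 0.6))    # approximately -0.5, matching the hand computation
print(y(0.25, 0.25))  # approximately 0.5: a half-height peak that has moved left
```

Evaluating `y` on a grid of \(x\) values for a fixed \(t\) gives the data for one frame of a plot such as those in the figure.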

Another Way to Solve for the Side Conditions

It is perhaps easier and more useful to memorize the procedure rather than the formula itself. The important thing to remember is that a solution to the wave equation is a superposition of two waves traveling in opposite directions. That is,

\[y(x,t)=A(x-at)+B(x+at). \nonumber \]

If you think about it, the exact formulas for \(A\) and \(B\) are not hard to guess once you realize what kind of side conditions \(y(x,t)\) is supposed to satisfy. Let us give the formula again, but slightly differently. The best approach is to do this in stages. When \(g(x)=0\) (and hence \(G(x)=0\)) we have the solution

\[ \frac{F(x-at)+F(x+at)}{2}. \nonumber \]

On the other hand, when \(f(x)=0\) (and hence \(F(x)=0\)), we let

\[H(x)=\int_0^x G(s)ds. \nonumber \]

The solution in this case is

\[\frac{1}{2a} \int_{x-at}^{x+at} G(s)ds = \frac{-H(x-at)+H(x+at)}{2a}. \nonumber \]

By superposition we get a solution for the general side conditions \(\eqref{eq:2}\) (when neither \(f(x)\) nor \(g(x)\) is identically zero):

\[\label{eq:21} y(x,t)= \frac{F(x-at)+F(x+at)}{2} + \frac{-H(x-at)+H(x+at)}{2a}. \]

Do note the minus sign before the \(H\), and the \(a\) in the second denominator.
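To see the formula with \(H\) in action, here is a small sketch comparing it against the Fourier series solution for a case where both are available in closed form. The data \(f(x)=\sin(2\pi x/L)\) and \(g(x)=\sin(\pi x/L)\), and the values of `L` and `a`, are illustrative assumptions; they are chosen because their odd periodic extensions are given by the same sine formulas for all \(x\), so \(H(x)=\frac{L}{\pi}\bigl(1-\cos(\pi x/L)\bigr)\).

```python
import math

L, a = 1.0, 3.0  # illustrative values

def F(x):
    # odd periodic extension of f(x) = sin(2 pi x / L); same formula for all x
    return math.sin(2.0 * math.pi * x / L)

def H(x):
    # H(x) = integral from 0 to x of G(s) ds, with G(s) = sin(pi s / L)
    return (L / math.pi) * (1.0 - math.cos(math.pi * x / L))

def y_dalembert(x, t):
    # note the minus sign before the first H and the a in the denominator
    return (0.5 * (F(x - a * t) + F(x + a * t))
            + (-H(x - a * t) + H(x + a * t)) / (2.0 * a))

def y_fourier(x, t):
    # two-term Fourier series solution for the same initial data
    w = math.pi * a / L
    return (math.sin(2.0 * math.pi * x / L) * math.cos(2.0 * w * t)
            + math.sin(math.pi * x / L) * math.sin(w * t) / w)

for (x, t) in [(0.2, 0.0), (0.5, 0.1), (0.9, 1.3)]:
    print(y_dalembert(x, t) - y_fourier(x, t))  # differences near round-off level
```

Dropping the minus sign or the factor \(a\) in `y_dalembert` makes the two solutions disagree, which is a handy way to catch those two common slips.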

Check that the new formula \(\eqref{eq:21}\) satisfies the side conditions \(\eqref{eq:2}\).

Warning: Make sure you use the odd extensions \(F(x)\) and \(G(x)\), when you have formulas for \(f(x)\) and \(g(x)\). The thing is, those formulas in general hold only for \(0<x<L\), and are not usually equal to \(F(x)\) and \(G(x)\) for other \(x\).

Remarks

Let us remark that the formula \(y(x,t) = A(x-at) + B(x+at)\) is the reason why the solution of the wave equation doesn’t get “nicer” as time goes on, that is, why in the examples where the initial conditions had corners, the solution also has corners at every time \(t\).

The corners bring us to another interesting remark. Nobody ever notices at first that our example solutions are not even differentiable (they have corners). In Example \(\PageIndex{1}\) above, the solution is not differentiable whenever \(x=t+0.5\) or \(x=-t+0.5\), for example. Really, to be able to compute \(y_{xx}\) or \(y_{tt}\), you need not one, but two derivatives. Fear not, we could think of a shape that is very nearly \(F(x)\) but does have two derivatives by rounding the corners a little bit, and then the solution would be very nearly \(\frac{F(x-t)+F(x+t)}{2}\) and nobody would notice the switch.

One final remark is what the d’Alembert solution tells us about which part of the initial conditions influences the solution at a certain point. To figure this out, let us suppose for simplicity that the string is very long (perhaps infinite). Since the solution at time \(t\) is

\[y(x,t) = \frac{F(x-at) + F(x+at)}{2} + \frac{1}{2a} \int_{x-at}^{x+at} G(s) \,ds , \nonumber \]

we notice that we have only used the initial conditions in the interval \([x-at,x+at]\). These two endpoints are called the wavefronts, as that is where the front of the wave is at time \(t\) if the string is given an initial (\(t=0\)) disturbance at \(x\). So if \(a=1\), an observer sitting at \(x=0\) at time \(t=1\) has only seen the initial conditions for \(x\) in the range \([-1,1]\) and is blissfully unaware of anything else. This is why, for example, we do not know that a supernova has occurred in the universe until we see its light, millions of years from the time when it did in fact happen.
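The observer at \(x=0\) can be simulated directly. The sketch below, for an infinite string with \(a=1\), evaluates the formula for two sets of initial data that agree on \([-1,1]\) but differ on \(3<x<5\). The specific profiles, the `bump` function, and the midpoint-rule integrator are illustrative assumptions.

```python
import math

a = 1.0  # wave speed

def integral(func, lo, hi, n=2000):
    # composite midpoint rule
    h = (hi - lo) / n
    return h * sum(func(lo + (k + 0.5) * h) for k in range(n))

def solve(F, G, x, t):
    # d'Alembert formula on an infinite string (no extension needed)
    return (0.5 * (F(x - a * t) + F(x + a * t))
            + integral(G, x - a * t, x + a * t) / (2.0 * a))

def F1(x):
    return math.exp(-x * x)

def G1(x):
    return math.cos(x)

def bump(x):
    # extra disturbance supported only on 3 < x < 5, that is, outside [-1, 1]
    return math.sin(math.pi * (x - 3.0) / 2.0) ** 2 if 3.0 < x < 5.0 else 0.0

def F2(x):
    return F1(x) + bump(x)

def G2(x):
    return G1(x) + 10.0 * bump(x)

# At t = 1 the observer at x = 0 depends only on data in [-1, 1]
print(solve(F1, G1, 0.0, 1.0) == solve(F2, G2, 0.0, 1.0))  # True
# At t = 4 the interval [-4, 4] overlaps the bump, so the news has arrived
print(solve(F1, G1, 0.0, 4.0) == solve(F2, G2, 0.0, 4.0))  # False
```

The first comparison is exact rather than approximate because, inside \([-1,1]\), the two sets of initial data are literally the same numbers.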

Footnotes

[1] Named after the French mathematician Jean le Rond d’Alembert (1717 – 1783).

[2] There is nothing special about \(\eta\), you can integrate with \(\xi\) first, if you wish.

Contributors and Attributions

- Jiří Lebl (Oklahoma State University). These pages were supported by NSF grants DMS-0900885 and DMS-1362337.