4.1: Newton's Method

In Chapter 3, we learned how the first and second derivatives of a function influence its graph. In this chapter we explore other applications of the derivative.

Solving equations is one of the most important things we do in mathematics, yet we are surprisingly limited in what we can solve analytically. For instance, equations as simple as \(x^5+x+1=0\) or \(\cos x =x\) cannot be solved by algebraic methods in terms of familiar functions. Fortunately, there are methods that can give us approximate solutions to equations like these. These methods can usually give an approximation correct to as many decimal places as we like. In Section 1.5, we learned about the Bisection Method. This section focuses on another technique (which generally works faster), called Newton's Method.

Newton's Method is built around tangent lines. The main idea is that if \(x\) is sufficiently close to a root of \(f(x)\), then the tangent line to the graph at \((x,f(x))\) will cross the \(x\)-axis at a point closer to the root than \(x\).

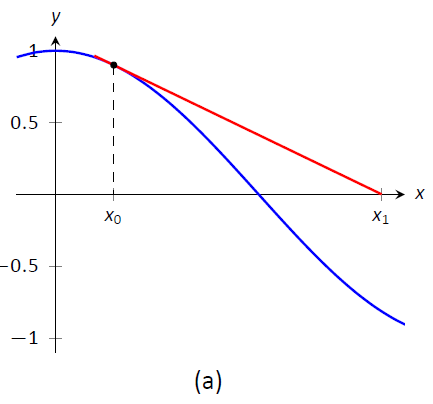

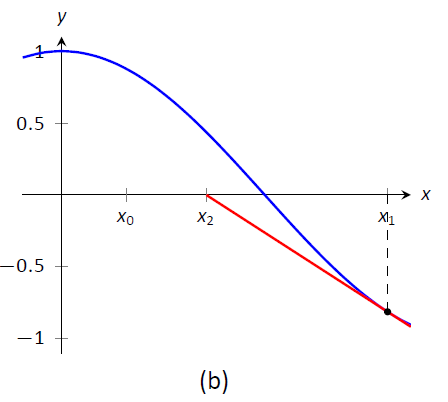

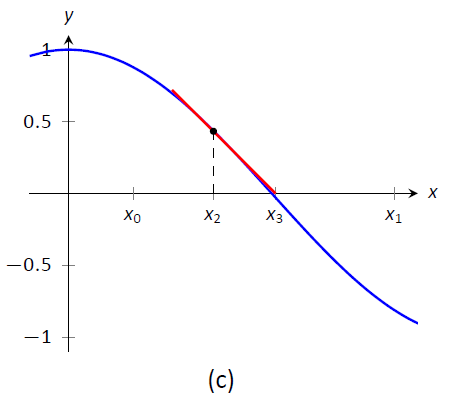

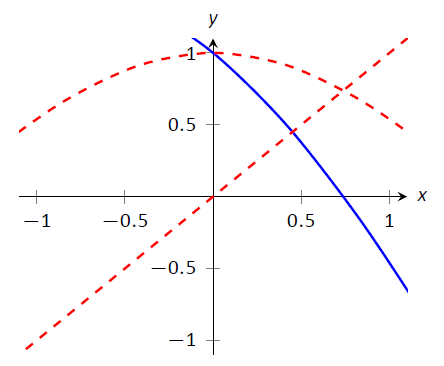

Figure \(\PageIndex{1}\): Demonstrating the geometric concept behind Newton's Method

We start Newton's Method with an initial guess about roughly where the root is. Call this \(x_0\). (See Figure \(\PageIndex{1a}\).) Draw the tangent line to the graph at \((x_0,f(x_0))\) and see where it meets the \(x\)-axis. Call this point \(x_1\). Then repeat the process: draw the tangent line to the graph at \((x_1, f(x_1))\) and see where it meets the \(x\)-axis. (See Figure \(\PageIndex{1b}\).) Call this point \(x_2\). Repeat the process again to get \(x_3\), \(x_4\), etc. This sequence of points will often converge rather quickly to a root of \(f\).

We can use this geometric process to create an algebraic process. Let's look at how we found \(x_1\). We started with the tangent line to the graph at \((x_0,f(x_0))\). The slope of this tangent line is \(f'(x_0)\) and the equation of the line is

\[y=f'(x_0)(x-x_0)+f(x_0).\]

This line crosses the \(x\)-axis when \(y=0\), and the \(x\)-value where it crosses is what we called \(x_1\). So let \(y=0\) and replace \(x\) with \(x_1\), giving the equation:

\[ 0 = f'(x_0)(x_1-x_0)+f(x_0).\]

Now solve for \(x_1\):

\[x_1=x_0-\frac{f(x_0)}{f'(x_0)}.\]

Since we repeat the same geometric process to find \(x_2\) from \(x_1\), we have

\[x_2=x_1-\frac{f(x_1)}{f'(x_1)}.\]

In general, given an approximation \(x_n\), we can find the next approximation, \(x_{n+1}\) as follows:

\[x_{n+1} = x_{n} - \frac{f(x_{n})}{f'(x_{n})}.\]

We summarize this process as follows.

Key Idea 5: Newton's Method

Let \(f\) be a differentiable function on an interval \(I\) with a root in \(I\). To approximate the value of the root, accurate to \(d\) decimal places:

- Choose a value \(x_0\) as an initial approximation of the root. (This is often done by looking at a graph of \(f\).)

- Create successive approximations iteratively; given an approximation \(x_n\), compute the next approximation \(x_{n+1}\) as $$x_{n+1} = x_n - \frac{f(x_n)}{f'(x_n)}.$$

- Stop the iterations when successive approximations do not differ in the first \(d\) places after the decimal point.

Note: Newton's Method is not infallible. The sequence of approximate values may not converge, or it may converge so slowly that one is "tricked" into thinking a certain approximation is better than it actually is. These issues will be discussed at the end of the section.
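The steps of Key Idea 5 can be sketched in Python as follows. This is a minimal illustration, not a definitive implementation: the function name `newton`, the `max_iter` safeguard, and the choice to compare approximations by truncating to \(d\) decimal places are all assumptions made for this sketch. It is applied to \(x^5+x+1=0\), the equation mentioned at the start of this section, with a hypothetical initial guess of \(x_0=-1\).

```python
import math


def newton(f, fprime, x0, d, max_iter=50):
    """Approximate a root of f by Newton's Method, starting from x0.

    Stops when two successive approximations agree in the first d places
    after the decimal point (compared by truncation). The max_iter limit
    is an added safeguard and is not part of Key Idea 5.
    """
    scale = 10 ** d
    x = x0
    for _ in range(max_iter):
        x_next = x - f(x) / fprime(x)  # x_{n+1} = x_n - f(x_n)/f'(x_n)
        if math.trunc(x_next * scale) == math.trunc(x * scale):
            return x_next
        x = x_next
    raise RuntimeError("approximations did not settle within max_iter steps")


# f(x) = x^5 + x + 1, so f'(x) = 5x^4 + 1; the guess x0 = -1 is hypothetical.
root = newton(lambda x: x**5 + x + 1, lambda x: 5 * x**4 + 1, -1.0, 3)
print(root)
```

Comparing truncated values is only one way to read "do not differ in the first \(d\) places"; testing whether the difference of successive approximations is below a small tolerance is a common alternative.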

Let's practice Newton's Method with a concrete example.

Example \(\PageIndex{1}\): Using Newton's Method

Approximate the real root of \(x^3-x^2-1=0\), accurate to the first 3 places after the decimal, using Newton's Method and an initial approximation of \(x_0=1\).

Solution

To begin, we compute \(f'(x)=3x^2-2x\). Then we apply the Newton's Method algorithm, outlined in Key Idea 5.

\[\begin{align} x_1&=1-\frac{f(1)}{f'(1)}=1-\frac{1^3-1^2-1}{3\cdot 1^2-2\cdot 1}=2,\\ x_2&=2-\frac{f(2)}{f'(2)}=2-\frac{2^3-2^2-1}{3\cdot 2^2-2\cdot 2}=1.625,\\ x_3&=1.625-\frac{f(1.625)}{f'(1.625)} = 1.625-\frac{1.625^3-1.625^2-1}{3\cdot 1.625^2-2\cdot 1.625}\approx 1.48579, \\ x_4 &= 1.48579 - \frac{f(1.48579)}{f'(1.48579)} \approx 1.46596,\\ x_5 &= 1.46596 - \frac{f(1.46596)}{f'(1.46596)} \approx 1.46557. \end{align}\]

We performed 5 iterations of Newton's Method to find a root accurate to the first 3 places after the decimal; our final approximation is \(1.465.\) The exact value of the root, to six decimal places, is \(1.465571\); it turns out that our \(x_5\) is accurate to more than just 3 decimal places.
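The five iterations above can be reproduced with a short Python loop. This is a sketch for checking the arithmetic; the names `f`, `fprime`, and `iterates` are chosen here for illustration. Because the loop carries full floating-point precision from step to step rather than the rounded values, later digits may differ slightly from a hand calculation.

```python
def f(x):
    return x**3 - x**2 - 1


def fprime(x):
    return 3 * x**2 - 2 * x


x = 1.0          # initial approximation x_0
iterates = [x]   # iterates[n] holds x_n
for n in range(1, 6):
    x = x - f(x) / fprime(x)  # Newton step
    iterates.append(x)
    print(f"x_{n} = {x:.5f}")
```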

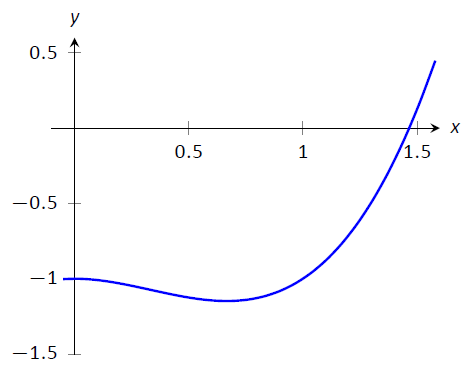

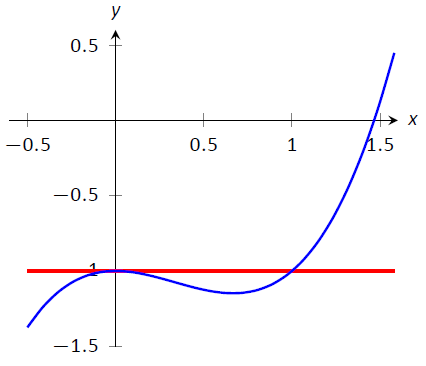

A graph of \(f(x)\) is given in Figure \(\PageIndex{2}\). We can see from the graph that our initial approximation of \(x_0=1\) was not particularly accurate; a closer guess would have been \(x_0=1.5\). Our choice was based on ease of initial calculation, and shows that Newton's Method can be robust enough that we do not have to make a very accurate initial approximation.

Figure \(\PageIndex{2}\): A graph of \(f(x) = x^3-x^2-1\) in Example \(\PageIndex{1}\).

We can automate this process on a calculator that has an Ans key that returns the result of the previous calculation. Start by pressing \(\texttt{1}\) and then \(\texttt{Enter}\). (We have just entered our initial guess, \(x_0=1\).) Now compute

\[\texttt{Ans} - \frac{f(\texttt{Ans})}{f'(\texttt{Ans})}\]

by entering the following and then repeatedly pressing the \(\texttt{Enter}\) key:

\[ \texttt{Ans}-(\texttt{Ans}^3-\texttt{Ans}^2-1)/(3*\texttt{Ans}^2-2*\texttt{Ans})\]

Each time we press the \(\texttt{Enter}\) key, we are finding the successive approximations, \(x_1\), \(x_2\), \(\dots\), and each one is getting closer to the root. In fact, once we get past around \(x_7\) or so, the approximations don't appear to be changing. They actually are changing, but the change is far enough to the right of the decimal point that it doesn't show up on the calculator's display. When this happens, we can be pretty confident that we have found an accurate approximation.

Using a calculator in this manner makes the calculations simple; many iterations can be computed very quickly.

Example \(\PageIndex{2}\): Using Newton's Method to find where functions intersect

Use Newton's Method to approximate a solution to \(\cos{x} = x\), accurate to 5 places after the decimal.

Solution

Newton's Method provides a method of solving \(f(x) = 0\); it is not (directly) a method for solving equations like \(f(x) = g(x)\). However, this is not a problem; we can rewrite the latter equation as \(f(x) - g(x)=0\) and then use Newton's Method.

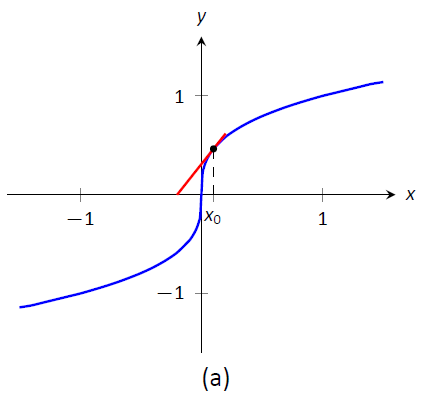

So we rewrite \(\cos x=x\) as \(\cos{x}-x=0\). Written this way, we are finding a root of \(f(x)=\cos{x}-x\). We compute \(f'(x)=-\sin{x} - 1\). Next we need a starting value, \(x_0\). Consider Figure \(\PageIndex{3}\), where \(f(x) = \cos x-x\) is graphed. It seems that \(x_0=0.75\) is pretty close to the root, so we will use that as our \(x_0\). (The figure also shows the graphs of \(y=\cos x\) and \(y=x\), drawn with dashed lines. Note how they intersect at the same \(x\) value as when \(f(x) = 0\).)

Figure \(\PageIndex{3}\): A graph of \(f(x)=\cos x-x\) used to find an initial approximation of its root.

We now compute \(x_1\), \(x_2\), etc. The formula for \(x_1\) is

\[x_1 = 0.75 - \frac{\cos(0.75)-0.75}{-\sin(0.75)-1}\approx 0.7391111388.\]

Apply Newton's Method again to find \(x_2\):

\[x_2 = 0.7391111388 - \frac{\cos(0.7391111388)-0.7391111388}{-\sin(0.7391111388)-1}\approx 0.7390851334.\]

We can continue this way, but it is really best to automate this process. On a calculator with an Ans key, we would start by pressing 0.75, then \(\texttt{Enter}\), inputting our initial approximation. We then enter:

\[\texttt{Ans - (cos(Ans)-Ans)/(-sin(Ans)-1).}\]

Repeatedly pressing the \(\texttt{Enter}\) key gives successive approximations. We quickly find:

\[ \begin{align} x_3 &= 0.7390851332\\ x_4 &= 0.7390851332. \end{align} \]

Our approximations \(x_2\) and \(x_3\) did not differ for at least the first 5 places after the decimal, so we could have stopped. However, using our calculator in the manner described is easy, so finding \(x_4\) was not hard. It is interesting to see how we found an approximation, accurate to as many decimal places as our calculator displays, in just 4 iterations.

If you know how to program, you can translate the following pseudocode into your favorite language to perform the computation in this problem.

\(\texttt{x = .75}\)

\(\texttt{while true}\)

\(\quad\texttt{oldx = x}\)

\(\quad\texttt{x = x - (cos(x)-x)/(-sin(x)-1)}\)

\(\quad\texttt{print x}\)

\(\quad\texttt{if abs(x-oldx) < .0000000001}\)

\(\quad\quad\texttt{break}\)

This code calculates \(x_1\), \(x_2\), etc., storing each result in the variable \(\texttt{x}\). The previous approximation is stored in the variable \(\texttt{oldx}\). We continue looping until the difference between two successive approximations, \(\texttt{abs(x-oldx)}\), is less than some small tolerance, in this case, \(\texttt{.0000000001}\).
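As one possible translation, the pseudocode might look like this in Python. The variable names follow the pseudocode; the only additions are the import from the standard `math` module and writing the tolerance as `1e-10`.

```python
from math import cos, sin

x = 0.75  # initial approximation x_0
while True:
    oldx = x
    x = x - (cos(x) - x) / (-sin(x) - 1)  # Newton step for f(x) = cos(x) - x
    print(x)
    if abs(x - oldx) < 1e-10:  # successive approximations agree closely
        break
```

When the loop ends, `x` holds the last approximation computed.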

Convergence of Newton's Method

What should one use for the initial guess, \(x_0\)? Generally, the closer to the actual root the initial guess is, the better. However, some initial guesses should be avoided. For instance, consider Example \(\PageIndex{1}\) where we sought the root of \(f(x) = x^3-x^2-1\). Choosing \(x_0=0\) would have been a particularly poor choice. Consider Figure \(\PageIndex{4}\), where \(f(x)\) is graphed along with its tangent line at \(x=0\). Since \(f'(0)=0\), the tangent line is horizontal and does not intersect the \(x\)-axis. Graphically, we see that Newton's Method fails.

Figure \(\PageIndex{4}\): A graph of \(f(x) = x^3-x^2-1\), showing why an initial approximation of \(x_0=0\) with Newton's Method fails.

We can also see analytically that it fails. Since

\[x_1 = 0 -\frac{f(0)}{f'(0)}\]

and \(f'(0)=0\), we see that \(x_1\) is not well defined.

This problem can also occur if, for instance, it turns out that \(f'(x_5)=0\). Adjusting the initial approximation \(x_0\) by a very small amount will likely fix the problem.
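In code, this failure shows up as a division by zero. The sketch below guards against it with a hypothetical helper, `newton_step`, which returns `None` when the tangent line is horizontal; the nearby value \(0.001\) is an arbitrary illustration of a slightly adjusted guess.

```python
def f(x):
    return x**3 - x**2 - 1


def fprime(x):
    return 3 * x**2 - 2 * x


def newton_step(x):
    """Return the next Newton approximation, or None if f'(x) = 0."""
    slope = fprime(x)
    if slope == 0:
        return None  # horizontal tangent line: the next approximation is undefined
    return x - f(x) / slope


print(newton_step(0.0))    # None, since f'(0) = 0
print(newton_step(0.001))  # defined, because f'(0.001) is not zero
```

A defined step is not necessarily a good one: where the slope is nearly zero, the tangent line meets the \(x\)-axis far from the starting point.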

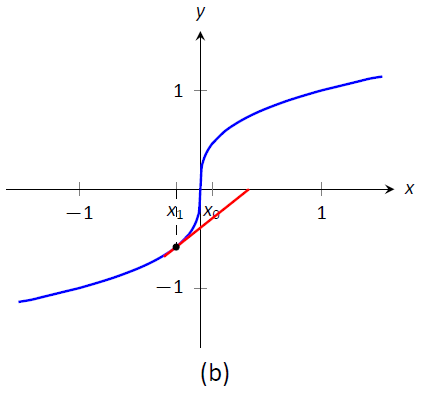

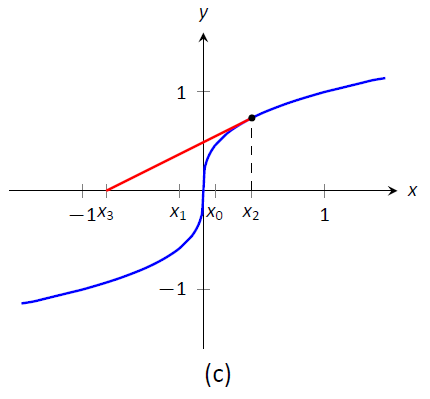

It is also possible for Newton's Method to not converge while each successive approximation is well defined. Consider \(f(x) = x^{1/3}\), as shown in Figure \(\PageIndex{5}\). It is clear that the root is \(x=0\), but let's approximate this with \(x_0=0.1\). Figure \(\PageIndex{5a}\) shows graphically the calculation of \(x_1\); notice how it is farther from the root than \(x_0\). Figures \(\PageIndex{5b}\) and \(\PageIndex{5c}\) show the calculation of \(x_2\) and \(x_3\), which are even farther away; our successive approximations are getting worse. (It turns out that in this particular example, each successive approximation is twice as far from the true answer as the previous approximation.)

Figure \(\PageIndex{5}\): Newton's Method fails to find a root of \(f(x) = x^{1/3}\), regardless of the choice of \(x_0\).

There is no "fix" to this problem; Newton's Method simply will not work and another method must be used.
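The doubling of the distance can be checked directly from the Newton's Method formula. For \(f(x)=x^{1/3}\) and \(x\neq 0\), the Power Rule gives \(f'(x)=\frac13 x^{-2/3}\), so

\[x_{n+1} = x_n - \frac{x_n^{1/3}}{\frac13 x_n^{-2/3}} = x_n - 3x_n = -2x_n.\]

Starting from \(x_0=0.1\), this gives \(x_1=-0.2\), \(x_2=0.4\) and \(x_3=-0.8\): each approximation lies on the opposite side of the root and twice as far from it, no matter which nonzero \(x_0\) is chosen.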

While Newton's Method does not always work, it does work "most of the time," and it is generally very fast. Once the approximations get close to the root, Newton's Method can as much as double the number of correct decimal places with each successive approximation. A course in Numerical Analysis will introduce the reader to more iterative root finding methods, as well as give greater detail about the strengths and weaknesses of Newton's Method.
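A rough numerical illustration of this speed, using \(f(x)=\cos x - x\) from Example \(\PageIndex{2}\), is sketched below. Two assumptions are made for the sketch: the starting value \(x_0=1\) is a hypothetical, deliberately rough guess, and the reference value `ref` is obtained by simply running many Newton steps and treating the result as the root to machine precision.

```python
from math import cos, sin


def step(x):
    """One Newton step for f(x) = cos(x) - x."""
    return x - (cos(x) - x) / (-sin(x) - 1)


# Reference value: iterate well past the point where the digits stop changing.
ref = 0.75
for _ in range(20):
    ref = step(ref)

x = 1.0      # hypothetical rough initial guess
errors = []  # errors[n] is the distance from x_n to the reference value
for n in range(5):
    errors.append(abs(x - ref))
    print(f"x_{n} = {x:.15f}   error = {errors[-1]:.1e}")
    x = step(x)
```

In the printed table, the exponent of the error should roughly double from one row to the next until it reaches the limits of floating-point precision.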

Contributors and Attributions

Gregory Hartman (Virginia Military Institute). Contributions were made by Troy Siemers and Dimplekumar Chalishajar of VMI and Brian Heinold of Mount Saint Mary's University. This content is copyrighted by a Creative Commons Attribution - Noncommercial (BY-NC) License. http://www.apexcalculus.com/

Integrated by Justin Marshall.