13.9: Constrained Optimization

In this section, we will consider some applications of optimization. Applications of optimization almost always involve some kind of constraints or boundaries.

Any time we have a closed region or constraints in an optimization problem, the process we use to solve it is called constrained optimization. In this section we will explore two ways to use what we've already learned to solve constrained optimization problems. In the next section we will learn a different approach, the Lagrange multiplier method, which can be used to solve many of the same (and some additional) constrained optimization problems.

We will first look at a way to rewrite a constrained optimization problem in terms of a function of two variables, allowing us to find its critical points and determine optimal values of the function using the second partials test. We will then consider another approach to determining absolute extrema of a function of two variables on a closed region, where we can write each boundary as a function of x.

Applications of Optimization - Approach 1: Using the Second Partials Test

Example 13.9.1: A Box Problem

A closed cardboard box is constructed from different materials. If the material for the base of the box costs $2 per square foot, and the side/top material costs $1 per square foot, find the dimensions of the largest box that can be created for $36.

Solution

Consider the box shown in the diagram.

Our first task will be to come up with the objective function (what we are trying to optimize). Here this is the volume of the box (note that we were asked for the "largest box"). Using the variables from the diagram, we have:

Objective function: \(V = LWH\)

Now we need to write the constraint using the same variables. The constraint here is the cost of the materials for the box. The material for the bottom of the box costs $2 per square foot, twice the $1 per square foot of the material for the sides and top, so the constraint must account for these different rates. To write it, we need an expression for the area of the bottom of the box and another for the combined area of the sides and top of the box.

Area of the bottom of the box: \(LW\)

Area of the sides and top of the box: \(2LH + 2WH + LW\)

Now we can put the costs of the respective material together with these areas to write the cost constraint:

\[ \text{Cost constraint: } 2LW + (2LH + 2WH + LW) = 36 \quad\Longrightarrow\quad 3LW + 2LH + 2WH = 36 \]

Note that there are also some additional implied constraints in this problem. That is, we know that L≥0,W≥0, and H≥0.

This constraint can be used to reduce the number of variables in the objective function, V=LWH, from three to two. We can choose to solve the constraint for any convenient variable, so let's solve it for H. Then,

\[ 3LW + 2LH + 2WH = 36 \;\Longrightarrow\; 2H(L+W) = 36 - 3LW \;\Longrightarrow\; H = \frac{36 - 3LW}{2(L+W)} \]

Substituting this result into the objective function (replacing H), we obtain:

\[ V(L,W) = LW\left(\frac{36 - 3LW}{2(L+W)}\right) = \frac{36LW - 3L^2W^2}{2(L+W)} \]

Now that we have the volume expressed as a function of just two variables, we can find its critical points (feasible for this situation) and then use the second partials test to confirm that we obtain a relative maximum volume at one of these points.

Finding Critical Points:

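First we find the partial derivatives of \(V\):

\[
\begin{aligned}
V_L(L,W) &= \frac{2(L+W)(36W - 6LW^2) - 2(36LW - 3L^2W^2)}{4(L+W)^2} && \text{by the Quotient Rule}\\
&= \frac{(L+W)(36W - 6LW^2) - (36LW - 3L^2W^2)}{2(L+W)^2} && \text{canceling a common factor of 2}\\
&= \frac{36LW - 6L^2W^2 + 36W^2 - 6LW^3 - 36LW + 3L^2W^2}{2(L+W)^2} && \text{simplifying the numerator}\\
&= \frac{36W^2 - 6LW^3 - 3L^2W^2}{2(L+W)^2} && \text{collecting like terms}\\
&= \frac{W^2(36 - 6LW - 3L^2)}{2(L+W)^2} && \text{factoring out } W^2
\end{aligned}
\]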

Similarly, we find that

\[ V_W(L,W) = \frac{L^2(36 - 6LW - 3W^2)}{2(L+W)^2} \]
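One way to double-check partial derivatives like these is to compare the closed forms with central finite differences of \(V\). The Python sketch below does that at a few sample points with \(L, W > 0\); the helper names (`volume`, `V_L`, `V_W`, `central_diff`) and the step size `h` are our own illustrative choices, not part of the text.

```python
def volume(L, W):
    """V(L, W) = (36LW - 3L^2 W^2) / (2(L + W)), with H eliminated via the cost constraint."""
    return (36 * L * W - 3 * L**2 * W**2) / (2 * (L + W))


def V_L(L, W):
    """Closed-form partial derivative of V with respect to L."""
    return W**2 * (36 - 6 * L * W - 3 * L**2) / (2 * (L + W) ** 2)


def V_W(L, W):
    """Closed-form partial derivative of V with respect to W."""
    return L**2 * (36 - 6 * L * W - 3 * W**2) / (2 * (L + W) ** 2)


def central_diff(f, L, W, wrt, h=1e-6):
    """Central-difference approximation of a first partial derivative of f."""
    if wrt == "L":
        return (f(L + h, W) - f(L - h, W)) / (2 * h)
    return (f(L, W + h) - f(L, W - h)) / (2 * h)


for L, W in [(1.0, 2.0), (2.0, 2.0), (3.0, 0.5)]:
    print(f"({L}, {W}): V_L = {V_L(L, W):.6f} vs {central_diff(volume, L, W, 'L'):.6f}, "
          f"V_W = {V_W(L, W):.6f} vs {central_diff(volume, L, W, 'W'):.6f}")
```

At \((2,2)\) both closed forms evaluate to exactly 0, consistent with the critical point obtained in this example.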

Setting both partial derivatives equal to zero, we note that the denominators cannot make either partial equal to zero, so only the numerators matter. We can then write the nonlinear system:

\[
\begin{aligned}
W^2(36 - 6LW - 3L^2) &= 0\\
L^2(36 - 6LW - 3W^2) &= 0
\end{aligned}
\]

Note that if the variables \(L\) and \(W\) were not known to be non-negative in this application, we would also have to consider the points where the denominator of these partials is 0, i.e., where \(L = -W\). Along that entire line, \(V\) and its partials are undefined. Since such dimensions are not possible here, we can ignore this line and go on.

To solve the system above, we first note that both equations are factored and set equal to zero. Using the zero-product property, we know the first equation tells us that either

\[ W = 0 \quad\text{or}\quad 36 - 6LW - 3L^2 = 0 \]

The second equation tells us that

\[ L = 0 \quad\text{or}\quad 36 - 6LW - 3W^2 = 0 \]

Taking the various combinations here, we find

\(W = 0\) and \(L = 0\) \(\rightarrow\) critical point \((0,0)\). However, this point also makes the denominator of the partials zero, making it a critical point of the second kind. With these dimensions the cost constraint could not be satisfied either: we would end up with a cost of $0, not $36.

\(W = 0\) and \(36 - 6LW - 3W^2 = 0\) is impossible, since substituting \(W = 0\) into the second equation gives the contradiction \(36 = 0\).

\(36 - 6LW - 3L^2 = 0\) and \(L = 0\) is also impossible, since substituting \(L = 0\) into the first equation again gives the contradiction \(36 = 0\).

Finally, considering the combination of the last two equations we have the system:

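\[
\begin{aligned}
36 - 6LW - 3W^2 &= 0\\
36 - 6LW - 3L^2 &= 0
\end{aligned}
\]

Multiplying the bottom equation by \(-1\), we have

\[
\begin{aligned}
36 - 6LW - 3W^2 &= 0\\
-36 + 6LW + 3L^2 &= 0
\end{aligned}
\]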

Adding the two equations eliminates the first two terms on the left side of each equation, yielding

\[ 3L^2 - 3W^2 = 0 \;\Longrightarrow\; 3(L - W)(L + W) = 0 \]

So we have that

\[ L = W \quad\text{or}\quad L = -W \]

Since we know that none of the dimensions can be negative we can reject L=−W. This leaves us with the result that L=W.

Substituting this into the first equation of our system above (we could have used either one), gives us

\[ 36 - 6W^2 - 3W^2 = 0 \;\Longrightarrow\; 36 - 9W^2 = 0 \;\Longrightarrow\; 9(4 - W^2) = 0 \;\Longrightarrow\; 9(2 - W)(2 + W) = 0 \]

From this equation we see that either W=2 or W=−2. Since the width cannot be negative, we reject W=−2 and conclude that W=2 ft.

Then, since L=W, we know that L=2 ft also.

Using the formula we found for H above, we find that the corresponding height is

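\[ H = \frac{36 - 3(2)(2)}{2(2+2)} = \frac{24}{8} = 3 \text{ ft} \]

This gives a volume of \(V = 2 \cdot 2 \cdot 3 = 12 \text{ ft}^3\).

Note that, in terms of the variables of \(V(L,W)\), the critical point we just found is \((2,2)\). This is the only feasible critical point of the function \(V\).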

Now we just have to use the second partials test to prove that we obtain a relative maximum here.

Second Partials Test:

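First we need to determine the second partials of \(V(L,W)\), i.e., \(V_{LL}\), \(V_{WW}\), and \(V_{LW}\).

\[
\begin{aligned}
V_{LL}(L,W) &= \frac{2(L+W)^2(-6W^3 - 6LW^2) - 4(L+W)(36W^2 - 6LW^3 - 3L^2W^2)}{4(L+W)^4}\\
&= \frac{4(L+W)\left[(L+W)(-3W^3 - 3LW^2) - 36W^2 + 6LW^3 + 3L^2W^2\right]}{4(L+W)^4}\\
&= \frac{-3LW^3 - 3L^2W^2 - 3W^4 - 3LW^3 - 36W^2 + 6LW^3 + 3L^2W^2}{(L+W)^3}\\
&= \frac{-3W^4 - 36W^2}{(L+W)^3}
\end{aligned}
\]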

Similarly, we find

\[ V_{WW}(L,W) = \frac{-3L^4 - 36L^2}{(L+W)^3} \]

And the mixed partial

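\[
\begin{aligned}
V_{LW}(L,W) &= \frac{2(L+W)^2(72W - 18LW^2 - 6L^2W) - 4(L+W)(36W^2 - 6LW^3 - 3L^2W^2)}{4(L+W)^4}\\
&= \frac{4(L+W)\left[(L+W)(36W - 9LW^2 - 3L^2W) - 36W^2 + 6LW^3 + 3L^2W^2\right]}{4(L+W)^4}\\
&= \frac{36LW + 36W^2 - 9L^2W^2 - 9LW^3 - 3L^3W - 3L^2W^2 - 36W^2 + 6LW^3 + 3L^2W^2}{(L+W)^3}\\
&= \frac{36LW - 3LW^3 - 9L^2W^2 - 3L^3W}{(L+W)^3}
\end{aligned}
\]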

Now we can calculate the discriminant at the critical point:

\[ D(2,2) = V_{LL}(2,2)\,V_{WW}(2,2) - \left[V_{LW}(2,2)\right]^2 = (-3)(-3) - \left(-\tfrac{3}{2}\right)^2 = 9 - \tfrac{9}{4} = \tfrac{27}{4} > 0 \]

Since \(D > 0\) and \(V_{LL}(2,2) = -3 < 0\), we know that \(V(L,W)\) is concave down at this critical point and thus has a relative maximum of 12 there.
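The arithmetic in the second partials test can be checked exactly with Python's `fractions` module. The sketch below (helper names are ours) evaluates the three closed-form second partials at \((2,2)\) and forms the discriminant; as an extra, informal cross-check it also compares \(V_{LL}\) and \(V_{LW}\) with second-order finite differences of \(V\), using a step size `h` we picked.

```python
from fractions import Fraction


def V_LL(L, W):
    return (-3 * W**4 - 36 * W**2) / (L + W) ** 3


def V_WW(L, W):
    return (-3 * L**4 - 36 * L**2) / (L + W) ** 3


def V_LW(L, W):
    return (36 * L * W - 3 * L * W**3 - 9 * L**2 * W**2 - 3 * L**3 * W) / (L + W) ** 3


# Exact evaluation at the critical point (2, 2).
L = W = Fraction(2)
D = V_LL(L, W) * V_WW(L, W) - V_LW(L, W) ** 2
print(V_LL(L, W), V_WW(L, W), V_LW(L, W), D)   # -3 -3 -3/2 27/4


def volume(L, W):
    return (36 * L * W - 3 * L**2 * W**2) / (2 * (L + W))


# Floating-point second differences of V at (2, 2).
h = 1e-4
fd_LL = (volume(2 + h, 2) - 2 * volume(2, 2) + volume(2 - h, 2)) / h**2
fd_LW = (volume(2 + h, 2 + h) - volume(2 + h, 2 - h)
         - volume(2 - h, 2 + h) + volume(2 - h, 2 - h)) / (4 * h**2)
print(fd_LL, fd_LW)   # close to -3 and -1.5
```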

Therefore, we know that the box of maximum volume that costs $36 to make will have a volume of \(V = 2\text{ ft} \cdot 2\text{ ft} \cdot 3\text{ ft} = 12\text{ ft}^3\).
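The second partials test only certifies a relative maximum, so a brute-force scan of feasible dimensions makes a reasonable informal sanity check. The sketch below is an illustration, not part of the method: it steps \(L\) and \(W\) through \(0.01, 0.02, \ldots, 6.00\) ft (a search window we chose), computes \(H\) from the cost constraint, skips infeasible points with \(H \le 0\), and keeps the largest volume it sees.

```python
def height(L, W):
    """Height forced by the cost constraint 3LW + 2LH + 2WH = 36."""
    return (36 - 3 * L * W) / (2 * (L + W))


def cost(L, W, H):
    """Base at $2/ft^2 plus four sides and top at $1/ft^2."""
    return 2 * L * W + (2 * L * H + 2 * W * H + L * W)


best = (0.0, None, None)            # (volume, L, W)
for i in range(1, 601):             # L = 0.01, 0.02, ..., 6.00 ft
    for j in range(1, 601):         # W = 0.01, 0.02, ..., 6.00 ft
        L, W = i / 100, j / 100
        H = height(L, W)
        if H <= 0:                  # infeasible: base and top alone use up the budget
            continue
        V = L * W * H
        if V > best[0]:
            best = (V, L, W)

V_max, L_best, W_best = best
print(V_max, L_best, W_best, height(L_best, W_best))   # 12.0 2.0 2.0 3.0
print(cost(L_best, W_best, height(L_best, W_best)))    # 36.0
```

On this grid the best point is \(L = W = 2\) ft with \(H = 3\) ft, and the cost function confirms that the full budget is used.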

Finding Absolute Extrema on a Closed, Bounded Region

When optimizing functions of one variable such as y=f(x), we used the Extreme Value Theorem. It said that over a closed interval I, a continuous function has both an absolute maximum value and an absolute minimum value. To find these maximum and minimum values, we evaluated f at all critical points in the interval, as well as at the endpoints (the "boundaries") of the interval.

A similar theorem and procedure applies to functions of two variables. When working with a function of two variables, the closed interval is replaced by a closed, bounded region. A region is bounded if all the points in that region can be contained within a disk (or ball) of finite radius. Just as a continuous function of one variable was guaranteed to have an absolute maximum and an absolute minimum on a closed interval, a continuous function of two variables will attain an absolute maximum and absolute minimum value over a closed region (see the following theorem).

We can find these values by evaluating the function at its critical points in the region and at the critical values and endpoints of traces formed on the boundaries of the region. First, we need to find the critical points of the function that lie inside the region and calculate the corresponding function values. Then, it is necessary to find the maximum and minimum values of the function on the boundaries of the region. When we have all these values, the largest function value corresponds to the absolute (global) maximum and the smallest function value corresponds to the absolute (global) minimum.

First, however, we need to be assured that such values exist. The following theorem does this.

Theorem 13.9.1: Extreme Value Theorem

Let z=f(x,y) be a continuous function on a closed, bounded region S. Then f has an absolute maximum value at some point in S and an absolute minimum value at some point in S.

The proof of this theorem is a direct consequence of the extreme value theorem and Fermat’s theorem. In particular, if either extremum is not located on the boundary of S, then it is located at an interior point of S. But an interior point \((x_0, y_0)\) of S that’s an absolute extremum is also a local extremum; hence, \((x_0, y_0)\) is a critical point of f by Fermat’s theorem. Therefore the only possible values for the global extrema of f on S are the values of f at critical points in the interior of S and the extreme values of f on the boundary of S.

Now that we know any continuous function f defined on a closed, bounded region will have global extreme values on that region, let's summarize how to find them.

Finding Extreme Values of a Function of Two Variables

Assume z=f(x,y) is a differentiable function of two variables defined on a closed, bounded region S. Then f will attain the absolute maximum value and the absolute minimum value, which are, respectively, the largest and smallest values found among the following:

- The values of f at the critical points of f in S.

- The values of f on the boundary of S.

Now let's see how this works in an example.

Example 13.9.2: Finding Extrema on a Closed, Bounded Region

Let f(x,y)=x2−y2+5 and let the region S be the triangle with vertices (−1,−2), (0,1) and (2,−2), including its interior. Find the absolute maximum and absolute minimum values of f on S.

SOLUTION

It can help to see a graph of f along with the region S. In Figure 13.9.1(a) the triangle defining S is shown in the xy-plane (using dashed red lines). Above it is the surface of f. Here we are only concerned with the portion of f enclosed by the "triangle" on its surface. Note that the triangular region forming our constrained region S in the xy-plane has been projected up onto the surface above it.

Figure 13.9.1: Plotting the surface of f along with the restricted domain S.

We begin by finding the critical points of \(f\). With \(f_x(x,y) = 2x\) and \(f_y(x,y) = -2y\), we find only one critical point, at \((0,0)\).

We now find the maximum and minimum values that f attains along the boundary of S, that is, along the edges of the triangle. In Figure 13.9.1(b) we see the triangle sketched in the plane with the equations of the lines forming its edges labeled.

Start with the bottom edge, along the line \(y = -2\). If \(y = -2\), then on the surface we are considering the points \(f(x,-2)\); that is, our function reduces to \(f(x,-2) = x^2 - (-2)^2 + 5 = x^2 + 1 = f_1(x)\). We want to maximize and minimize \(f_1(x) = x^2 + 1\) on the interval \([-1,2]\). To do so, we evaluate \(f_1\) at its critical points and at the endpoints.

The critical points of \(f_1\) are found by setting its derivative equal to 0:

\[ f_1'(x) = 2x = 0 \;\Rightarrow\; x = 0. \]

Evaluating \(f_1\) at this critical point and at the endpoints of \([-1,2]\) gives:

\[
\begin{aligned}
f_1(-1) &= 2 &&\Rightarrow\; f(-1,-2) = 2\\
f_1(0) &= 1 &&\Rightarrow\; f(0,-2) = 1\\
f_1(2) &= 5 &&\Rightarrow\; f(2,-2) = 5.
\end{aligned}
\]

Notice how evaluating \(f_1\) at a point is the same as evaluating \(f\) at its corresponding point.

We need to do this process twice more, for the other two edges of the triangle.

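Along the left edge, on the line \(y = 3x + 1\), we substitute \(3x + 1\) for \(y\) in \(f(x,y)\):

\[ f(x,3x+1) = x^2 - (3x+1)^2 + 5 = -8x^2 - 6x + 4 = f_2(x). \]

We want the maximum and minimum values of \(f_2\) on the interval \([-1,0]\), so we evaluate \(f_2\) at its critical points and the endpoints of the interval. We find the critical points:

\[ f_2'(x) = -16x - 6 = 0 \;\Rightarrow\; x = -\tfrac{3}{8}. \]

Evaluate \(f_2\) at its critical point and the endpoints of \([-1,0]\):

\[
\begin{aligned}
f_2(-1) &= 2 &&\Rightarrow\; f(-1,-2) = 2\\
f_2\left(-\tfrac{3}{8}\right) &= \tfrac{41}{8} = 5.125 &&\Rightarrow\; f(-0.375,-0.125) = 5.125\\
f_2(0) &= 4 &&\Rightarrow\; f(0,1) = 4.
\end{aligned}
\]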

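Finally, we evaluate \(f\) along the right edge of the triangle, where \(y = -\tfrac{3}{2}x + 1\):

\[ f\left(x,-\tfrac{3}{2}x+1\right) = x^2 - \left(-\tfrac{3}{2}x+1\right)^2 + 5 = -\tfrac{5}{4}x^2 + 3x + 4 = f_3(x). \]

The critical points of \(f_3\) are:

\[ f_3'(x) = -\tfrac{5}{2}x + 3 = 0 \;\Rightarrow\; x = \tfrac{6}{5} = 1.2. \]

We evaluate \(f_3\) at this critical point and at the endpoints of the interval \([0,2]\):

\[
\begin{aligned}
f_3(0) &= 4 &&\Rightarrow\; f(0,1) = 4\\
f_3(1.2) &= 5.8 &&\Rightarrow\; f(1.2,-0.8) = 5.8\\
f_3(2) &= 5 &&\Rightarrow\; f(2,-2) = 5.
\end{aligned}
\]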

One last point to test: the critical point of f, the point (0,0). We find f(0,0)=5.

Figure 13.9.2: The surface of f along with important points along the boundary of S and the interior.

We have evaluated f at a total of 7 different places, all shown in Figure 13.9.2. We checked each vertex of the triangle twice, as each showed up as the endpoint of an interval twice. Of all the z-values found, the maximum is 5.8, found at (1.2,−0.8); the minimum is 1, found at (0,−2).
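As a rough numerical cross-check (not a replacement for the analysis above), one can sample \(f\) on a fine grid of points covering the triangle, written in barycentric form \(A + s(B - A) + t(C - A)\) with \(s, t \ge 0\) and \(s + t \le 1\). The resolution \(N = 300\) is our choice; it happens to place both \((1.2, -0.8)\) and \((0, -2)\) on the grid.

```python
def f(x, y):
    return x**2 - y**2 + 5


A, B, C = (-1.0, -2.0), (0.0, 1.0), (2.0, -2.0)   # vertices of the triangle S
N = 300                                            # grid resolution

samples = []
for i in range(N + 1):
    for j in range(N + 1 - i):                     # s = i/N, t = j/N with s + t <= 1
        s, t = i / N, j / N
        x = A[0] + s * (B[0] - A[0]) + t * (C[0] - A[0])
        y = A[1] + s * (B[1] - A[1]) + t * (C[1] - A[1])
        samples.append((f(x, y), x, y))

print(max(samples))   # approximately (5.8, 1.2, -0.8)
print(min(samples))   # approximately (1.0, 0.0, -2.0)
```

Sampling can only suggest where the extremes are; it is the boundary analysis that guarantees them.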

Notice that in this example we did not need to use the Second Partials Test to analyze the function's behavior at any critical points within the closed, bounded region. All we needed to do was evaluate the function at these critical points and then to find and evaluate the function at any critical points on the boundaries of this region. The largest output gives us the absolute maximum value of the function on the region, and the smallest output gives us the absolute minimum value of the function on the region.

Let's summarize the process we'll use for these problems.

Problem-Solving Strategy: Finding Absolute Maximum and Minimum Values on a Closed, Bounded Region

Let z=f(x,y) be a continuous function of two variables defined on a closed, bounded region D, and assume f is differentiable on D. To find the absolute maximum and minimum values of f on D, do the following:

1. Determine the critical points of f in D.

2. Calculate f at each of these critical points.

3. Determine the maximum and minimum values of f on the boundary of D.

4. The absolute maximum and minimum values of f on D are the largest and smallest of the values obtained in steps 2 and 3.
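These steps can be automated in a special case: a polygonal region and a function \(f\) that is a polynomial of degree at most 2 (as in Examples 13.9.2, 13.9.4(a), and 13.9.5). The sketch below makes those assumptions explicit. The caller supplies the interior critical points from step 1, and each edge restriction \(g(t) = f(P + t(Q - P))\), \(0 \le t \le 1\), is recovered exactly as a quadratic from three samples. The function name `extrema_on_polygon` is hypothetical, not a library routine.

```python
def extrema_on_polygon(f, interior_critical_points, vertices):
    """Return ((max value, point), (min value, point)) for f on a closed polygon.

    Assumptions: vertices are listed in order around the polygon, the interior
    critical points (step 1) lie in the region, and f has degree <= 2, so f on
    each edge is a quadratic in t that three samples determine exactly.
    """
    candidates = list(interior_critical_points)
    for k, (px, py) in enumerate(vertices):
        qx, qy = vertices[(k + 1) % len(vertices)]
        candidates.append((px, py))                      # each vertex is an edge endpoint

        def g(t):                                        # f along the edge P -> Q
            return f(px + t * (qx - px), py + t * (qy - py))

        g0, gh, g1 = g(0.0), g(0.5), g(1.0)
        a = 2 * (g0 + g1) - 4 * gh                       # g(t) = a t^2 + b t + g0
        b = g1 - g0 - a
        if abs(a) > 1e-12:
            t_star = -b / (2 * a)                        # critical point of g
            if 0 < t_star < 1:
                candidates.append((px + t_star * (qx - px), py + t_star * (qy - py)))
    scored = [(f(x, y), (x, y)) for x, y in candidates]  # steps 2 and 3
    return max(scored), min(scored)                      # step 4


def f(x, y):
    return x**2 - y**2 + 5


best, worst = extrema_on_polygon(f, [(0.0, 0.0)], [(-1, -2), (0, 1), (2, -2)])
print(best, worst)
```

Run on Example 13.9.2, it should report a maximum of about 5.8 near \((1.2, -0.8)\) and a minimum of about 1 near \((0, -2)\), up to floating-point rounding. For a non-quadratic \(f\), three samples no longer determine the edge function, and the edge critical points would have to be found another way.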

This portion of the text is entitled "Constrained Optimization" because we want to optimize a function (i.e., find its maximum and/or minimum values) subject to a constraint, that is, a limit on which input points are considered. In the previous example, we constrained ourselves by considering a function only within the boundary of a triangle. This was largely arbitrary; the function and the boundary were chosen just as an example, with no real "meaning" behind the function or the chosen constraint.

However, solving constrained optimization problems is a very important topic in applied mathematics. The techniques developed here are the basis for solving larger problems, where more than two variables are involved.

We illustrate the technique again with a classic problem.

Example 13.9.3: Constrained Optimization of a Package

The U.S. Postal Service states that the girth plus the length of a Standard Post Package must not exceed 130 inches. Given a rectangular box, the "length" is the longest side, and the "girth" is twice the sum of the width and the height.

Given a rectangular box where the width and height are equal, what are the dimensions of the box that give the maximum volume subject to the constraint of the size of a Standard Post Package?

SOLUTION

Let w, h and ℓ denote the width, height and length of a rectangular box; we assume here that w=h. The girth is then 2(w+h)=4w. The volume of the box is V(w,ℓ)=whℓ=w2ℓ. We wish to maximize this volume subject to the constraint 4w+ℓ≤130, or ℓ≤130−4w. (Common sense also indicates that ℓ>0,w>0.)

We begin by finding the critical points of \(V\). We find that \(V_w(w,\ell) = 2w\ell\) and \(V_\ell(w,\ell) = w^2\); these are simultaneously 0 whenever \(w = 0\). Every such point gives a volume of 0, so we can ignore these critical points.

We now consider the volume along the constraint ℓ=130−4w. Along this line, we have:

\[ V(w,\ell) = V(w, 130 - 4w) = w^2(130 - 4w) = 130w^2 - 4w^3 = V_1(w). \]

The constraint restricts w to the interval [0,32.5], as indicated in the figure. Thus we want to maximize V1 on [0,32.5].

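Finding the critical points of \(V_1\), we take the derivative and set it equal to 0:

\[ V_1'(w) = 260w - 12w^2 = 0 \;\Rightarrow\; w(260 - 12w) = 0 \;\Rightarrow\; w = 0 \text{ or } w = \tfrac{260}{12} = \tfrac{65}{3} \approx 21.67. \]

We found two critical points: \(w = 0\) and \(w = \tfrac{65}{3} \approx 21.67\). We again ignore the \(w = 0\) solution, and the other endpoint, \(w = 32.5\), gives \(\ell = 0\) and hence zero volume as well. The maximum volume, subject to the constraint, comes at \(w = h = \tfrac{65}{3} \approx 21.67\) and \(\ell = 130 - 4\left(\tfrac{65}{3}\right) = \tfrac{130}{3} \approx 43.33\) (so \(\ell\) is indeed the longest side). This gives a maximum volume of \(V\left(\tfrac{65}{3}, \tfrac{130}{3}\right) = \tfrac{549250}{27} \approx 20{,}342.6\text{ in}^3\).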

Figure 13.9.3: Graphing the volume of a box with girth 4w and length ℓ, subject to a size constraint.

The volume function V(w,ℓ) is shown in Figure 13.9.3 along with the constraint ℓ=130−4w. As done previously, the constraint is drawn dashed in the xy-plane and also projected up onto the surface of the function. The point where the volume is maximized is indicated.
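The one-variable step can be checked numerically. The sketch below compares \(V_1\) at its critical points and the endpoints of \([0, 32.5]\), then scans a grid with spacing 0.001 (our choice) as an informal check. The exact maximizer is \(w = 65/3\), where \(V_1(65/3) = (65/3)^2(130/3) = 549250/27 \approx 20{,}342.6\) cubic inches.

```python
def V1(w):
    """Volume along the boundary of the constraint: V(w, 130 - 4w) = w^2 (130 - 4w)."""
    return w**2 * (130 - 4 * w)


w_star = 260 / 12                        # positive root of V1'(w) = 260w - 12w^2
candidates = [0.0, w_star, 32.5]         # critical points and endpoints of [0, 32.5]
best_w = max(candidates, key=V1)
ell = 130 - 4 * best_w
print(best_w, ell, V1(best_w))           # about 21.667, 43.333, 20342.59

# Informal check: no point on a fine grid of [0, 32.5] does better.
grid_best = max(V1(k / 1000) for k in range(32_501))
print(grid_best <= V1(best_w))           # True
```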

Finding the maximum and minimum values of \(f\) on the boundary of \(D\) can be challenging. If the boundary is a rectangle or set of straight lines, then it is possible to parameterize the line segments and determine the maxima and minima on each of these segments, as seen in Example 13.9.2. The same approach can be used for other shapes such as circles and ellipses.

If the boundary of the region D is a more complicated curve defined by a function g(x,y)=c for some constant c, and the first-order partial derivatives of g exist, then the method of Lagrange multipliers can prove useful for determining the extrema of f on the boundary.

Before we get to the method of Lagrange Multipliers (in the next section), let's consider a few additional examples of doing constrained optimization problems in this way.

Example 13.9.4: Finding Absolute Extrema

Use the problem-solving strategy for finding absolute extrema of a function to determine the absolute extrema of each of the following functions:

- \(f(x,y) = x^2 - 2xy + 4y^2 - 4x - 2y + 24\) on the domain defined by \(0 \le x \le 4\) and \(0 \le y \le 2\)

- \(g(x,y) = x^2 + y^2 + 4x - 6y\) on the domain defined by \(x^2 + y^2 \le 16\)

Solution:

a. Using the problem-solving strategy, step 1 involves finding the critical points of \(f\) on its domain. Therefore, we first calculate \(f_x(x,y)\) and \(f_y(x,y)\), then set them each equal to zero:

\[
\begin{aligned}
f_x(x,y) &= 2x - 2y - 4\\
f_y(x,y) &= -2x + 8y - 2.
\end{aligned}
\]

Setting them equal to zero yields the system of equations

\[
\begin{aligned}
2x - 2y - 4 &= 0\\
-2x + 8y - 2 &= 0.
\end{aligned}
\]

The solution to this system is \(x = 3\) and \(y = 1\). Therefore \((3,1)\) is a critical point of \(f\). Calculating \(f(3,1)\) gives \(f(3,1) = 17\).

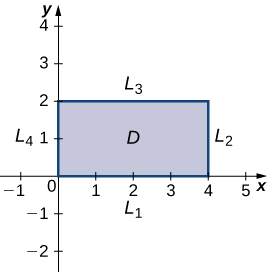

The next step involves finding the extrema of f on the boundary of its domain. The boundary of its domain consists of four line segments as shown in the following graph:

\(L_1\) is the line segment connecting \((0,0)\) and \((4,0)\), and it can be parameterized by the equations \(x(t) = t,\ y(t) = 0\) for \(0 \le t \le 4\). Define \(g(t) = f(x(t), y(t))\). This gives \(g(t) = t^2 - 4t + 24\). Differentiating \(g\) leads to \(g'(t) = 2t - 4\). Therefore, \(g\) has a critical value at \(t = 2\), which corresponds to the point \((2,0)\). Calculating \(f(2,0)\) gives the z-value 20.

\(L_2\) is the line segment connecting \((4,0)\) and \((4,2)\), and it can be parameterized by the equations \(x(t) = 4,\ y(t) = t\) for \(0 \le t \le 2\). Again, define \(g(t) = f(x(t), y(t))\). This gives \(g(t) = 4t^2 - 10t + 24\). Then, \(g'(t) = 8t - 10\), so \(g\) has a critical value at \(t = \tfrac{5}{4}\), which corresponds to the point \(\left(4, \tfrac{5}{4}\right)\). Calculating \(f\left(4, \tfrac{5}{4}\right)\) gives the z-value 17.75.

\(L_3\) is the line segment connecting \((0,2)\) and \((4,2)\), and it can be parameterized by the equations \(x(t) = t,\ y(t) = 2\) for \(0 \le t \le 4\). Again, define \(g(t) = f(x(t), y(t))\). This gives \(g(t) = t^2 - 8t + 36\). Setting \(g'(t) = 2t - 8 = 0\) gives \(t = 4\), which corresponds to the point \((4,2)\). So, calculating \(f(4,2)\) gives the z-value 20.

\(L_4\) is the line segment connecting \((0,0)\) and \((0,2)\), and it can be parameterized by the equations \(x(t) = 0,\ y(t) = t\) for \(0 \le t \le 2\). This time, \(g(t) = 4t^2 - 2t + 24\), and the critical value \(t = \tfrac{1}{4}\) corresponds to the point \(\left(0, \tfrac{1}{4}\right)\). Calculating \(f\left(0, \tfrac{1}{4}\right)\) gives the z-value 23.75.

We also need to find the values of f(x,y) at the corners of its domain. These corners are located at (0,0),(4,0),(4,2) and (0,2):

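\[
\begin{aligned}
f(0,0) &= (0)^2 - 2(0)(0) + 4(0)^2 - 4(0) - 2(0) + 24 = 24\\
f(4,0) &= (4)^2 - 2(4)(0) + 4(0)^2 - 4(4) - 2(0) + 24 = 24\\
f(4,2) &= (4)^2 - 2(4)(2) + 4(2)^2 - 4(4) - 2(2) + 24 = 20\\
f(0,2) &= (0)^2 - 2(0)(2) + 4(2)^2 - 4(0) - 2(2) + 24 = 36.
\end{aligned}
\]

Comparing these values with the value \(f(3,1) = 17\) at the interior critical point, the absolute maximum value is 36, which occurs at \((0,2)\), and the absolute minimum value is 17, which occurs at the interior critical point \((3,1)\). (The smallest value on the boundary is 17.75, at \(\left(4, \tfrac{5}{4}\right)\).) The following figure shows the graph of \(f\) over this domain.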
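The candidate list for part (a) is short enough to tabulate directly. The sketch below evaluates \(f\) exactly (using `fractions.Fraction` for the non-integer coordinates) at the interior critical point, the edge critical points, and the four corners, then reports the largest and smallest values.

```python
from fractions import Fraction as F


def f(x, y):
    return x**2 - 2 * x * y + 4 * y**2 - 4 * x - 2 * y + 24


candidates = [
    (3, 1),                                 # interior critical point
    (2, 0), (4, F(5, 4)), (0, F(1, 4)),     # critical points on L1, L2, L4
    (0, 0), (4, 0), (4, 2), (0, 2),         # corners ((4, 2) is also L3's critical point)
]
values = {p: f(*p) for p in candidates}
p_max = max(values, key=values.get)
p_min = min(values, key=values.get)
print(p_max, values[p_max])   # (0, 2) 36
print(p_min, values[p_min])   # (3, 1) 17
```

The interior value 17 is smaller than every boundary value, which is why the interior critical point must always be included among the candidates.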

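b. Using the problem-solving strategy, step 1 involves finding the critical points of \(g\) on its domain. Therefore, we first calculate \(g_x(x,y)\) and \(g_y(x,y)\), then set them each equal to zero:

\[
\begin{aligned}
g_x(x,y) &= 2x + 4\\
g_y(x,y) &= 2y - 6.
\end{aligned}
\]

Setting them equal to zero yields the system of equations

\[
\begin{aligned}
2x + 4 &= 0\\
2y - 6 &= 0.
\end{aligned}
\]

The solution to this system is \(x = -2\) and \(y = 3\). Therefore, \((-2,3)\) is a critical point of \(g\), and since \((-2)^2 + 3^2 = 13 \le 16\), it lies in the domain. Calculating \(g(-2,3)\), we get

\[ g(-2,3) = (-2)^2 + 3^2 + 4(-2) - 6(3) = 4 + 9 - 8 - 18 = -13. \]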

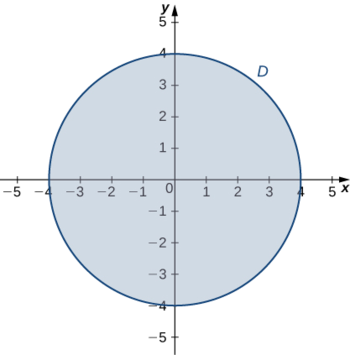

The next step involves finding the extrema of g on the boundary of its domain. The boundary of its domain consists of a circle of radius 4 centered at the origin as shown in the following graph.

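The boundary of the domain of \(g\) can be parameterized using the functions \(x(t) = 4\cos t,\ y(t) = 4\sin t\) for \(0 \le t \le 2\pi\). Define \(h(t) = g(x(t), y(t))\):

\[
\begin{aligned}
h(t) &= g(x(t), y(t)) = (4\cos t)^2 + (4\sin t)^2 + 4(4\cos t) - 6(4\sin t)\\
&= 16\cos^2 t + 16\sin^2 t + 16\cos t - 24\sin t\\
&= 16 + 16\cos t - 24\sin t.
\end{aligned}
\]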

Setting h′(t)=0 leads to

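\[
\begin{aligned}
-16\sin t - 24\cos t &= 0\\
-16\sin t &= 24\cos t\\
\frac{-16\sin t}{-16\cos t} &= \frac{24\cos t}{-16\cos t}\\
\tan t &= -\frac{3}{2}.
\end{aligned}
\]

(Dividing by \(\cos t\) is safe here: if \(\cos t = 0\), then \(\sin t = \pm 1\) and \(h'(t) = \mp 16 \ne 0\).)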

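This equation has two solutions over the interval \(0 \le t \le 2\pi\). One is \(t = \pi - \arctan\left(\tfrac{3}{2}\right)\) and the other is \(t = 2\pi - \arctan\left(\tfrac{3}{2}\right)\). For the first angle,

\[
\begin{aligned}
\sin t &= \sin\left(\pi - \arctan\left(\tfrac{3}{2}\right)\right) = \sin\left(\arctan\left(\tfrac{3}{2}\right)\right) = \tfrac{3\sqrt{13}}{13}\\
\cos t &= \cos\left(\pi - \arctan\left(\tfrac{3}{2}\right)\right) = -\cos\left(\arctan\left(\tfrac{3}{2}\right)\right) = -\tfrac{2\sqrt{13}}{13}.
\end{aligned}
\]

Therefore, \(x(t) = 4\cos t = -\tfrac{8\sqrt{13}}{13}\) and \(y(t) = 4\sin t = \tfrac{12\sqrt{13}}{13}\), so \(\left(-\tfrac{8\sqrt{13}}{13}, \tfrac{12\sqrt{13}}{13}\right)\) is a critical point on the boundary and

\[
\begin{aligned}
g\left(-\tfrac{8\sqrt{13}}{13}, \tfrac{12\sqrt{13}}{13}\right) &= \left(-\tfrac{8\sqrt{13}}{13}\right)^2 + \left(\tfrac{12\sqrt{13}}{13}\right)^2 + 4\left(-\tfrac{8\sqrt{13}}{13}\right) - 6\left(\tfrac{12\sqrt{13}}{13}\right)\\
&= \tfrac{144}{13} + \tfrac{64}{13} - \tfrac{32\sqrt{13}}{13} - \tfrac{72\sqrt{13}}{13}\\
&= \tfrac{208 - 104\sqrt{13}}{13} \approx -12.844.
\end{aligned}
\]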

For the second angle,

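\[
\begin{aligned}
\sin t &= \sin\left(2\pi - \arctan\left(\tfrac{3}{2}\right)\right) = -\sin\left(\arctan\left(\tfrac{3}{2}\right)\right) = -\tfrac{3\sqrt{13}}{13}\\
\cos t &= \cos\left(2\pi - \arctan\left(\tfrac{3}{2}\right)\right) = \cos\left(\arctan\left(\tfrac{3}{2}\right)\right) = \tfrac{2\sqrt{13}}{13}.
\end{aligned}
\]

Therefore, \(x(t) = 4\cos t = \tfrac{8\sqrt{13}}{13}\) and \(y(t) = 4\sin t = -\tfrac{12\sqrt{13}}{13}\), so \(\left(\tfrac{8\sqrt{13}}{13}, -\tfrac{12\sqrt{13}}{13}\right)\) is a critical point on the boundary and

\[
\begin{aligned}
g\left(\tfrac{8\sqrt{13}}{13}, -\tfrac{12\sqrt{13}}{13}\right) &= \left(\tfrac{8\sqrt{13}}{13}\right)^2 + \left(-\tfrac{12\sqrt{13}}{13}\right)^2 + 4\left(\tfrac{8\sqrt{13}}{13}\right) - 6\left(-\tfrac{12\sqrt{13}}{13}\right)\\
&= \tfrac{144}{13} + \tfrac{64}{13} + \tfrac{32\sqrt{13}}{13} + \tfrac{72\sqrt{13}}{13}\\
&= \tfrac{208 + 104\sqrt{13}}{13} \approx 44.844.
\end{aligned}
\]

The absolute minimum of \(g\) is \(-13\), which is attained at the point \((-2,3)\), an interior point of \(D\). The absolute maximum of \(g\) is approximately 44.844, which is attained at the boundary point \(\left(\tfrac{8\sqrt{13}}{13}, -\tfrac{12\sqrt{13}}{13}\right)\). These are the absolute extrema of \(g\) on \(D\), as shown in the following figure.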
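A numerical cross-check for part (b), assuming dense sampling of the boundary circle is accurate enough for a sanity check: the sketch evaluates \(g\) at the interior critical point and at 100,000 equally spaced boundary points (a count we chose). The exact boundary extremes found above simplify to \(\tfrac{208 \mp 104\sqrt{13}}{13} = 16 \mp 8\sqrt{13}\).

```python
import math


def g(x, y):
    return x**2 + y**2 + 4 * x - 6 * y


interior_value = g(-2, 3)          # (-2, 3) satisfies 4 + 9 <= 16, so it lies in D

# Sample the boundary circle x = 4 cos t, y = 4 sin t at N equally spaced angles.
N = 100_000
boundary = [g(4 * math.cos(2 * math.pi * k / N), 4 * math.sin(2 * math.pi * k / N))
            for k in range(N)]

print(interior_value)                             # -13
print(min(boundary), 16 - 8 * math.sqrt(13))      # both about -12.844
print(max(boundary), 16 + 8 * math.sqrt(13))      # both about 44.844
```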

Exercise 13.9.1

Use the problem-solving strategy for finding absolute extrema of a function to find the absolute extrema of the function

\[ f(x,y) = 4x^2 - 2xy + 6y^2 - 8x + 2y + 3 \]

on the domain defined by 0≤x≤2 and −1≤y≤3.

Hint: Calculate \(f_x(x,y)\) and \(f_y(x,y)\), and set them equal to zero. Then, calculate \(f\) for each critical point and find the extrema of \(f\) on the boundary of the domain.

Answer:

The absolute minimum occurs at \((1,0)\): \(f(1,0) = -1\).

The absolute maximum occurs at \((0,3)\): \(f(0,3) = 63\).

Example 13.9.5: Profitable Golf Balls

Pro-T company has developed a profit model that depends on the number x of golf balls sold per month (measured in thousands), and the number of hours per month of advertising y, according to the function

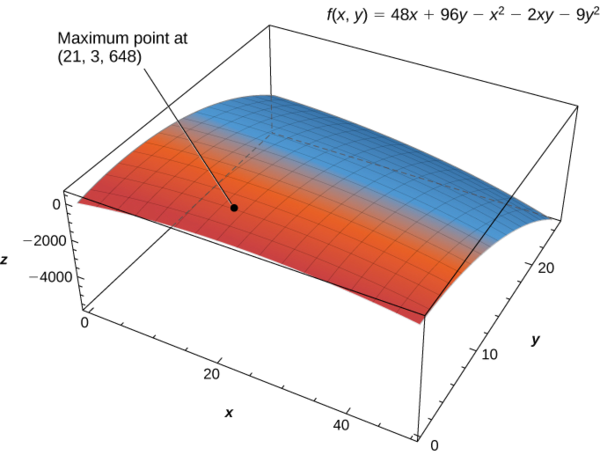

\[ z = f(x,y) = 48x + 96y - x^2 - 2xy - 9y^2, \]

where z is measured in thousands of dollars. The maximum number of golf balls that can be produced and sold is 50,000, and the maximum number of hours of advertising that can be purchased is 25. Find the values of x and y that maximize profit, and find the maximum profit.

Solution

Using the problem-solving strategy, step 1 involves finding the critical points of \(f\) on its domain. Therefore, we first calculate \(f_x(x,y)\) and \(f_y(x,y)\), then set them each equal to zero:

\[
\begin{aligned}
f_x(x,y) &= 48 - 2x - 2y\\
f_y(x,y) &= 96 - 2x - 18y.
\end{aligned}
\]

Setting them equal to zero yields the system of equations

\[
\begin{aligned}
48 - 2x - 2y &= 0\\
96 - 2x - 18y &= 0.
\end{aligned}
\]

The solution to this system is \(x = 21\) and \(y = 3\). Therefore \((21,3)\) is a critical point of \(f\). Calculating \(f(21,3)\) gives \(f(21,3) = 48(21) + 96(3) - 21^2 - 2(21)(3) - 9(3)^2 = 648\).

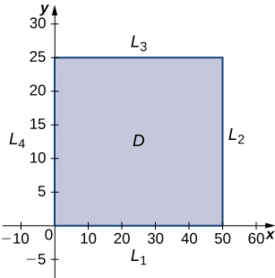

The domain of this function is 0≤x≤50 and 0≤y≤25 as shown in the following graph.

L1 is the line segment connecting (0,0) and (50,0), and it can be parameterized by the equations x(t)=t,y(t)=0 for 0≤t≤50. We then define g(t)=f(x(t),y(t)):

\[ g(t) = f(x(t), y(t)) = f(t,0) = 48t + 96(0) - t^2 - 2(t)(0) - 9(0)^2 = 48t - t^2. \]

Setting g′(t)=0 yields the critical point t=24, which corresponds to the point (24,0) in the domain of f. Calculating f(24,0) gives 576.

L2 is the line segment connecting \((50,0)\) and \((50,25)\), and it can be parameterized by the equations \(x(t) = 50,\ y(t) = t\) for \(0 \le t \le 25\). Once again, we define \(g(t) = f(x(t), y(t))\):

\[ g(t) = f(x(t), y(t)) = f(50,t) = 48(50) + 96t - 50^2 - 2(50)t - 9t^2 = -9t^2 - 4t - 100. \]

This function has a critical point at \(t = -\tfrac{2}{9}\), which corresponds to the point \(\left(50, -\tfrac{2}{9}\right)\). This point is not in the domain of \(f\).

L3 is the line segment connecting (0,25) and (50,25), and it can be parameterized by the equations x(t)=t,y(t)=25 for 0≤t≤50. We define g(t)=f(x(t),y(t)):

\[ g(t) = f(x(t), y(t)) = f(t,25) = 48t + 96(25) - t^2 - 2t(25) - 9(25^2) = -t^2 - 2t - 3225. \]

This function has a critical point at t=−1, which corresponds to the point (−1,25), which is not in the domain.

L4 is the line segment connecting (0,0) to (0,25), and it can be parameterized by the equations x(t)=0,y(t)=t for 0≤t≤25. We define g(t)=f(x(t),y(t)):

\[ g(t) = f(x(t), y(t)) = f(0,t) = 48(0) + 96t - (0)^2 - 2(0)t - 9t^2 = 96t - 9t^2. \]

This function has a critical point at \(t = \tfrac{16}{3}\), which corresponds to the point \(\left(0, \tfrac{16}{3}\right)\), which is on the boundary of the domain. Calculating \(f\left(0, \tfrac{16}{3}\right)\) gives 256.

We also need to find the values of f(x,y) at the corners of its domain. These corners are located at (0,0),(50,0),(50,25) and (0,25):

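\[
\begin{aligned}
f(0,0) &= 48(0) + 96(0) - (0)^2 - 2(0)(0) - 9(0)^2 = 0\\
f(50,0) &= 48(50) + 96(0) - (50)^2 - 2(50)(0) - 9(0)^2 = -100\\
f(50,25) &= 48(50) + 96(25) - (50)^2 - 2(50)(25) - 9(25)^2 = -5825\\
f(0,25) &= 48(0) + 96(25) - (0)^2 - 2(0)(25) - 9(25)^2 = -3225.
\end{aligned}
\]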

The maximum critical value is 648, which occurs at (21,3). Therefore, a maximum profit of $648,000 is realized when 21,000 golf balls are sold and 3 hours of advertising are purchased per month as shown in the following figure.
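The golf-ball candidates can be tabulated the same way. The sketch below evaluates the profit function exactly at the interior critical point, the two edge critical points that lie in the domain, and the four corners; the edge critical points \((50, -2/9)\) and \((-1, 25)\) are left out because they fall outside the domain.

```python
from fractions import Fraction as F


def profit(x, y):
    """Profit in thousands of dollars: x = thousands of balls sold, y = hours of advertising."""
    return 48 * x + 96 * y - x**2 - 2 * x * y - 9 * y**2


candidates = [
    (21, 3),                                # interior critical point
    (24, 0), (0, F(16, 3)),                 # critical points on L1 and L4 inside the domain
    (0, 0), (50, 0), (50, 25), (0, 25),     # corners
]
values = {p: profit(*p) for p in candidates}
best = max(values, key=values.get)
print(best, values[best])                   # (21, 3) 648
```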

It is hard to overemphasize the importance of optimization. In "the real world," we routinely seek to make something better. By expressing that something as a mathematical function, "making something better" means to "optimize some function."

The techniques shown here are only the beginning of a very important field. But be aware that many functions that we will want to optimize are incredibly complex, making the step to "find the gradient, set it equal to \(\vec{0}\), and find all points that make this true" highly nontrivial. Mastery of the principles on simpler problems here is key to being able to tackle these more complicated problems.

The Method of Least Squares Regression

Our world is full of data, and to interpret and extrapolate based on this data, we often try to find a function to model this data in a particular situation. You've likely heard about a line of best fit, also known as a least squares regression line. This linear model, in the form f(x)=ax+b, assumes the value of the output changes at a roughly constant rate with respect to the input, i.e., that these values are related linearly. And for many situations this linear model gives us a powerful way to make predictions about the value of the function's output for inputs other than those in the data we collected. For other situations, like most population models, a linear model is not sufficient, and we need to find a quadratic, cubic, exponential, logistic, or trigonometric model (or possibly something else).

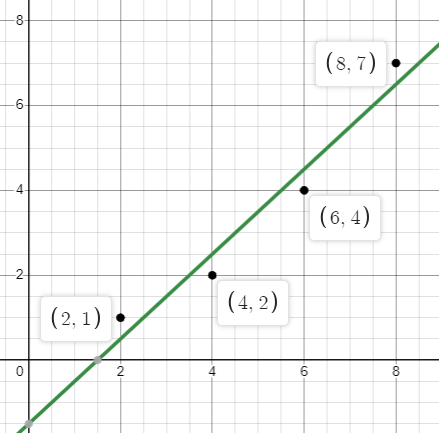

Consider the data points given in the table below. Figures 13.9.11 - 13.9.14 show various best fit regression models for this data.

| x | y |
|---|---|
| 2 | 1 |
| 4 | 2 |
| 6 | 4 |
| 8 | 7 |

Figure 13.9.11: Linear model

y=x−1.5

Figure 13.9.12: Quadratic model

\(y = 0.125x^2 - 0.25x + 1\)

Figure 13.9.13: Exponential model

\(y = 0.6061 \cdot (1.359)^x\)

Figure 13.9.14: Logistic model

\(y = \dfrac{18.31}{1 + 39.67e^{-0.4002x}}\)

Definition: Sum of the Squared Errors Function

One way to measure how well a particular model \(y = f(x)\) fits a given set of data points \(\{(x_1,y_1), (x_2,y_2), (x_3,y_3), \ldots, (x_n,y_n)\}\) is to consider the squares of the differences between the values given by the model and the actual \(y\)-values of the data points.

The differences (or errors), \(d_i = f(x_i) - y_i\), are squared and added up so that all errors are considered in the measure of how well the model "fits" the data; squaring also keeps positive and negative errors from canceling each other out. This sum of the squared errors is given by

\[ S = \sum_{i=1}^{n}\left(f(x_i) - y_i\right)^2 \]

Squaring the errors in this sum also tends to magnify the weight of larger errors and reduce the weight of small errors in the measurement of how well the model fits the data.

Note that \(S\) is a function of the constant parameters in the particular model \(y = f(x)\). For example, if we desire a linear model, then \(f(x) = ax + b\), and

\[ S(a,b) = \sum_{i=1}^{n}\left(f(x_i) - y_i\right)^2 = \sum_{i=1}^{n}\left(ax_i + b - y_i\right)^2 \]

To obtain the best fit version of the model, we will seek the values of the constant parameters in the model (a and b in the linear model) that give us the minimum value of this sum of the squared errors function S for the given set of data points.
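To make \(S\) concrete, the sketch below evaluates the sum of squared errors for the four data points \((2,1), (4,2), (6,4), (8,7)\) from the table above. The helper names `sse` and `S` are ours. The linear model \(y = x - 1.5\) gives \(S = 1\), a nearby choice of parameters gives a larger sum, and the quadratic model \(y = 0.125x^2 - 0.25x + 1\) passes through all four points, so its sum is 0.

```python
xs = [2, 4, 6, 8]
ys = [1, 2, 4, 7]


def sse(model):
    """Sum of squared errors: sum of (model(x_i) - y_i)^2 over the data points."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys))


def S(a, b):
    """S(a, b) for the linear model f(x) = a x + b."""
    return sse(lambda x: a * x + b)


print(S(1, -1.5))                                   # 1.0
print(S(1.1, -1.5))                                 # larger, about 2.2
print(sse(lambda x: 0.125 * x**2 - 0.25 * x + 1))   # 0.0
```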

When calculating a line of best fit in previous classes, you were likely either given the formulas for the coefficients or shown how to use features on your calculator or other device to find them.

Using our understanding of the optimization of functions of multiple variables in this class, we are now able to derive the formulas for these coefficients directly. In fact, we can use the same methods to determine the constant parameters needed to form a best fit model of any kind (quadratic, cubic, exponential, logistic, sine, cosine, etc.), although these can be a bit more difficult to work out than the linear case.

Theorem 13.9.2: Least Squares Regression Line

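The least squares regression line (or line of best fit) for the data points \(\{(x_1,y_1), (x_2,y_2), (x_3,y_3), \ldots, (x_n,y_n)\}\), where the \(x_i\) are not all equal, is given by the formula

\[ f(x) = ax + b \]

where

\[ a = \frac{n\sum_{i=1}^{n} x_iy_i - \sum_{i=1}^{n} x_i\sum_{i=1}^{n} y_i}{n\sum_{i=1}^{n} x_i^2 - \left(\sum_{i=1}^{n} x_i\right)^2} \quad\text{and}\quad b = \frac{1}{n}\left(\sum_{i=1}^{n} y_i - a\sum_{i=1}^{n} x_i\right). \]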

Proof (part 1): To obtain a line that best fits the data points, we need to minimize the sum of the squared errors for this model. This is a function of the two variables \(a\) and \(b\), as shown below. Note that the \(x_i\) and \(y_i\) are numbers in this function and are not the variables.

\[ S(a,b) = \sum_{i=1}^{n}\left(ax_i + b - y_i\right)^2 \]

To minimize this function of the two variables a and b, we need to determine the critical point(s) of this function. Let's start by stating the partial derivatives of S with respect to a and b and simplifying them so that the sums only include the numerical coordinates from the data points.

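\[
\begin{aligned}
S_a(a,b) &= \sum_{i=1}^{n} 2x_i(ax_i + b - y_i) = \sum_{i=1}^{n}\left(2ax_i^2 + 2bx_i - 2x_iy_i\right)\\
&= \sum_{i=1}^{n} 2ax_i^2 + \sum_{i=1}^{n} 2bx_i - \sum_{i=1}^{n} 2x_iy_i = 2a\sum_{i=1}^{n} x_i^2 + 2b\sum_{i=1}^{n} x_i - 2\sum_{i=1}^{n} x_iy_i
\end{aligned}
\]

\[
\begin{aligned}
S_b(a,b) &= \sum_{i=1}^{n} 2(ax_i + b - y_i) = \sum_{i=1}^{n}\left(2ax_i + 2b - 2y_i\right) = \sum_{i=1}^{n} 2ax_i + \sum_{i=1}^{n} 2b - \sum_{i=1}^{n} 2y_i\\
&= 2a\sum_{i=1}^{n} x_i + 2b\sum_{i=1}^{n} 1 - 2\sum_{i=1}^{n} y_i = 2a\sum_{i=1}^{n} x_i + 2bn - 2\sum_{i=1}^{n} y_i
\end{aligned}
\]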

Next we set these partial derivatives equal to 0 and solve the resulting system of equations.

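Set \(\displaystyle 2a\sum_{i=1}^{n} x_i^2 + 2b\sum_{i=1}^{n} x_i - 2\sum_{i=1}^{n} x_iy_i = 0\),

and \(\displaystyle 2a\sum_{i=1}^{n} x_i + 2bn - 2\sum_{i=1}^{n} y_i = 0\).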

Since the linear case is simple enough to make this process straightforward, we can use substitution to actually solve this system for a and b. Solving the second equation for b gives us

\[ 2bn = 2\sum_{i=1}^{n} y_i - 2a\sum_{i=1}^{n} x_i \]

\[ b = \frac{1}{n}\left(\sum_{i=1}^{n} y_i - a\sum_{i=1}^{n} x_i\right). \]

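We now substitute this expression for \(b\) into the first equation.

\[ 2a\sum_{i=1}^{n} x_i^2 + 2\left[\frac{1}{n}\left(\sum_{i=1}^{n} y_i - a\sum_{i=1}^{n} x_i\right)\right]\sum_{i=1}^{n} x_i - 2\sum_{i=1}^{n} x_iy_i = 0 \]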

Multiplying this equation through by n and dividing out the 2 yields

ann∑i=1xi2+[n∑i=1yi−an∑i=1xi]n∑i=1xi−nn∑i=1xiyi=0.

Simplifying,

ann∑i=1xi2+n∑i=1xin∑i=1yi−an∑i=1xin∑i=1xi−nn∑i=1xiyi=0.

Next we isolate the terms containing a on the left side of the equation, moving the other two terms to the right side of the equation.

\[ an\sum_{i=1}^{n} x_i^2 - a\left(\sum_{i=1}^{n} x_i\right)^2 = n\sum_{i=1}^{n} x_i y_i - \sum_{i=1}^{n} x_i \sum_{i=1}^{n} y_i. \]

Factoring out the a,

\[ a\left(n\sum_{i=1}^{n} x_i^2 - \left(\sum_{i=1}^{n} x_i\right)^2\right) = n\sum_{i=1}^{n} x_i y_i - \sum_{i=1}^{n} x_i \sum_{i=1}^{n} y_i. \]

Finally, we solve for a to obtain

\[ a = \frac{n\sum_{i=1}^{n} x_i y_i - \sum_{i=1}^{n} x_i \sum_{i=1}^{n} y_i}{n\sum_{i=1}^{n} x_i^2 - \left(\sum_{i=1}^{n} x_i\right)^2}. \]

◼QED

Thus we have found formulas for the coordinates of the single critical point of the sum of squared errors function for the linear model, i.e.,

\[ S(a,b) = \sum_{i=1}^{n}\left(a x_i + b - y_i\right)^2. \]
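As a cross-check on the algebra, we can treat the two equations \(S_a = 0\) and \(S_b = 0\) (each divided by 2) as a \(2\times 2\) linear system and solve it with Cramer's rule instead of the substitution used in the proof. This Python sketch is our own illustration; `solve_2x2` is a hypothetical helper, and the data are those of Example 13.9.7.

```python
def solve_2x2(m11, m12, m21, m22, r1, r2):
    """Solve m11*u + m12*v = r1 and m21*u + m22*v = r2 by Cramer's rule."""
    det = m11 * m22 - m12 * m21
    if det == 0:
        raise ValueError("the system has no unique solution")
    return (r1 * m22 - m12 * r2) / det, (m11 * r2 - r1 * m21) / det


xs = [1, 4, 6, 8, 9]
ys = [1, 3, 4, 7, 6]
n = len(xs)
sum_x, sum_y = sum(xs), sum(ys)
sum_x2 = sum(x * x for x in xs)
sum_xy = sum(x * y for x, y in zip(xs, ys))

# S_a = 0 and S_b = 0, each divided by 2:
#   (sum x^2) a + (sum x) b = sum xy
#   (sum x)   a +     n   b = sum y
a, b = solve_2x2(sum_x2, sum_x, sum_x, n, sum_xy, sum_y)

# Compare with the formula for b obtained by substitution above.
b_theorem = (sum_y - a * sum_x) / n
print(a, b, b_theorem)
```

Cramer's rule gives \(b\) in the different-looking form \(\left(\sum x_i^2 \sum y_i - \sum x_i \sum x_i y_i\right)/\left(n\sum x_i^2 - (\sum x_i)^2\right)\), which agrees numerically with the substitution formula.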

Proof (part 2): To prove that this linear model gives us a minimum of S, we will need the Second Partials Test.

The second partials of S are

\[ S_{aa}(a,b) = \sum_{i=1}^{n} 2x_i^2, \qquad S_{bb}(a,b) = \sum_{i=1}^{n} 2 = 2n, \qquad S_{ab}(a,b) = \sum_{i=1}^{n} 2x_i. \]

Then the discriminant evaluated at the critical point (a,b) is

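\[ \begin{aligned}
D(a,b) &= S_{aa}(a,b)\,S_{bb}(a,b) - \left[S_{ab}(a,b)\right]^2 \\
&= 2n\sum_{i=1}^{n} 2x_i^2 - \left(2\sum_{i=1}^{n} x_i\right)^2 \\
&= 4n\sum_{i=1}^{n} x_i^2 - 4\left(\sum_{i=1}^{n} x_i\right)^2 \\
&= 4\left[n\sum_{i=1}^{n} x_i^2 - \left(\sum_{i=1}^{n} x_i\right)^2\right].
\end{aligned} \]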

Proving that this discriminant is positive (which guarantees a local maximum or minimum) requires the Cauchy-Schwarz Inequality. This part of the proof is nontrivial but not too hard, provided we do not try to prove the Cauchy-Schwarz Inequality itself here.

The Cauchy-Schwarz Inequality guarantees

\[ \left(\sum_{i=1}^{n} a_i b_i\right)^2 \le \left(\sum_{i=1}^{n} a_i^2\right)\left(\sum_{i=1}^{n} b_i^2\right) \]

for any real-valued sequences \(a_i\) and \(b_i\), with equality only if the two sequences are linearly dependent (that is, if one sequence is a constant multiple of the other).

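Here we choose \(a_i = 1\) and \(b_i = x_i\). As long as the \(x_i\) are not all equal (which is required anyway, since otherwise the denominator in the formula for \(a\) is 0), these two sequences are linearly independent, so equality cannot hold. Substituting into the Cauchy-Schwarz Inequality gives us

\[ \left(\sum_{i=1}^{n} 1\cdot x_i\right)^2 < \left(\sum_{i=1}^{n} 1^2\right)\left(\sum_{i=1}^{n} x_i^2\right). \]

Since \(\sum_{i=1}^{n} 1 = n\), we have

\[ \left(\sum_{i=1}^{n} x_i\right)^2 < n\sum_{i=1}^{n} x_i^2. \]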

This result proves

\[ D(a,b) = 4\left[n\sum_{i=1}^{n} x_i^2 - \left(\sum_{i=1}^{n} x_i\right)^2\right] > 0. \]

Now, since \(S_{aa}(a,b) = \sum_{i=1}^{n} 2x_i^2 > 0\) (the \(x_i\) are not all equal, so at least one of them is nonzero), we know that \(S\) is concave up at the point \((a,b)\) and thus has a relative minimum there. This concludes the proof that the parameters \(a\) and \(b\), as shown in Theorem 13.9.2, give us the least squares linear regression model, or line of best fit,

\[ f(x) = ax + b. \]

◼QED
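The Second Partials Test in part 2 of the proof can also be sketched in Python. This is our own illustration with a hypothetical function name; since the second partials of \(S\) are constant, \(D\) and \(S_{aa}\) depend only on the \(x\)-values. The second call shows the degenerate case excluded in the proof, where all \(x_i\) are equal and \(D = 0\).

```python
def classify_critical_point(xs):
    """Second Partials Test for the critical point of S(a, b).

    The second partials of S are constant, so D and S_aa depend only on
    the x-values, not on the location of the critical point."""
    n = len(xs)
    s_aa = sum(2 * x * x for x in xs)
    s_bb = 2 * n
    s_ab = sum(2 * x for x in xs)
    d = s_aa * s_bb - s_ab ** 2  # equals 4[n*sum(x^2) - (sum x)^2]
    if d > 0:
        return d, ("relative minimum" if s_aa > 0 else "relative maximum")
    if d < 0:
        return d, "saddle point"
    return d, "test is inconclusive"


print(classify_critical_point([1, 4, 6, 8, 9]))  # (824, 'relative minimum')
print(classify_critical_point([3, 3, 3]))        # (0, 'test is inconclusive')
```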

Note

The linear case \(f(x) = ax + b\) is special in that it is simple enough for us to solve for the constant parameters \(a\) and \(b\) directly as formulas (as shown above in Theorem 13.9.2). For other best-fit models we typically just obtain the system of equations that must be solved for the constant parameters giving the critical point and minimum value of \(S\).

We leave the quadratic regression case for you to try as an exercise; here, let's consider a cubic best-fit model.

Example 13.9.6: Finding the System of Equations for the Best-fit Cubic Model

Determine the system of equations needed to find the cubic best-fit regression model of the form \(f(x) = ax^3 + bx^2 + cx + d\) for a given set of data points \( \{(x_1,y_1),(x_2,y_2),(x_3,y_3),\dots,(x_n,y_n)\} \).

Solution

Here we consider the sum of squared errors function

\[ S(a,b,c,d) = \sum_{i=1}^{n}\left(a x_i^3 + b x_i^2 + c x_i + d - y_i\right)^2. \]

To find the minimum value of this function (and the corresponding values of the parameters a,b,c, and d needed for the best fit cubic regression model), we need to find the critical point of this function. (Yes, even for a function of four variables!) To begin this process, we find the first partial derivatives of this function with respect to each of the parameters a,b,c, and d.

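\[ \begin{aligned}
S_a(a,b,c,d) &= 2\sum_{i=1}^{n} x_i^3\left(a x_i^3 + b x_i^2 + c x_i + d - y_i\right) \\
S_b(a,b,c,d) &= 2\sum_{i=1}^{n} x_i^2\left(a x_i^3 + b x_i^2 + c x_i + d - y_i\right) \\
S_c(a,b,c,d) &= 2\sum_{i=1}^{n} x_i\left(a x_i^3 + b x_i^2 + c x_i + d - y_i\right) \\
S_d(a,b,c,d) &= 2\sum_{i=1}^{n} \left(a x_i^3 + b x_i^2 + c x_i + d - y_i\right)
\end{aligned} \]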

Now we set these partials equal to 0, divide out the 2 from each, and split the terms into separate sums. We also factor out the a,b,c, and d which don't change as the index changes.

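\[ \begin{aligned}
a\sum_{i=1}^{n} x_i^6 + b\sum_{i=1}^{n} x_i^5 + c\sum_{i=1}^{n} x_i^4 + d\sum_{i=1}^{n} x_i^3 - \sum_{i=1}^{n} x_i^3 y_i &= 0 \\
a\sum_{i=1}^{n} x_i^5 + b\sum_{i=1}^{n} x_i^4 + c\sum_{i=1}^{n} x_i^3 + d\sum_{i=1}^{n} x_i^2 - \sum_{i=1}^{n} x_i^2 y_i &= 0 \\
a\sum_{i=1}^{n} x_i^4 + b\sum_{i=1}^{n} x_i^3 + c\sum_{i=1}^{n} x_i^2 + d\sum_{i=1}^{n} x_i - \sum_{i=1}^{n} x_i y_i &= 0 \\
a\sum_{i=1}^{n} x_i^3 + b\sum_{i=1}^{n} x_i^2 + c\sum_{i=1}^{n} x_i + d\sum_{i=1}^{n} 1 - \sum_{i=1}^{n} y_i &= 0
\end{aligned} \]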

This system of equations can be rewritten by moving the negative terms to the right side of each equation. We'll also emphasize the coefficients and variables and replace \(\sum_{i=1}^{n} 1\) with \(n\).

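\[ \begin{aligned}
\left(\sum_{i=1}^{n} x_i^6\right)a + \left(\sum_{i=1}^{n} x_i^5\right)b + \left(\sum_{i=1}^{n} x_i^4\right)c + \left(\sum_{i=1}^{n} x_i^3\right)d &= \sum_{i=1}^{n} x_i^3 y_i \\
\left(\sum_{i=1}^{n} x_i^5\right)a + \left(\sum_{i=1}^{n} x_i^4\right)b + \left(\sum_{i=1}^{n} x_i^3\right)c + \left(\sum_{i=1}^{n} x_i^2\right)d &= \sum_{i=1}^{n} x_i^2 y_i \\
\left(\sum_{i=1}^{n} x_i^4\right)a + \left(\sum_{i=1}^{n} x_i^3\right)b + \left(\sum_{i=1}^{n} x_i^2\right)c + \left(\sum_{i=1}^{n} x_i\right)d &= \sum_{i=1}^{n} x_i y_i \\
\left(\sum_{i=1}^{n} x_i^3\right)a + \left(\sum_{i=1}^{n} x_i^2\right)b + \left(\sum_{i=1}^{n} x_i\right)c + nd &= \sum_{i=1}^{n} y_i
\end{aligned} \]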

This is a system of four linear equations with the four variables a,b,c, and d. To solve for the values of these parameters that would give us the best fit cubic regression model, we would need to solve this system using the given data points. We would first evaluate each of the sums using the x- and y-coordinates of the data points and place these numerical coefficients into the equations. We would then only need to solve the resulting system of linear equations.
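The pattern in this system extends to any polynomial degree: the entry in row \(j\), column \(k\) (counting from 0) is \(\sum x_i^{2m-j-k}\), and the right side of row \(j\) is \(\sum x_i^{m-j} y_i\), where \(m\) is the degree. The Python sketch below builds the system that way; the name `normal_equations` and the general-degree framing are our own assumptions, not the text's. For \(m = 3\) it reproduces the cubic system above, and for \(m = 1\) the linear system from the proof of Theorem 13.9.2 (divided by 2).

```python
def normal_equations(xs, ys, degree):
    """Coefficient matrix and right-hand side of the least squares system for
    a polynomial of the given degree, with unknowns ordered from the highest
    power down (a, b, c, d for a cubic, as in Example 13.9.6)."""
    # power_sums[k] is the sum of x_i**k; power_sums[0] equals n.
    power_sums = [sum(x ** k for x in xs) for k in range(2 * degree + 1)]
    size = degree + 1
    matrix = [[power_sums[2 * degree - row - col] for col in range(size)]
              for row in range(size)]
    rhs = [sum(x ** (degree - row) * y for x, y in zip(xs, ys))
           for row in range(size)]
    return matrix, rhs


# The data points that appear in Example 13.9.7.
matrix, rhs = normal_equations([1, 4, 6, 8, 9], [1, 3, 4, 7, 6], 3)
for row, value in zip(matrix, rhs):
    print(row, "=", value)
```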

Let's now use this process to find the best fit cubic regression model for a set of data points.

Example 13.9.7: Finding a Best-fit Cubic Model

a. Find the best fit cubic regression model of the form \(f(x) = ax^3 + bx^2 + cx + d\) for the set of data points below.

\[ \begin{array}{c|c}
x & y \\ \hline
1 & 1 \\
4 & 3 \\
6 & 4 \\
8 & 7 \\
9 & 6
\end{array} \]

b. Determine the actual sum of the squared errors for this cubic model and these data points.

Solution

a. First we need to calculate each of the sums that appear in the system of linear equations we derived in Example 13.9.6. Note first that since we have five points, \(n = 5\).

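\[ \begin{aligned}
\sum_{i=1}^{5} x_i^6 &= 1^6+4^6+6^6+8^6+9^6 = 844338 & \qquad \sum_{i=1}^{5} x_i^3 y_i &= 1^3(1)+4^3(3)+6^3(4)+8^3(7)+9^3(6) = 9015 \\
\sum_{i=1}^{5} x_i^5 &= 1^5+4^5+6^5+8^5+9^5 = 100618 & \qquad \sum_{i=1}^{5} x_i^2 y_i &= 1^2(1)+4^2(3)+6^2(4)+8^2(7)+9^2(6) = 1127 \\
\sum_{i=1}^{5} x_i^4 &= 1^4+4^4+6^4+8^4+9^4 = 12210 & \qquad \sum_{i=1}^{5} x_i y_i &= 1(1)+4(3)+6(4)+8(7)+9(6) = 147 \\
\sum_{i=1}^{5} x_i^3 &= 1^3+4^3+6^3+8^3+9^3 = 1522 & \qquad \sum_{i=1}^{5} y_i &= 1+3+4+7+6 = 21 \\
\sum_{i=1}^{5} x_i^2 &= 1^2+4^2+6^2+8^2+9^2 = 198 & \qquad \sum_{i=1}^{5} x_i &= 1+4+6+8+9 = 28
\end{aligned} \]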
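These ten sums are easy to get wrong by hand, so here is a short Python check (our own illustration, not part of the original solution) that recomputes them from the data.

```python
xs = [1, 4, 6, 8, 9]
ys = [1, 3, 4, 7, 6]

# Sums of powers of x (k = 1..6) and sums of x^k * y (k = 0..3).
power_sums = {k: sum(x ** k for x in xs) for k in range(1, 7)}
mixed_sums = {k: sum(x ** k * y for x, y in zip(xs, ys)) for k in range(4)}

for k in range(6, 0, -1):
    print(f"sum of x_i^{k} = {power_sums[k]}")
for k in range(3, -1, -1):
    print(f"sum of x_i^{k} y_i = {mixed_sums[k]}")
```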

Plugging these values into the system of equations from Example 13.9.6 gives us

\[ \begin{aligned}
844338a + 100618b + 12210c + 1522d &= 9015 \\
100618a + 12210b + 1522c + 198d &= 1127 \\
12210a + 1522b + 198c + 28d &= 147 \\
1522a + 198b + 28c + 5d &= 21
\end{aligned} \]

Solving this system by elimination (or using row-reduction in matrix form) gives us

\[ a = -0.0254167, \quad b = 0.382981, \quad c = -0.852949, \quad d = 1.547308 \]

which results in the following cubic best fit regression model for this set of data points:

\[ f(x) = -0.0254167x^3 + 0.382981x^2 - 0.852949x + 1.547308. \]
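The row reduction can be sketched in Python. To avoid any floating-point round-off in a system with coefficients as large as 844338, this sketch (our own, with the hypothetical helper `solve_exact`) performs Gauss-Jordan elimination in exact rational arithmetic using the standard-library `fractions.Fraction` type; this is a choice of ours, not the method prescribed by the text.

```python
from fractions import Fraction


def solve_exact(matrix, rhs):
    """Solve a square linear system by Gauss-Jordan row reduction,
    using exact rational arithmetic (fractions.Fraction)."""
    n = len(matrix)
    # Augmented matrix [matrix | rhs] with Fraction entries.
    aug = [[Fraction(v) for v in row] + [Fraction(r)] for row, r in zip(matrix, rhs)]
    for col in range(n):
        # Choose a row at or below `col` with a nonzero entry in this column.
        pivot = next((r for r in range(col, n) if aug[r][col] != 0), None)
        if pivot is None:
            raise ValueError("the system has no unique solution")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        # Scale the pivot row so its leading entry is 1.
        lead = aug[col][col]
        aug[col] = [v / lead for v in aug[col]]
        # Clear this column in every other row.
        for r in range(n):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col]
                aug[r] = [v - factor * w for v, w in zip(aug[r], aug[col])]
    return [row[n] for row in aug]


# Coefficients and right-hand sides of the system above.
matrix = [
    [844338, 100618, 12210, 1522],
    [100618, 12210, 1522, 198],
    [12210, 1522, 198, 28],
    [1522, 198, 28, 5],
]
rhs = [9015, 1127, 147, 21]
a, b, c, d = solve_exact(matrix, rhs)
print(f"a={float(a):.7f}, b={float(b):.6f}, c={float(c):.6f}, d={float(d):.6f}")
# a=-0.0254167, b=0.382981, c=-0.852949, d=1.547308
```

Working with fractions also shows that the leading coefficient is exactly \(a = -61/2400\).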

See this best fit cubic regression model along with these data points in Figure 13.9.16 below.

b. Now that we have the model, let's consider what the errors are for each data point and calculate the sum of the squared errors. This is the absolute minimum value of the function

\[ S(a,b,c,d) = \sum_{i=1}^{n}\left(a x_i^3 + b x_i^2 + c x_i + d - y_i\right)^2. \]

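\[ \begin{array}{c|c|c|c|c}
x_i & y_i & f(x_i) & \text{Error: } f(x_i) - y_i & \text{Squared Error: } \left(f(x_i) - y_i\right)^2 \\ \hline
1 & 1 & 1.0519231 & 0.0519231 & 0.002696 \\
4 & 3 & 2.6365385 & -0.3634615 & 0.132104 \\
6 & 4 & 4.7269231 & 0.7269231 & 0.528417 \\
8 & 7 & 6.2211538 & -0.7788462 & 0.606601 \\
9 & 6 & 6.3634615 & 0.3634615 & 0.132104 \\ \hline
\text{Total Squared Error in } S & & & & \approx 1.401922
\end{array} \]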
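The error table can be regenerated with a few lines of Python. This sketch is our own illustration; it plugs in the rounded coefficients from part a, so individual values of \(f(x_i)\) may differ from the table in the last decimal place or so.

```python
xs = [1, 4, 6, 8, 9]
ys = [1, 3, 4, 7, 6]
# Rounded coefficients of the cubic model from part a.
a, b, c, d = -0.0254167, 0.382981, -0.852949, 1.547308


def f(x):
    """The best-fit cubic model f(x) = a x^3 + b x^2 + c x + d."""
    return a * x ** 3 + b * x ** 2 + c * x + d


total = 0.0
print(" x  y      f(x)      error  squared error")
for x, y in zip(xs, ys):
    error = f(x) - y
    total += error ** 2
    print(f"{x:2d} {y:2d} {f(x):9.7f} {error:10.7f} {error ** 2:14.6f}")
print(f"Total squared error in S = {total:.6f}")
```

The computed total agrees with the table's value of about 1.401922 to within rounding.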

Contributors

- Edited, Mixed, and expanded by Paul Seeburger (Monroe Community College)

(Applications of Optimization - Approach 1 and Example 13.9.1)

(The Method of Least Squares Regression)

- Gregory Hartman (Virginia Military Institute). Contributions were made by Troy Siemers and Dimplekumar Chalishajar of VMI and Brian Heinold of Mount Saint Mary's University. This content is copyrighted by a Creative Commons Attribution - Noncommercial (BY-NC) License. http://www.apexcalculus.com/

(Examples 13.9.2 and 13.9.3 and some exposition at top and bottom of section.)

- Gilbert Strang (MIT) and Edwin “Jed” Herman (Harvey Mudd) with many contributing authors. This content by OpenStax is licensed with a CC-BY-SA-NC 4.0 license. Download for free at http://cnx.org.

(Examples 13.9.4 and 13.9.5 and Exercise 13.9.1.)