6.7: Inverse and Implicit Functions. Open and Closed Maps


I. "If \(f \in CD^1\) at \(\vec{p}\), then \(f\) resembles a linear map (namely \(df\)) at \(\vec{p}\)." Pursuing this basic idea, we first make precise our notion of "\(f \in CD^1\) at \(\vec{p}\)."

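Definition 1. A map \(f: E' \to E\) is continuously differentiable, or of class \(CD^1\) (written \(f \in CD^1\)), at \(\vec{p}\) iff the following statement is true:

Given any \(\varepsilon > 0\), there is \(\delta > 0\) such that \(f\) is differentiable on the globe \(\overline{G} = \overline{G}_{\vec{p}}(\delta)\), with \(\|df(\vec{x};\cdot) - df(\vec{p};\cdot)\| < \varepsilon\) for all \(\vec{x} \in \overline{G}\).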

By Problem 10 in §5, this definition agrees with Definition 1 in §5 but is no longer limited to the case \(E' = E^n\) (\(C^n\)). See also Problems 1 and 2 below.
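As a numerical illustration (a sketch under assumptions of our own, not part of the text), take the hypothetical map \(f(x, y) = (x^2 - y^2,\ 2xy)\) on \(E^2\) at \(\vec{p} = (1, 0)\). Its differential has matrix \(\begin{pmatrix} 2x & -2y \\ 2y & 2x \end{pmatrix}\), and one can check by hand that \(\|df(\vec{x};\cdot) - df(\vec{p};\cdot)\| = 2|\vec{x} - \vec{p}|\). The helper names `jacobian`, `op_norm`, and `sup_deviation` are ours; the supremum in Definition 1 is only estimated by sampling points of the globe, using the Euclidean operator norm.

```python
import math

# Hypothetical example map (not from the text): f(x, y) = (x**2 - y**2, 2*x*y).

def jacobian(x, y):
    # matrix of df((x, y); .), computed by hand from the partial derivatives
    return ((2 * x, -2 * y), (2 * y, 2 * x))

def op_norm(a):
    # Euclidean operator norm (largest singular value) of a 2x2 matrix, using
    # s1**2 + s2**2 = sum of squared entries and s1 * s2 = |det|
    (a11, a12), (a21, a22) = a
    frob2 = a11 * a11 + a12 * a12 + a21 * a21 + a22 * a22
    det = a11 * a22 - a12 * a21
    disc = max(frob2 * frob2 - 4 * det * det, 0.0)
    return math.sqrt((frob2 + math.sqrt(disc)) / 2)

def sup_deviation(center, delta, samples=200):
    # estimate sup ||df(x; .) - df(center; .)|| over the closed globe of radius
    # delta about center, by sampling three circles (radii delta/4, delta/2, delta)
    jc = jacobian(*center)
    worst = 0.0
    for k in range(samples):
        theta = 2 * math.pi * k / samples
        for frac in (0.25, 0.5, 1.0):
            x = center[0] + frac * delta * math.cos(theta)
            y = center[1] + frac * delta * math.sin(theta)
            jx = jacobian(x, y)
            diff = tuple(tuple(jx[i][j] - jc[i][j] for j in range(2)) for i in range(2))
            worst = max(worst, op_norm(diff))
    return worst

p = (1.0, 0.0)
for delta in (0.4, 0.1, 0.01):
    print(delta, sup_deviation(p, delta))   # about 2 * delta in each case
```

Since the estimate is about \(2\delta\), the condition of Definition 1 holds for this example as soon as \(\delta < \varepsilon/2\); so this \(f\) is of class \(CD^1\) at \(\vec{p}\).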

We now obtain the following result.

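Theorem 1. Let \(E'\) and \(E\) be complete. If \(f: E' \to E\) is of class \(CD^1\) at \(\vec{p}\) and if \(df(\vec{p};\cdot)\) is bijective (§6), then \(f\) is one-to-one on some globe \(\overline{G} = \overline{G}_{\vec{p}}(\delta)\).

Thus \(f\) "locally" resembles \(df(\vec{p};\cdot)\) in this respect.

Proof.

Set \(\phi = df(\vec{p};\cdot)\) and

\[\|\phi^{-1}\| = \frac{1}{\varepsilon}\]

(cf. Theorem 2 of §6).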

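By Definition 1, fix \(\delta > 0\) so that, for \(\vec{x} \in \overline{G} = \overline{G}_{\vec{p}}(\delta)\),

\[\|df(\vec{x};\cdot) - \phi\| < \tfrac{1}{2}\varepsilon.\]

Then by Note 5 in §2,

\[(\forall \vec{x} \in \overline{G})(\forall \vec{u} \in E') \quad |df(\vec{x};\vec{u}) - \phi(\vec{u})| \le \tfrac{1}{2}\varepsilon|\vec{u}|. \tag{1}\]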

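Now fix any \(\vec{r}, \vec{s} \in \overline{G}\), \(\vec{r} \ne \vec{s}\), and set \(\vec{u} = \vec{r} - \vec{s} \ne \vec{0}\). Again, by Note 5 in §2,

\[|\vec{u}| = |\phi^{-1}(\phi(\vec{u}))| \le \|\phi^{-1}\|\,|\phi(\vec{u})| = \frac{1}{\varepsilon}|\phi(\vec{u})|;\]

so

\[0 < \varepsilon|\vec{u}| \le |\phi(\vec{u})|. \tag{2}\]

By convexity, \(\overline{G} \supseteq I = L[\vec{s}, \vec{r}]\), so (1) holds for \(\vec{x} \in I\), \(\vec{x} = \vec{s} + t\vec{u}\), \(0 \le t \le 1\).

Noting this, set

\[h(t) = f(\vec{s} + t\vec{u}) - t\phi(\vec{u}), \quad t \in E^1.\]

Then for \(0 \le t \le 1\),

\[h'(t) = D_{\vec{u}} f(\vec{s} + t\vec{u}) - \phi(\vec{u}) = df(\vec{s} + t\vec{u}; \vec{u}) - \phi(\vec{u}).\]

(Verify!) Thus by (1) and (2),

\[\sup_{0 \le t \le 1} |h'(t)| = \sup_{0 \le t \le 1} |df(\vec{s} + t\vec{u}; \vec{u}) - \phi(\vec{u})| \le \frac{\varepsilon}{2}|\vec{u}| \le \frac{1}{2}|\phi(\vec{u})|.\]

(Explain!) Now, by Corollary 1 in Chapter 5, §4,

\[|h(1) - h(0)| \le (1 - 0) \cdot \sup_{0 \le t \le 1} |h'(t)| \le \frac{1}{2}|\phi(\vec{u})|.\]

As \(h(0) = f(\vec{s})\) and

\[h(1) = f(\vec{s} + \vec{u}) - \phi(\vec{u}) = f(\vec{r}) - \phi(\vec{u}),\]

we obtain (even if \(\vec{r} = \vec{s}\))

\[|f(\vec{r}) - f(\vec{s}) - \phi(\vec{u})| \le \frac{1}{2}|\phi(\vec{u})| \quad (\vec{r}, \vec{s} \in \overline{G},\ \vec{u} = \vec{r} - \vec{s}). \tag{3}\]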

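But by the triangle law,

\[|\phi(\vec{u})| - |f(\vec{r}) - f(\vec{s})| \le |f(\vec{r}) - f(\vec{s}) - \phi(\vec{u})|.\]

Thus, by (3),

\[|f(\vec{r}) - f(\vec{s})| \ge \frac{1}{2}|\phi(\vec{u})| \ge \frac{1}{2}\varepsilon|\vec{u}| = \frac{1}{2}\varepsilon|\vec{r} - \vec{s}| \tag{4}\]

by (2).

Hence \(f(\vec{r}) \ne f(\vec{s})\) whenever \(\vec{r} \ne \vec{s}\) in \(\overline{G}\); so \(f\) is one-to-one on \(\overline{G}\), as claimed. ◻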
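A quick numerical spot check of (4), under assumptions of our own: identify \(E^2\) with \(C\) (so that \(|\cdot|\) is the Euclidean norm) and take the hypothetical map \(f(z) = z^2\), i.e., \(f(x, y) = (x^2 - y^2,\ 2xy)\), at \(\vec{p} = (1, 0)\). Here \(\phi\) is multiplication by 2, so \(\varepsilon = 2\), and \(\delta = 0.4\) satisfies the choice made in the proof, since \(\|df(\vec{x};\cdot) - \phi\| = 2|\vec{x} - \vec{p}| \le 0.8 < \tfrac{1}{2}\varepsilon\). Random sampling is only a sanity check, not a proof.

```python
import cmath
import random

# Hypothetical example (not from the text): E' = E = E^2, identified with C,
# f(x, y) = (x**2 - y**2, 2xy), i.e. z -> z**2, at p = (1, 0).
P = 1 + 0j
EPS = 2.0     # phi = df(p; .) is multiplication by 2, so ||phi^{-1}|| = 1/2 = 1/EPS
DELTA = 0.4   # ||df(x; .) - phi|| = 2|x - p| <= 0.8 < EPS / 2 on the closed globe

def f(z):
    return z * z

def random_point(rng):
    # a random point of the closed globe of radius DELTA about P
    radius = DELTA * rng.random()
    angle = 2 * cmath.pi * rng.random()
    return P + radius * cmath.exp(1j * angle)

rng = random.Random(0)
worst_ratio = float("inf")
for _ in range(10_000):
    r, s = random_point(rng), random_point(rng)
    if r != s:
        # formula (4) predicts |f(r) - f(s)| / |r - s| >= EPS / 2
        worst_ratio = min(worst_ratio, abs(f(r) - f(s)) / abs(r - s))

print(worst_ratio >= EPS / 2)      # True
print(f(1 + 0j) == f(-1 + 0j))     # True: f is not one-to-one on all of E^2
```

The last line shows why the conclusion is only local: \(f\) identifies \(\vec{z}\) and \(-\vec{z}\), but not within the small globe about \(\vec{p}\).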

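Under the assumptions of Theorem 1, the maps \(f\) and \(f^{-1}\) (the inverse of \(f\) restricted to \(\overline{G}\)) are uniformly continuous on \(\overline{G}\) and \(f[\overline{G}]\), respectively.

Proof.

By (3),

\[|f(\vec{r}) - f(\vec{s})| \le |\phi(\vec{u})| + \frac{1}{2}|\phi(\vec{u})| \le 2|\phi(\vec{u})| \le 2\|\phi\|\,|\vec{u}| = 2\|\phi\|\,|\vec{r} - \vec{s}| \quad (\vec{r}, \vec{s} \in \overline{G}).\]

This implies uniform continuity for \(f\). (Why?)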

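Next, let \(g = f^{-1}\) on \(H = f[\overline{G}]\).

If \(\vec{x}, \vec{y} \in H\), let \(\vec{r} = g(\vec{x})\) and \(\vec{s} = g(\vec{y})\); so \(\vec{r}, \vec{s} \in \overline{G}\), with \(\vec{x} = f(\vec{r})\) and \(\vec{y} = f(\vec{s})\). Hence by (4),

\[|\vec{x} - \vec{y}| \ge \frac{1}{2}\varepsilon|g(\vec{x}) - g(\vec{y})|,\]

which proves the claim for \(g\), too. ◻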

Again, \(f\) resembles \(\phi\), which is uniformly continuous, along with \(\phi^{-1}\).
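The two Lipschitz bounds in this proof, \(|f(\vec{r}) - f(\vec{s})| \le 2\|\phi\|\,|\vec{r} - \vec{s}|\) and \(|g(\vec{x}) - g(\vec{y})| \le \frac{2}{\varepsilon}|\vec{x} - \vec{y}|\), can be spot-checked on the same hypothetical example as before (our own choice): \(f(z) = z^2\) near \(\vec{p} = 1\), with \(E^2\) identified with \(C\), \(\|\phi\| = 2\), \(\varepsilon = 2\), \(\delta = 0.4\). In this sketch \(g\) is taken to be Python's principal square root `cmath.sqrt`, which inverts \(f\) on this globe because every point of it has positive real part.

```python
import cmath
import random

# Same hypothetical example: f(z) = z**2 near p = 1 (E^2 identified with C),
# phi = df(p; .) = multiplication by 2, so ||phi|| = 2 and eps = 1/||phi^{-1}|| = 2.
P, DELTA, EPS, PHI_NORM = 1 + 0j, 0.4, 2.0, 2.0

def f(z):
    return z * z

def g(w):
    # inverse of f restricted to the globe: the principal square root,
    # valid here because every point of the globe has positive real part
    return cmath.sqrt(w)

rng = random.Random(1)
points = [P + DELTA * rng.random() * cmath.exp(2j * cmath.pi * rng.random())
          for _ in range(300)]

ok_f = ok_g = ok_inverse = True
for r in points:
    ok_inverse = ok_inverse and abs(g(f(r)) - r) < 1e-12
    for s in points:
        x, y = f(r), f(s)
        # Lipschitz bound for f from the proof: |f(r) - f(s)| <= 2||phi|| |r - s|
        ok_f = ok_f and abs(x - y) <= 2 * PHI_NORM * abs(r - s) + 1e-12
        # Lipschitz bound for g = f^{-1}, from (4): |g(x) - g(y)| <= (2/eps) |x - y|
        ok_g = ok_g and abs(g(x) - g(y)) <= (2 / EPS) * abs(x - y) + 1e-12

print(ok_f, ok_g, ok_inverse)   # True True True
```

The small additive tolerance only absorbs floating-point rounding; in this example both bounds hold with a margin of at least 20 percent.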

II. We introduce the following definition.

A map \(f: (S, \rho) \to (T, \rho')\) is closed (open) on \(D \subseteq S\) iff, for any \(X \subseteq D\), the set \(f[X]\) is closed (open) in \(T\) whenever \(X\) is so in \(S\).

Note that continuous maps have such a property for inverse images (Problem 15 in Chapter 4, §2).
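For images, however, continuity alone is not enough. Two simple examples of our own, both continuous on \(E^1\):

\[f(x) = x^2: \quad f[(-1, 1)] = [0, 1), \text{ which is not open in } E^1;\]

\[f(x) = e^x: \quad f[E^1] = (0, +\infty), \text{ which is not closed in } E^1.\]

In the first example \(df(0;\cdot) = 0\) is not bijective. In the second, \(df(x;\cdot)\) is bijective everywhere, yet closedness fails on the unbounded closed set \(E^1\); the results below assert closedness only on a small closed globe \(\overline{G}\).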

Under the assumptions of Theorem 1, \(f\) is closed on \(\overline{G}\), and so the set \(f[\overline{G}]\) is closed in \(E\).

Similarly for the map \(f^{-1}\) on \(f[\overline{G}]\).

Proof for \(E' = E = E^n\) (\(C^n\)) (for the general case, see Problem 6).

Given any closed \(X \subseteq \overline{G}\), we must show that \(f[X]\) is closed in \(E\).

Now, as \(\overline{G}\) is closed and bounded, it is compact (Theorem 4 of Chapter 4, §6).

So also is \(X\) (Theorem 1 in Chapter 4, §6), and so is \(f[X]\), as \(f\) is continuous on \(\overline{G}\) (Theorem 1 of Chapter 4, §8).

By Theorem 2 in Chapter 4, §6, \(f[X]\) is closed, as required. ◻

For the rest of this section, we shall set \(E' = E = E^n\) (\(C^n\)).

Theorem 2. If \(E' = E = E^n\) (\(C^n\)) in Theorem 1, with other assumptions unchanged, then \(f\) is open on the globe \(G = G_{\vec{p}}(\delta)\), with \(\delta\) sufficiently small.

Proof.

We first prove the following lemma.

Lemma. \(f[G]\) contains a globe \(G_{\vec{q}}(\alpha)\), where \(\vec{q} = f(\vec{p})\).

Proof.

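Indeed, let

\[\alpha = \frac{1}{4}\varepsilon\delta,\]

where \(\delta\) and \(\varepsilon\) are as in the proof of Theorem 1. (We continue the notation and formulas of that proof.)

Fix any \(\vec{c} \in G_{\vec{q}}(\alpha)\); so

\[|\vec{c} - \vec{q}| < \alpha = \frac{1}{4}\varepsilon\delta.\]

Set \(h = |f - \vec{c}|\) on \(E'\). As \(f\) is uniformly continuous on \(\overline{G}\), so is \(h\).

Now, \(\overline{G}\) is compact in \(E^n\) (\(C^n\)); so Theorem 2(ii) in Chapter 4, §8, yields a point \(\vec{r} \in \overline{G}\) such that

\[h(\vec{r}) = \min h[\overline{G}]. \tag{6}\]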

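We claim that \(\vec{r}\) is in \(G\) (the interior of \(\overline{G}\)).

Otherwise, \(|\vec{r} - \vec{p}| = \delta\), and then, by (4),

\[2\alpha = \frac{1}{2}\varepsilon\delta = \frac{1}{2}\varepsilon|\vec{r} - \vec{p}| \le |f(\vec{r}) - f(\vec{p})| \le |f(\vec{r}) - \vec{c}| + |\vec{c} - f(\vec{p})| = h(\vec{r}) + h(\vec{p}). \tag{7}\]

But

\[h(\vec{p}) = |\vec{c} - f(\vec{p})| = |\vec{c} - \vec{q}| < \alpha;\]

and so (7) yields \(2\alpha < h(\vec{r}) + \alpha\), i.e.,

\[h(\vec{p}) < \alpha < h(\vec{r}),\]

contrary to the minimality of \(h(\vec{r})\) (see (6)). Thus \(|\vec{r} - \vec{p}|\) cannot equal \(\delta\).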

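We obtain \(|\vec{r} - \vec{p}| < \delta\), so \(\vec{r} \in G_{\vec{p}}(\delta) = G\) and \(f(\vec{r}) \in f[G]\). We shall now show that \(\vec{c} = f(\vec{r})\).

To this end, we set \(\vec{v} = \vec{c} - f(\vec{r})\) and prove that \(\vec{v} = \vec{0}\). Let

\[\vec{u} = \phi^{-1}(\vec{v}),\]

where

\[\phi = df(\vec{p};\cdot),\]

as before. Then

\[\vec{v} = \phi(\vec{u}) = df(\vec{p};\vec{u}).\]

With \(\vec{r}\) as above, fix some

\[\vec{s} = \vec{r} + t\vec{u} \quad (0 < t < 1)\]

with \(t\) so small that \(\vec{s} \in G\) also. Then by formula (3),

\[|f(\vec{s}) - f(\vec{r}) - \phi(t\vec{u})| \le \frac{1}{2}|t\vec{v}|;\]

also,

\[|f(\vec{r}) - \vec{c} + \phi(t\vec{u})| = (1 - t)|\vec{v}| = (1 - t)h(\vec{r})\]

by our choice of \(\vec{v}\), \(\vec{u}\), and \(h\). Hence by the triangle law,

\[h(\vec{s}) = |f(\vec{s}) - \vec{c}| \le \left(1 - \frac{1}{2}t\right)h(\vec{r}).\]

(Verify!)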

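As \(0 < t < 1\), this implies \(h(\vec{r}) = 0\) (otherwise, \(h(\vec{s}) < h(\vec{r})\), violating (6)).

Thus, indeed,

\[|\vec{v}| = |f(\vec{r}) - \vec{c}| = 0,\]

i.e.,

\[\vec{c} = f(\vec{r}) \in f[G] \quad \text{for } \vec{r} \in G.\]

But \(\vec{c}\) was an arbitrary point of \(G_{\vec{q}}(\alpha)\). Hence

\[G_{\vec{q}}(\alpha) \subseteq f[G],\]

proving the lemma. ◻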
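A numerical sketch of the lemma for the same hypothetical map as above, \(f(z) = z^2\) near \(\vec{p} = 1\) (\(E^2\) identified with \(C\), \(\varepsilon = 2\), \(\delta = 0.4\)), so that \(\alpha = \frac{1}{4}\varepsilon\delta = 0.2\). Instead of minimizing \(h = |f - \vec{c}|\) as the proof does, the sketch locates \(\vec{r}\) by a different, standard technique: the fixed-point iteration \(\vec{r} \mapsto \vec{r} - \phi^{-1}(f(\vec{r}) - \vec{c})\). In this example that map is a contraction of the globe into itself (its derivative is \(1 - r\), of modulus at most \(\delta = 0.4\)). The helper `solve` is hypothetical.

```python
import cmath

# Hypothetical example: f(z) = z**2 near p = 1 (E^2 identified with C).
P, DELTA, EPS = 1 + 0j, 0.4, 2.0
Q = P * P                   # q = f(p)
ALPHA = EPS * DELTA / 4     # alpha = (1/4) * eps * delta, as in the proof of the lemma

def f(z):
    return z * z

def solve(c, steps=60):
    # Hypothetical helper: approximate the point r of the globe with f(r) = c by the
    # iteration r <- r - phi^{-1}(f(r) - c), where phi = df(p; .) = multiplication by 2.
    # This is the contraction-mapping argument, not the minimization used in the text.
    r = P
    for _ in range(steps):
        r = r - (f(r) - c) / 2
    return r

results = []
for k in range(12):
    c = Q + 0.99 * ALPHA * cmath.exp(2j * cmath.pi * k / 12)   # c in G_q(alpha)
    r = solve(c)
    results.append((c, r))
    print(abs(r - P) < DELTA, abs(f(r) - c))   # True, and a residual near 0
```

Every sampled \(\vec{c} \in G_{\vec{q}}(\alpha)\) is hit by some \(\vec{r} \in G\), as the lemma asserts.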

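Proof of Theorem 2. The lemma shows that \(f(\vec{p})\) is in the interior of \(f[G]\) if \(\vec{p}\), \(f\), \(df(\vec{p};\cdot)\), and \(\delta\) are as in Theorem 1.

But Definition 1 implies that here \(f \in CD^1\) on all of \(G\) (see Problem 1).

Also, \(df(\vec{x};\cdot)\) is bijective for any \(\vec{x} \in G\) by our choice of \(G\) and Theorems 1 and 2 in §6.

Thus the lemma applies at every \(\vec{x} \in G\), with globes about \(\vec{x}\) as small as we like. Hence, if \(X \subseteq G\) is open, each \(f(\vec{x})\) with \(\vec{x} \in X\) is an interior point of \(f[X]\) (take a globe about \(\vec{x}\) inside \(X\)); i.e., \(f\) maps any open set \(X \subseteq G\) onto an open set \(f[X]\), as required. ◻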

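Note 1. A map

\[f: (S, \rho) \overset{\text{onto}}{\longleftrightarrow} (T, \rho')\]

is both open and closed ("clopen") iff \(f^{-1}\) is continuous; see Problem 15(iv)(v) in Chapter 4, §2, interchanging \(f\) and \(f^{-1}\).

Thus \(\phi = df(\vec{p};\cdot)\) in Theorem 1 is "clopen" on all of \(E'\), as \(\phi^{-1}\) is continuous.

Again, \(f\) locally resembles \(df(\vec{p};\cdot)\).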

III. The Inverse Function Theorem. We now further pursue these ideas.

Theorem 3. Under the assumptions of Theorem 2, let \(g\) be the inverse of \(f_G\) (\(f\) restricted to \(G = G_{\vec{p}}(\delta)\)).

Then \(g \in CD^1\) on \(f[G]\), and \(dg(\vec{y};\cdot)\) is the inverse of \(df(\vec{x};\cdot)\) whenever \(\vec{x} = g(\vec{y})\), \(\vec{x} \in G\).

Briefly: "The differential of the inverse is the inverse of the differential."

Proof.

Fix any \(\vec{y} \in f[G]\) and \(\vec{x} = g(\vec{y})\); so \(\vec{y} = f(\vec{x})\) and \(\vec{x} \in G\). Let \(U = df(\vec{x};\cdot)\).

As noted above, \(U\) is bijective for every \(\vec{x} \in G\) by Theorems 1 and 2 in §6; so we may set \(V = U^{-1}\). We must show that \(V = dg(\vec{y};\cdot)\).

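To do this, give \(\vec{y}\) an arbitrary (variable) increment \(\Delta\vec{y}\), so small that \(\vec{y} + \Delta\vec{y}\) stays in \(f[G]\) (an open set by Theorem 2).

As \(g\) and \(f_G\) are one-to-one, \(\Delta\vec{y}\) uniquely determines

\[\Delta\vec{x} = g(\vec{y} + \Delta\vec{y}) - g(\vec{y}) = \vec{t},\]

and vice versa:

\[\Delta\vec{y} = f(\vec{x} + \vec{t}) - f(\vec{x}).\]

Here \(\Delta\vec{y}\) and \(\vec{t}\) are the mutually corresponding increments of \(\vec{y} = f(\vec{x})\) and \(\vec{x} = g(\vec{y})\). By the continuity of \(f\) and \(g\), \(\Delta\vec{y} \to \vec{0}\) iff \(\vec{t} \to \vec{0}\).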

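As \(U = df(\vec{x};\cdot)\),

\[\lim_{\vec{t} \to \vec{0}} \frac{1}{|\vec{t}|}\,|f(\vec{x} + \vec{t}) - f(\vec{x}) - U(\vec{t})| = 0,\]

or

\[\lim_{\vec{t} \to \vec{0}} \frac{1}{|\vec{t}|}\,|F(\vec{t})| = 0, \tag{8}\]

where

\[F(\vec{t}) = f(\vec{x} + \vec{t}) - f(\vec{x}) - U(\vec{t}). \tag{9}\]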

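As \(V = U^{-1}\), we have

\[V(U(\vec{t})) = \vec{t} = g(\vec{y} + \Delta\vec{y}) - g(\vec{y}).\]

So from (9), since \(f(\vec{x} + \vec{t}) - f(\vec{x}) = \Delta\vec{y}\),

\[V(F(\vec{t})) = V(\Delta\vec{y}) - \vec{t} = V(\Delta\vec{y}) - [g(\vec{y} + \Delta\vec{y}) - g(\vec{y})];\]

that is,

\[\frac{1}{|\Delta\vec{y}|}\,|g(\vec{y} + \Delta\vec{y}) - g(\vec{y}) - V(\Delta\vec{y})| = \frac{|V(F(\vec{t}))|}{|\Delta\vec{y}|}, \quad \Delta\vec{y} \ne \vec{0}. \tag{10}\]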

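Now, formula (4), with \(\vec{r} = \vec{x} + \vec{t}\), \(\vec{s} = \vec{x}\), and \(\vec{u} = \vec{r} - \vec{s} = \vec{t}\), shows that

\[|f(\vec{x} + \vec{t}) - f(\vec{x})| \ge \frac{1}{2}\varepsilon|\vec{t}|;\]

i.e., \(|\Delta\vec{y}| \ge \frac{1}{2}\varepsilon|\vec{t}|\). Hence by (8),

\[\frac{|V(F(\vec{t}))|}{|\Delta\vec{y}|} \le \frac{|V(F(\vec{t}))|}{\frac{1}{2}\varepsilon|\vec{t}|} = \frac{2}{\varepsilon}\left|V\!\left(\frac{1}{|\vec{t}|}F(\vec{t})\right)\right| \le \frac{2}{\varepsilon}\|V\|\,\frac{1}{|\vec{t}|}|F(\vec{t})| \to 0 \quad \text{as } \vec{t} \to \vec{0}.\]

Since \(\vec{t} \to \vec{0}\) as \(\Delta\vec{y} \to \vec{0}\) (change of variables!), the expression (10) tends to 0 as \(\Delta\vec{y} \to \vec{0}\).

By definition, then, \(g\) is differentiable at \(\vec{y}\), with \(dg(\vec{y};\cdot) = V = U^{-1}\).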

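Moreover, Corollary 3 in §6 applies here. Thus

\[(\forall \delta' > 0)(\exists \delta'' > 0) \quad \|U - W\| < \delta'' \implies \|U^{-1} - W^{-1}\| < \delta'.\]

Take here \(U = df(\vec{x};\cdot)\) and \(W = df(\vec{x} + \vec{t};\cdot)\), so that, by what we have just proved, \(U^{-1} = dg(\vec{y};\cdot)\) and \(W^{-1} = dg(\vec{y} + \Delta\vec{y};\cdot)\). As \(f \in CD^1\) on \(G\), \(\|U - W\| \to 0\) as \(\vec{t} \to \vec{0}\), and \(\vec{t} \to \vec{0}\) as \(\Delta\vec{y} \to \vec{0}\); hence \(\|dg(\vec{y} + \Delta\vec{y};\cdot) - dg(\vec{y};\cdot)\| \to 0\) as \(\Delta\vec{y} \to \vec{0}\), i.e., \(g \in CD^1\) near \(\vec{y}\). This completes the proof. ◻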
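Theorem 3 can be checked numerically on the hypothetical example used above (our choice): \(f(x, y) = (x^2 - y^2,\ 2xy)\) near \(\vec{p} = (1, 0)\), whose local inverse \(g\) is, in this sketch, the principal complex square root. The sketch estimates the matrix of \(dg(\vec{y};\cdot)\) by central differences (step \(h = 10^{-6}\), our choice) and compares it with the exact inverse of the matrix of \(df(\vec{x};\cdot)\), \(\vec{x} = g(\vec{y})\).

```python
import cmath

# Hypothetical example map f(x, y) = (x**2 - y**2, 2xy) near p = (1, 0).

def f(x, y):
    return (x * x - y * y, 2 * x * y)

def g(u, v):
    # local inverse of f near p: the principal complex square root of u + iv
    # (appropriate here because the points x considered have positive first coordinate)
    w = cmath.sqrt(complex(u, v))
    return (w.real, w.imag)

def jacobian_fd(func, a, b, h=1e-6):
    # 2x2 Jacobian of func at (a, b) by central differences:
    # entry [i][j] approximates the partial of component i with respect to variable j
    cols = []
    for da, db in ((h, 0.0), (0.0, h)):
        plus, minus = func(a + da, b + db), func(a - da, b - db)
        cols.append([(plus[i] - minus[i]) / (2 * h) for i in range(2)])
    return [[cols[j][i] for j in range(2)] for i in range(2)]

def inverse_2x2(m):
    (a, b), (c, d) = m
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]

x = (1.1, 0.2)                 # a point of G_p(0.4)
y = f(*x)                      # the corresponding point y = f(x)
dg = jacobian_fd(g, *y)        # numerical dg(y; .)
df_inv = inverse_2x2([[2 * x[0], -2 * x[1]], [2 * x[1], 2 * x[0]]])   # df(x; .)^{-1}
err = max(abs(dg[i][j] - df_inv[i][j]) for i in range(2) for j in range(2))
print(err)                     # tiny: dg(y; .) agrees with df(x; .)^{-1}
```

Here both matrices come out close to \(\begin{pmatrix} 0.44 & 0.08 \\ -0.08 & 0.44 \end{pmatrix}\): "the differential of the inverse is the inverse of the differential."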

Note 2. If \(E' = E = E^n\) (\(C^n\)), the bijectivity of \(\phi = df(\vec{p};\cdot)\) is equivalent to

\[\det[\phi] = \det[f'(\vec{p})] \ne 0\]

(Theorem 1 of §6).

In this case, the fact that \(f\) is one-to-one on \(G = G_{\vec{p}}(\delta)\) means, componentwise (see Note 3 in §6), that the system of \(n\) equations

\[f_i(\vec{x}) = f_i(x_1, \ldots, x_n) = y_i, \quad i = 1, \ldots, n,\]

has a unique solution in \(G\) for the \(n\) unknowns \(x_k\) as long as

\[(y_1, \ldots, y_n) = \vec{y} \in f[G].\]

Theorem 3 shows that this solution has the form

\[x_k = g_k(\vec{y}), \quad k = 1, \ldots, n,\]

where the \(g_k\) are of class \(CD^1\) on \(f[G]\), provided the \(f_i\) are of class \(CD^1\) near \(\vec{p}\) and \(\det[f'(\vec{p})] \ne 0\). Here

\[\det[f'(\vec{p})] = J_f(\vec{p}),\]

as in §6.

Thus again \(f\) "locally" resembles a linear map, \(\phi = df(\vec{p};\cdot)\).
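For example (an illustration of our own), for \(f(x_1, x_2) = (x_1^2 - x_2^2,\ 2x_1x_2)\) on \(E^2\),

\[J_f(\vec{x}) = \det\begin{pmatrix} 2x_1 & -2x_2 \\ 2x_2 & 2x_1 \end{pmatrix} = 4(x_1^2 + x_2^2).\]

So \(J_f(\vec{p}) \ne 0\) for every \(\vec{p} \ne \vec{0}\), and near such a \(\vec{p}\) the system \(x_1^2 - x_2^2 = y_1\), \(2x_1x_2 = y_2\) has a unique local solution \(x_k = g_k(\vec{y})\) of class \(CD^1\). At \(\vec{p} = \vec{0}\), \(J_f = 0\), and indeed no globe about \(\vec{0}\) is mapped one-to-one, since \(f(-\vec{x}) = f(\vec{x})\).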

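IV. The Implicit Function Theorem. Generalizing, we now ask: what about solving \(n\) equations in \(n + m\) unknowns \(x_1, \ldots, x_n, y_1, \ldots, y_m\)? Say, we want to solve

\[f_k(x_1, \ldots, x_n, y_1, \ldots, y_m) = 0, \quad k = 1, 2, \ldots, n, \tag{11}\]

for the first \(n\) unknowns (or variables) \(x_k\), thus expressing them as

\[x_k = H_k(y_1, \ldots, y_m), \quad k = 1, \ldots, n,\]

with \(H_k: E^m \to E^1\) or \(H_k: C^m \to C\).

Let us set \(\vec{x} = (x_1, \ldots, x_n)\), \(\vec{y} = (y_1, \ldots, y_m)\), and

\[(\vec{x}, \vec{y}) = (x_1, \ldots, x_n, y_1, \ldots, y_m),\]

so that \((\vec{x}, \vec{y}) \in E^{n+m}\) (\(C^{n+m}\)).

Thus the system of equations (11) simplifies to

\[f_k(\vec{x}, \vec{y}) = 0, \quad k = 1, \ldots, n,\]

or

\[f(\vec{x}, \vec{y}) = \vec{0},\]

where \(f = (f_1, \ldots, f_n)\) is a map of \(E^{n+m}\) (\(C^{n+m}\)) into \(E^n\) (\(C^n\)); \(f\) is a function of \(n + m\) variables, but it has only \(n\) components \(f_k\); i.e.,

\[f(\vec{x}, \vec{y}) = f(x_1, \ldots, x_n, y_1, \ldots, y_m)\]

is a vector in \(E^n\) (\(C^n\)).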

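Theorem 4. Let \(E' = E^{n+m}\) (\(C^{n+m}\)), \(E = E^n\) (\(C^n\)), and let \(f: E' \to E\) be of class \(CD^1\) near

\[(\vec{p}, \vec{q}) = (p_1, \ldots, p_n, q_1, \ldots, q_m), \quad \vec{p} \in E^n\ (C^n),\ \vec{q} \in E^m\ (C^m).\]

Let \([\phi]\) be the \(n \times n\) matrix

\[\bigl(D_j f_k(\vec{p}, \vec{q})\bigr), \quad j, k = 1, \ldots, n.\]

If \(\det[\phi] \ne 0\) and if \(f(\vec{p}, \vec{q}) = \vec{0}\), then there are open sets

\[P \subseteq E^n\ (C^n) \quad \text{and} \quad Q \subseteq E^m\ (C^m),\]

with \(\vec{p} \in P\) and \(\vec{q} \in Q\), for which there is a unique map

\[H: Q \to P\]

with

\[f(H(\vec{y}), \vec{y}) = \vec{0}\]

for all \(\vec{y} \in Q\); furthermore, \(H \in CD^1\) on \(Q\).

Thus \(\vec{x} = H(\vec{y})\) is a solution of (11) in vector form.

Proof.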

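With the above notation, set

\[F(\vec{x}, \vec{y}) = (f(\vec{x}, \vec{y}), \vec{y}), \quad F: E' \to E'.\]

Then

\[F(\vec{p}, \vec{q}) = (f(\vec{p}, \vec{q}), \vec{q}) = (\vec{0}, \vec{q}),\]

since \(f(\vec{p}, \vec{q}) = \vec{0}\).

As \(f \in CD^1\) near \((\vec{p}, \vec{q})\), so is \(F\) (verify componentwise via Problem 9(ii) in §3 and Definition 1 of §5).

By Theorem 4, §3, \(\det[F'(\vec{p}, \vec{q})] = \det[\phi] \ne 0\) (explain!).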
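One way to see this (a sketch): order the rows of \([F'(\vec{p}, \vec{q})]\) as the components \(f_1, \ldots, f_n\) followed by the last \(m\) components \(y_1, \ldots, y_m\) of \(F\), and the columns as the variables \(x_1, \ldots, x_n, y_1, \ldots, y_m\). Since those last \(m\) components are the \(y_i\) themselves, their partials in the \(x_j\) vanish, and the matrix is block upper triangular:

\[[F'(\vec{p}, \vec{q})] = \begin{pmatrix} \bigl(D_j f_k(\vec{p}, \vec{q})\bigr)_{k, j \le n} & \bigl(D_{n+i} f_k(\vec{p}, \vec{q})\bigr)_{k \le n,\ i \le m} \\ 0 & I_m \end{pmatrix}, \qquad \det[F'(\vec{p}, \vec{q})] = \det[\phi] \cdot \det I_m = \det[\phi],\]

where \(I_m\) is the \(m \times m\) identity matrix.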

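Thus Theorem 1 above shows that \(F\) is one-to-one on some globe \(G\) about \((\vec{p}, \vec{q})\).

Clearly \(G\) contains an open interval about \((\vec{p}, \vec{q})\). We denote it by \(P \times Q\), where \(\vec{p} \in P\), \(\vec{q} \in Q\); \(P\) is open in \(E^n\) (\(C^n\)) and \(Q\) is open in \(E^m\) (\(C^m\)).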

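By Theorem 3, \(F_{P \times Q}\) (\(F\) restricted to \(P \times Q\)) has an inverse

\[g: A \overset{\text{onto}}{\longleftrightarrow} P \times Q,\]

where \(A = F[P \times Q]\) is open in \(E'\) (Theorem 2), and \(g \in CD^1\) on \(A\). Let the map \(u = (g_1, \ldots, g_n)\) comprise the first \(n\) components of \(g\) (exactly as \(f\) comprises the first \(n\) components of \(F\)).

Then

\[g(\vec{x}, \vec{y}) = (u(\vec{x}, \vec{y}), \vec{y}),\]

exactly as \(F(\vec{x}, \vec{y}) = (f(\vec{x}, \vec{y}), \vec{y})\). Also, \(u: A \to P\) is of class \(CD^1\) on \(A\), as \(g\) is (explain!).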

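Now set

\[H(\vec{y}) = u(\vec{0}, \vec{y});\]

here \(\vec{y} \in Q\), while

\[(\vec{0}, \vec{y}) \in A = F[P \times Q],\]

for \(F\) preserves \(\vec{y}\) (the last \(m\) coordinates). (If necessary, replace \(Q\) by a smaller open set about \(\vec{q}\): since \(A\) is open and contains \(F(\vec{p}, \vec{q}) = (\vec{0}, \vec{q})\), we have \((\vec{0}, \vec{y}) \in A\) for all \(\vec{y}\) close to \(\vec{q}\).) Also set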

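\[\alpha(\vec{x}, \vec{y}) = \vec{x}.\]

Then \(f = \alpha \circ F\) (why?), and

\[f(H(\vec{y}), \vec{y}) = f(u(\vec{0}, \vec{y}), \vec{y}) = f(g(\vec{0}, \vec{y})) = \alpha(F(g(\vec{0}, \vec{y}))) = \alpha(\vec{0}, \vec{y}) = \vec{0}\]

by our choice of \(\alpha\) and \(g\) (inverse to \(F\)). Thus

\[f(H(\vec{y}), \vec{y}) = \vec{0}, \quad \vec{y} \in Q,\]

as desired.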

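Moreover, as \(H(\vec{y}) = u(\vec{0}, \vec{y})\), we have

\[\frac{\partial}{\partial y_i} H(\vec{y}) = \frac{\partial}{\partial y_i} u(\vec{0}, \vec{y}), \quad \vec{y} \in Q,\ i \le m.\]

As \(u \in CD^1\), all \(\partial u / \partial y_i\) are continuous (Definition 1 in §5); hence so are the \(\partial H / \partial y_i\). Thus by Theorem 3 in §3, \(H \in CD^1\) on \(Q\).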

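Finally, \(H\) is unique for the given \(P\), \(Q\); for, with \((\vec{x}, \vec{y}) \in P \times Q\),

\[\begin{aligned} f(\vec{x}, \vec{y}) = \vec{0} &\implies (f(\vec{x}, \vec{y}), \vec{y}) = (\vec{0}, \vec{y}) \implies F(\vec{x}, \vec{y}) = (\vec{0}, \vec{y}) \\ &\implies g(F(\vec{x}, \vec{y})) = g(\vec{0}, \vec{y}) \implies (\vec{x}, \vec{y}) = g(\vec{0}, \vec{y}) = (u(\vec{0}, \vec{y}), \vec{y}) \\ &\implies \vec{x} = u(\vec{0}, \vec{y}) = H(\vec{y}). \end{aligned}\]

Thus \(f(\vec{x}, \vec{y}) = \vec{0}\) implies \(\vec{x} = H(\vec{y})\); so \(H(\vec{y})\) is the only solution for \(\vec{x}\). ◻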

Note 3. \(H\) is said to be implicitly defined by the equation \(f(\vec{x}, \vec{y}) = \vec{0}\). In this sense we say that \(H(\vec{y})\) is an implicit function, given by \(f(\vec{x}, \vec{y}) = \vec{0}\).

Similarly, under suitable assumptions, \(f(\vec{x}, \vec{y}) = \vec{0}\) defines \(\vec{y}\) as a function of \(\vec{x}\).

Note 4. While \(H\) is unique for a given neighborhood \(P \times Q\) of \((\vec{p}, \vec{q})\), another implicit function may result if \(P \times Q\) or \((\vec{p}, \vec{q})\) is changed.

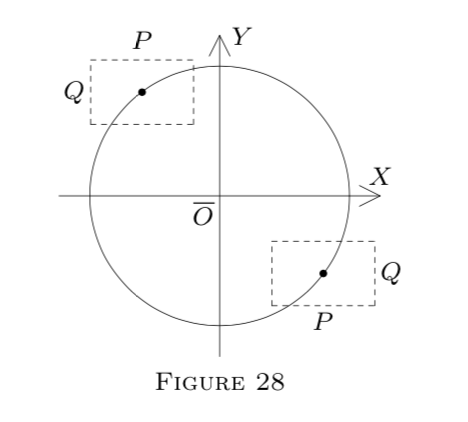

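For example, let

\[f(x, y) = x^2 + y^2 - 25\]

(a polynomial; hence \(f \in CD^1\) on all of \(E^2\)). Geometrically, \(x^2 + y^2 - 25 = 0\) describes a circle.

Solving for \(x\), we get \(x = \pm\sqrt{25 - y^2}\). Thus we have two functions:

\[H_1(y) = +\sqrt{25 - y^2}\]

and

\[H_2(y) = -\sqrt{25 - y^2}.\]

If \(P \times Q\) is a neighborhood of a point of the circle with \(x > 0\), the resulting function is \(H_1\); if \(x < 0\), it is \(H_2\). (At \((0, \pm 5)\), \(D_1 f = 2x = 0\), so Theorem 4 does not apply there.) See Figure 28.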
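A small numerical sketch of Note 4 (our own; Newton's method here is a swapped-in technique, not the book's): solving \(f(x, y) = 0\) for \(x\) at a fixed \(y\), the branch obtained depends on the neighborhood in which the search starts. The helper `implicit_x` is hypothetical; it needs \(D_1 f = 2x\) to stay away from 0, which fails near \((0, \pm 5)\), exactly where Theorem 4 does not apply.

```python
import math

def f(x, y):
    return x * x + y * y - 25

def implicit_x(y, x0, steps=50):
    # Hypothetical helper: solve f(x, y) = 0 for x (y fixed) by Newton's method,
    # started at x0; D_1 f(x, y) = 2x must stay away from 0.
    x = x0
    for _ in range(steps):
        x = x - f(x, y) / (2 * x)
    return x

for y in (-3.0, 0.0, 2.5):
    near_right = implicit_x(y, 4.0)    # start near a point with x > 0
    near_left = implicit_x(y, -4.0)    # start near a point with x < 0
    print(y, near_right, near_left)    # +sqrt(25 - y**2), then -sqrt(25 - y**2)
```

Starting near \(x > 0\) reproduces \(H_1(y)\); starting near \(x < 0\) reproduces \(H_2(y)\).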

V. Implicit Differentiation. Theorem 4 only states the existence (and uniqueness) of a solution; in general, it does not show how to find it.

The mere knowledge that \(H\) exists and is of class \(CD^1\), however, enables us to compute its derivative or partials by implicit differentiation, familiar from calculus.

(a) Let \(f(x, y) = x^2 + y^2 - 25 = 0\), as above.

This time, treating \(y\) as an implicit function of \(x\), \(y = H(x)\), and writing \(y'\) for \(H'(x)\), we differentiate both sides of \(x^2 + y^2 - 25 = 0\) with respect to \(x\), using the chain rule for the term \(y^2 = [H(x)]^2\).

This yields \(2x + 2yy' = 0\), whence \(y' = -x/y\) (for \(y \ne 0\)).
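A quick check of \(y' = -x/y\) (a sketch; the step size \(h = 10^{-6}\) and the helper names are our own): compare a central-difference derivative of each branch \(y = \pm\sqrt{25 - x^2}\) with \(-x/y\) at \(x = 3\), where \(y = \pm 4\).

```python
import math

def H1(x):
    # branch y = +sqrt(25 - x**2) of x**2 + y**2 - 25 = 0
    return math.sqrt(25 - x * x)

def H2(x):
    # branch y = -sqrt(25 - x**2)
    return -math.sqrt(25 - x * x)

def central_diff(func, x, h=1e-6):
    # symmetric difference quotient approximating func'(x)
    return (func(x + h) - func(x - h)) / (2 * h)

x = 3.0
for H in (H1, H2):
    y = H(x)
    print(y, central_diff(H, x), -x / y)   # numerical y' agrees with -x/y
```

On both branches the numerical derivative matches \(-x/y\) (namely \(-0.75\) at \((3, 4)\) and \(0.75\) at \((3, -4)\)), as the implicit computation predicts.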

Actually (see Note 4), two functions are involved: \(y = \pm\sqrt{25 - x^2}\); but both satisfy \(x^2 + y^2 - 25 = 0\), so the result \(y' = -x/y\) applies to both.

Of course, this method is possible only if the derivative y′ is known to exist. This is why Theorem 4 is important.

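(b) Let

\[f(x, y, z) = x^2 + y^2 + z^2 - 1 = 0, \quad x, y, z \in E^1.\]

Again \(f\) satisfies Theorem 4 for suitable \(x\), \(y\), and \(z\) (namely, near any point of the sphere with \(z \ne 0\), since \(D_3 f = 2z\)).

Setting \(z = H(x, y)\), differentiate the equation \(f(x, y, z) = 0\) partially with respect to \(x\) and \(y\). From the resulting two equations, obtain \(\partial z / \partial x\) and \(\partial z / \partial y\).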
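Carrying this out (a worked sketch, valid where \(z \ne 0\)): differentiating \(x^2 + y^2 + z^2 - 1 = 0\) with \(z = H(x, y)\) gives

\[2x + 2z\,\frac{\partial z}{\partial x} = 0, \qquad 2y + 2z\,\frac{\partial z}{\partial y} = 0,\]

whence

\[\frac{\partial z}{\partial x} = -\frac{x}{z}, \qquad \frac{\partial z}{\partial y} = -\frac{y}{z} \qquad (z \ne 0).\]

For instance, on the upper hemisphere \(z = \sqrt{1 - x^2 - y^2}\), direct differentiation gives \(\partial z / \partial x = -x / \sqrt{1 - x^2 - y^2} = -x/z\), in agreement.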