1.1: Integrals as solutions


A first order ODE is an equation of the form

$$\frac{dy}{dx} = f(x,y),$$

or just

$$y' = f(x,y).$$

In general, there is no simple formula or procedure one can follow to find solutions. In the next few lectures we will look at special cases where solutions are not difficult to obtain. In this section, let us assume that $f$ is a function of $x$ alone, that is, the equation is

$$y' = f(x).$$

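We could just integrate (antidifferentiate) both sides with respect to $x$:

$$\int y'(x)\,dx = \int f(x)\,dx + C,$$

that is

$$y(x) = \int f(x)\,dx + C.$$

This $y(x)$ is actually the general solution. So to solve $y' = f(x)$, we find some antiderivative of $f(x)$ and then add an arbitrary constant to get the general solution.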

Now is a good time to discuss a point about calculus notation and terminology. Calculus textbooks muddy the waters by talking about the integral as primarily the so-called indefinite integral. The indefinite integral is really the antiderivative (in fact the whole one-parameter family of antiderivatives). There really exists only one integral and that is the definite integral. The only reason for the indefinite integral notation is that we can always write an antiderivative as a (definite) integral. That is, by the fundamental theorem of calculus we can always write $\int f(x)\,dx + C$ as

$$\int_{x_0}^{x} f(t)\,dt + C.$$

Hence the terminology "to integrate" when we may really mean "to antidifferentiate". Integration is just one way to compute the antiderivative (and it is a way that always works, see the following examples). Integration is defined as the area under the graph; it only happens to also compute antiderivatives. For the sake of consistency, we will keep using the indefinite integral notation when we want an antiderivative, but you should always think of the definite integral.

Find the general solution of $y' = 3x^2$.

Solution

Elementary calculus tells us that the general solution must be

$$y = x^3 + C.$$

Let us check by differentiating: $y' = 3x^2$. We get precisely our equation back.

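Normally, we also have an initial condition such as $y(x_0) = y_0$ for some two numbers $x_0$ and $y_0$ ($x_0$ is usually 0, but not always). We can then write the solution as a definite integral in a nice way. Suppose our problem is

$$y' = f(x), \qquad y(x_0) = y_0.$$

Then the solution is

$$y(x) = \int_{x_0}^{x} f(s)\,ds + y_0.$$

Let us check! We compute $y' = f(x)$ via the fundamental theorem of calculus, so $y$ is a solution. Does it also satisfy the initial condition? Well,

$$y(x_0) = \int_{x_0}^{x_0} f(s)\,ds + y_0 = y_0.$$

It does!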

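Do note that the definite integral and the indefinite integral (antidifferentiation) are completely different beasts. The definite integral always evaluates to a number. Therefore, the formula $y(x) = \int_{x_0}^{x} f(s)\,ds + y_0$ is something we can plug into a calculator or a computer, and it will be happy to calculate specific values for us. We can plot the solution and work with it just like with any other function. It is not crucial to always find a closed form for the antiderivative.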
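To illustrate the point that the definite integral is directly computable, here is a minimal Python sketch that evaluates $y(x) = y_0 + \int_{x_0}^{x} f(s)\,ds$ numerically. The function names `simpson` and `solve_by_integration` are our own, and composite Simpson's rule is just one convenient choice of quadrature; any other numerical integration rule would serve the same purpose.

```python
def simpson(f, a, b, n=1000):
    """Approximate the definite integral of f from a to b (composite Simpson's rule).

    n is the number of subintervals and must be even. If b < a the result
    has the opposite sign, matching the usual convention for integrals.
    """
    if n % 2:
        raise ValueError("n must be even")
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        # Interior nodes get weights 4, 2, 4, 2, ..., 4.
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3


def solve_by_integration(f, x0, y0):
    """Return y(x) = y0 + integral of f(s) ds from x0 to x as a Python function.

    This solves y' = f(x), y(x0) = y0 without needing a closed-form
    antiderivative of f: every call just evaluates a definite integral.
    """
    def y(x):
        return y0 + simpson(f, x0, x)
    return y


# Usage: y' = 3x^2 with y(0) = 0, whose solution is y = x^3.
y = solve_by_integration(lambda s: 3 * s**2, 0.0, 0.0)
for x in (0.5, 1.0, 2.0):
    print(x, y(x), x**3)
```

Simpson's rule is exact for polynomials of degree at most three, so in this usage example the computed values match $x^3$ up to floating-point rounding.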

Solve

$$y' = e^{-x^2}, \qquad y(0) = 1.$$

Solution

By the preceding discussion, the solution must be

$$y(x) = \int_{0}^{x} e^{-s^2}\,ds + 1.$$

Here is a good way to make fun of your friends taking second semester calculus. Tell them to find the closed form solution. Ha ha ha (bad math joke). It is not possible (in closed form). There is absolutely nothing wrong with writing the solution as a definite integral. This particular integral is in fact very important in statistics.
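The integral $\int_0^x e^{-s^2}\,ds$ has a standard name: the error function is defined by $\operatorname{erf}(x) = \frac{2}{\sqrt{\pi}} \int_0^x e^{-s^2}\,ds$, so the solution can also be written $y(x) = 1 + \frac{\sqrt{\pi}}{2}\operatorname{erf}(x)$. This only names the integral; $\operatorname{erf}$ is not an elementary function. The Python sketch below (helper names are our own) compares direct numerical quadrature with Python's built-in `math.erf`.

```python
import math


def simpson(f, a, b, n=1000):
    """Composite Simpson's rule for the integral of f over [a, b]; n must be even."""
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3


def y_quadrature(x):
    """y(x) = 1 + integral of exp(-s^2) ds from 0 to x, computed numerically."""
    return 1.0 + simpson(lambda s: math.exp(-s * s), 0.0, x)


def y_erf(x):
    """The same solution written with the error function erf."""
    return 1.0 + math.sqrt(math.pi) / 2 * math.erf(x)


for x in (0.0, 0.5, 1.0, 2.0, 4.0):
    print(f"x = {x:3.1f}   quadrature: {y_quadrature(x):.12f}   erf: {y_erf(x):.12f}")
```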

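Using this method, we can also solve equations of the form

$$y' = f(y).$$

Let us write the equation in Leibniz notation:

$$\frac{dy}{dx} = f(y).$$

Now we use the inverse function theorem from calculus to switch the roles of $x$ and $y$ to obtain

$$\frac{dx}{dy} = \frac{1}{f(y)}.$$

What we are doing seems like algebra with $dx$ and $dy$. It is tempting to manipulate $dx$ and $dy$ as if they were numbers, and in this case it does work. Be careful, however, as this sort of hand-waving calculation can lead to trouble, especially when more than one independent variable is involved. At this point, we can simply integrate,

$$x(y) = \int \frac{1}{f(y)}\,dy + C.$$

Finally, we try to solve for $y$.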
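When neither the integral nor the final inversion can be done by hand, both steps can be carried out numerically. The Python sketch below assumes $f(y) \neq 0$ between the initial value and the target (so $1/f$ stays finite); it computes $x(y) = x_0 + \int_{y_0}^{y} \frac{ds}{f(s)}$ by quadrature and then solves $x(y) = x$ for $y$ by bisection. The helper names are hypothetical, and bisection is our choice of root finder, not part of the method itself.

```python
import math


def simpson(g, a, b, n=1000):
    """Composite Simpson's rule for the integral of g over [a, b]; n must be even."""
    h = (b - a) / n
    total = g(a) + g(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * g(a + i * h)
    return total * h / 3


def x_of_y(f, x0, y0):
    """x(y) = x0 + integral of 1/f(s) ds from y0 to y, i.e. dx/dy = 1/f(y), x(y0) = x0.

    Assumes f has no zero between y0 and y, so 1/f stays finite.
    """
    return lambda y: x0 + simpson(lambda s: 1.0 / f(s), y0, y)


def solve_for_y(x_func, x_target, lo, hi, iterations=100):
    """Find y in [lo, hi] with x_func(y) = x_target by bisection.

    Assumes x_func is continuous and that x_func(lo) - x_target and
    x_func(hi) - x_target have opposite signs.
    """
    g_lo = x_func(lo) - x_target
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        g_mid = x_func(mid) - x_target
        if (g_mid < 0) == (g_lo < 0):
            lo, g_lo = mid, g_mid  # sign change lies in [mid, hi]
        else:
            hi = mid  # sign change lies in [lo, mid]
    return 0.5 * (lo + hi)


# Usage: y' = y with y(0) = 1, so x(y) = ln y and the solution is y = e^x.
x_func = x_of_y(lambda y: y, 0.0, 1.0)
y_at_1 = solve_for_y(x_func, 1.0, 1.0, 10.0)
print(y_at_1, math.e)
```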

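Previously, we guessed that $y' = ky$ (for some $k > 0$) has the solution $y = Ce^{kx}$. We can now find the solution without guessing. First we note that $y = 0$ is a solution. Henceforth, we assume $y \neq 0$. We write

$$\frac{dx}{dy} = \frac{1}{ky}.$$

We integrate to obtain

$$x(y) = x = \frac{1}{k} \ln \lvert y \rvert + D,$$

where $D$ is an arbitrary constant. Now we solve for $y$ (actually for $\lvert y \rvert$):

$$\lvert y \rvert = e^{kx - kD} = e^{-kD} e^{kx}.$$

If we replace $e^{-kD}$ with an arbitrary constant $C$, we can get rid of the absolute value bars (which we can do as $D$ was arbitrary). In this way, we also incorporate the solution $y = 0$. We get the same general solution as we guessed before, $y = Ce^{kx}$.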
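As a sanity check, the Python sketch below verifies numerically that $y = Ce^{kx}$ satisfies $y' = ky$ for a negative, a zero and a positive $C$ (the value $k = 0.5$ and the sample points are arbitrary choices of ours). It also confirms, for $C > 0$, that the implicit form $x = \frac{1}{k}\ln\lvert y\rvert + D$ holds with $C = e^{-kD}$, i.e. $D = -\ln(C)/k$. The derivative is approximated by a central difference, so the residuals are small but not exactly zero.

```python
import math


def derivative(func, x, h=1e-5):
    """Central-difference approximation of func'(x)."""
    return (func(x + h) - func(x - h)) / (2 * h)


def candidate(C, k):
    """The claimed general solution y = C e^{kx} for a given constant C."""
    return lambda x: C * math.exp(k * x)


k = 0.5
for C in (-3.0, 0.0, 2.0):
    y = candidate(C, k)
    for x in (-1.0, 0.0, 1.5):
        residual = derivative(y, x) - k * y(x)  # close to 0 when y' = ky
        print(f"C = {C:5.1f}, x = {x:5.1f}, y' - ky = {residual:.2e}")

# For C > 0, the implicit form x = (1/k) ln|y| + D holds with C = e^{-kD},
# that is D = -ln(C)/k. Evaluating the right-hand side recovers x.
C = 2.0
D = -math.log(C) / k
for x in (-1.0, 0.0, 1.5):
    print(x, (1 / k) * math.log(abs(C * math.exp(k * x))) + D)
```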

Find the general solution of $y' = y^2$.

Solution

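First we note that $y = 0$ is a solution. We can now assume that $y \neq 0$. Write

$$\frac{dx}{dy} = \frac{1}{y^2}.$$

We integrate to get

$$x = \frac{-1}{y} + C.$$

We solve for $y = \frac{1}{C - x}$. So the general solution is

$$y = \frac{1}{C - x} \qquad \text{or} \qquad y = 0.$$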

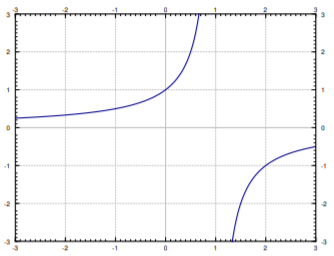

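Note the singularities of the solution. If for example $C = 1$, then the solution "blows up" as we approach $x = 1$. Generally, it is hard to tell from just looking at the equation itself how the solution is going to behave. The equation $y' = y^2$ is very nice and defined everywhere, but the solution is only defined on some interval $(-\infty, C)$ or $(C, \infty)$.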
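A quick numerical look at the case $C = 1$ makes the blow-up concrete. In this Python sketch (helper names are our own), the central-difference derivative of $y = \frac{1}{1 - x}$ agrees with $y^2$ up to finite-difference error, while $y$ itself grows without bound as $x$ approaches $1$ from the left.

```python
def derivative(func, x, h=1e-6):
    """Central-difference approximation of func'(x)."""
    return (func(x + h) - func(x - h)) / (2 * h)


def solution(C):
    """The solution y = 1/(C - x) of y' = y^2; it is undefined at x = C."""
    return lambda x: 1.0 / (C - x)


y = solution(1.0)
for x in (0.0, 0.5, 0.9, 0.99, 0.999):
    # y' and y^2 agree (up to finite-difference error) while y keeps growing.
    print(f"x = {x:<6} y = {y(x):10.3f}   y' = {derivative(y, x):14.3f}   y^2 = {y(x)**2:14.3f}")
```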

Classical problems leading to differential equations solvable by integration are problems dealing with velocity, acceleration and distance. You have surely seen these problems before in your calculus class.

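Suppose a car drives at a speed $e^{t/2}$ meters per second, where $t$ is time in seconds. How far did the car get in 2 seconds (starting at $t = 0$)? How far in 10 seconds?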

Solution

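Let $x$ denote the distance the car traveled. The equation is

$$x' = e^{t/2}.$$

We can just integrate this equation to get that

$$x(t) = 2e^{t/2} + C.$$

We still need to figure out $C$. We know that when $t = 0$, then $x = 0$. That is, $x(0) = 0$. So

$$0 = x(0) = 2e^{0/2} + C = 2 + C.$$

Thus $C = -2$ and

$$x(t) = 2e^{t/2} - 2.$$

Now we just plug in to get where the car is at 2 and at 10 seconds. We obtain

$$x(2) = 2e^{2/2} - 2 \approx 3.44 \text{ meters}, \qquad x(10) = 2e^{10/2} - 2 \approx 294.8 \text{ meters}.$$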
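The arithmetic can be double-checked in Python. This sketch (function names are our own) evaluates the closed form $x(t) = 2e^{t/2} - 2$ and, independently, the definite integral $\int_0^t e^{s/2}\,ds$ with Simpson's rule; the two agree to the printed precision.

```python
import math


def position(t):
    """Distance traveled x(t) = 2 e^{t/2} - 2 (meters) for speed e^{t/2} m/s, x(0) = 0."""
    return 2 * math.exp(t / 2) - 2


def position_numeric(t, n=1000):
    """Same distance as the definite integral of e^{s/2} from 0 to t (Simpson's rule, n even)."""
    h = t / n
    total = 1.0 + math.exp(t / 2)  # integrand at s = 0 and s = t
    for i in range(1, n):
        total += (4 if i % 2 else 2) * math.exp(i * h / 2)
    return total * h / 3


for t in (2, 10):
    print(f"t = {t:2d} s: closed form {position(t):.2f} m, quadrature {position_numeric(t):.2f} m")
# prints:
# t =  2 s: closed form 3.44 m, quadrature 3.44 m
# t = 10 s: closed form 294.83 m, quadrature 294.83 m
```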

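Suppose that the car accelerates at a rate of $t^2$ m/s$^2$. At time $t = 0$ the car is at the 1 meter mark and is traveling at 10 m/s. Where is the car at time $t = 10$?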

Solution

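Well, this is actually a second order problem. If $x$ is the distance traveled, then $x'$ is the velocity, and $x''$ is the acceleration. The equation with initial conditions is

$$x'' = t^2, \qquad x(0) = 1, \qquad x'(0) = 10.$$

What if we say $x' = v$? Then we have the problem

$$v' = t^2, \qquad v(0) = 10.$$

Once we solve for $v$, we can integrate and find $x$.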
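The reduction can be sketched numerically: each step is a first order problem of the form $y' = f(t)$, solved by $y(t) = y(0) + \int_0^t f(s)\,ds$. In this Python sketch (names are hypothetical, and Simpson's rule is our choice of quadrature) we first integrate the acceleration to get $v$, then integrate $v$ to get $x$. Since Simpson's rule is exact for polynomials of degree at most three, and here $v$ turns out to be a cubic, the printed value of $x(10)$ is exact up to rounding and can be compared with the closed form obtained by integrating by hand.

```python
def simpson(f, a, b, n=200):
    """Composite Simpson's rule for the integral of f over [a, b]; n must be even."""
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3


def acceleration(t):
    """Given acceleration x''(t) = t^2, in m/s^2."""
    return t**2


def velocity(t):
    """v(t) = v(0) + integral of the acceleration from 0 to t, with v(0) = 10 m/s."""
    return 10.0 + simpson(acceleration, 0.0, t)


def position(t):
    """x(t) = x(0) + integral of the velocity from 0 to t, with x(0) = 1 m."""
    return 1.0 + simpson(velocity, 0.0, t)


print(position(10.0))
```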