5: Linear Algebra


Consider the system of n linear equations in n unknowns, given by

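\[
\begin{aligned}
a_{11}x_1 + a_{12}x_2 + \cdots + a_{1n}x_n &= b_1, \\
a_{21}x_1 + a_{22}x_2 + \cdots + a_{2n}x_n &= b_2, \\
\vdots \qquad\qquad & \qquad \vdots \\
a_{n1}x_1 + a_{n2}x_2 + \cdots + a_{nn}x_n &= b_n.
\end{aligned}
\]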

We can write this system as the matrix equation

Ax=b,

with

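\[
A = \begin{pmatrix}
a_{11} & a_{12} & \cdots & a_{1n} \\
a_{21} & a_{22} & \cdots & a_{2n} \\
\vdots & \vdots & \ddots & \vdots \\
a_{n1} & a_{n2} & \cdots & a_{nn}
\end{pmatrix}, \quad
x = \begin{pmatrix} x_1 \\ x_2 \\ \vdots \\ x_n \end{pmatrix}, \quad
b = \begin{pmatrix} b_1 \\ b_2 \\ \vdots \\ b_n \end{pmatrix}.
\]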

This chapter details the numerical solution of (5.1).

Gaussian Elimination

The standard numerical algorithm to solve a system of linear equations is called Gaussian Elimination. We can illustrate this algorithm by example.

Consider the system of equations

\[
\begin{aligned}
-3x_1 + 2x_2 - x_3 &= -1, \\
6x_1 - 6x_2 + 7x_3 &= -7, \\
3x_1 - 4x_2 + 4x_3 &= -6.
\end{aligned}
\]

To perform Gaussian elimination, we form an augmented matrix by combining the matrix A with the column vector b:

\[
\begin{pmatrix}
-3 & 2 & -1 & -1 \\
6 & -6 & 7 & -7 \\
3 & -4 & 4 & -6
\end{pmatrix}.
\]

Row reduction is then performed on this matrix. Allowed operations are (1) multiply any row by a nonzero constant, (2) add a multiple of one row to another row, (3) interchange the order of any rows. The goal is to convert the original matrix into an upper-triangular matrix. We start with the first row of the matrix and work our way down as follows. First we multiply the first row by 2 and add it to the second row, and add the first row to the third row:

\[
\begin{pmatrix}
-3 & 2 & -1 & -1 \\
0 & -2 & 5 & -9 \\
0 & -2 & 3 & -7
\end{pmatrix}.
\]

We then go to the second row. We multiply this row by −1 and add it to the third row:

\[
\begin{pmatrix}
-3 & 2 & -1 & -1 \\
0 & -2 & 5 & -9 \\
0 & 0 & -2 & 2
\end{pmatrix}.
\]

The resulting equations can be determined from the matrix and are given by

\[
\begin{aligned}
-3x_1 + 2x_2 - x_3 &= -1, \\
-2x_2 + 5x_3 &= -9, \\
-2x_3 &= 2.
\end{aligned}
\]

These equations can be solved by backward substitution, starting from the last equation and working backwards. We have

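\[
\begin{aligned}
-2x_3 &= 2 &&\rightarrow\; x_3 = -1, \\
-2x_2 &= -9 - 5x_3 = -4 &&\rightarrow\; x_2 = 2, \\
-3x_1 &= -1 - 2x_2 + x_3 = -6 &&\rightarrow\; x_1 = 2.
\end{aligned}
\]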

Therefore,

\[
\begin{pmatrix} x_1 \\ x_2 \\ x_3 \end{pmatrix} = \begin{pmatrix} 2 \\ 2 \\ -1 \end{pmatrix}.
\]
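
The hand calculation above can be sketched in MATLAB as follows. This is a minimal illustration rather than a robust solver: it assumes every pivot is nonzero, which holds for this example, and the variable names `Ab` and `m` are chosen here for illustration.

```matlab
% Gaussian elimination without pivoting, then backward substitution.
% Assumption: no zero pivot is encountered (true for this example).
A = [-3 2 -1; 6 -6 7; 3 -4 4];
b = [-1; -7; -6];
n = size(A, 1);
Ab = [A b];                          % augmented matrix

for k = 1:n-1                        % column containing the pivot Ab(k,k)
    for i = k+1:n                    % rows below the pivot
        m = -Ab(i, k) / Ab(k, k);    % multiplier, e.g. 2 = -(6/-3)
        Ab(i, :) = Ab(i, :) + m * Ab(k, :);
    end
end

x = zeros(n, 1);
for i = n:-1:1                       % backward substitution, last row first
    x(i) = (Ab(i, n+1) - Ab(i, i+1:n) * x(i+1:n)) / Ab(i, i);
end
disp(x')                             % hand calculation gives x = (2, 2, -1)
```

After the elimination loop, `Ab` holds the upper-triangular augmented matrix obtained by hand.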

LU decomposition

The process of Gaussian elimination also results in the factoring of the matrix A into

A=LU,

where L is a lower triangular matrix and U is an upper triangular matrix. Using the same matrix A as in the last section, we show how this factorization is realized. We have

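\[
\begin{pmatrix} -3 & 2 & -1 \\ 6 & -6 & 7 \\ 3 & -4 & 4 \end{pmatrix}
\rightarrow
\begin{pmatrix} -3 & 2 & -1 \\ 0 & -2 & 5 \\ 0 & -2 & 3 \end{pmatrix}
= M_1 A,
\]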

where

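\[
M_1 A =
\begin{pmatrix} 1 & 0 & 0 \\ 2 & 1 & 0 \\ 1 & 0 & 1 \end{pmatrix}
\begin{pmatrix} -3 & 2 & -1 \\ 6 & -6 & 7 \\ 3 & -4 & 4 \end{pmatrix}
=
\begin{pmatrix} -3 & 2 & -1 \\ 0 & -2 & 5 \\ 0 & -2 & 3 \end{pmatrix}.
\]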

Note that the matrix \(M_1\) performs row elimination on the first column. Two times the first row is added to the second row and one times the first row is added to the third row. The entries below the diagonal in the first column of \(M_1\) come from 2=−(6/−3) and 1=−(3/−3), as required for row elimination. The number −3 is called the pivot.

The next step is

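\[
\begin{pmatrix} -3 & 2 & -1 \\ 0 & -2 & 5 \\ 0 & -2 & 3 \end{pmatrix}
\rightarrow
\begin{pmatrix} -3 & 2 & -1 \\ 0 & -2 & 5 \\ 0 & 0 & -2 \end{pmatrix}
= M_2 (M_1 A),
\]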

where

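\[
M_2 (M_1 A) =
\begin{pmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & -1 & 1 \end{pmatrix}
\begin{pmatrix} -3 & 2 & -1 \\ 0 & -2 & 5 \\ 0 & -2 & 3 \end{pmatrix}
=
\begin{pmatrix} -3 & 2 & -1 \\ 0 & -2 & 5 \\ 0 & 0 & -2 \end{pmatrix}.
\]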

Here, \(M_2\) multiplies the second row by −1=−(−2/−2) and adds it to the third row. The pivot is −2.

We now have

\[
M_2 M_1 A = U
\]

or

\[
A = M_1^{-1} M_2^{-1} U.
\]

The inverse matrices are easy to find. The matrix \(M_1\) multiplies the first row by 2 and adds it to the second row, and multiplies the first row by 1 and adds it to the third row. To invert these operations, we need to multiply the first row by −2 and add it to the second row, and multiply the first row by −1 and add it to the third row. To check, with

\[
M_1 M_1^{-1} = I,
\]

we have

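\[
\begin{pmatrix} 1 & 0 & 0 \\ 2 & 1 & 0 \\ 1 & 0 & 1 \end{pmatrix}
\begin{pmatrix} 1 & 0 & 0 \\ -2 & 1 & 0 \\ -1 & 0 & 1 \end{pmatrix}
=
\begin{pmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{pmatrix}.
\]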

Similarly,

\[
M_2^{-1} = \begin{pmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 1 & 1 \end{pmatrix}.
\]

Therefore,

\[
L = M_1^{-1} M_2^{-1}
\]

is given by

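\[
L =
\begin{pmatrix} 1 & 0 & 0 \\ -2 & 1 & 0 \\ -1 & 0 & 1 \end{pmatrix}
\begin{pmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 1 & 1 \end{pmatrix}
=
\begin{pmatrix} 1 & 0 & 0 \\ -2 & 1 & 0 \\ -1 & 1 & 1 \end{pmatrix},
\]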

which is lower triangular. The off-diagonal elements of \(M_1^{-1}\) and \(M_2^{-1}\) are simply combined to form L. Our LU decomposition is therefore

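\[
\begin{pmatrix} -3 & 2 & -1 \\ 6 & -6 & 7 \\ 3 & -4 & 4 \end{pmatrix}
=
\begin{pmatrix} 1 & 0 & 0 \\ -2 & 1 & 0 \\ -1 & 1 & 1 \end{pmatrix}
\begin{pmatrix} -3 & 2 & -1 \\ 0 & -2 & 5 \\ 0 & 0 & -2 \end{pmatrix}.
\]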

Another nice feature of the LU decomposition is that it can be done by overwriting A, therefore saving memory if the matrix A is very large.
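
As a rough sketch of the overwriting idea, the loop below stores the multipliers of L in the strict lower triangle of a working copy of A and leaves U in the upper triangle. It assumes no pivoting is needed (all pivots nonzero). The name `LUmat` is hypothetical, and the copy is made only so that the result can be checked against A.

```matlab
% LU decomposition without pivoting, overwriting the working array.
% Assumption: all pivots are nonzero, so no row interchanges are needed.
A = [-3 2 -1; 6 -6 7; 3 -4 4];
n = size(A, 1);
LUmat = A;                           % hypothetical name for the working copy

for k = 1:n-1
    for i = k+1:n
        % Entry (i,k) of L overwrites the entry that elimination would zero
        LUmat(i, k) = LUmat(i, k) / LUmat(k, k);
        LUmat(i, k+1:n) = LUmat(i, k+1:n) - LUmat(i, k) * LUmat(k, k+1:n);
    end
end

L = eye(n) + tril(LUmat, -1);        % unit lower triangular factor
U = triu(LUmat);                     % upper triangular factor
disp(L); disp(U);
disp(norm(L*U - A))                  % should vanish for this example
```

Because L has ones on its diagonal, those ones need not be stored, which is why both factors fit in the space of A.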

The LU decomposition is useful when one needs to solve Ax=b for x when A is fixed and there are many different b's. First one determines L and U using Gaussian elimination. Then one writes

(LU)x=L(Ux)=b.

We let

y=Ux,

and first solve

Ly =b

for y by forward substitution. We then solve

Ux=y

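for x by backward substitution. If we count operations, we can show that solving (LU)x=b once L and U are in hand is substantially faster than solving Ax=b directly by Gaussian elimination: for large n, forward and backward substitution each require a number of operations that scales as \(n^2\), whereas Gaussian elimination scales as \(n^3\).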

We now illustrate the solution of LUx=b using our previous example, where

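\[
L = \begin{pmatrix} 1 & 0 & 0 \\ -2 & 1 & 0 \\ -1 & 1 & 1 \end{pmatrix}, \quad
U = \begin{pmatrix} -3 & 2 & -1 \\ 0 & -2 & 5 \\ 0 & 0 & -2 \end{pmatrix}, \quad
b = \begin{pmatrix} -1 \\ -7 \\ -6 \end{pmatrix}.
\]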

With y=Ux, we first solve Ly=b, that is

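\[
\begin{pmatrix} 1 & 0 & 0 \\ -2 & 1 & 0 \\ -1 & 1 & 1 \end{pmatrix}
\begin{pmatrix} y_1 \\ y_2 \\ y_3 \end{pmatrix}
=
\begin{pmatrix} -1 \\ -7 \\ -6 \end{pmatrix}.
\]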

Using forward substitution

\[
\begin{aligned}
y_1 &= -1, \\
y_2 &= -7 + 2y_1 = -9, \\
y_3 &= -6 + y_1 - y_2 = 2.
\end{aligned}
\]

We now solve Ux=y, that is

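\[
\begin{pmatrix} -3 & 2 & -1 \\ 0 & -2 & 5 \\ 0 & 0 & -2 \end{pmatrix}
\begin{pmatrix} x_1 \\ x_2 \\ x_3 \end{pmatrix}
=
\begin{pmatrix} -1 \\ -9 \\ 2 \end{pmatrix}.
\]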

Using backward substitution,

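\[
\begin{aligned}
-2x_3 &= 2 &&\rightarrow\; x_3 = -1, \\
-2x_2 &= -9 - 5x_3 = -4 &&\rightarrow\; x_2 = 2, \\
-3x_1 &= -1 - 2x_2 + x_3 = -6 &&\rightarrow\; x_1 = 2,
\end{aligned}
\]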

and we have once again determined

\[
\begin{pmatrix} x_1 \\ x_2 \\ x_3 \end{pmatrix} = \begin{pmatrix} 2 \\ 2 \\ -1 \end{pmatrix}.
\]
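
The two substitution steps can be written out explicitly. The following is a minimal sketch using the L, U, and b of this example, and it assumes the diagonal entries of both factors are nonzero.

```matlab
% Solve L*y = b by forward substitution, then U*x = y by backward substitution.
L = [1 0 0; -2 1 0; -1 1 1];
U = [-3 2 -1; 0 -2 5; 0 0 -2];
b = [-1; -7; -6];
n = length(b);

y = zeros(n, 1);
for i = 1:n                          % first row to last
    y(i) = (b(i) - L(i, 1:i-1) * y(1:i-1)) / L(i, i);
end

x = zeros(n, 1);
for i = n:-1:1                       % last row to first
    x(i) = (y(i) - U(i, i+1:n) * x(i+1:n)) / U(i, i);
end

disp(y')                             % hand calculation gives y = (-1, -9, 2)
disp(x')                             % hand calculation gives x = (2, 2, -1)
```

Each loop touches only the triangular part of its matrix, so the work per right-hand side grows like the square of n rather than the cube.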

Partial pivoting

When performing Gaussian elimination, the diagonal element that one uses during the elimination procedure is called the pivot. To obtain the correct multiple, one uses the pivot as the divisor to the elements below the pivot. Gaussian elimination in this form will fail if the pivot is zero. In this situation, a row interchange must be performed.

Even if the pivot is not identically zero, a small value can result in big round-off errors. For very large matrices, one can easily lose all accuracy in the solution. To avoid these round-off errors arising from small pivots, row interchanges are made, and this technique is called partial pivoting (partial pivoting is in contrast to complete pivoting, where both rows and columns are interchanged). We will illustrate by example the LU decomposition using partial pivoting.

Consider

\[
A = \begin{pmatrix} -2 & 2 & -1 \\ 6 & -6 & 7 \\ 3 & -8 & 4 \end{pmatrix}.
\]

We interchange rows to place the largest element (in absolute value) in the pivot, or \(a_{11}\), position. That is,

\[
A \rightarrow \begin{pmatrix} 6 & -6 & 7 \\ -2 & 2 & -1 \\ 3 & -8 & 4 \end{pmatrix} = P_{12} A,
\]

where

\[
P_{12} = \begin{pmatrix} 0 & 1 & 0 \\ 1 & 0 & 0 \\ 0 & 0 & 1 \end{pmatrix}
\]

is a permutation matrix that, when multiplied on the left, interchanges the first and second rows of a matrix. Note that \(P_{12}^{-1} = P_{12}\). The elimination step is then

\[
P_{12} A \rightarrow \begin{pmatrix} 6 & -6 & 7 \\ 0 & 0 & 4/3 \\ 0 & -5 & 1/2 \end{pmatrix} = M_1 P_{12} A,
\]

where

\[
M_1 = \begin{pmatrix} 1 & 0 & 0 \\ 1/3 & 1 & 0 \\ -1/2 & 0 & 1 \end{pmatrix}.
\]

The final step requires one more row interchange:

\[
M_1 P_{12} A \rightarrow \begin{pmatrix} 6 & -6 & 7 \\ 0 & -5 & 1/2 \\ 0 & 0 & 4/3 \end{pmatrix} = P_{23} M_1 P_{12} A = U.
\]

Since the row-interchange matrices \(P_{12}\) and \(P_{23}\) are their own inverses, we can write our result as

\[
(P_{23} M_1 P_{23}) P_{23} P_{12} A = U.
\]

Multiplication of M on the left by P interchanges rows while multiplication on the right by P interchanges columns. That is,

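\[
P_{23} \begin{pmatrix} 1 & 0 & 0 \\ 1/3 & 1 & 0 \\ -1/2 & 0 & 1 \end{pmatrix} P_{23}
=
\begin{pmatrix} 1 & 0 & 0 \\ -1/2 & 0 & 1 \\ 1/3 & 1 & 0 \end{pmatrix} P_{23}
=
\begin{pmatrix} 1 & 0 & 0 \\ -1/2 & 1 & 0 \\ 1/3 & 0 & 1 \end{pmatrix}.
\]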

The net result on \(M_1\) is an interchange of the nondiagonal elements 1/3 and −1/2.
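
The effect of the two multiplications by the row-interchange matrix can be checked numerically. This is only a quick sanity check, with the matrices entered by hand from the example.

```matlab
% Left-multiplying by P23 swaps rows 2 and 3; right-multiplying swaps columns 2 and 3.
P23 = [1 0 0; 0 0 1; 0 1 0];
M1  = [1 0 0; 1/3 1 0; -1/2 0 1];

rowsSwapped = P23 * M1;              % rows 2 and 3 of M1 interchanged
bothSwapped = rowsSwapped * P23;     % then columns 2 and 3 interchanged
disp(rowsSwapped)
disp(bothSwapped)                    % 1/3 and -1/2 have traded places
disp(P23 * P23)                      % identity: P23 is its own inverse
```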

We can then multiply by the inverse of \((P_{23} M_1 P_{23})\) to obtain

\[
P_{23} P_{12} A = (P_{23} M_1 P_{23})^{-1} U,
\]

which we write as

\[
PA = LU,
\]

where \(P = P_{23} P_{12}\) and \(L = (P_{23} M_1 P_{23})^{-1}\).

Instead of L, MATLAB will write this as

\[
A = (P^{-1} L) U.
\]

For convenience, we will just denote \((P^{-1} L)\) by L, but by L here we mean a permuted lower triangular matrix.
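
The procedure above can be sketched as a short loop. This is an illustrative implementation under simple assumptions (a square, nonsingular matrix; the names `r`, `L2`, `U2`, `P2` are chosen here), not a description of MATLAB's internal algorithm. The last lines compare against the built-in `lu` called with three outputs, which returns factors satisfying P2*A = L2*U2.

```matlab
% LU decomposition with partial pivoting, producing P*A = L*U.
A = [-2 2 -1; 6 -6 7; 3 -8 4];
n = size(A, 1);
U = A;  L = eye(n);  P = eye(n);

for k = 1:n-1
    [~, r] = max(abs(U(k:n, k)));    % largest |entry| on or below the diagonal
    r = r + k - 1;                   % convert to a row index of U
    if r ~= k                        % interchange rows k and r
        U([k r], :) = U([r k], :);
        P([k r], :) = P([r k], :);
        L([k r], 1:k-1) = L([r k], 1:k-1);   % swap multipliers already stored
    end
    for i = k+1:n
        L(i, k) = U(i, k) / U(k, k);
        U(i, :) = U(i, :) - L(i, k) * U(k, :);
    end
end

disp(L); disp(U); disp(P);
disp(norm(P*A - L*U))                % expected to be near zero

[L2, U2, P2] = lu(A);                % built-in factorization for comparison
disp(norm(L - L2) + norm(U - U2) + norm(P - P2))
```

Swapping the stored multipliers along with the rows is the loop's counterpart of replacing \(M_1\) by \(P_{23} M_1 P_{23}\) in the derivation.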

MATLAB programming

In MATLAB, to solve Ax=b for x using Gaussian elimination, one types `x = A\b;`, where `\` solves for x using the most efficient algorithm available, depending on the form of A. If A is a general n×n matrix, then first the LU decomposition of A is found using partial pivoting, and then x is determined from permuted forward and backward substitution. If A is upper or lower triangular, then forward or backward substitution (or their permuted version) is used directly.

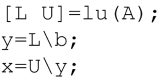
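
As a small usage sketch, the backslash operator applied to the example solved by hand earlier in this chapter looks like this (the residual check is added here for illustration):

```matlab
% Solve A*x = b with the backslash operator.
A = [-3 2 -1; 6 -6 7; 3 -4 4];
b = [-1; -7; -6];
x = A \ b;
disp(x')                   % should agree with the hand result (2, 2, -1) up to round-off
disp(norm(A*x - b))        % residual, expected to be near zero
```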

If there are many different right-hand sides, one would first directly find the LU decomposition of A using a function call, and then solve using `\`. That is, one would compute `[L, U] = lu(A);` once and then, for each different b, evaluate `y = L\b;` followed by `x = U\y;`:

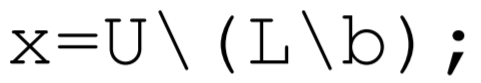
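
A sketch of this reuse pattern is given below. The matrix `B`, whose columns are the right-hand sides, is a hypothetical example; only its first column comes from the text.

```matlab
% Factor once, then solve for several right-hand sides.
A = [-3 2 -1; 6 -6 7; 3 -4 4];
B = [-1 1 0; -7 0 1; -6 0 0];       % hypothetical right-hand sides, one per column

[L, U] = lu(A);                     % here L is a permuted lower triangular matrix
X = zeros(size(B));
for j = 1:size(B, 2)
    y = L \ B(:, j);                % solve L*y = b
    X(:, j) = U \ y;                % solve U*x = y
end

disp(X(:, 1)')                      % first column is the hand example: about (2, 2, -1)
disp(norm(A*X - B))                 % expected to be near zero
```

The factorization is computed a single time outside the loop, so each additional right-hand side costs only the two triangular solves.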

The second and third lines, `y = L\b;` and `x = U\y;`, can be shortened to `x = U\(L\b);`, where the parentheses are required. In lecture, I will demonstrate these solutions in MATLAB using the matrix `A = [-3, 2, -1; 6, -6, 7; 3, -4, 4]` and the right-hand side `b = [-1; -7; -6]`, which is the example that we did by hand.

Although we do not detail the algorithm here, MATLAB can also solve the linear algebra eigenvalue problem. Here, the mathematical problem to solve is given by

Ax=λx,

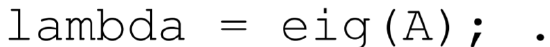

where A is a square matrix, the λ's are the eigenvalues, and the associated x's are the eigenvectors. The MATLAB function that solves this problem is `eig`. To find only the eigenvalues of A, one types `lambda = eig(A);`.

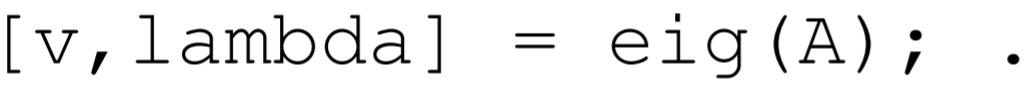

To find both the eigenvalues and eigenvectors, one types `[v, lambda] = eig(A);`, which returns the eigenvectors as the columns of `v` and the eigenvalues on the diagonal of the matrix `lambda`.

More information can be found in the MATLAB help pages. One of the nice features of programming in MATLAB is that no great sin is committed if one uses a built-in function without spending the time required to fully understand the underlying algorithm.
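
As a brief illustration of the two calling forms of `eig`, consider the sketch below. The 2×2 symmetric matrix is an assumption made here for the sake of a simple check; it does not come from the text.

```matlab
% Eigenvalues only, and eigenvalues together with eigenvectors.
A = [2 1; 1 2];                 % small symmetric test matrix (an assumption)

lambda = eig(A);                % column vector of eigenvalues
[V, D] = eig(A);                % columns of V: eigenvectors; diag(D): eigenvalues

disp(lambda')                   % the eigenvalues of this matrix are 1 and 3
disp(norm(A*V - V*D))           % checks A*x = lambda*x for every pair, near zero
```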