11.5: The Summary of Hypothesis Testing for One Parameter

Altogether, we have learned four procedures for testing statistical claims about three different parameters. So how do we know which procedure to use, and when? In this section, we summarize all the procedures for testing hypotheses about one parameter.

First, we must determine the statistical claim that is being tested in two steps:

- Look for the key words that identify the parameter in the claim:
  - Mean/average when testing a claim about a population mean.
  - Proportion/percentage when testing a claim about a population proportion.
  - Variance/standard deviation when testing a claim about a population variance.

- Look for the key words that identify the inequality in the claim:
  - Less than/at most for a left-tailed test (LT)
  - Not equal/different for a two-tailed test (TT)
  - Greater than/at least for a right-tailed test (RT)

The statistical claim in the form of an inequality is called the alternative hypothesis, and the statistical claim in the form of an equation is called the null hypothesis.
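
As a small illustration with made-up numbers (the claim and the value 30 are hypothetical, not taken from this text), consider the claim "the average commute time is less than 30 minutes." The key word "average" points to a population mean, and "less than" points to a left-tailed test, so the hypotheses would be written as:

\(H_0: \mu = 30\)

\(H_a: \mu < 30\)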

Next, we determine the procedure in the following way:

- Use the \(\chi^2\) procedure for testing claims about variances.

- Use the \(Z\) procedure for testing claims about proportions.

- For testing claims about means, check whether the population standard deviation is known:
  - If it is known, use the \(Z\) procedure.
  - If it is not known, use the \(T\) procedure.
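
The decision rules above can be sketched as a small Python function. This is only an illustration: the function name `choose_procedure`, its arguments, and the returned labels are hypothetical choices made for this sketch.

```python
def choose_procedure(parameter: str, sigma_known: bool = False) -> str:
    """Return the test procedure suggested by the rules above.

    `parameter` is the key word found in the claim; `sigma_known` only
    matters for claims about a mean. Both names are illustrative.
    """
    key = parameter.strip().lower()
    if key in ("variance", "standard deviation"):
        return "chi-square"
    if key in ("proportion", "percentage"):
        return "Z"
    if key in ("mean", "average"):
        # For a mean, the choice depends on whether the population
        # standard deviation is known.
        return "Z" if sigma_known else "T"
    raise ValueError(f"unrecognized parameter: {parameter!r}")


print(choose_procedure("mean", sigma_known=True))
print(choose_procedure("average"))
print(choose_procedure("proportion"))
print(choose_procedure("variance"))
```

The function only selects the procedure; checking the assumptions of the selected procedure remains a separate step.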

Remember: before applying the procedure, verify all the assumptions!

Before starting the procedure, we must also choose the significance level \(\alpha\), which is the maximum tolerated probability of a type I error. The most common values for \(\alpha\) are \(1\%\), \(5\%\), and \(10\%\).

Next, we compute the test statistic using a formula that is specific to each procedure. Note that we label the test statistic using the lower-case letter of the name of the involved distribution with the index zero. That is, in a \(Z\)-test the test statistic is called \(z_0\), in a \(T\)-test it is called \(t_0\), and in a \(\chi^2\)-test it is called \(\chi_0^2\).

| Hypothesis Test | One Mean \(Z\) Test | One Mean \(T\) Test | One Proportion \(Z\) Test | One Variance \(\chi^2\) Test |
|---|---|---|---|---|
| Test Statistic: | \(z_0=\frac{\bar{x}_i-\mu_0}{\sigma/\sqrt{n}}\) | \(t_0=\frac{\bar{x}_i-\mu_0}{s/\sqrt{n}}\) | \(z_0=\frac{\hat{p}_i-p_0}{\sqrt{p_0(1-p_0)/n}}\) | \(\chi_0^2=\frac{s^2_i(n-1)}{\sigma^2_0}\) |
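
The four test statistic formulas can be written out directly in Python. This is a minimal sketch: the function names are hypothetical, and the numbers in the example calls are made up purely to show how the formulas are evaluated.

```python
import math


def z_stat_mean(x_bar, mu_0, sigma, n):
    """z_0 for a one mean Z test (population standard deviation known)."""
    return (x_bar - mu_0) / (sigma / math.sqrt(n))


def t_stat_mean(x_bar, mu_0, s, n):
    """t_0 for a one mean T test (df = n - 1)."""
    return (x_bar - mu_0) / (s / math.sqrt(n))


def z_stat_proportion(p_hat, p_0, n):
    """z_0 for a one proportion Z test."""
    return (p_hat - p_0) / math.sqrt(p_0 * (1 - p_0) / n)


def chi2_stat_variance(s_sq, sigma_sq_0, n):
    """chi_0^2 for a one variance chi-square test (df = n - 1)."""
    return s_sq * (n - 1) / sigma_sq_0


# Made-up inputs, used only to exercise the formulas.
print(round(z_stat_mean(x_bar=52.0, mu_0=50.0, sigma=6.0, n=36), 3))
print(round(t_stat_mean(x_bar=52.0, mu_0=50.0, s=8.0, n=16), 3))
print(round(z_stat_proportion(p_hat=0.56, p_0=0.5, n=100), 3))
print(round(chi2_stat_variance(s_sq=30.0, sigma_sq_0=25.0, n=21), 3))
```

Note that the \(Z\) and \(T\) statistics for a mean differ only in whether the population standard deviation \(\sigma\) or the sample standard deviation \(s\) appears in the denominator.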

To apply the formula, one must be able to understand the notation. In this step, it may be necessary to reread the question several times to extract the information.

| Hypothesis Test | One Mean \(Z\) or \(T\) Test | One Proportion \(Z\) Test | One Variance \(\chi^2\) Test |
|---|---|---|---|
| Notation: | \(\mu_0\) - mean from \(H_0\); \(\bar{x}_i\) - sample mean; \(n\) - sample size; \(\sigma\) - population standard deviation; \(s\) - sample standard deviation | \(p_0\) - proportion from \(H_0\); \(\hat{p}_i\) - sample proportion; \(n\) - sample size | \(\sigma^2_0\) - variance from \(H_0\); \(s^2_i\) - sample variance; \(n\) - sample size |

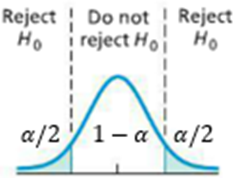

To test the claim, we can use the critical value approach. In this approach, we construct the rejection region under the probability density curve of the involved distribution based on the type of the test and the significance level alpha. The boundaries of the region are called the critical values and can be found in a similar way for all procedures. We reject the null hypothesis if the test statistic is in the rejection region.

| Test Type | LT | TT | RT |
|---|---|---|---|
| RR (rejection region) | left tail | both tails | right tail |
| Critical Values | LCV: \(x_{1-\alpha}\); RCV: DNE | LCV: \(x_{1-\alpha/2}\); RCV: \(x_{\alpha/2}\) | LCV: DNE; RCV: \(x_\alpha\) |
| Reject if ... | \(x_0<x_{1-\alpha}\) | \(x_0<x_{1-\alpha/2}\) or \(x_0>x_{\alpha/2}\) | \(x_0>x_{\alpha}\) |

Note that \(x_\alpha\) and \(x_0\) can be \(z_\alpha\) and \(z_0\), \(t_\alpha\) and \(t_0\), or \(\chi_\alpha^2\) and \(\chi_0^2\).
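
For the \(Z\) procedures, the critical value approach can be sketched with Python's standard library. This sketch assumes, as the table suggests, that \(z_\alpha\) denotes the value with area \(\alpha\) to its right under the standard normal curve. The function name `z_rejects` is hypothetical. The \(T\) and \(\chi^2\) procedures follow the same logic but need quantiles of their own distributions, which the standard library does not provide.

```python
from statistics import NormalDist


def z_rejects(z0: float, alpha: float, test_type: str) -> bool:
    """Critical value approach for a Z test; True means reject H0."""
    std_normal = NormalDist()  # standard normal: mean 0, sd 1
    if test_type == "LT":
        # z_{1-alpha}: area alpha to its LEFT.
        lcv = std_normal.inv_cdf(alpha)
        return z0 < lcv
    if test_type == "RT":
        # z_alpha: area alpha to its RIGHT.
        rcv = std_normal.inv_cdf(1 - alpha)
        return z0 > rcv
    if test_type == "TT":
        # Split alpha evenly between the two tails.
        rcv = std_normal.inv_cdf(1 - alpha / 2)
        lcv = -rcv  # the standard normal curve is symmetric about 0
        return z0 < lcv or z0 > rcv
    raise ValueError("test_type must be 'LT', 'TT', or 'RT'")


# Made-up test statistic, checked at the 5% significance level.
for kind in ("LT", "TT", "RT"):
    print(kind, z_rejects(2.0, 0.05, kind))
```

The shortcut `lcv = -rcv` relies on the symmetry of the normal curve. It would also hold for the \(T\) distribution but not for the \(\chi^2\) distribution, where both critical values must be looked up separately.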

Alternatively, we can use the P-value approach. In this approach, we compute the P-value from the test statistic and the type of the test. We reject the null hypothesis if the P-value is less than the significance level \(\alpha\).

| Test Type | LT | TT | RT |
|---|---|---|---|
| P-Value | \(P(X<x_0)\) | \(2P(X<x_0)\) or \(2P(X>x_0)\), whichever is smaller | \(P(X>x_0)\) |
| Reject if ... | \(\text{P-value}<\alpha\) | \(\text{P-value}<\alpha\) | \(\text{P-value}<\alpha\) |

Note that \(X\) and \(x_0\) can be \(Z\) and \(z_0\), \(T\) and \(t_0\), or \(\chi^2\) and \(\chi_0^2\).
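
For the \(Z\) procedures, the P-value approach can likewise be sketched with the standard library. The function names `z_p_value` and `reject_by_p_value` are hypothetical, and the test statistic in the example is made up. The two-tailed case takes twice the smaller tail area.

```python
from statistics import NormalDist


def z_p_value(z0: float, test_type: str) -> float:
    """P-value of a Z test for the given test type."""
    left_area = NormalDist().cdf(z0)  # P(Z < z0)
    right_area = 1 - left_area        # P(Z > z0)
    if test_type == "LT":
        return left_area
    if test_type == "RT":
        return right_area
    if test_type == "TT":
        return 2 * min(left_area, right_area)
    raise ValueError("test_type must be 'LT', 'TT', or 'RT'")


def reject_by_p_value(p_value: float, alpha: float) -> bool:
    """Reject H0 exactly when the P-value is below alpha."""
    return p_value < alpha


# Made-up test statistic, checked at the 5% significance level.
p = z_p_value(2.0, "TT")
print(round(p, 4), reject_by_p_value(p, 0.05))
```

For the same test statistic, test type, and \(\alpha\), the two approaches lead to the same decision; they differ only in whether the comparison is made on the scale of the test statistic or on the scale of probabilities.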

Due to the logic of a hypothesis testing procedure, there are only two outcomes of the procedure:

reject or not reject the null hypothesis

Note that the double negative in this case is never interpreted as a positive; that is, we never, ever accept the null hypothesis! Therefore, the interpretation is that we either do or do not have sufficient evidence to support the alternative hypothesis.

All four procedures can be summarized in the following two tables. The first is organized by procedure; the second is organized by test type and applies to every procedure.

| Procedure | One Mean \(Z\) Test | One Mean \(T\) Test | One Proportion \(Z\) Test | One Variance \(\chi^2\) Test |
|---|---|---|---|---|
| Purpose: | To test a statistical claim about a population mean, \(\mu\) | To test a statistical claim about a population mean, \(\mu\) | To test a statistical claim about a population proportion, \(p\) | To test a statistical claim about a population variance, \(\sigma^2\) |
| Assumptions: | | | | |
| Hypotheses: | \(H_0: \mu=\mu_0\) \(H_a: \mu?\mu_0\) | \(H_0: \mu=\mu_0\) \(H_a: \mu?\mu_0\) | \(H_0: p=p_0\) \(H_a: p?p_0\) | \(H_0: \sigma^2=\sigma^2_0\) \(H_a: \sigma^2?\sigma^2_0\) |
| Test Statistic: | \(z_0=\frac{\bar{x}_i-\mu_0}{\sigma/\sqrt{n}}\) | \(t_0=\frac{\bar{x}_i-\mu_0}{s/\sqrt{n}}\) with \(df=n-1\) | \(z_0=\frac{\hat{p}_i-p_0}{\sqrt{p_0(1-p_0)/n}}\) | \(\chi_0^2=\frac{s^2_i(n-1)}{\sigma^2_0}\) with \(df=n-1\) |

| Test Type: | LT | TT | RT |
|---|---|---|---|
| \(?\) in \(H_a\): | \(<\) | \(\neq\) | \(>\) |
| CVA (critical value approach): Reject if ... | \(x_0<x_{1-\alpha}\) | \(x_0<x_{1-\alpha/2}\) or \(x_0>x_{\alpha/2}\) | \(x_0>x_{\alpha}\) |
| PVA (P-value approach): Reject if ... | \(P(X<x_0)<\alpha\) | \(2P(X<x_0)<\alpha\) or \(2P(X>x_0)<\alpha\) | \(P(X>x_0)<\alpha\) |

Interpretation: We DO (NOT) have sufficient evidence to reject the null hypothesis in favor of the alternative hypothesis.

It is easy to see that the procedures not only have a lot in common among themselves but also with the procedures for constructing confidence intervals when estimating population parameters.