9.1: Variances

The Law of the Unconscious Statistician will play an important role in this section. To motivate the next topic, consider the following two random variables, \(X\) and \(Y\), with the following probability mass functions:

\( f(x) = P(X = x) =

\begin{cases}

0.5, & \mbox{if } x = -1, 1\\

0, & \mbox{otherwise}

\end{cases}\)

\( g(y) = P(Y = y) =

\begin{cases}

0.5, & \mbox{if } y = -10, 10\\

0, & \mbox{otherwise}

\end{cases}\)

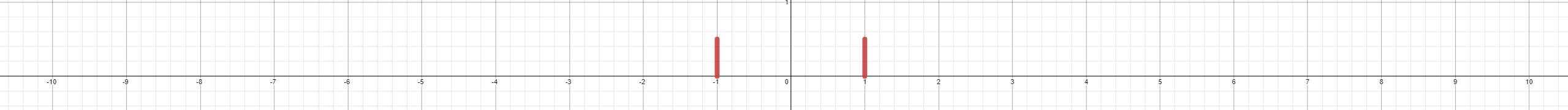

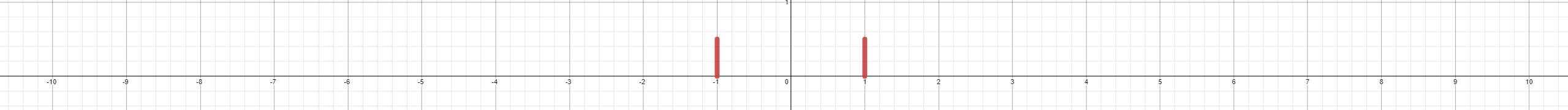

The graph of the probability mass function looks like the following:

Based on our intuition that \( \mathbb{E}[X] \) and \( \mathbb{E}[Y] \) represent the center of mass of each distribution, we would perhaps guess that \( \mathbb{E}[X] = 0 \) and \( \mathbb{E}[Y] = 0 \). The actual calculation is straightforward, and indeed \( \mathbb{E}[X] = 0 = \mathbb{E}[Y] \).

Notice that \(X\) and \(Y\) have the same average, yet there is a striking difference between the two distributions. What is this difference?

The difference is that the distribution of \(Y\) is far more spread out than the distribution of \(X\). That is, the values of \(Y\) seem to "vary" more than the values of \(X\). Our goal is to develop a measure of how much a distribution can vary. The way we define this measure is by computing its variance, as defined below:

Definition: If \(X\) is a random variable with mean \( \mathbb{E}[X] = \mu \), then the variance of \(X\), denoted by \( \mathbb{V}ar[X] = \sigma^2 \), is defined to be

\[ \mathbb{V}ar[X] = \mathbb{E} \bigg[ (X - \mu)^2 \bigg] \nonumber \]

Again, this is merely a definition, and it does not make sense to prove a definition, but we can certainly take a moment to ask why our definition achieves our goal. Recall that our goal is to develop a measure of how much a distribution varies, and we are claiming that \[ \mathbb{V}ar[X] = \mathbb{E} \bigg[ (X - \mu)^2 \bigg] \nonumber \] does the trick. How so?

Well, in order to measure the spread of a distribution, we first must fix a reference point. For us, the reference point is \( \mu = \mathbb{E}[X] \), which is the center of our distribution. Once we have \( \mu \), we then study \( X - \mu \), which represents the signed deviation of each value of \(X\) from its center. Since a distance cannot be negative but \( X - \mu \) can be, we square the deviation, which gives us \( (X - \mu)^2 \). We then average the squared deviations over all values of \(X\), which gives \( \mathbb{E} \bigg[ (X - \mu)^2 \bigg] \).

In summary, the expression \( \mathbb{E} \bigg[ (X - \mu) ^2 \bigg] \) yields the average square distance between each value of \(X\) and its center.
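The recipe above (fix the center, square each deviation, then average) can be sketched in a few lines of Python. This is only an illustrative sketch: the helper names `mean` and `variance` and the wider distribution `pmf_w` are assumptions introduced here and do not come from the text. Probabilities are stored as exact fractions so that no rounding error appears.

```python
from fractions import Fraction

def mean(pmf):
    """Return E[X] for a pmf given as {value: probability}."""
    return sum(x * p for x, p in pmf.items())

def variance(pmf):
    """Return Var[X] = E[(X - mu)^2], computed directly from the definition."""
    mu = mean(pmf)  # the reference point (center) of the distribution
    return sum((x - mu) ** 2 * p for x, p in pmf.items())

half = Fraction(1, 2)
pmf_x = {-1: half, 1: half}  # the pmf of X from the text
pmf_w = {-3: half, 3: half}  # hypothetical pmf, same center but wider

print(mean(pmf_x), variance(pmf_x))  # 0 1
print(mean(pmf_w), variance(pmf_w))  # 0 9
```

Both distributions have the same mean, yet the wider one has the larger variance, which is the behavior we wanted from a measure of spread.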

Some students may argue that if we wish to compute the spread of the distribution, then it makes sense to use \( \mathbb{E}\bigg[ |X - \mu| \bigg] \) instead, since this tells us, on average, how far each value of \(X\) is from its center. Although this is a good idea, in practice the computations can get messy. This will be further discussed when we study continuous random variables.
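For a small discrete distribution, the absolute-value alternative is easy to compute, so the two measures can be placed side by side. The sketch below is illustrative only: the function names and the distribution `pmf_w` are assumptions made for this example.

```python
from fractions import Fraction

def mean_abs_deviation(pmf):
    """Return E[|X - mu|] for a pmf given as {value: probability}."""
    mu = sum(x * p for x, p in pmf.items())
    return sum(abs(x - mu) * p for x, p in pmf.items())

def mean_sq_deviation(pmf):
    """Return E[(X - mu)^2], i.e. the variance, for the same kind of pmf."""
    mu = sum(x * p for x, p in pmf.items())
    return sum((x - mu) ** 2 * p for x, p in pmf.items())

half = Fraction(1, 2)
pmf_x = {-1: half, 1: half}  # the pmf of X from the text
pmf_w = {-3: half, 3: half}  # hypothetical wider pmf

for name, pmf in [("X", pmf_x), ("W", pmf_w)]:
    print(name, mean_abs_deviation(pmf), mean_sq_deviation(pmf))
# X 1 1
# W 3 9
```

Both quantities grow as the distribution spreads out. The absolute deviation stays in the units of the random variable, while the squared deviation is in squared units.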

Example 1: Suppose \(X\) has the following probability mass function: \( f(x) = P(X = x) =

\begin{cases}

0.5, & \mbox{if } x = -1, 1\\

0, & \mbox{otherwise}

\end{cases}\)

Find \( \mathbb{V}ar[X] \).

Answer:

Recall that for this random variable, \( \mu = \mathbb{E}[X] = 0 \).

\begin{align*} \mathbb{V}ar[X] &= \mathbb{E} \bigg[ (X - \mu)^2 \bigg] \\ &= \mathbb{E} \bigg[ (X - 0)^2 \bigg] \\ &= \mathbb{E} [ X^2 ] \\ &= \sum_{\text{all} ~ x} x^2 f(x) \\ &= (-1)^2 f(-1) + (1)^2 f(1) \\ &= f(-1) + f(1) \\ &= 0.5 + 0.5 \\ &= 1 \end{align*}

Example 2: Suppose \(Y\) has the following probability mass function: \( g(y) = P(Y = y) =

\begin{cases}

0.5, & \mbox{if } y = -10, 10\\

0, & \mbox{otherwise}

\end{cases}\)

Find \( \mathbb{V}ar[Y] \).

Answer:

Recall that for this random variable, \( \mu = \mathbb{E}[Y] = 0 \).

\begin{align*} \mathbb{V}ar[Y] &= \mathbb{E} \bigg[ (Y - \mu)^2 \bigg] \\ &= \mathbb{E} \bigg[ (Y - 0)^2 \bigg] \\ &= \mathbb{E} [ Y^2 ] \\ &= \sum_{\text{all} ~ y} y^2 g(y) \\ &= (-10)^2 g(-10) + (10)^2 g(10) \\ &= 100 g(-10) + 100 g(10) \\ &= 100(0.5) + 100(0.5) \\ &= 100 \end{align*}

As expected, \( \mathbb{V}ar[Y] > \mathbb{V}ar[X] \).

Example 3: Suppose the probability mass function of \(X\) is given by \( f(x) = P(X = x) =

\begin{cases}

0.855, & \mbox{if } x = 0 \\

0.140, & \mbox{if } x = 100000 \\

0.005, & \mbox{if } x = 200000 \\

0, & \mbox{otherwise}

\end{cases}\)

Find \( \mathbb{V}ar[X]\).

Answer:

Recall that for this random variable, \( \mu = \mathbb{E}[X] = 15,000 \).

\begin{align*} \mathbb{V}ar[X] &= \mathbb{E} \bigg[ (X - \mu)^2 \bigg] \\ &= \mathbb{E} \bigg[ (X - 15,000)^2 \bigg] \\ &= \sum_{\text{all} ~ x} (x-15000)^2 f(x) \\ &= (0-15000)^2 f(0) + (100000-15000)^2 f(100000) + (200000-15000)^2 f(200000) \\ &= 1,375,000,000 \end{align*}
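The arithmetic in the last step is easy to get wrong by hand, so it may help to check it term by term. The sketch below uses exact fractions for the probabilities; the variable names are illustrative assumptions.

```python
from fractions import Fraction

# pmf from the example, written with exact fractions to avoid rounding error
pmf = {
    0: Fraction(855, 1000),
    100000: Fraction(140, 1000),
    200000: Fraction(5, 1000),
}

assert sum(pmf.values()) == 1  # a valid pmf must sum to 1

mu = sum(x * p for x, p in pmf.items())

# One term (x - mu)^2 f(x) for each value x in the support
terms = {x: (x - mu) ** 2 * p for x, p in pmf.items()}
var = sum(terms.values())

print(mu)  # 15000
for x, t in terms.items():
    print(x, t)
# 0 192375000
# 100000 1011500000
# 200000 171125000
print(var)  # 1375000000
```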

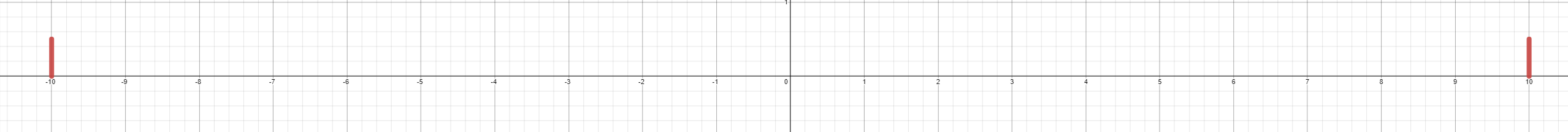

Looking back at the answers to the above three examples, we may feel uneasy. For instance, in Example 1, the variance is 1, and this makes sense because, from the sketch of the pmf, the square distance of each value of \(X\) to its center is, on average, 1. For Example 2, on average, the square distance of each value of \(Y\) to its center is 100. However, some may argue that the 100 does not make sense, since the values of \(Y\) are -10 and 10 and so, intuitively, the largest amount of variation we could have is 20.

How can we reconcile this?

Simply put, let us think about the units of \( \mathbb{V}ar[X]\). Notice that in \( \mathbb{V}ar[X] = \mathbb{E} \bigg[ (X - \mu)^2 \bigg] \), the \(X - \mu \) term is being squared, and so our final answer is in terms of \( ( \text{units of} ~ X )^2 \).

For instance, in Example 3, the variance of \(X\) is 1,375,000,000 square dollars. However, it is rather difficult to think in units of dollars squared as opposed to just dollars.

In order to make the measure of spread more intuitive, we will take the square root of the variance. Doing so gives us the standard deviation.

Definition: Given a random variable, \(X\), the positive square root of the variance is called the standard deviation of \(X\), denoted by \(SD[X] \) or \(\sigma \).

Example 4: Suppose \(X\) has the following probability mass function: \( f(x) = P(X = x) =

\begin{cases}

0.5, & \mbox{if } x = -1, 1\\

0, & \mbox{otherwise}

\end{cases}\)

Find \(SD[X] \).

Answer:

Recall that for this random variable, \( \mathbb{V}ar[X] = 1 \). Hence \(SD[X] = \sqrt{1} = 1 \).

Example 5: Suppose \(Y\) has the following probability mass function: \( g(y) = P(Y = y) =

\begin{cases}

0.5, & \mbox{if } y = -10, 10\\

0, & \mbox{otherwise}

\end{cases}\)

Find \(SD[Y] \).

Answer:

Recall that for this random variable, \( \mathbb{V}ar[Y] = 100 \). Hence \(SD[Y] = \sqrt{100} = 10 \). Intuitively, this means the distribution of \(Y\) is ten times more spread out than the distribution of \(X\), where \(X\) is defined as in Example 4.

As expected, \( SD[Y] > SD[X] \).

Example 6: Suppose the probability mass function of \(X\) is given by \( f(x) = P(X = x) =

\begin{cases}

0.855, & \mbox{if } x = 0 \\

0.140, & \mbox{if } x = 100000 \\

0.005, & \mbox{if } x = 200000 \\

0, & \mbox{otherwise}

\end{cases}\)

Find \(SD[X] \).

Answer:

Recall that for this random variable, \( \mathbb{V}ar[X] = 1375000000 \). Hence \(SD[X] = \sqrt{1375000000} \approx \$37,081\).
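As a rough numerical check of the standard deviations above, one could compute the variance and take its square root with Python's `math.sqrt`. The function name `standard_deviation` and the variable names are assumptions made for this sketch. Floating-point probabilities are used here, so results are compared only after rounding.

```python
import math

def standard_deviation(pmf):
    """Return SD[X] = sqrt(Var[X]) for a pmf given as {value: probability}."""
    mu = sum(x * p for x, p in pmf.items())
    var = sum((x - mu) ** 2 * p for x, p in pmf.items())
    return math.sqrt(var)

pmf_small = {-1: 0.5, 1: 0.5}                          # values -1 and 1
pmf_large = {0: 0.855, 100000: 0.140, 200000: 0.005}   # three-valued pmf

sd_small = standard_deviation(pmf_small)
sd_large = standard_deviation(pmf_large)

print(sd_small)         # 1.0
print(round(sd_large))  # 37081
```

Unlike the variance, both results are in the same units as the random variable itself.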

We conclude this section with two theorems concerning the variance. Our first theorem gives us a more convenient way to calculate the variance.

Theorem: If \(X\) is a random variable, then \[ \mathbb{V}ar[X] = \mathbb{E}[X^2] - \bigg( \mathbb{E}[X] \bigg)^2 \]

That is, the variance is equal to the second moment of \(X\) minus the square of the first moment of \(X\). The proof is left as a homework exercise.
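Without giving away the proof, we can at least check the formula numerically on the three-valued pmf used earlier in this section. The helper `moment` and the variable names below are illustrative assumptions; exact fractions are used so that the two computations can be compared with `==`.

```python
from fractions import Fraction

def moment(pmf, k):
    """Return the k-th moment E[X^k] of a pmf given as {value: probability}."""
    return sum(x ** k * p for x, p in pmf.items())

pmf = {
    0: Fraction(855, 1000),
    100000: Fraction(140, 1000),
    200000: Fraction(5, 1000),
}

mu = moment(pmf, 1)

# Variance from the definition E[(X - mu)^2]
by_definition = sum((x - mu) ** 2 * p for x, p in pmf.items())

# Variance from the shortcut E[X^2] - (E[X])^2
by_shortcut = moment(pmf, 2) - mu ** 2

print(moment(pmf, 2))  # 1600000000
print(mu ** 2)         # 225000000
print(by_definition, by_shortcut)  # 1375000000 1375000000
```

The shortcut needs only two sums over the raw values, with no need to subtract the mean inside each term.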

Theorem: Let \(X\) denote a random variable. For any constants, \(a\) and \(b\), \( \mathbb{V}ar[aX + b] =a^2 \mathbb{V}ar[X] \).
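A numerical spot check of this theorem can be sketched as follows. It is not a proof, and the helper names `variance` and `affine` as well as the chosen constants are assumptions made for illustration. The pmf of \(aX + b\) is built by relabeling each value \(x\) as \(ax + b\).

```python
from fractions import Fraction

def variance(pmf):
    """Return Var[X] = E[(X - mu)^2] for a pmf given as {value: probability}."""
    mu = sum(x * p for x, p in pmf.items())
    return sum((x - mu) ** 2 * p for x, p in pmf.items())

def affine(pmf, a, b):
    """Return the pmf of aX + b, merging values that coincide (e.g. when a == 0)."""
    out = {}
    for x, p in pmf.items():
        y = a * x + b
        out[y] = out.get(y, 0) + p
    return out

half = Fraction(1, 2)
pmf_x = {-1: half, 1: half}  # values -1 and 1, each with probability 1/2

for a, b in [(10, 0), (3, 7), (-2, 5), (0, 4)]:
    lhs = variance(affine(pmf_x, a, b))
    rhs = a ** 2 * variance(pmf_x)
    print(a, b, lhs, rhs)
    assert lhs == rhs
# 10 0 100 100
# 3 7 9 9
# -2 5 4 4
# 0 4 0 0
```

Shifting by \(b\) moves the center along with every value, so the deviations from the center are unchanged, while scaling by \(a\) multiplies every deviation by \(a\) and hence every squared deviation by \(a^2\).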