16: Basis and Dimension

- Page ID

- 115923

\( \newcommand{\vecs}[1]{\overset { \scriptstyle \rightharpoonup} {\mathbf{#1}} } \)

\( \newcommand{\vecd}[1]{\overset{-\!-\!\rightharpoonup}{\vphantom{a}\smash {#1}}} \)

\( \newcommand{\dsum}{\displaystyle\sum\limits} \)

\( \newcommand{\dint}{\displaystyle\int\limits} \)

\( \newcommand{\dlim}{\displaystyle\lim\limits} \)

\( \newcommand{\id}{\mathrm{id}}\) \( \newcommand{\Span}{\mathrm{span}}\)

( \newcommand{\kernel}{\mathrm{null}\,}\) \( \newcommand{\range}{\mathrm{range}\,}\)

\( \newcommand{\RealPart}{\mathrm{Re}}\) \( \newcommand{\ImaginaryPart}{\mathrm{Im}}\)

\( \newcommand{\Argument}{\mathrm{Arg}}\) \( \newcommand{\norm}[1]{\| #1 \|}\)

\( \newcommand{\inner}[2]{\langle #1, #2 \rangle}\)

\( \newcommand{\Span}{\mathrm{span}}\)

\( \newcommand{\id}{\mathrm{id}}\)

\( \newcommand{\Span}{\mathrm{span}}\)

\( \newcommand{\kernel}{\mathrm{null}\,}\)

\( \newcommand{\range}{\mathrm{range}\,}\)

\( \newcommand{\RealPart}{\mathrm{Re}}\)

\( \newcommand{\ImaginaryPart}{\mathrm{Im}}\)

\( \newcommand{\Argument}{\mathrm{Arg}}\)

\( \newcommand{\norm}[1]{\| #1 \|}\)

\( \newcommand{\inner}[2]{\langle #1, #2 \rangle}\)

\( \newcommand{\Span}{\mathrm{span}}\) \( \newcommand{\AA}{\unicode[.8,0]{x212B}}\)

\( \newcommand{\vectorA}[1]{\vec{#1}} % arrow\)

\( \newcommand{\vectorAt}[1]{\vec{\text{#1}}} % arrow\)

\( \newcommand{\vectorB}[1]{\overset { \scriptstyle \rightharpoonup} {\mathbf{#1}} } \)

\( \newcommand{\vectorC}[1]{\textbf{#1}} \)

\( \newcommand{\vectorD}[1]{\overrightarrow{#1}} \)

\( \newcommand{\vectorDt}[1]{\overrightarrow{\text{#1}}} \)

\( \newcommand{\vectE}[1]{\overset{-\!-\!\rightharpoonup}{\vphantom{a}\smash{\mathbf {#1}}}} \)

\( \newcommand{\vecs}[1]{\overset { \scriptstyle \rightharpoonup} {\mathbf{#1}} } \)

\(\newcommand{\longvect}{\overrightarrow}\)

\( \newcommand{\vecd}[1]{\overset{-\!-\!\rightharpoonup}{\vphantom{a}\smash {#1}}} \)

\(\newcommand{\avec}{\mathbf a}\) \(\newcommand{\bvec}{\mathbf b}\) \(\newcommand{\cvec}{\mathbf c}\) \(\newcommand{\dvec}{\mathbf d}\) \(\newcommand{\dtil}{\widetilde{\mathbf d}}\) \(\newcommand{\evec}{\mathbf e}\) \(\newcommand{\fvec}{\mathbf f}\) \(\newcommand{\nvec}{\mathbf n}\) \(\newcommand{\pvec}{\mathbf p}\) \(\newcommand{\qvec}{\mathbf q}\) \(\newcommand{\svec}{\mathbf s}\) \(\newcommand{\tvec}{\mathbf t}\) \(\newcommand{\uvec}{\mathbf u}\) \(\newcommand{\vvec}{\mathbf v}\) \(\newcommand{\wvec}{\mathbf w}\) \(\newcommand{\xvec}{\mathbf x}\) \(\newcommand{\yvec}{\mathbf y}\) \(\newcommand{\zvec}{\mathbf z}\) \(\newcommand{\rvec}{\mathbf r}\) \(\newcommand{\mvec}{\mathbf m}\) \(\newcommand{\zerovec}{\mathbf 0}\) \(\newcommand{\onevec}{\mathbf 1}\) \(\newcommand{\real}{\mathbb R}\) \(\newcommand{\twovec}[2]{\left[\begin{array}{r}#1 \\ #2 \end{array}\right]}\) \(\newcommand{\ctwovec}[2]{\left[\begin{array}{c}#1 \\ #2 \end{array}\right]}\) \(\newcommand{\threevec}[3]{\left[\begin{array}{r}#1 \\ #2 \\ #3 \end{array}\right]}\) \(\newcommand{\cthreevec}[3]{\left[\begin{array}{c}#1 \\ #2 \\ #3 \end{array}\right]}\) \(\newcommand{\fourvec}[4]{\left[\begin{array}{r}#1 \\ #2 \\ #3 \\ #4 \end{array}\right]}\) \(\newcommand{\cfourvec}[4]{\left[\begin{array}{c}#1 \\ #2 \\ #3 \\ #4 \end{array}\right]}\) \(\newcommand{\fivevec}[5]{\left[\begin{array}{r}#1 \\ #2 \\ #3 \\ #4 \\ #5 \\ \end{array}\right]}\) \(\newcommand{\cfivevec}[5]{\left[\begin{array}{c}#1 \\ #2 \\ #3 \\ #4 \\ #5 \\ \end{array}\right]}\) \(\newcommand{\mattwo}[4]{\left[\begin{array}{rr}#1 \amp #2 \\ #3 \amp #4 \\ \end{array}\right]}\) \(\newcommand{\laspan}[1]{\text{Span}\{#1\}}\) \(\newcommand{\bcal}{\cal B}\) \(\newcommand{\ccal}{\cal C}\) \(\newcommand{\scal}{\cal S}\) \(\newcommand{\wcal}{\cal W}\) \(\newcommand{\ecal}{\cal E}\) \(\newcommand{\coords}[2]{\left\{#1\right\}_{#2}}\) \(\newcommand{\gray}[1]{\color{gray}{#1}}\) \(\newcommand{\lgray}[1]{\color{lightgray}{#1}}\) \(\newcommand{\rank}{\operatorname{rank}}\) \(\newcommand{\row}{\text{Row}}\) \(\newcommand{\col}{\text{Col}}\) \(\renewcommand{\row}{\text{Row}}\) \(\newcommand{\nul}{\text{Nul}}\) \(\newcommand{\var}{\text{Var}}\) \(\newcommand{\corr}{\text{corr}}\) \(\newcommand{\len}[1]{\left|#1\right|}\) \(\newcommand{\bbar}{\overline{\bvec}}\) \(\newcommand{\bhat}{\widehat{\bvec}}\) \(\newcommand{\bperp}{\bvec^\perp}\) \(\newcommand{\xhat}{\widehat{\xvec}}\) \(\newcommand{\vhat}{\widehat{\vvec}}\) \(\newcommand{\uhat}{\widehat{\uvec}}\) \(\newcommand{\what}{\widehat{\wvec}}\) \(\newcommand{\Sighat}{\widehat{\Sigma}}\) \(\newcommand{\lt}{<}\) \(\newcommand{\gt}{>}\) \(\newcommand{\amp}{&}\) \(\definecolor{fillinmathshade}{gray}{0.9}\)In chapter 10, the notions of a linearly independent set of vectors in a vector space \(V\), and of a set of vectors that span \(V\) were established: Any set of vectors that span \(V\) can be reduced to some minimal collection of linearly independent vectors; such a set is called a \emph{basis} of the subspace \(V\).

Definitions

Let \(V\) be a vector space.

- Then a set \(S\) is a \(\textit{basis}\) for \(V\) if \(S\) is linearly independent and \(V = span S\).

- If \(S\) is a basis of \(V\) and \(S\) has only finitely many elements, then we say that \(V\) is \(\textit{finite-dimensional}\).

- The number of vectors in \(S\) is the \(\textit{dimension}\) of \(V\).

Suppose \(V\) is a \(\textit{finite-dimensional}\) vector space, and \(S\) and \(T\) are two different bases for \(V\). One might worry that \(S\) and \(T\) have a different number of vectors; then we would have to talk about the dimension of \(V\) in terms of the basis \(S\) or in terms of the basis \(T\). Luckily this isn't what happens. Later in this chapter, we will show that \(S\) and \(T\) must have the same number of vectors. This means that the dimension of a vector space is basis-independent. In fact, dimension is a very important characteristic of a vector space.

Example 112

\(P_{n}(t)\) (polynomials in \(t\) of degree \(n\) or less) has a basis \(\{1,t,\ldots , t^{n} \}\), since every vector in this space is a sum

\[a^{0}\,1+a^{1}\,t+\cdots +a^{n}\,t^{n}, \qquad a^{i}\in \Re\, ,\]

so \(P_{n}(t)=span \{1,t,\ldots , t^{n} \}\). This set of vectors is linearly independent: If the polynomial \(p(t)=c^{0}1+c^{1}t+\cdots +c^{n}t^{n}=0\), then \(c^{0}=c^{1}=\cdots =c^{n}=0\), so \(p(t)\) is the zero polynomial. Thus \(P_{n}(t)\) is finite dimensional, and \(\dim P_{n}(t)=n+1\).

Theorem

Let \(S=\{v_{1}, \ldots, v_{n} \}\) be a basis for a vector space \(V\). Then every vector \(w \in V\) can be written \(\textit{uniquely}\) as a linear combination of vectors in the basis \(S\):

\[w=c^{1}v_{1}+\cdots + c^{n}v_{n}.\]

Proof

Since \(S\) is a basis for \(V\), then \(span S=V\), and so there exist constants \(c^{i}\) such that \(w=c^{1}v_{1}+\cdots + c^{n}v_{n}\).

Suppose there exists a second set of constants \(d^{i}\) such that

$$w=d^{1}v_{1}+\cdots + d^{n}v_{n}\, .$$ Then:

\begin{eqnarray*}

0_{V}&=&w-w\\

&=&c^{1}v_{1}+\cdots + c^{n}v_{n}-d^{1}v_{1}-\cdots - d^{n}v_{n} \\

&=&(c^{1}-d^{1})v_{1}+\cdots + (c^{n}-d^{n})v_{n}. \\

\end{eqnarray*}

If it occurs exactly once that \(c^{i}\neq d^{i}\), then the equation reduces to \(0=(c^{i}-d^{i})v_{i}\), which is a contradiction since the vectors \(v_{i}\) are assumed to be non-zero.

If we have more than one \(i\) for which \(c^{i}\neq d^{i}\), we can use this last equation to write one of the vectors in \(S\) as a linear combination of other vectors in \(S\), which contradicts the assumption that \(S\) is linearly independent. Then for every \(i\), \(c^{i}=d^{i}\).

Remark

This theorem is the one that makes bases so useful--they allow us to convert abstract vectors into column vectors. By ordering the set \(S\) we obtain \(B=(v_{1},\ldots,v_{n})\) and can write

\[w=(v_{1},\ldots,v_{n}) \begin{pmatrix}c^{1}\\ \vdots\\ c^{n}\end{pmatrix}=\begin{pmatrix}c^{1}\\ \vdots\\ c^{n}\end{pmatrix}_{B}\, .\]

Remember that in general it makes no sense to drop the subscript \(B\) on the column vector on the right--most vector spaces are not made from columns of numbers!

Next, we would like to establish a method for determining whether a collection of vectors forms a basis for \(\Re^{n}\). But first, we need to show that any two bases for a finite-dimensional vector space has the same number of vectors.

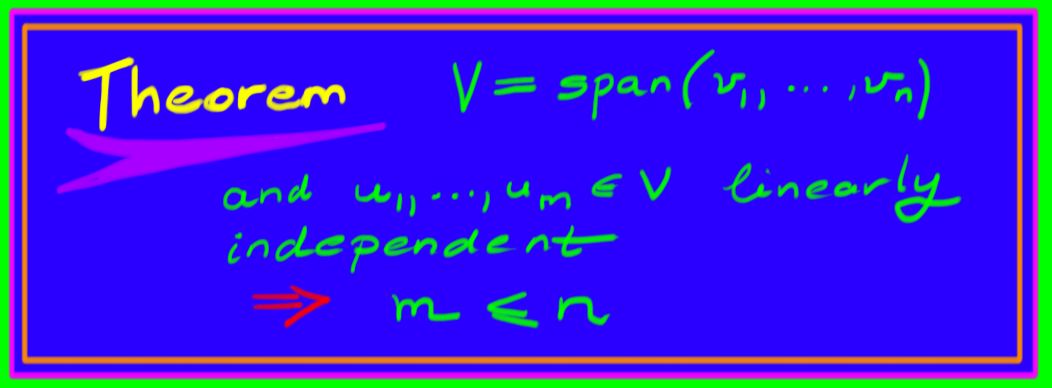

Lemma

If \(S=\{v_{1}, \ldots, v_{n} \}\) is a basis for a vector space \(V\) and \(T=\{w_{1}, \ldots, w_{m} \}\) is a linearly independent set of vectors in \(V\), then \(m\leq n\).

The idea of the proof is to start with the set \(S\) and replace vectors in \(S\) one at a time with vectors from \(T\), such that after each replacement we still have a basis for \(V\).

Proof

Since \(S\) spans \(V\), then the set \(\{w_{1}, v_{1}, \ldots, v_{n} \}\) is linearly dependent. Then we can write \(w_{1}\) as a linear combination of the \(v_{i}\); using that equation, we can express one of the \(v_{i}\) in terms of \(w_{1}\) and the remaining \(v_{j}\) with \(j\neq i\). Then we can discard one of the \(v_{i}\) from this set to obtain a linearly independent set that still spans \(V\). Now we need to prove that \(S_{1}\) is a basis; we must show that \(S_{1}\) is linearly independent and that \(S_{1}\) spans \(V\).

The set \(S_{1}=\{w_{1}, v_{1}, \ldots, v_{i-1}, v_{i+1},\ldots, v_{n} \}\) is linearly independent: By the previous theorem, there was a unique way to express \(w_{1}\) in terms of the set \(S\). Now, to obtain a contradiction, suppose there is some \(k\) and constants \(c^{i}\) such that

\[v_{k} = c^{0}w_{1}+c^{1}v_{1}+\cdots + c^{i-1}v_{i-1} + c^{i+1}v_{i+1} + \cdots + c^{n}v_{n}.\]

Then replacing \(w_{1}\) with its expression in terms of the collection \(S\) gives a way to express the vector \(v_{k}\) as a linear combination of the vectors in \(S\), which contradicts the linear independence of \(S\). On the other hand, we cannot express \(w_{1}\) as a linear combination of the vectors in \(\{v_{j} | j\neq i\}\), since the expression of \(w_{1}\) in terms of \(S\) was unique, and had a non-zero coefficient for the vector \(v_{i}\). Then no vector in \(S_{1}\) can be expressed as a combination of other vectors in \(S_{1}\), which demonstrates that \(S_{1}\) is linearly independent.

The set \(S_{1}\) spans \(V\): For any \(u\in V\), we can express \(u\) as a linear combination of vectors in \(S\). But we can express \(v_{i}\) as a linear combination of vectors in the collection \(S_{1}\); rewriting \(v_{i}\) as such allows us to express \(u\) as a linear combination of the vectors in \(S_{1}\). Thus \(S_{1}\) is a basis of \(V\) with \(n\) vectors.

We can now iterate this process, replacing one of the \(v_{i}\) in \(S_{1}\) with \(w_{2}\), and so on. If \(m\leq n\), this process ends with the set \(S_{m}=\{w_{1},\ldots, w_{m}\), \(v_{i_1},\ldots,v_{i_{n-m}} \}\), which is fine.

Otherwise, we have \(m>n\), and the set \(S_{n}=\{w_{1},\ldots, w_{n} \}\) is a basis for \(V\). But we still have some vector \(w_{n+1}\) in \(T\) that is not in \(S_{n}\). Since \(S_{n}\) is a basis, we can write \(w_{n+1}\) as a combination of the vectors in \(S_{n}\), which contradicts the linear independence of the set \(T\). Then it must be the case that \(m\leq n\), as desired.

\(\square\)

Corollary

For a finite-dimensional vector space \(V\), any two bases for \(V\) have the same number of vectors.

Proof

Let \(S\) and \(T\) be two bases for \(V\). Then both are linearly independent sets that span \(V\). Suppose \(S\) has \(n\) vectors and \(T\) has \(m\) vectors. Then by the previous lemma, we have that \(m\leq n\). But (exchanging the roles of \(S\) and \(T\) in application of the lemma) we also see that \(n\leq m\). Then \(m=n\), as desired.

Contributor

David Cherney, Tom Denton, and Andrew Waldron (UC Davis)

Thumbnail: A linear combination of one basis set of vectors (purple) obtains new vectors (red). If they are linearly independent, these form a new basis set. The linear combinations relating the first set to the other extend to a linear transformation, called the change of basis. (CC0; Maschen via Wikipedia)