18.10: Movie Scripts 7-8

( \newcommand{\kernel}{\mathrm{null}\,}\)

G.7 Determinants

Permutation Example

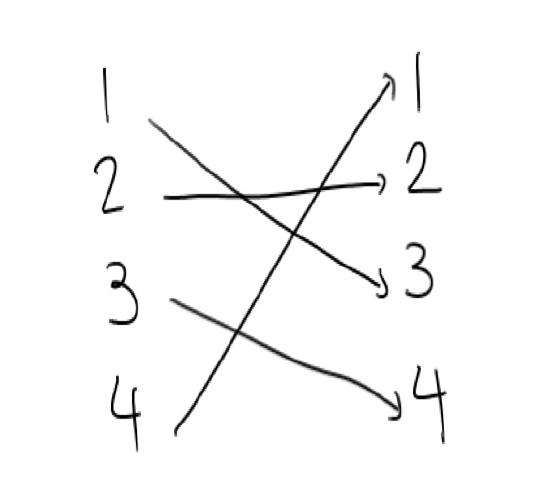

Lets try to get the hang of permutations. A permutation is a function which scrambles things. Suppose we had

This looks like a function $\sigma$ that has values σ(1)=3, σ(2)=2, σ(3)=4, σ(4)=1.

Then we could write this as

[1234σ(1)σ(2)σ(3)σ(4)]=[12343241]

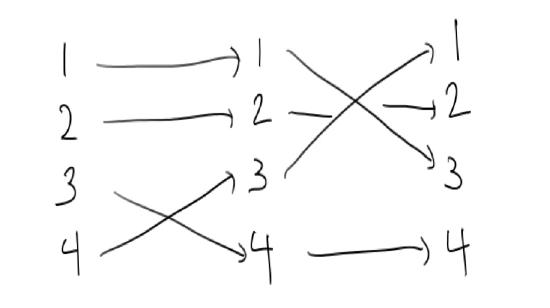

We could write this permutation in two steps by saying that first we swap 3 and 4, and then we swap 1 and 3. The order here is important.

This is an even permutation, since the number of swaps we used is two (an even number).

Elementary Matrices

This video will explain some of the ideas behind elementary matrices. First think back to linear systems, for example n equations in n unknowns:

{a11x1+a12x2+⋯+a1nxn=v1a21x1+a22x2+⋯+a2nxn=v2⋮an1x1+an2x2+⋯+annxn=vn.

We know it is helpful to store the above information with matrices and vectors

M:=(a11a12⋯a1na21a22⋯a2n⋮⋮⋮an1an2⋯ann),X:=(x1x2⋮xn),V:=(v1v2⋮vn).

Here we will focus on the case the M is square because we are interested in its inverse M−1 (if it exists) and its determinant (whose job it will be to determine the existence of M−1).

We know at least three ways of handling this linear system problem:

- As an augmented matrix (MV). Here our plan would be to perform row operations until the system looks like (IM−1V), (assuming that M−1 exists).

- As a matrix equation MX=V, which we would solve by finding M−1 (again, if it exists), so that X=M−1V.

- As a linear transformation L:Rn⟶Rn via Rn∋X⟼MX∈Rn. In this case we have to study the equation L(X)=V because V∈Rn.

Lets focus on the first two methods. In particular we want to think about how the augmented matrix method can give information about finding M−1. In particular, how it can be used for handling determinants.

The main idea is that the row operations changed the augmented matrices, but we also know how to change a matrix M by multiplying it by some other matrix E, so that M→EM. In particular can we find ``elementary matrices'' the perform row operations?

Once we find these elementary matrices is is very important to ask how they effect the determinant, but you can think about that for your own self right now.

Lets tabulate our names for the matrices that perform the various row operations:

$$\left(RowOperationElementaryMatrixRi↔RjEijRi→λRiRi(λ)Ri→Ri+λRjSij(λ)\right)\]

To finish off the video, here is how all these elementary matrices work for a 2×2 example. Lets take

M=(abcd).

A good thing to think about is what happens to detM=ad−bc under the operations below.

- Row swap: E12=(0110),E12M=(0110)(abcd)=(cdab).

- Scalar multiplying: R1(λ)=(λ001),E12M=(λ001)(abcd)=(λaλbcd).

- Row sum: S12(λ)=(1λ01),S12(λ)M=(1λ01)(abcd)=(a+λcb+λdcd).

Elementary Determinants

This video will show you how to calculate determinants of elementary matrices. First remember that the job of an elementary row matrix is to perform row operations, so that if E is an elementary row matrix and M some given matrix, EM is the matrix M with a row operation performed on it.

The next thing to remember is that the determinant of the identity is 1. Moreover, we also know what row operations do to determinants:

- Row swap Eij: flips the sign of the determinant.

- Scalar multiplication Ri(λ): multiplying a row by λ multiplies the determinant by λ.

- Row addition Sij(λ): adding some amount of one row to another does not change the determinant.

The corresponding elementary matrices are obtained by performing exactly these operations on the identity:

$$

E^{i}_{j}=(1⋱01⋱10⋱1)\, ,

\]

Ri(λ)=(1⋱λ⋱1),

Sij(λ)=(1⋱1λ⋱1⋱1)

So to calculate their determinants, we just have to apply the above list of what happens to the determinant of a matrix under row operations to the determinant of the identity. This yields

$$

\det E^{i}_{j}=-1\, ,\qquad

\det R^{i}(\lambda)=\lambda\, ,\qquad

\det S^{i}_{j}(\lambda)=1\, .

\]

Determinants and Inverses

Lets figure out the relationship between determinants and invertibility. If we have a system of equations Mx=b and we have the inverse M−1 then if we multiply on both sides we get x=M−1Mx=M−1b. If the inverse exists we can solve for x and get a solution that looks like a point.

So what could go wrong when we want solve a system of equations and get a solution that looks like a point? Something would go wrong if we didn't have enough equations for example if we were just given

x+y=1

or maybe, to make this a square matrix M we could write this as

x+y=10=0

The matrix for this would be

M=[1100]

and det(M)=0. When we compute the determinant, this row of all zeros gets multiplied in every term. If instead we were given redundant equations

x+y=12x+2y=2

The matrix for this would be

M=[1122] and det(M)=0. But we know that with an elementary row operation, we could replace the second row with a row of all zeros. Somehow the determinant is able to detect that there is only one equation here. Even if we had a set of contradictory set of equations such as

x+y=12x+2y=0,

where it is not possible for both of these equations to be true, the matrix M is still the same, and still has a determinant zero.

Lets look at a three by three example, where the third equation is the sum of the first two equations.

x+y+z=1y+z=1x+2y+2z=2

and the matrix for this is

M=[111011122]

If we were trying to find the inverse to this matrix using elementary matrices

(111100011010122001)=(111100011010000−1−11)

And we would be stuck here. The last row of all zeros cannot be converted into the bottom row of a 3×3 identity matrix. this matrix has no inverse, and the row of all zeros ensures that the determinant will be zero. It can be difficult to see when one of the rows of a matrix is a linear combination of the others, and what makes the determinant a useful tool is that with this reasonably simple computation we can find out if the matrix is invertible, and if the system will have a solution of a single point or column vector.

Alternative Proof

Here we will prove more directly that} the determinant of a product of matrices is the product of their determinants. First we reference that for a matrix M with rows ri, if M′ is the matrix with rows r′j=rj+λri for j≠i and r′i=ri, then det(M)=det(M′). Essentially we have M′ as M multiplied by the elementary row sum matrices Sij(λ). Hence we can create an upper-triangular matrix U such that det(M)=det(U) by first using the first row to set m1i↦0 for all i>1, then iteratively (increasing k by 1 each time) for fixed k using the k-th row to set mki↦0 for all i>k.

Now note that for two upper-triangular matrices U=(uji) and U′=(u′ji), by matrix multiplication we have X=UU′=(xji) is upper-triangular and xii=uiiu′ii. Also since every permutation would contain a lower diagonal entry (which is 0) have det(U)=∏iuii. Let A and A′ have corresponding upper-triangular matrices U and U′ respectively (i.e. det(A)=det(U)), we note that AA′ has a corresponding upper-triangular matrix UU′, and hence we have

det(AA′)=det(UU′)=∏iuiiu′ii=(∏iuii)(∏iu′ii)=det(U)det(U′)=det(A)det(A′).

Practice taking Determinants

Lets practice taking determinants of 2×2 and 3×3 matrices.

For 2×2 matrices we have a formula

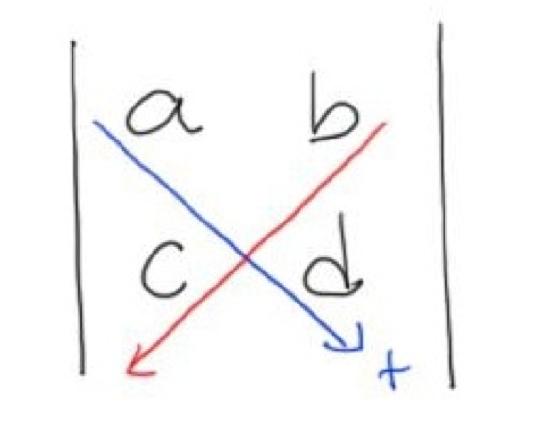

det(abcd)=ad−bc.

This formula might be easier to remember if you think about this picture.

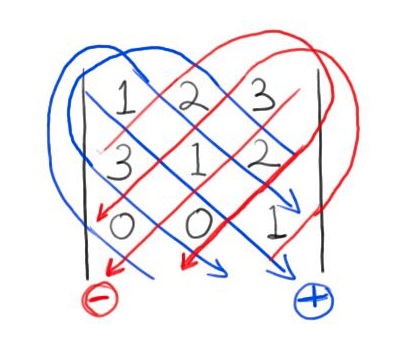

Now we can look at three by three matrices and see a few ways to compute the determinant. We have a similar pattern for 3×3 matrices.

Consider the example

det(123312001)=((1⋅1⋅1)+(2⋅2⋅0)+(3⋅3⋅0))−((3⋅1⋅0)+(1⋅2⋅0)+(3⋅2⋅1))=−5

We can draw a picture with similar diagonals to find the terms that will be positive and the terms that will be negative.

Another way to compute the determinant of a matrix is to use this recursive formula. Here I take the coefficients of the first row and multiply them by the determinant of the minors and the cofactor. Then we can use the formula for a two by two determinant to compute the determinant of the minors

det(123312001)=1|1201|−2|3201|+3|3100|=1(1−0)−2(3−0)+3(0−0)=−5

Decide which way you prefer and get good at taking determinants, you'll need to compute them in a lot of problems.

Hint for Review Problem 5

For an arbitrary 3×3 matrix A=(aij), we have

det(A)=a11a22a33+a12a23a31+a13a21a32−a11a23a32−a12a21a33−a13a22a31

and so the complexity is 5a+12m. Now note that in general, the complexity cn of the expansion minors formula of an arbitrary n×n matrix should be

cn=(n−1)a+ncn−1m

since det(A)=∑ni=1(−1)ia1icofactor(a1i) and cofactor(a1i) is an (n−1)×(n−1) matrix. This is one way to prove part (c).

G.8 Subspaces and Spanning Sets

Linear Systems as Spanning Sets

Suppose that we were given a set of linear equations lj(x1,x2,…,xn) and we want to find out if lj(X)=vj for all j for some vector V=(vj). We know that we can express this as the matrix equation

∑iljixi=vj

where lji is the coefficient of the variable xi in the equation lj. However, this is also stating that V is in the span of the vectors {Li}i where Li=(lji)j. For example, consider the set of equations

2x+3y−z=5−x+3y+z=1x+y−2z=3

which corresponds to the matrix equation

(23−1−13111−2)(xyz)=(513).

We can thus express this problem as determining if the vector

V=(513)

lies in the span of

{(2−11),(331),(−11−2)}.

Hint for Review Problem 2

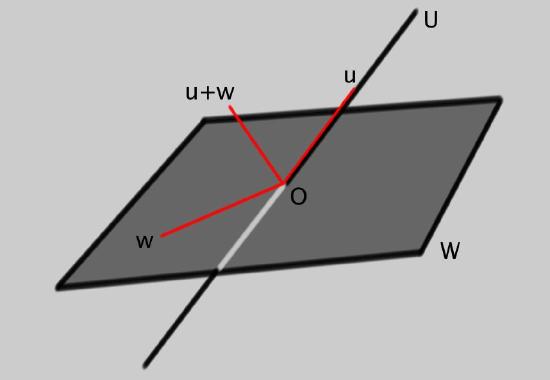

For the first part, try drawing an example in R3:

Here we have taken the subspace W to be a plane through the origin and U to be a line through the origin. The hint now is to think about what happens when you add a vector u∈U to a vector w∈W. Does this live in the union U∪W?

For the second part, we take a more theoretical approach. Lets suppose that v∈U∩W and v′∈U∩W. This implies

v∈Uandv′∈U.

So, since U is a subspace and all subspaces are vector spaces, we know that the linear combination

αv+βv′∈U.

Now repeat the same logic for W and you will be nearly done.

Contributor

David Cherney, Tom Denton, and Andrew Waldron (UC Davis)