18.9: Movie Scripts 5-6

( \newcommand{\kernel}{\mathrm{null}\,}\)

G.5: Linear Transformations

Hint for Review Question 5

The first thing we see in the problem is a definition of this new space Pn. Elements of Pn are polynomials that look like a0+a1t+a2t2+…+antn where the ai's are constants. So this means if L is a linear transformation from P2→P3 that the inputs of L are degree two polynomials which look like a0+a1t+a2t2 and the output will have degree three and look like b0+b1t+b2t2+b3t3

We also know that L is a linear transformation, so what does that mean in this case? Well, by linearity we know that we can separate out the sum, and pull out the constants so we get L(a0+a1t+a2t2)=a0L(1)+a1L(t)+a2L(t2) Just this should be really helpful for the first two parts of the problem. The third part of the problem is asking us to think about this as a linear algebra problem, so lets think about how we could write this in the vector notation we use in the class. We could write a0+a1t+a2t2 as (a0a1a2) And think for a second about how you add polynomials, you match up terms of the same degree and add the constants component-wise. So it makes some sense to think about polynomials this way, since vector addition is also component-wise.

We could also write the output b0+b1t+b2t2+b3t3 as (b0b1b2b3) Then lets look at the information given in the problem and think about it in terms of column vectors

- L(1)=4 but we can think of the input 1=1+0t+0t2 and the output 4=4+0t+0t20t3 and write this as L(100)=(4000)

- L(t)=t3 This can be written as L(010)=(0001)

- L(t2)=t−1 It might be a little trickier to figure out how to write t−1 but if we write the polynomial out with the terms in order and with zeroes next to the terms that do not appear, we can see that t−1=−1+t+0t2+0t3 corresponds to (−1100) So this can be written as L(001)=(−1100)

Now to think about how you would write the linear transformation L as a matrix, first think about what the dimensions of the matrix would be. Then look at the first two parts of this problem to help you figure out what the entries should be.

G.6: Matrices

Adjacency Matrix Example

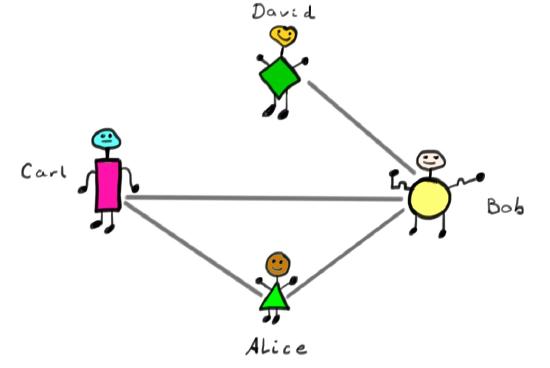

Lets think about a graph as a mini-facebook. In this tiny facebook there are only four people, Alice, Bob, Carl, and David.

Suppose we have the following relationships

- Alice and Bob are friends.

- Alice and Carl are friends.

- Carl and Bob are friends.

- David and Bob are friends.

Now draw a picture where each person is a dot, and then draw a line between the dots of people who are friends. This is an example of a graph if you think of the people as nodes, and the friendships as edges.

Now lets make a 4×4 matrix, which is an adjacency matrix for the graph. Make a column and a row for each of the four people. It will look a lot like a table. When two people are friends put a 1 in the row of one and the column of the other. For example Alice and Carl are friends so we can label the table below.

ABCDA1BC1D

We can continue to label the entries for each friendship. Here lets assume that people are friends with themselves, so the diagonal will be all ones.

ABCDA1110B1111C1110D0101

Then take the entries of this table as a matrix

(1110111111100101)

Notice that this table is symmetric across the diagonal, the same way a multiplication table would be symmetric. This is because on facebook friendship is symmetric in the sense that you can't be friends with someone if they aren't friends with you too. This is an example of a symmetric matrix.

You could think about what you would have to do differently to draw a graph for something like twitter where you don't have to follow everyone who follows you. The adjacency matrix might not be symmetric then.

Do Matrices Commute?

This video shows you a funny property of matrices. Some matrix properties look just like those for numbers. For example numbers obey

a(bc)=(ab)c

and so do matrices:

A(BC)=(AB)C.

This says the order of bracketing does not matter and is called associativity. Now we ask ourselves whether the basic property of numbers

ab=ba,

holds for matrices

AB?=BA.

For this, firstly note that we need to work with square matrices even for both orderings to even make sense. Lets take a simple 2×2 example, let

A=(1a01),B=(1b01),C=(10a1).

In fact, computing AB and BA we get the same result AB=BA=(1a+b01),

so this pair of matrices do commute. Lets try A and C:

AC=(1+a2aa1),andCA=(1aa1+a2)

so AC≠CA

and this pair of matrices does not commute. Generally, matrices usually do not commute, and the problem of finding those that do is a very interesting one.

Matrix Exponential Example

This video shows you how to compute

exp(0θ−θ0).

For this we need to remember that the matrix exponential is defined by its power series

expM:=I+M+12!M2+13!M3+⋯.

Now lets call

(0θ−θ0)=iθ

where the matrix

i:=(01−10)

and by matrix multiplication is seen to obey

i2=−I,i3=−i,i4=I.

Using these facts we compute by organizing terms according to whether they have an i or not:

expiθ=I+12!θ2(−I)+14!(+I)+⋯+iθ+13!θ3(−i)+15!i+⋯=I(1−12!θ2+14!θ4+⋯)+i(θ−13!θ3+15!θ5+⋯)=Icosθ+isinθ=(cosθsinθ−sinθcosθ).

Here we used the familiar Taylor series for the cosine and sine functions. A fun thing to think about is how the above matrix acts on vector in the plane.

Proof Explanation

In this video we will talk through the steps required to prove trMN=trNM\). We want to show that for S={v1,…,vn} a basis for a vector space V, then every vector w∈V can be written uniquely as a linear combination of vectors in the basis S:

w=c1v1+⋯+cnvn.

We should remember that since S is a basis for V, we know two things

- V=spanS

- v1,…,vn are linearly independent, which means that whenever we have a1v1+…+anvn=0 this implies that ai=0 for all i=1,…,n.

This first fact makes it easy to say that there exist constants ci such that w=c1v1+⋯+cnvn. What we don't yet know is that these c1,…cn are unique.

In order to show that these are unique, we will suppose that they are not, and show that this causes a contradiction. So suppose there exists a second set of constants di such that

w=d1v1+⋯+dnvn.

For this to be a contradiction we need to have ci≠di for some i. Then look what happens when we take the difference of these two versions of w:

0V=w−w=(c1v1+⋯+cnvn)−(d1v1+⋯+dnvn)=(c1−d1)v1+⋯+(cn−dn)vn.

Since the vi's are linearly independent this implies that ci−di=0 for all i, this means that we cannot have ci≠di, which is a contradiction.

Hint for Review Question 4

This problem just amounts to remembering that the dot product of x=(x1,x2,…,xn) and y=(y1,y2,…,yn) is

x1y1+x2y2+⋯+xnyn.

Then try multiplying the above row vector times yT and compare.

Hint for Review Problem 5

The majority of the problem comes down to showing that matrices are right distributive. Let Mk is all n×k matrices for any n, and define the map fR:Mk→Mm by fR(M)=MR where R is some k×m matrix. It should be clear that fR(α⋅M)=(αM)R=α(MR)=αfR(M) for any scalar α. Now all that needs to be proved is that

fR(M+N)=(M+N)R=MR+NR=fR(M)+fR(N),

and you can show this by looking at each entry.

We can actually generalize the concept of this problem. Let V be some vector space and M be some collection of matrices, and we say that M is a left-action on V if

(M⋅N)∘v=M∘(N∘v)

for all M,N∈N and v∈V where ⋅ denoted multiplication in M (i.e. standard matrix multiplication) and ∘ denotes the matrix is a linear map on a vector (i.e. M(v)). There is a corresponding notion of a right action where

v∘(M⋅N)=(v∘M)∘N

where we treat v∘M as M(v) as before, and note the order in which the matrices are applied. People will often omit the left or right because they are essentially the same, and just say that M acts on V.

Hint for Review Question 8

This is a hint for computing exponents of matrices. So what is eA if A is a matrix? We remember that the Taylor series for

ex=∞∑n=0xnn!.

So as matrices we can think about

eA=∞∑n=0Ann!.

This means we are going to have an idea of what An looks like for any n. Lets look at the example of one of the matrices in the problem. Let

A=(1λ01).

Lets compute An for the first few n.

A0=(1001)A1=(1λ01)A2=A⋅A=(12λ01)A3=A2⋅A=(13λ01).

There is a pattern here which is that

An=(1nλ01),

then we can think about the first few terms of the sequence

eA=∞∑n=0Ann!=A0+A+12!ßA2+13!A3+….

Looking at the entries when we add this we get that the upper left-most entry looks like this:

1+1+12+13!+…=∞∑n=01n!=e1.

Continue this process with each of the entries using what you know about Taylor series expansions to find the sum of each entry.

Hint for Review Problem 3

First note that (b) implies (a) is the easy direction: just think about what it means for M to be non-singular and for a linear function to be well-defined. Therefore we assume that M is singular which implies that there exists a non-zero vector X0 such that MX0=0. Now assume there exists some vector XV such that MXV=V, and look at what happens to XV+c⋅X0 for any c in your field. Lastly don't forget to address what happens if XV does not exist.

Hint for Review Problem 4

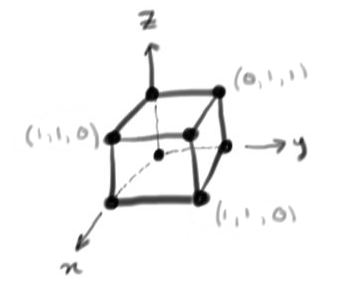

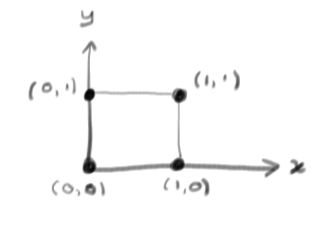

In the text, only inverses for square matrices were discussed, but there is a notion of left and right inverses for matrices that are not square. It helps to look at an example with bits to see why. To start with we look at vector spaces

Z32={(x,y,z)|x,y,z=0,1} and Z22={(x,y)|x,y=0,1}.

These have 8 and 4 vectors, respectively, that can be depicted as corners of a cube or square:

Z32

or Z22

Now lets consider a linear transformation

L:Z32⟶Z22.

This must be represented by a matrix, and lets take the example

L(xyz)=(011110)(xyz):=AX.

Since we have bits, we can work out what $L$ does to every vector, this is listed below

(0,0,0)L↦(0,0)(0,0,1)L↦(1,0)(1,1,0)L↦(1,0)(1,0,0)L↦(0,1)(0,1,1)L↦(0,1)(0,1,0)L↦(1,1)(1,0,1)L↦(1,1)(1,1,1)L↦(1,1)

Now lets think about left and right inverses. A left inverse B to the matrix A

would obey

BA=I

and since the identity matrix is square, B must be 2×3. It would have to undo the action of A and return vectors in Z32 to where they started from. But above, we see that different vectors in Z32 are mapped to the same vector in Z22 by the linear transformation L with matrix A. So B cannot exist. However a right inverse C obeying

AC=I

can. It would be 2×2. Its job is to take a vector in Z22 back to one in Z32 in a way that gets undone by the action of A. This can be done, but not uniquely.

Using an LU Decomposition

Lets go through how to use a LU decomposition to speed up solving a system of equations. Suppose you want to solve for x in the equation Mx=b

(10−53−1−1410−3)x=(6194)

where you are given the decomposition of M into the product of L and U which are lower and upper and lower triangular matrices respectively.

M=(10−53−1−1410−3)=(100310102)(10−50−11001)=LU

First you should solve L(Ux)=b for Ux. The augmented matrix you would use looks like this

(1006310191024)

This is an easy augmented matrix to solve because it is upper triangular. If you were to write out the three equations using variables, you would find that the first equation has already been solved, and is ready to be plugged into the second equation. This backward substitution makes solving the system much faster. Try it and in a few steps you should be able to get

(10060101001−1)

This tells us that Ux=(61−1). Now the second part of the problem is to solve for x. The augmented matrix you get is

(10−560−111001−1)

It should take only a few step to transform it into

(1001010−2001−1),

which gives us the answer x=(1−2−1).

Another LU Decomposition Example

Here we will perform an LU decomposition on the matrix

M=(172−3−214163)

following the procedure outlined in Section 7.7.2. So initially we have L1=I3 and U1=M, and hence

L2=(100−310101)U2=(17200100−1−1).

However we now have a problem since 0⋅c=0 for any value of c since we are working over a field, but we can quickly remedy this by swapping the second and third rows of U2 to get U′2 and note that we just interchange the corresponding rows all columns left of and including the column we added values to in L2 to get L′2. Yet this gives us a small problem as L′2U′2≠M; in fact it gives us the similar matrix M′ with the second and third rows swapped. In our original problem MX=V, we also need to make the corresponding swap on our vector V to get a V′ since all of this amounts to changing the order of our two equations, and note that this clearly does not change the solution. Back to our example, we have

L′2=(100110−301)U′2=(1720−1−10010),

and note that U′2 is upper triangular. Finally you can easily see that

L′2U′2=(172163−3−214)=M′

which solves the problem of L′2U′2X=M′X=V′. (We note that as augmented matrices (M′|V′)∼(M|V).)

Block LDU Explanation

This video explains how to do a block LDU decomposition. Firstly remember some key facts about block matrices: It is important that the blocks fit together properly. For example, if we have matrices

matrixshapeXr×rYr×tZt×rWt×t

we could fit these together as a (r+t)×(r+t) square block matrix

M=(XYZW).

Matrix multiplication works for blocks just as for matrix entries:

M2=(XYZW)(XYZW)=(X2+YZXY+YWZX+WZZY+W2).

Now lets specialize to the case where the square matrix X has an inverse. Then we can multiply out the following triple product of a lower triangular, a block diagonal and an upper triangular matrix:

(I0ZX−1I)(X00W−ZX−1Y)(IX−1Y0I) =(X0ZW−ZX−1Y)(IX−1Y0I) =(XYZX−1Y+ZW−ZX−1Y)

=(XYZW)=M.

This shows that the LDU decomposition given in Section 7.7 is correct.

Contributor

David Cherney, Tom Denton, and Andrew Waldron (UC Davis)