7: Iterative Methods

( \newcommand{\kernel}{\mathrm{null}\,}\)

Iterative methods are often used for solving a system of nonlinear equations. Even for linear systems, iterative methods have some advantages. They may require less memory and may be computationally faster. They are also easier to code. Here, without detailing the theoretical numerical analysis, we will simply explain the related iterative methods that go by the names of the Jacobi method, the Gauss-Seidel method, and the Successive Over Relaxation method (or SOR). We illustrate these three methods by showing how to solve the two-dimensional Poisson equation, an equation that we will later need to solve to determine the flow field past an obstacle

The Poisson equation is given by

−∇2Φ=f,

where

∇2=∂2∂x2+∂2∂y2

is the usual two-dimensional Laplacian. This could be a linear equation with f independent of Φ, or a nonlinear equation where f may be some nonlinear function of Φ.

After discretizing the Poisson equation using the centered finite difference approximation for the second derivatives, we obtain on a grid with uniform grid spacing h,

4Φi,j−Φi+1,j−Φi−1,j−Φi,j+1−Φi,j−1=h2fi,j.

The Jacobi method simply solves the discretized equation (7.1) for Φi,j iteratively. With superscripts indicating iteration steps, we have

Φ(n+1)i,j=14(Φ(n)i+1,j+Φ(n)i−1,j+Φ(n)i,j+1+Φ(n)i,j−1+h2f(n)i,j).

In the old FORTRAN-77 scientific programming language, implementing the Jacobi method required the saving of Φ in two different arrays, one corresponding to the n-th iteration, and one corresponding to the (n+1)-st iteration. When the iteration was done with a single array, the method was called Gauss-Seidel. In the standard Gauss-Seidel method, the array was updated row-by-row and had the form

Φ(n+1)i,j=14(Φ(n)i+1,j+Φ(n+1)i−1,j+Φ(n)i,j+1+Φ(n+1)i,j−1+h2f(n)i,j).

The Gauss-Seidel method had the double advantage of requiring less memory and converging more rapidly than the Jacobi method.

A variant of Gauss-Seidel that can also be found in textbooks is called red-black Gauss-Seidel. In this algorithm, the grid is viewed as a checkerboard with red and black squares. An updating of Φi,j is done in two passes: in the first pass, Φi,j is updated only on the red squares; in the second pass, only on the black squares. Because of the structure of the Laplacian finite difference operator, when solving ∇2Φ=0 the updated values of Φ on the red squares depend only on the values of Φ on the black squares, and the updated values on the black squares depend only on the red squares.

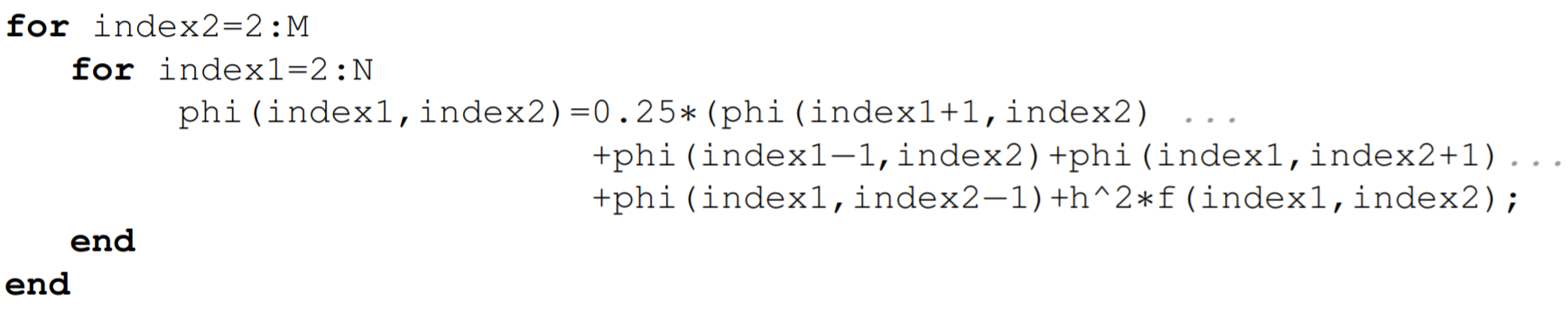

Translating FORTRAN-77 directly into MATLAB, the Gauss-Seidel method in the standard form could be implemented on a uniform grid with N−1 and M−1 internal grid points in the x-and y-directions, respectively, as

Nowadays, however, programming languages such as MATLAB operate on vectors, which are optimized for modern computers, and the explicit loops that used to be the workhorse of FORTRAN77 are now vectorized.

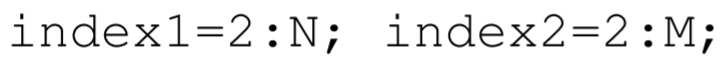

So to properly implement the Jacobi method, say, in MATLAB, one can predefine the vectors index 1 and index 2 that contain the index numbers of the first and second indices of the matrix variable phi. These definitions would be

where indexl and index 2 reference the internal grid points of the domain. The variable phi is assumed to be known on the boundaries of the domain corresponding to the indices (1,1), (N+1,1),(1,M+1), and (N+1,M+1). The Jacobi method in MATLAB can then be coded in one line as

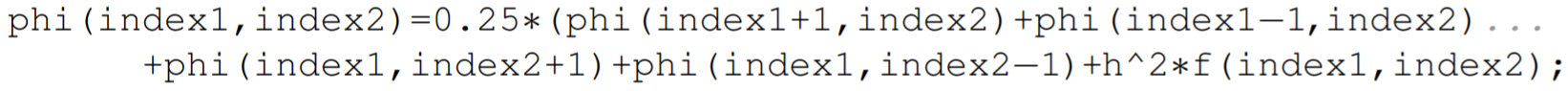

The red-black Gauss-Seidel method could be implemented in a somewhat similar fashion. One must now predefine the vectors even 1, odd1, even 2, and odd2, with definitions

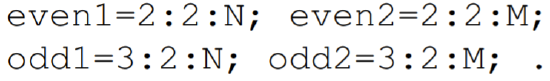

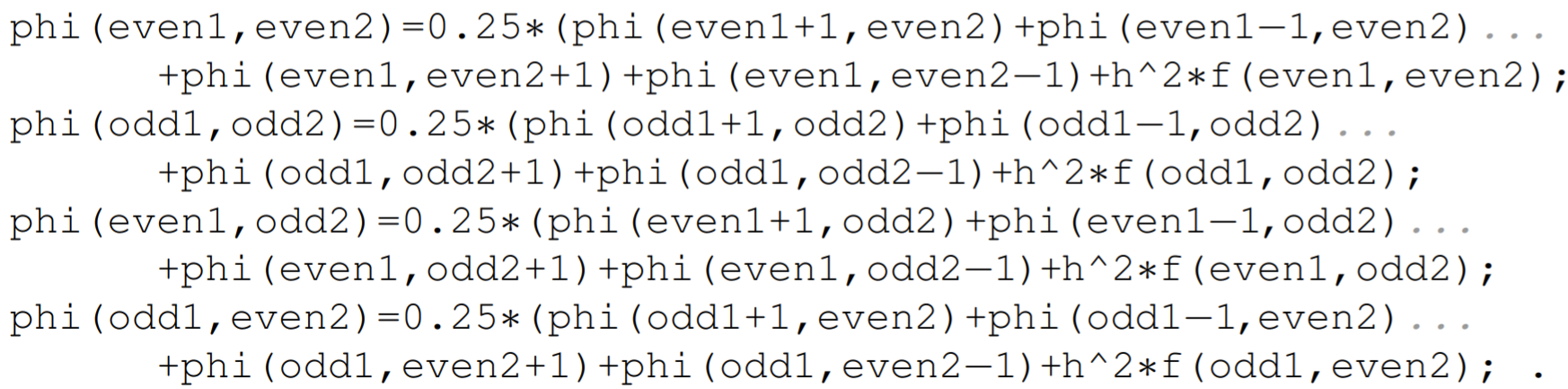

The red-black Gauss-Seidel method then requires the following four coding lines to implement:

Each iteration of the red-black Gauss-Seidel method will run slower than that of the Jacobi method, and the red-black Gauss-Seidel method will only be useful if the slower iterations are compensated by a faster convergence. In practice, however, the Jacobi method or the red-black Gauss Seidel method is replaced by the corresponding Successive Over Relaxation method (SOR method). We will illustrate this method using the Jacobi method, though the better approach is to use red-black Gauss-Seidel.

The Jacobi method is first rewritten by adding and subtracting the diagonal term Φ(n)i,j :

Φ(n+1)i,j=Φ(n)i,j+14(Φ(n)i+1,j+Φ(n)i−1,j+Φ(n)i,j+1+Φ(n)i,j−1−4Φ(n)i,j+h2f(n)i,j)

In this form, we see that the Jacobi method updates the value of Φi,j at each iteration. We can either magnify or diminish this update by introducing a relaxation parameter λ. We have

Φ(n+1)i,j=Φ(n)i,j+λ4(Φ(n)i+1,j+Φ(n)i−1,j+Φ(n)i,j+1+Φ(n)i,j−1−4Φ(n)i,j+h2f(n)i,j)

which can be written more efficiently as

Φ(n+1)i,j=(1−λ)Φ(n)i,j+λ4(Φ(n)i+1,j+Φ(n)i−1,j+Φ(n)i,j+1+Φ(n)i,j−1+h2f(n)i,j)

When used with Gauss-Seidel, a value of λ in the range 1<λ<2 can often be used to accelerate convergence of the iteration. When λ>1, the modifier over in Successive Over Relaxation is apt. When the right-hand-side of the Poisson equation is a nonlinear function of Φ, however, the λ=1 Gauss-Seidel method may fail to converge. In this case, it may be reasonable to choose a value of λ less than one, and perhaps a better name for the method would be Successive Under Relaxation. When under relaxing, the convergence of the iteration will of course be slowed. But this is the cost that must sometimes be paid for stability.

Newton’s method for a system of nonlinear equations

A system of nonlinear equations can be solved using a version of the iterative Newton’s Method for root finding. Although in practice, Newton’s method is often applied to a large nonlinear system, we will illustrate the method here for the simple system of two equations and two unknowns.

Suppose that we want to solve

f(x,y)=0,g(x,y)=0,

for the unknowns x and y. We want to construct two simultaneous sequences x0,x1,x2,… and y0,y1,y2,… that converge to the roots. To construct these sequences, we Taylor series expand f(xn+1,yn+1) and g(xn+1,yn+1) about the point (xn,yn). Using the partial derivatives fx= ∂f/∂x,fy=∂f/∂y, etc., the two-dimensional Taylor series expansions, displaying only the linear terms, are given by

f(xn+1,yn+1)=f(xn,yn)+(xn+1−xn)fx(xn,yn)+(yn+1−yn)fy(xn,yn)+…g(xn+1,yn+1)=g(xn,yn)+(xn+1−xn)gx(xn,yn)+(yn+1−yn)gy(xn,yn)+….

To obtain Newton’s method, we take f(xn+1,yn+1)=0,g(xn+1,yn+1)=0, and drop higherorder terms above linear. Although one can then find a system of linear equations for xn+1 and yn+1, it is more convenient to define the variables

Δxn=xn+1−xn,Δyn=yn+1−yn

The iteration equations will then be given by

xn+1=xn+Δxn,yn+1=yn+Δyn

and the linear equations to be solved for Δxn and Δyn are given by

(fxfygxgy)(ΔxnΔyn)=(−f−g),

where f,g,fx,fy,gx, and gy are all evaluated at the point (xn,yn). The two-dimensional case is easily generalized to n dimensions. The matrix of partial derivatives is called the Jacobian Matrix.

We illustrate Newton’s Method by finding numerically the steady state solution of the Lorenz equations, given by

σ(y−x)=0,rx−y−xz=0,xy−bz=0,

where x,y, and z are the unknown variables and σ,r, and b are the known parameters. Here, we have a three-dimensional homogeneous system f=0,g=0, and h=0, with

f(x,y,z)=σ(y−x),g(x,y,z)=rx−y−xz,h(x,y,z)=xy−bz.

The partial derivatives can be computed to be

fx=−σ,fy=σ,fz=0,gx=r−z,gy=−1,gz=−x,hx=y,hy=x,hz=−b.

The iteration equation is therefore

(−σσ0r−zn−1−xnynxn−b)(ΔxnΔynΔzn)=−(σ(yn−xn)rxn−yn−xnznxnyn−bzn)

with

xn+1=xn+Δxn,yn+1=yn+Δyn,zn+1=zn+Δzn.

The sample MATLAB program that solves this system is in the m-file newton_system.m.