3.3: Higher Order Taylor Methods


Euler’s method for solving differential equations is easy to understand, but it is not efficient because it is only a first order method. The error at each step, the local truncation error, is of order $\Delta x$, for $x$ the independent variable. The accumulation of the local truncation errors results in what is called the global error. In order to generalize Euler’s Method, we need to rederive it. Also, since these methods are typically used for initial value problems, we will cast the problem to be solved as

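$$\frac{dy}{dt} = f(t,y), \quad y(a) = y_0, \quad t \in [a,b].$$

The first step towards obtaining a numerical approximation to the solution of this problem is to divide the $t$-interval, $[a,b]$, into $N$ subintervals,

$$t_i = a + ih, \quad i = 0,1,\ldots,N, \quad t_0 = a, \quad t_N = b,$$

where

$$h = \frac{b-a}{N}.$$

We then seek the numerical solutions

$$\tilde{y}_i \approx y(t_i), \quad i = 1,2,\ldots,N,$$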

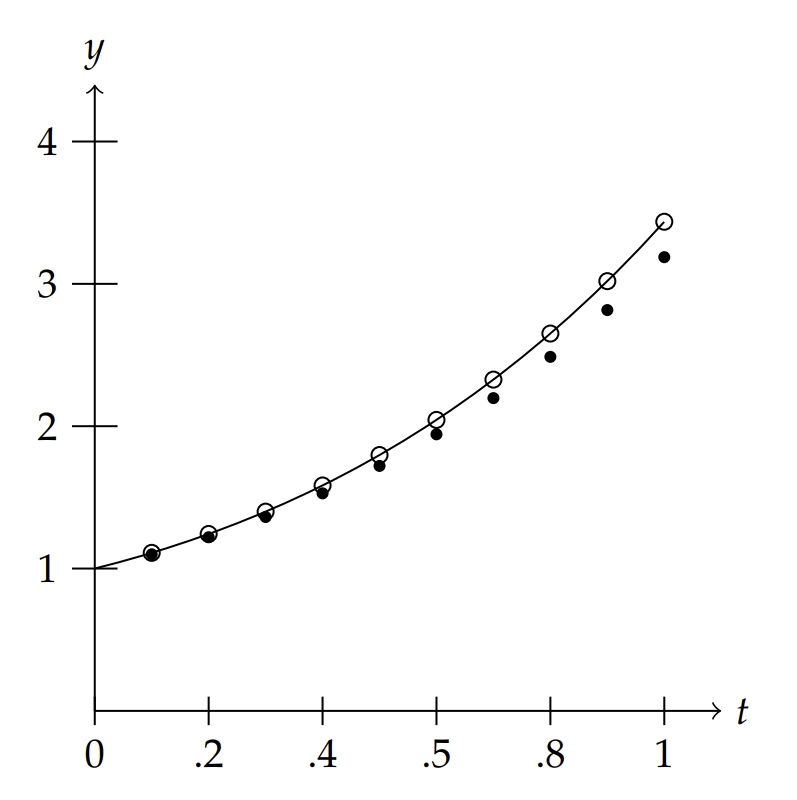

with $\tilde{y}_0 = y(t_0) = y_0$. Figure 3.17 graphically shows how these quantities are related.

Euler’s Method can be derived using the Taylor series expansion of the solution $y(t_i + h)$ about $t = t_i$ for $i = 0,1,\ldots,N-1$. This is given by

$$y(t_{i+1}) = y(t_i + h) = y(t_i) + y'(t_i)h + \frac{h^2}{2} y''(\xi_i), \quad \xi_i \in (t_i, t_{i+1}).$$

Here the term $\frac{h^2}{2} y''(\xi_i)$ captures all of the higher order terms and represents the error made using a linear approximation to $y(t_i + h)$. Dropping the remainder term, noting that $y'(t) = f(t,y)$, and defining the resulting numerical approximations by $\tilde{y}_i \approx y(t_i)$, we have

$$\tilde{y}_{i+1} = \tilde{y}_i + h f(t_i, \tilde{y}_i), \quad i = 0,1,\ldots,N-1, \quad \tilde{y}_0 = y(a) = y_0. \tag{3.11}$$

This is Euler’s Method.
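As a quick illustration of the recursion, consider the problem $\frac{dy}{dt} = t + y$, $y(0) = 1$, which is treated in the example later in this section, with $h = 0.1$. The first two Euler steps work out as follows:

$$\begin{aligned}
\tilde{y}_1 &= \tilde{y}_0 + h f(t_0, \tilde{y}_0) = 1 + 0.1\,(0 + 1) = 1.1, \\
\tilde{y}_2 &= \tilde{y}_1 + h f(t_1, \tilde{y}_1) = 1.1 + 0.1\,(0.1 + 1.1) = 1.22.
\end{aligned}$$

These values agree with the first Euler entries in the table of numerical values given for that example.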

Euler’s Method is not used in practice since the error is of order h. However, it is simple enough for understanding the idea of solving differential equations numerically. Also, it is easy to study the numerical error, which we will show next.

The error that results for a single step of the method is called the local truncation error, which is defined by

$$\tau_{i+1}(h) = \frac{y(t_{i+1}) - y_i}{h} - f(t_i, y_i),$$

where $y_i = y(t_i)$ denotes the exact solution at $t_i$.

A simple computation gives

$$\tau_{i+1}(h) = \frac{h}{2} y''(\xi_i), \quad \xi_i \in (t_i, t_{i+1}).$$
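The computation behind this result can be sketched as follows. It assumes that the quantities in the definition are evaluated on the exact solution, so that $f(t_i, y(t_i)) = y'(t_i)$, and it uses the Taylor expansion of $y(t_{i+1})$ about $t_i$ given earlier:

$$\begin{aligned}
\tau_{i+1}(h) &= \frac{y(t_{i+1}) - y(t_i)}{h} - f(t_i, y(t_i)) \\
&= \frac{h\,y'(t_i) + \frac{h^2}{2} y''(\xi_i)}{h} - y'(t_i) \\
&= \frac{h}{2} y''(\xi_i).
\end{aligned}$$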

Since the local truncation error is of order h, this scheme is said to be of order one. More generally, for a numerical scheme of the form

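$$\tilde{y}_{i+1} = \tilde{y}_i + h F(t_i, \tilde{y}_i), \quad i = 0,1,\ldots,N-1, \quad \tilde{y}_0 = y(a) = y_0,$$

the local truncation error is defined by

$$\tau_{i+1}(h) = \frac{y(t_{i+1}) - y_i}{h} - F(t_i, y_i),$$

where $y_i = y(t_i)$ denotes the exact solution at $t_i$.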

The accumulation of these errors leads to the global error. In fact, one can show that if f is continuous, satisfies the Lipschitz condition,

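$$|f(t, y_2) - f(t, y_1)| \leq L\,|y_2 - y_1|$$

for a particular domain $D \subset \mathbb{R}^2$, and

$$|y''(t)| \leq M, \quad t \in [a,b],$$

then

$$|y(t_i) - \tilde{y}_i| \leq \frac{hM}{2L}\left(e^{L(t_i - a)} - 1\right), \quad i = 0,1,\ldots,N.$$

Furthermore, if one introduces round-off errors, bounded by $\delta$, in both the initial condition and at each step, the global error is modified as

$$|y(t_i) - \tilde{y}_i| \leq \frac{1}{L}\left(\frac{hM}{2} + \frac{\delta}{h}\right)\left(e^{L(t_i - a)} - 1\right) + |\delta_0|\, e^{L(t_i - a)}, \quad i = 0,1,\ldots,N.$$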

Then for small enough steps h, there is a point when the round-off error will dominate the error. [See Burden and Faires, Numerical Analysis for the details.]
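To see roughly where the round-off term takes over, one can minimize the $h$-dependent factor in the bound. The following is only a sketch, assuming $M$ and $\delta$ are known positive constants; the name $E(h)$ is introduced here for illustration:

$$E(h) = \frac{hM}{2} + \frac{\delta}{h}, \qquad E'(h) = \frac{M}{2} - \frac{\delta}{h^2} = 0 \quad \Longrightarrow \quad h = \sqrt{\frac{2\delta}{M}}.$$

For step sizes below this value the $\delta/h$ term grows, so the bound increases as $h$ decreases further.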

Can we improve upon Euler’s Method? The natural next step towards finding a better scheme would be to keep more terms in the Taylor series expansion. This leads to Taylor series methods of order n.

Taylor series methods of order n take the form

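$$\tilde{y}_{i+1} = \tilde{y}_i + h T^{(n)}(t_i, \tilde{y}_i), \quad i = 0,1,\ldots,N-1, \quad \tilde{y}_0 = y_0,$$

where we have defined

$$T^{(n)}(t,y) = y'(t) + \frac{h}{2} y''(t) + \cdots + \frac{h^{n-1}}{n!} y^{(n)}(t).$$

However, since $y'(t) = f(t,y)$, we can write

$$T^{(n)}(t,y) = f(t,y) + \frac{h}{2} f'(t,y) + \cdots + \frac{h^{n-1}}{n!} f^{(n-1)}(t,y).$$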
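Here the primes on $f$ denote total derivatives with respect to $t$ along the solution $y(t)$, so the chain rule applies. As a sketch of what this means for $n = 2$, writing $f_t$ and $f_y$ for the partial derivatives of $f$ (notation introduced here, not used elsewhere in this section):

$$\begin{aligned}
f'(t,y) &= \frac{d}{dt} f(t, y(t)) = f_t(t,y) + f_y(t,y)\,y'(t) = f_t(t,y) + f_y(t,y)\,f(t,y), \\
T^{(2)}(t,y) &= f(t,y) + \frac{h}{2}\left[ f_t(t,y) + f_y(t,y)\,f(t,y) \right].
\end{aligned}$$

Each additional order requires one more total derivative of $f$, which is the main practical cost of these methods.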

We note that for n=1, we retrieve Euler’s Method as a special case. We demonstrate a third order Taylor’s Method in the next example.

Apply the third order Taylor’s Method to

$$\frac{dy}{dt} = t + y, \quad y(0) = 1,$$

and obtain an approximation for $y(1)$ for $h = 0.1$.

Solution

The third order Taylor’s Method takes the form

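$$\tilde{y}_{i+1} = \tilde{y}_i + h T^{(3)}(t_i, \tilde{y}_i), \quad i = 0,1,\ldots,N-1, \quad \tilde{y}_0 = y_0,$$

where

$$T^{(3)}(t,y) = f(t,y) + \frac{h}{2} f'(t,y) + \frac{h^2}{3!} f''(t,y)$$

and $f(t,y) = t + y(t)$.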

In order to set up the scheme, we need the first and second derivatives of $f(t,y)$:

$$f'(t,y) = \frac{d}{dt}(t + y) = 1 + y' = 1 + t + y,$$

$$f''(t,y) = \frac{d}{dt}(1 + t + y) = 1 + y' = 1 + t + y.$$

Inserting these expressions into the scheme, we have

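$$\begin{aligned}
\tilde{y}_{i+1} &= \tilde{y}_i + h\left[(t_i + \tilde{y}_i) + \frac{h}{2}(1 + t_i + \tilde{y}_i) + \frac{h^2}{3!}(1 + t_i + \tilde{y}_i)\right] \\
&= \tilde{y}_i + h(t_i + \tilde{y}_i) + h^2\left(\frac{1}{2} + \frac{h}{6}\right)(1 + t_i + \tilde{y}_i), \\
\tilde{y}_0 &= y_0,
\end{aligned}$$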

for i=0,1,…,N−1.
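As a check on the scheme, the first step with $h = 0.1$, $t_0 = 0$, and $\tilde{y}_0 = 1$ can be worked by hand:

$$\tilde{y}_1 = 1 + 0.1\,(0 + 1) + (0.1)^2\left(\frac{1}{2} + \frac{0.1}{6}\right)(1 + 0 + 1) \approx 1 + 0.1 + 0.010333 = 1.110333.$$

This can be compared with the exact solution $y(t) = 2e^t - t - 1$ of this problem, which gives $y(0.1) \approx 1.110342$.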

In Figure 3.1.1 we show the results comparing Euler’s Method, the 3rd Order Taylor’s Method, and the exact solution for $N = 10$. In Table 3.3.1 we provide the numerical values. At $t = 1$ the relative error in Euler’s Method is about 7% and that of the 3rd Order Taylor’s Method is about 0.006%. Thus, the 3rd Order Taylor’s Method is significantly better than Euler’s Method.

Table 3.3.1: Numerical values for Euler’s Method, the 3rd Order Taylor’s Method, and the exact solution for $N = 10$.

| t | Euler | Taylor | Exact |
|---|---|---|---|
| 0.0 | 1.0000 | 1.0000 | 1.0000 |
| 0.1 | 1.1000 | 1.1103 | 1.1103 |
| 0.2 | 1.2200 | 1.2428 | 1.2428 |
| 0.3 | 1.3620 | 1.3997 | 1.3997 |
| 0.4 | 1.5282 | 1.5836 | 1.5836 |
| 0.5 | 1.7210 | 1.7974 | 1.7974 |
| 0.6 | 1.9431 | 2.0442 | 2.0442 |
| 0.7 | 2.1974 | 2.3274 | 2.3275 |
| 0.8 | 2.4872 | 2.6509 | 2.6511 |
| 0.9 | 2.8159 | 3.0190 | 3.0192 |
| 1.0 | 3.1875 | 3.4364 | 3.4366 |

In the last section we provided some Maple code for performing Euler’s method. A similar code in MATLAB looks like the following:

```matlab
% Interval [a,b], number of steps N, and step size h
a = 0;
b = 1;
N = 10;
h = (b-a)/N;

% Slope function and exact solution
% (anonymous functions are used in place of the older inline)
f = @(t,y) t + y;
sol = @(t) 2*exp(t) - t - 1;

% Initial Condition
t(1) = a;
y(1) = 1;

% Euler's Method
for i = 2:N+1
    y(i) = y(i-1) + h*f(t(i-1), y(i-1));
    t(i) = t(i-1) + h;
end
```

A simple modification can be made for the 3rd Order Taylor’s Method by replacing the Euler’s Method part of the preceding code with

```matlab
% Taylor's Method, Order 3
y(1) = 1;
h3 = h^2*(1/2 + h/6);   % combines the h^2/2 and h^3/3! coefficients
for i = 2:N+1
    y(i) = y(i-1) + h*f(t(i-1), y(i-1)) + h3*(1 + t(i-1) + y(i-1));
    t(i) = t(i-1) + h;
end
```
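One way to check the orders of the two methods numerically is to halve the step size and watch how the error at $t = 1$ shrinks. The following is a minimal, self-contained sketch for the same problem, $\frac{dy}{dt} = t + y$, $y(0) = 1$. The names `Ns`, `errE`, `errT`, `ratioE`, and `ratioT` are illustrative choices and do not come from the text.

```matlab
% Sketch: errors of Euler and third order Taylor at t = 1 as h is halved.
f   = @(t,y) t + y;              % slope function
sol = @(t) 2*exp(t) - t - 1;     % exact solution
a = 0;  b = 1;  y0 = 1;
Ns = [10 20 40];                 % numbers of subintervals to try (assumed)
errE = zeros(size(Ns));          % Euler errors at t = b
errT = zeros(size(Ns));          % Taylor (order 3) errors at t = b
for k = 1:numel(Ns)
    N = Ns(k);
    h = (b-a)/N;
    h3 = h^2*(1/2 + h/6);        % combines the h^2/2 and h^3/3! coefficients
    t = a;  yE = y0;  yT = y0;
    for i = 1:N
        yE = yE + h*f(t,yE);                      % Euler step
        yT = yT + h*f(t,yT) + h3*(1 + t + yT);    % Taylor step, order 3
        t = t + h;
    end
    errE(k) = abs(sol(b) - yE);
    errT(k) = abs(sol(b) - yT);
end
% Ratios of successive errors when h is halved
ratioE = errE(1:end-1)./errE(2:end);
ratioT = errT(1:end-1)./errT(2:end);
fprintf('N = %3d   Euler error = %.3e   Taylor error = %.3e\n', [Ns; errE; errT]);
disp(ratioE);
disp(ratioT);
```

For a method whose global error behaves like $h^p$, halving $h$ should reduce the error by a factor of roughly $2^p$, so ratios near 2 are consistent with order one and ratios near 8 with order three.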

While the accuracy in the last example seemed sufficient, we have to remember that we only stopped at one unit of time. How can we be confident that the scheme would work as well if we carried out the computation for much longer times? For example, if the time unit were only a second, then one would need to compute 86,400 times longer to predict a day forward. Of course, the scale matters. But often we need to carry out numerical schemes for long times, and we hope that the scheme not only converges to a solution, but that it converges to the solution of the given problem.

Also, the previous example was relatively easy to program because we could provide a relatively simple form for $T^{(3)}(t,y)$ with a quick computation of the derivatives of $f(t,y)$. This is not always the case, and higher order Taylor methods in this form are not typically used. Instead, one can approximate $T^{(n)}(t,y)$ by evaluating the known function $f(t,y)$ at selected values of $t$ and $y$, leading to Runge-Kutta methods.