2.4: Vector Solutions to Linear Systems


- T/F: The equation A→x=→b is just another way of writing a system of linear equations.

- T/F: In solving A→x=→0, if there are 3 free variables, then the solution will be “pulled apart” into 3 vectors.

- T/F: A homogeneous system of linear equations is one in which all of the coefficients are 0.

- Whether or not the equation A→x=→b has a solution depends on an intrinsic property of _____.

The first chapter of this text was spent finding solutions to systems of linear equations. We have spent the first two sections of this chapter learning operations that can be performed with matrices. One may have wondered “Are the ideas of the first chapter related to what we have been doing recently?” The answer is yes, these ideas are related. This section begins to show that relationship.

We have often hearkened back to previous algebra experience to help understand matrix algebra concepts. We do that again here. Consider the equation ax=b, where a=3 and b=6. If we asked one to “solve for x,” what exactly would we be asking? We would want to find a number, which we call x, where a times x gives b; in this case, it is a number, when multiplied by 3, returns 6.

Now we consider matrix algebra expressions. We’ll eventually consider solving equations like AX=B, where we know what the matrices A and B are and we want to find the matrix X. For now, we’ll only consider equations of the type A→x=→b, where we know the matrix A and the vector →b. We will want to find what vector →x satisfies this equation; we want to “solve for →x.”

To help understand what this is asking, we’ll consider an example. Let

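\[
A = \begin{bmatrix} 1 & 1 & 1 \\ 1 & -1 & 2 \\ 2 & 0 & 1 \end{bmatrix}, \quad \vec{b} = \begin{bmatrix} 2 \\ -3 \\ 1 \end{bmatrix} \quad \text{and} \quad \vec{x} = \begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix}.
\]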

(We don’t know what →x is, so we have to represent its entries with the variables x1, x2 and x3.) Let’s “solve for →x,” given the equation A→x=→b.

We can multiply out the left hand side of this equation. We find that

\[
A\vec{x} = \begin{bmatrix} x_1 + x_2 + x_3 \\ x_1 - x_2 + 2x_3 \\ 2x_1 + x_3 \end{bmatrix}.
\]

Be sure to note that the product is just a vector; it has just one column.

Since A→x is equal to →b, we have

\[
\begin{bmatrix} x_1 + x_2 + x_3 \\ x_1 - x_2 + 2x_3 \\ 2x_1 + x_3 \end{bmatrix} = \begin{bmatrix} 2 \\ -3 \\ 1 \end{bmatrix}.
\]

Knowing that two vectors are equal only when their corresponding entries are equal, we know

\[
\begin{aligned}
x_1 + x_2 + x_3 &= 2 \\
x_1 - x_2 + 2x_3 &= -3 \\
2x_1 + x_3 &= 1.
\end{aligned}
\]

This should look familiar; it is a system of linear equations! Given the matrix-vector equation A→x=→b, we can recognize A as the coefficient matrix from a linear system and →b as the vector of the constants from the linear system. To solve a matrix–vector equation (and the corresponding linear system), we simply augment the matrix A with the vector →b, put this matrix into reduced row echelon form, and interpret the results.

We convert the above linear system into an augmented matrix and find the reduced row echelon form:

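\[
\left[\begin{array}{ccc|c} 1 & 1 & 1 & 2 \\ 1 & -1 & 2 & -3 \\ 2 & 0 & 1 & 1 \end{array}\right] \quad \xrightarrow{\text{rref}} \quad \left[\begin{array}{ccc|c} 1 & 0 & 0 & 1 \\ 0 & 1 & 0 & 2 \\ 0 & 0 & 1 & -1 \end{array}\right].
\]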

This tells us that x1=1, x2=2 and x3=−1, so

\[
\vec{x} = \begin{bmatrix} 1 \\ 2 \\ -1 \end{bmatrix}.
\]

We should check our work; multiply out A→x and verify that we indeed get →b:

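\[
\begin{bmatrix} 1 & 1 & 1 \\ 1 & -1 & 2 \\ 2 & 0 & 1 \end{bmatrix} \begin{bmatrix} 1 \\ 2 \\ -1 \end{bmatrix} \quad \text{does equal} \quad \begin{bmatrix} 2 \\ -3 \\ 1 \end{bmatrix}.
\]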
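The same check can be carried out in code. The following is a minimal Python sketch, not part of the original text; the helper name `mat_vec` is an assumption introduced here for illustration, and matrices are stored as plain lists of rows.

```python
def mat_vec(A, x):
    """Multiply matrix A (a list of rows) by vector x (a list of entries)."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

# The matrix, right-hand side, and proposed solution from the example above.
A = [[1, 1, 1],
     [1, -1, 2],
     [2, 0, 1]]
b = [2, -3, 1]
x = [1, 2, -1]

# Each entry of A*x is one left-hand side of the linear system.
product = mat_vec(A, x)
print(product == b)
```

Each entry of `product` is the left-hand side of one equation in the system, which is why comparing it with `b` entry by entry is the same as checking all three equations.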

We should practice.

Solve the equation A→x=→b for →x where

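\[
A = \begin{bmatrix} 1 & 2 & 3 \\ -1 & 2 & 1 \\ 1 & 1 & 0 \end{bmatrix} \quad \text{and} \quad \vec{b} = \begin{bmatrix} 5 \\ -1 \\ 2 \end{bmatrix}.
\]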

Solution

The solution is rather straightforward, even though we did a lot of work before to find the answer. Form the augmented matrix [A→b] and interpret its reduced row echelon form.

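\[
\left[\begin{array}{ccc|c} 1 & 2 & 3 & 5 \\ -1 & 2 & 1 & -1 \\ 1 & 1 & 0 & 2 \end{array}\right] \quad \xrightarrow{\text{rref}} \quad \left[\begin{array}{ccc|c} 1 & 0 & 0 & 2 \\ 0 & 1 & 0 & 0 \\ 0 & 0 & 1 & 1 \end{array}\right]
\]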

In previous sections we were content to state the result as x1=2, x2=0, x3=1, but here we were asked to find →x; therefore, we state the solution as

\[
\vec{x} = \begin{bmatrix} 2 \\ 0 \\ 1 \end{bmatrix}.
\]
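For readers who want to reproduce the row reduction by machine, here is a minimal sketch in Python. It is an illustration under stated assumptions rather than the text's own method: the function name `rref` is introduced here, and `fractions.Fraction` from the standard library is used so that the arithmetic stays exact.

```python
from fractions import Fraction

def rref(M):
    """Return the reduced row echelon form of M (a list of rows)."""
    M = [[Fraction(v) for v in row] for row in M]
    rows, cols = len(M), len(M[0])
    pivot_row = 0
    for col in range(cols):
        if pivot_row == rows:
            break
        # Find a row at or below pivot_row with a nonzero entry in this column.
        pivot = next((r for r in range(pivot_row, rows) if M[r][col] != 0), None)
        if pivot is None:
            continue
        M[pivot_row], M[pivot] = M[pivot], M[pivot_row]
        # Scale the pivot row so that its leading entry is 1.
        lead = M[pivot_row][col]
        M[pivot_row] = [v / lead for v in M[pivot_row]]
        # Clear this column in every other row.
        for r in range(rows):
            if r != pivot_row and M[r][col] != 0:
                factor = M[r][col]
                M[r] = [a - factor * p for a, p in zip(M[r], M[pivot_row])]
        pivot_row += 1
    return M

# Augmented matrix [A b] from the example above.
augmented = [[1, 2, 3, 5],
             [-1, 2, 1, -1],
             [1, 1, 0, 2]]
R = rref(augmented)

# Every variable column has a leading 1 here, so the last column is the solution.
x = [row[-1] for row in R]
print([int(v) for v in x])
```

Reading the solution from the last column is only valid when there are no free variables, as in this example.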

This probably seems all well and good. While asking one to solve the equation A→x=→b for →x seems like a new problem, in reality it is just asking that we solve a system of linear equations. Our variables x1, etc., appear not individually but as the entries of our vector →x. We are simply writing an old problem in a new way.

In line with this new way of writing the problem, we have a new way of writing the solution. Instead of listing, individually, the values of the unknowns, we simply list them as the elements of our vector →x.

These are important ideas, so we state the basic principle once more: solving the equation A→x=→b for →x is the same thing as solving a linear system of equations. Equivalently, any system of linear equations can be written in the form A→x=→b for some matrix A and vector →b.

Since these ideas are equivalent, we’ll refer to A→x=→b both as a matrix–vector equation and as a system of linear equations: they are the same thing.

We’ve seen two examples illustrating this idea so far, and in both cases the linear system had exactly one solution. We know from Theorem 1.4.1 that any linear system has either one solution, infinite solutions, or no solution. So how does our new method of writing a solution work with infinite solutions and no solutions?

Certainly, if A→x=→b has no solution, we simply say that the linear system has no solution. There isn’t anything special to write. So the only other option to consider is the case where we have infinite solutions. We’ll learn how to handle these situations through examples.

Solve the linear system A→x=→0 for →x and write the solution in vector form, where

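\[
A = \begin{bmatrix} 1 & 2 \\ 2 & 4 \end{bmatrix} \quad \text{and} \quad \vec{0} = \begin{bmatrix} 0 \\ 0 \end{bmatrix}.
\]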

Solution

We didn’t really need to specify that \(\vec{0} = \begin{bmatrix} 0 \\ 0 \end{bmatrix}\), but we did just to eliminate any uncertainty.

To solve this system, put the augmented matrix into reduced row echelon form, which we do below.

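\[
\left[\begin{array}{cc|c} 1 & 2 & 0 \\ 2 & 4 & 0 \end{array}\right] \quad \xrightarrow{\text{rref}} \quad \left[\begin{array}{cc|c} 1 & 2 & 0 \\ 0 & 0 & 0 \end{array}\right]
\]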

We interpret the reduced row echelon form of this matrix to write the solution as

\[
\begin{aligned}
x_1 &= -2x_2 \\
x_2 &\text{ is free.}
\end{aligned}
\]

We are not done; we need to write the solution in vector form, for our solution is the vector →x. Recall that

\[
\vec{x} = \begin{bmatrix} x_1 \\ x_2 \end{bmatrix}.
\]

From above we know that x1=−2x2, so we replace the x1 in →x with −2x2. This gives our solution as

\[
\vec{x} = \begin{bmatrix} -2x_2 \\ x_2 \end{bmatrix}.
\]

Now we pull the x2 out of the vector (it is just a scalar) and write →x as

\[
\vec{x} = x_2 \begin{bmatrix} -2 \\ 1 \end{bmatrix}.
\]

For reasons that will become more clear later, set

\[
\vec{v} = \begin{bmatrix} -2 \\ 1 \end{bmatrix}.
\]

Thus our solution can be written as

→x=x2→v.

Recall that since our system was consistent and had a free variable, we have infinite solutions. This form of the solution highlights this fact; pick any value for x2 and we get a different solution.

For instance, by setting x2=−1, 0, and 5, we get the solutions

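\[
\vec{x} = \begin{bmatrix} 2 \\ -1 \end{bmatrix}, \quad \begin{bmatrix} 0 \\ 0 \end{bmatrix}, \quad \text{and} \quad \begin{bmatrix} -10 \\ 5 \end{bmatrix},
\]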

respectively.

We should check our work; multiply each of the above vectors by A to see if we indeed get →0.
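This check is easy to automate. The sketch below is illustrative only; `mat_vec` is a hypothetical helper introduced here, not something defined in the text. It builds the three solutions as scalar multiples of →v and multiplies each by A.

```python
def mat_vec(A, x):
    """Multiply matrix A (a list of rows) by vector x (a list of entries)."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

A = [[1, 2],
     [2, 4]]
v = [-2, 1]

# Build x = x2 * v for the three values of x2 used in the text.
solutions = [[x2 * entry for entry in v] for x2 in (-1, 0, 5)]

# Every one of these should be sent to the zero vector by A.
results = [mat_vec(A, x) for x in solutions]
print(solutions)
print(all(r == [0, 0] for r in results))
```

Any other value of x2 could be added to the tuple; the product is the zero vector for every scalar multiple of →v.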

We have officially solved this problem; we have found the solution to A→x=→0 and written it properly. One final thing we will do here is graph the solution, using our skills learned in the previous section.

Our solution is

\[
\vec{x} = x_2 \begin{bmatrix} -2 \\ 1 \end{bmatrix}.
\]

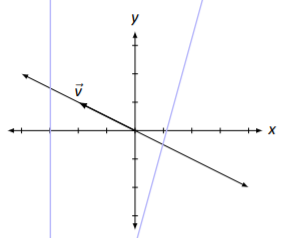

This means that any scalar multiple of the vector \(\vec{v} = \begin{bmatrix} -2 \\ 1 \end{bmatrix}\) is a solution; we know how to sketch the scalar multiples of →v. This is done in Figure 2.4.1.

Here vector →v is drawn as well as the line that goes through the origin in the direction of →v. Any vector along this line is a solution. So in some sense, we can say that the solution to A→x=→0 is a line.

Let’s practice this again.

Solve the linear system A→x=→0 and write the solution in vector form, where

\[
A = \begin{bmatrix} 2 & -3 \\ -2 & 3 \end{bmatrix}.
\]

Solution

Again, to solve this problem, we form the proper augmented matrix and we put it into reduced row echelon form, which we do below.

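\[
\left[\begin{array}{cc|c} 2 & -3 & 0 \\ -2 & 3 & 0 \end{array}\right] \quad \xrightarrow{\text{rref}} \quad \left[\begin{array}{cc|c} 1 & -3/2 & 0 \\ 0 & 0 & 0 \end{array}\right]
\]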

We interpret the reduced row echelon form of this matrix to find that

\[
\begin{aligned}
x_1 &= \tfrac{3}{2}x_2 \\
x_2 &\text{ is free.}
\end{aligned}
\]

As before,

\[
\vec{x} = \begin{bmatrix} x_1 \\ x_2 \end{bmatrix}.
\]

Since x1=(3/2)x2, we replace x1 in →x with (3/2)x2:

\[
\vec{x} = \begin{bmatrix} \tfrac{3}{2}x_2 \\ x_2 \end{bmatrix}.
\]

Now we pull out the x2 and write the solution as

\[
\vec{x} = x_2 \begin{bmatrix} 3/2 \\ 1 \end{bmatrix}.
\]

As before, let’s set

\[
\vec{v} = \begin{bmatrix} 3/2 \\ 1 \end{bmatrix}
\]

so we can write our solution as

→x=x2→v.

Again, we have infinite solutions; any choice of x2 gives us one of these solutions. For instance, picking x2=2 gives the solution

\[
\vec{x} = \begin{bmatrix} 3 \\ 2 \end{bmatrix}.
\]

(This is a particularly nice solution, since there are no fractions…)

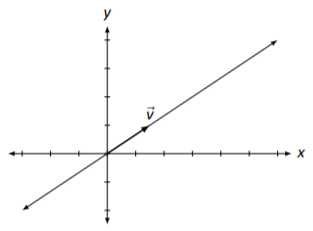

As in the previous example, our solutions are multiples of a vector, and hence we can graph this, as done in Figure 2.4.2.

Figure 2.4.2: The solution, as a line, to A→x=→0 in Example 2.4.3.

Let’s practice some more; this time, we won’t solve a system of the form A→x=→0, but instead A→x=→b, for some vector →b.

Solve the linear system A→x=→b, where

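\[
A = \begin{bmatrix} 1 & 2 \\ 2 & 4 \end{bmatrix} \quad \text{and} \quad \vec{b} = \begin{bmatrix} 3 \\ 6 \end{bmatrix}.
\]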

Solution

This is the same matrix A that we used in Example 2.4.2. This will be important later.

Our methodology is the same as before; we form the augmented matrix and put it into reduced row echelon form.

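\[
\left[\begin{array}{cc|c} 1 & 2 & 3 \\ 2 & 4 & 6 \end{array}\right] \quad \xrightarrow{\text{rref}} \quad \left[\begin{array}{cc|c} 1 & 2 & 3 \\ 0 & 0 & 0 \end{array}\right]
\]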

Interpreting this reduced row echelon form, we find that

\[
\begin{aligned}
x_1 &= 3 - 2x_2 \\
x_2 &\text{ is free.}
\end{aligned}
\]

Again,

\[
\vec{x} = \begin{bmatrix} x_1 \\ x_2 \end{bmatrix},
\]

and we replace x1 with 3−2x2, giving

\[
\vec{x} = \begin{bmatrix} 3 - 2x_2 \\ x_2 \end{bmatrix}.
\]

This solution is different from what we’ve seen in the past two examples; we can’t simply pull out an x2 since there is a 3 in the first entry. Using the properties of matrix addition, we can “pull apart” this vector and write it as the sum of two vectors: one which contains only constants, and one that contains only “x2 stuff.” We do this below.

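\[
\begin{aligned}
\vec{x} &= \begin{bmatrix} 3 - 2x_2 \\ x_2 \end{bmatrix} \\
&= \begin{bmatrix} 3 \\ 0 \end{bmatrix} + \begin{bmatrix} -2x_2 \\ x_2 \end{bmatrix} \\
&= \begin{bmatrix} 3 \\ 0 \end{bmatrix} + x_2 \begin{bmatrix} -2 \\ 1 \end{bmatrix}.
\end{aligned}
\]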

Once again, let’s give names to the different component vectors of this solution (we are getting near the explanation of why we are doing this). Let

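\[
\vec{x}_p = \begin{bmatrix} 3 \\ 0 \end{bmatrix} \quad \text{and} \quad \vec{v} = \begin{bmatrix} -2 \\ 1 \end{bmatrix}.
\]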

We can then write our solution in the form

→x=→xp+x2→v.

We still have infinite solutions; by picking a value for x2 we get one of these solutions. For instance, by letting x2=−1, 0, or 2, we get the solutions

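\[
\begin{bmatrix} 5 \\ -1 \end{bmatrix}, \quad \begin{bmatrix} 3 \\ 0 \end{bmatrix} \quad \text{and} \quad \begin{bmatrix} -1 \\ 2 \end{bmatrix}.
\]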

We have officially solved the problem; we have solved the equation A→x=→b for →x and have written the solution in vector form. As an additional visual aid, we will graph this solution.

Each vector in the solution can be written as the sum of two vectors: →xp and a multiple of →v. In Figure 2.4.3, →xp is graphed and →v is graphed with its origin starting at the tip of →xp. Finally, a line is drawn in the direction of →v from the tip of →xp; any vector pointing to any point on this line is a solution to A→x=→b.

Figure 2.4.3: The solution, as a line, to A→x=→b in Example 2.4.4.

The previous examples illustrate some important concepts. One is that we can “see” the solution to a system of linear equations in a new way. Before, when we had infinite solutions, we knew we could arbitrarily pick values for our free variables and get different solutions. We knew this to be true, and we even practiced it, but the result was not very “tangible.” Now, we can view our solution as a vector; by picking different values for our free variables, we see this as multiplying certain important vectors by a scalar which gives a different solution.

Another important concept that these examples demonstrate comes from the fact that Examples 2.4.2 and 2.4.4 were only “slightly different” and hence had only “slightly different” answers. Both solutions had

\[
x_2 \begin{bmatrix} -2 \\ 1 \end{bmatrix}
\]

in them; in Example 2.4.4 the solution also had another vector added to this. Was this coincidence, or is there a definite pattern here?

Of course there is a pattern! Now … what exactly is it? First, we define a term.

A system of linear equations is homogeneous if the constants in each equation are zero.

Note: a homogeneous system of equations can be written in vector form as A→x=→0.

The term homogeneous comes from two Greek words: homos, meaning “same,” and genos, meaning “type.” A homogeneous system of equations is a system in which each equation is of the same type: all constants are 0. Notice that the systems of equations in Examples 2.4.2 and 2.4.3 are homogeneous.

Note that A→0=→0; that is, if we set →x=→0, we have a solution to a homogeneous set of equations. This fact is important; the zero vector is always a solution to a homogeneous linear system. Therefore a homogeneous system is always consistent; we need only to determine whether we have exactly one solution (just →0) or infinite solutions. This idea is important, so we give it its own box.

All homogeneous linear systems are consistent.

How do we determine if we have exactly one or infinite solutions? Recall Key Idea 1.4.1: if the solution has any free variables, then it will have infinite solutions. How can we tell if the system has free variables? Form the augmented matrix [A→0], put it into reduced row echelon form, and interpret the result.

It may seem that we’ve brought up a new question, “When does A→x=→0 have exactly one or infinite solutions?” only to answer with “Look at the reduced row echelon form of A and interpret the results, just as always.” Why bring up a new question if the answer is an old one?

While the new question has an old solution, it does lead to a great idea. Let’s refresh our memory; earlier we solved two linear systems,

\[
A\vec{x} = \vec{0} \quad \text{and} \quad A\vec{x} = \vec{b}
\]

where

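\[
A = \begin{bmatrix} 1 & 2 \\ 2 & 4 \end{bmatrix} \quad \text{and} \quad \vec{b} = \begin{bmatrix} 3 \\ 6 \end{bmatrix}.
\]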

The solution to the first system of equations, A→x=→0, is

\[
\vec{x} = x_2 \begin{bmatrix} -2 \\ 1 \end{bmatrix}
\]

and the solution to the second set of equations, A→x=→b, is

\[
\vec{x} = \begin{bmatrix} 3 \\ 0 \end{bmatrix} + x_2 \begin{bmatrix} -2 \\ 1 \end{bmatrix},
\]

for all values of x2.

Recalling our notation used earlier, set

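\[
\vec{x}_p = \begin{bmatrix} 3 \\ 0 \end{bmatrix} \quad \text{and let} \quad \vec{v} = \begin{bmatrix} -2 \\ 1 \end{bmatrix}.
\]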

Thus our solution to the linear system A→x=→b is

→x=→xp+x2→v.

Let us see how exactly this solution works; let’s see why A→x equals →b. Multiply A→x:

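\[
\begin{aligned}
A\vec{x} &= A(\vec{x}_p + x_2\vec{v}) \\
&= A\vec{x}_p + A(x_2\vec{v}) \\
&= A\vec{x}_p + x_2(A\vec{v}) \\
&= A\vec{x}_p + x_2\vec{0} \\
&= A\vec{x}_p + \vec{0} \\
&= A\vec{x}_p \\
&= \vec{b}
\end{aligned}
\]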

We know that the last step is true, that A→xp=→b, because →x=→xp+x2→v solves A→x=→b for every value of x2; choosing x2=0 gives →x=→xp. The whole point is that →xp itself is a solution to A→x=→b, and we could find more solutions by adding vectors “that go to zero” when multiplied by A. (The subscript p of “→xp” is used to denote that this vector is a “particular” solution.)

Stated in a different way, let’s say that we know two things: that A→xp=→b and A→v=→0. What is A(→xp+→v)? We can multiply it out:

A(→xp+→v)=A→xp+A→v=→b+→0=→b

and see that A(→xp+→v) also equals →b.

So we wonder: does this mean that A→x=→b will have infinite solutions? After all, if →xp and →xp+→v are both solutions, don’t we have infinite solutions?

Not necessarily. If A→x=→0 has exactly one solution, then →v=→0, and →xp=→xp+→v; we only have one solution.

So here is the culmination of all of our fun that started a few pages back. If →v is a solution to A→x=→0 and →xp is a solution to A→x=→b, then →xp+→v is also a solution to A→x=→b. If A→x=→0 has infinite solutions, so does A→x=→b; if A→x=→0 has only one solution, so does A→x=→b. This culminating idea is of course important enough to be stated again.

Let A→x=→b be a consistent system of linear equations.

- If A→x=→0 has exactly one solution (→x=→0), then A→x=→b has exactly one solution.

- If A→x=→0 has infinite solutions, then A→x=→b has infinite solutions.

A key word in the above statement is consistent. If A→x=→b is inconsistent (the linear system has no solution), then it doesn’t matter how many solutions A→x=→0 has; A→x=→b has no solution.

Enough fun, enough theory. We need to practice.

Let

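\[
A = \begin{bmatrix} 1 & -1 & 1 & 3 \\ 4 & 2 & 4 & 6 \end{bmatrix} \quad \text{and} \quad \vec{b} = \begin{bmatrix} 1 \\ 10 \end{bmatrix}.
\]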

Solve the linear systems A→x=→0 and A→x=→b for →x, and write the solutions in vector form.

Solution

We’ll tackle A→x=→0 first. We form the associated augmented matrix, put it into reduced row echelon form, and interpret the result.

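\[
\left[\begin{array}{cccc|c} 1 & -1 & 1 & 3 & 0 \\ 4 & 2 & 4 & 6 & 0 \end{array}\right] \quad \xrightarrow{\text{rref}} \quad \left[\begin{array}{cccc|c} 1 & 0 & 1 & 2 & 0 \\ 0 & 1 & 0 & -1 & 0 \end{array}\right]
\]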

\[
\begin{aligned}
x_1 &= -x_3 - 2x_4 \\
x_2 &= x_4 \\
x_3 &\text{ is free} \\
x_4 &\text{ is free}
\end{aligned}
\]

To write our solution in vector form, we rewrite x1 and x2 in →x in terms of x3 and x4.

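\[
\vec{x} = \begin{bmatrix} x_1 \\ x_2 \\ x_3 \\ x_4 \end{bmatrix} = \begin{bmatrix} -x_3 - 2x_4 \\ x_4 \\ x_3 \\ x_4 \end{bmatrix}
\]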

Finally, we “pull apart” this vector into two vectors, one with the “x3 stuff” and one with the “x4 stuff.”

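\[
\begin{aligned}
\vec{x} &= \begin{bmatrix} -x_3 - 2x_4 \\ x_4 \\ x_3 \\ x_4 \end{bmatrix} \\
&= \begin{bmatrix} -x_3 \\ 0 \\ x_3 \\ 0 \end{bmatrix} + \begin{bmatrix} -2x_4 \\ x_4 \\ 0 \\ x_4 \end{bmatrix} \\
&= x_3 \begin{bmatrix} -1 \\ 0 \\ 1 \\ 0 \end{bmatrix} + x_4 \begin{bmatrix} -2 \\ 1 \\ 0 \\ 1 \end{bmatrix} \\
&= x_3\vec{u} + x_4\vec{v}
\end{aligned}
\]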

We use →u and →v simply to give these vectors names (and save some space).

It is easy to confirm that both →u and →v are solutions to the linear system A→x=→0. (Just multiply A→u and A→v and see that both are →0.) Since both are solutions to a homogeneous system of linear equations, any linear combination of →u and →v will be a solution, too.

Now let’s tackle A→x=→b. Once again we put the associated augmented matrix into reduced row echelon form and interpret the results.

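\[
\left[\begin{array}{cccc|c} 1 & -1 & 1 & 3 & 1 \\ 4 & 2 & 4 & 6 & 10 \end{array}\right] \quad \xrightarrow{\text{rref}} \quad \left[\begin{array}{cccc|c} 1 & 0 & 1 & 2 & 2 \\ 0 & 1 & 0 & -1 & 1 \end{array}\right]
\]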

\[
\begin{aligned}
x_1 &= 2 - x_3 - 2x_4 \\
x_2 &= 1 + x_4 \\
x_3 &\text{ is free} \\
x_4 &\text{ is free}
\end{aligned}
\]

Writing this solution in vector form gives

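\[
\vec{x} = \begin{bmatrix} x_1 \\ x_2 \\ x_3 \\ x_4 \end{bmatrix} = \begin{bmatrix} 2 - x_3 - 2x_4 \\ 1 + x_4 \\ x_3 \\ x_4 \end{bmatrix}.
\]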

Again, we pull apart this vector, but this time we break it into three vectors: one with “x3” stuff, one with “x4” stuff, and one with just constants.

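\[
\begin{aligned}
\vec{x} &= \begin{bmatrix} 2 - x_3 - 2x_4 \\ 1 + x_4 \\ x_3 \\ x_4 \end{bmatrix} \\
&= \begin{bmatrix} 2 \\ 1 \\ 0 \\ 0 \end{bmatrix} + \begin{bmatrix} -x_3 \\ 0 \\ x_3 \\ 0 \end{bmatrix} + \begin{bmatrix} -2x_4 \\ x_4 \\ 0 \\ x_4 \end{bmatrix} \\
&= \begin{bmatrix} 2 \\ 1 \\ 0 \\ 0 \end{bmatrix} + x_3 \begin{bmatrix} -1 \\ 0 \\ 1 \\ 0 \end{bmatrix} + x_4 \begin{bmatrix} -2 \\ 1 \\ 0 \\ 1 \end{bmatrix} \\
&= \underbrace{\vec{x}_p}_{\text{particular solution}} + \underbrace{x_3\vec{u} + x_4\vec{v}}_{\text{solution to homogeneous equations } A\vec{x} = \vec{0}}
\end{aligned}
\]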

Note that A→xp=→b; by itself, →xp is a solution. To get infinite solutions, we add a bunch of stuff that “goes to zero” when we multiply by A; we add the solution to the homogeneous equations.
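The “pulling apart” step follows a fixed recipe, so it can be sketched in code. The function below is an illustration under stated assumptions, not the text's own procedure: the name `vector_form` is introduced here, the input is assumed to be an augmented matrix already in reduced row echelon form, and the system is assumed to be consistent.

```python
from fractions import Fraction

def vector_form(R):
    """Read x_p and the homogeneous vectors off a reduced augmented matrix R.

    Assumes R is already in reduced row echelon form and the system is consistent.
    """
    n = len(R[0]) - 1                      # number of unknowns
    pivots = {}                            # pivot column -> row index
    for i, row in enumerate(R):
        lead = next((j for j in range(n) if row[j] != 0), None)
        if lead is not None:
            pivots[lead] = i
    free = [j for j in range(n) if j not in pivots]

    # Particular solution: set every free variable to 0.
    xp = [Fraction(0)] * n
    for col, i in pivots.items():
        xp[col] = Fraction(R[i][-1])

    # One homogeneous vector per free variable: that variable is 1, the others 0.
    basis = []
    for f in free:
        vec = [Fraction(0)] * n
        vec[f] = Fraction(1)
        for col, i in pivots.items():
            vec[col] = -Fraction(R[i][f])
        basis.append(vec)
    return xp, basis

# Reduced augmented matrix for A x = b from the example above.
R = [[1, 0, 1, 2, 2],
     [0, 1, 0, -1, 1]]
xp, (u, v) = vector_form(R)
print([int(t) for t in xp], [int(t) for t in u], [int(t) for t in v])
```

Setting all free variables to 0 gives →xp, and setting one free variable to 1 (with the constants dropped) gives the vector attached to that variable, which is exactly the split carried out by hand above.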

Why don’t we graph this solution as we did in the past? Before we had only two variables, meaning the solution could be graphed in 2D. Here we have four variables, meaning that our solution “lives” in 4D. You can draw this on paper, but it is very confusing.

Rewrite the linear system

\[
\begin{aligned}
x_1 + 2x_2 - 3x_3 + 2x_4 + 7x_5 &= 2 \\
3x_1 + 4x_2 + 5x_3 + 2x_4 + 3x_5 &= -4
\end{aligned}
\]

as a matrix–vector equation, solve the system using vector notation, and give the solution to the related homogeneous equations.

Solution

Rewriting the linear system in the form of A→x=→b, we have that

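\[
A = \begin{bmatrix} 1 & 2 & -3 & 2 & 7 \\ 3 & 4 & 5 & 2 & 3 \end{bmatrix}, \quad \vec{x} = \begin{bmatrix} x_1 \\ x_2 \\ x_3 \\ x_4 \\ x_5 \end{bmatrix} \quad \text{and} \quad \vec{b} = \begin{bmatrix} 2 \\ -4 \end{bmatrix}.
\]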

To solve the system, we put the associated augmented matrix into reduced row echelon form and interpret the results.

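\[
\left[\begin{array}{ccccc|c} 1 & 2 & -3 & 2 & 7 & 2 \\ 3 & 4 & 5 & 2 & 3 & -4 \end{array}\right] \quad \xrightarrow{\text{rref}} \quad \left[\begin{array}{ccccc|c} 1 & 0 & 11 & -2 & -11 & -8 \\ 0 & 1 & -7 & 2 & 9 & 5 \end{array}\right]
\]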

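\[
\begin{aligned}
x_1 &= -8 - 11x_3 + 2x_4 + 11x_5 \\
x_2 &= 5 + 7x_3 - 2x_4 - 9x_5 \\
x_3 &\text{ is free} \\
x_4 &\text{ is free} \\
x_5 &\text{ is free}
\end{aligned}
\]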

We use this information to write →x, again pulling it apart. Since we have three free variables and also constants, we’ll need to pull →x apart into four separate vectors.

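\[
\begin{aligned}
\vec{x} &= \begin{bmatrix} x_1 \\ x_2 \\ x_3 \\ x_4 \\ x_5 \end{bmatrix} = \begin{bmatrix} -8 - 11x_3 + 2x_4 + 11x_5 \\ 5 + 7x_3 - 2x_4 - 9x_5 \\ x_3 \\ x_4 \\ x_5 \end{bmatrix} \\
&= \begin{bmatrix} -8 \\ 5 \\ 0 \\ 0 \\ 0 \end{bmatrix} + \begin{bmatrix} -11x_3 \\ 7x_3 \\ x_3 \\ 0 \\ 0 \end{bmatrix} + \begin{bmatrix} 2x_4 \\ -2x_4 \\ 0 \\ x_4 \\ 0 \end{bmatrix} + \begin{bmatrix} 11x_5 \\ -9x_5 \\ 0 \\ 0 \\ x_5 \end{bmatrix} \\
&= \begin{bmatrix} -8 \\ 5 \\ 0 \\ 0 \\ 0 \end{bmatrix} + x_3 \begin{bmatrix} -11 \\ 7 \\ 1 \\ 0 \\ 0 \end{bmatrix} + x_4 \begin{bmatrix} 2 \\ -2 \\ 0 \\ 1 \\ 0 \end{bmatrix} + x_5 \begin{bmatrix} 11 \\ -9 \\ 0 \\ 0 \\ 1 \end{bmatrix} \\
&= \underbrace{\vec{x}_p}_{\text{particular solution}} + \underbrace{x_3\vec{u} + x_4\vec{v} + x_5\vec{w}}_{\text{solution to homogeneous equations } A\vec{x} = \vec{0}}
\end{aligned}
\]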

So →xp is a particular solution; A→xp=→b. (Multiply it out to verify that this is true.) The other vectors, →u, →v and →w, that are multiplied by our free variables x3, x4 and x5, are each solutions to the homogeneous equations, A→x=→0. Any linear combination of these three vectors, i.e., any vector found by choosing values for x3, x4 and x5 in x3→u+x4→v+x5→w is a solution to A→x=→0.

Let

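\[
A = \begin{bmatrix} 1 & 2 \\ 4 & 5 \end{bmatrix} \quad \text{and} \quad \vec{b} = \begin{bmatrix} 3 \\ 6 \end{bmatrix}.
\]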

Find the solutions to A→x=→b and A→x=→0.

Solution

We go through the familiar work of finding the reduced row echelon form of the appropriate augmented matrix and interpreting the solution.

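\[
\left[\begin{array}{cc|c} 1 & 2 & 3 \\ 4 & 5 & 6 \end{array}\right] \quad \xrightarrow{\text{rref}} \quad \left[\begin{array}{cc|c} 1 & 0 & -1 \\ 0 & 1 & 2 \end{array}\right]
\]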

\[
\begin{aligned}
x_1 &= -1 \\
x_2 &= 2
\end{aligned}
\]

Thus

\[
\vec{x} = \begin{bmatrix} x_1 \\ x_2 \end{bmatrix} = \begin{bmatrix} -1 \\ 2 \end{bmatrix}.
\]

This may strike us as a bit odd; we are used to having lots of different vectors in the solution. However, in this case, the linear system A→x=→b has exactly one solution, and we’ve found it. What is the solution to A→x=→0? Since we’ve only found one solution to A→x=→b, we can conclude from Key Idea 2.4.2 that the related homogeneous equations A→x=→0 have only one solution, namely →x=→0. We can write our solution vector →x in a form similar to our previous examples to highlight this:

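\[
\begin{aligned}
\vec{x} &= \begin{bmatrix} -1 \\ 2 \end{bmatrix} \\
&= \begin{bmatrix} -1 \\ 2 \end{bmatrix} + \begin{bmatrix} 0 \\ 0 \end{bmatrix} \\
&= \underbrace{\vec{x}_p}_{\text{particular solution}} + \underbrace{\vec{0}}_{\text{solution to } A\vec{x} = \vec{0}}
\end{aligned}
\]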

Let

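\[
A = \begin{bmatrix} 1 & 1 \\ 2 & 2 \end{bmatrix} \quad \text{and} \quad \vec{b} = \begin{bmatrix} 1 \\ 1 \end{bmatrix}.
\]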

Find the solutions to A→x=→b and A→x=→0.

Solution

To solve A→x=→b, we put the appropriate augmented matrix into reduced row echelon form and interpret the results.

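\[
\left[\begin{array}{cc|c} 1 & 1 & 1 \\ 2 & 2 & 1 \end{array}\right] \quad \xrightarrow{\text{rref}} \quad \left[\begin{array}{cc|c} 1 & 1 & 0 \\ 0 & 0 & 1 \end{array}\right]
\]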

We immediately have a problem; we see that the second row tells us that 0x1+0x2=1, the sign that our system does not have a solution. Thus A→x=→b has no solution. Of course, this does not mean that A→x=→0 has no solution; it always has a solution.

To find the solution to A→x=→0, we interpret the reduced row echelon form of the appropriate augmented matrix.

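\[
\left[\begin{array}{cc|c} 1 & 1 & 0 \\ 2 & 2 & 0 \end{array}\right] \quad \xrightarrow{\text{rref}} \quad \left[\begin{array}{cc|c} 1 & 1 & 0 \\ 0 & 0 & 0 \end{array}\right]
\]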

\[
\begin{aligned}
x_1 &= -x_2 \\
x_2 &\text{ is free}
\end{aligned}
\]

Thus

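\[
\vec{x} = \begin{bmatrix} x_1 \\ x_2 \end{bmatrix} = \begin{bmatrix} -x_2 \\ x_2 \end{bmatrix} = x_2 \begin{bmatrix} -1 \\ 1 \end{bmatrix} = x_2\vec{u}.
\]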

We have no solution to A→x=→b, but infinite solutions to A→x=→0.

The previous example may seem to violate the principle of Key Idea 2.4.2. After all, it seems that having infinite solutions to A→x=→0 should imply infinite solutions to A→x=→b. However, we remind ourselves of the key word in the idea that we observed before: consistent. If A→x=→b is consistent and A→x=→0 has infinite solutions, then so will A→x=→b. But if A→x=→b is not consistent, it does not matter how many solutions A→x=→0 has; A→x=→b is still inconsistent.
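The three outcomes seen in these examples can be read mechanically from a reduced augmented matrix. The following Python sketch is illustrative only; the function name `classify` is an assumption introduced here, and the input is assumed to be already in reduced row echelon form, with the last column holding the constants.

```python
def classify(R):
    """Classify a linear system from its reduced augmented matrix R.

    Assumes R (a list of rows) is already in reduced row echelon form.
    """
    n = len(R[0]) - 1  # number of unknowns
    leading_ones = 0
    for row in R:
        if all(entry == 0 for entry in row[:n]):
            if row[n] != 0:
                return "no solution"  # a row reading 0 = nonzero
        else:
            leading_ones += 1  # in rref, each nonzero row has one leading 1
    # A free variable exists exactly when some column lacks a leading 1.
    return "exactly one solution" if leading_ones == n else "infinite solutions"

# Reduced augmented matrices taken from the examples in this section.
print(classify([[1, 2, 3], [0, 0, 0]]))    # A = [1 2; 2 4], b = [3; 6]
print(classify([[1, 0, -1], [0, 1, 2]]))   # A = [1 2; 4 5], b = [3; 6]
print(classify([[1, 1, 0], [0, 0, 1]]))    # A = [1 1; 2 2], b = [1; 1]
print(classify([[1, 1, 0], [0, 0, 0]]))    # A = [1 1; 2 2], b = [0; 0]
```

The last two calls use the same matrix A with different right-hand sides: the homogeneous system has infinite solutions while the system with →b has none, which is the situation the word “consistent” in Key Idea 2.4.2 guards against.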

This whole section highlights a very important concept that we won’t fully understand for another two sections, but we get a glimpse of it here. When solving any system of linear equations (which we can write as A→x=→b), whether we have exactly one solution, infinite solutions, or no solution depends on an intrinsic property of A. We’ll find out what that property is soon; in the next section we solve a problem we introduced at the beginning of this section: how to solve matrix equations AX=B.