19.3: Introduction to Markov Models


In probability theory, a Markov model is a stochastic model used to describe randomly changing systems. It assumes that future states depend only on the current state, not on the events that occurred before it (the Markov property).
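Stated as a formula, this assumption can be written as follows. The notation is an assumption introduced here for illustration: \(X_t\) denotes the state of the system at time step \(t\), and \(x_0, \dots, x_{t+1}\) are particular state values.

\[ P(X_{t+1} = x_{t+1} \mid X_t = x_t, X_{t-1} = x_{t-1}, \dots, X_0 = x_0) = P(X_{t+1} = x_{t+1} \mid X_t = x_t) \]

In words, once the current state is known, the earlier history adds no further information about the next state.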

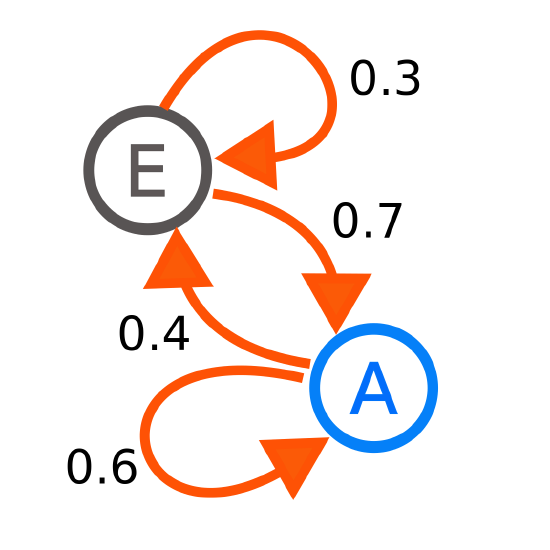

Each number represents the probability of the Markov process changing from one state to another state, with the direction indicated by the arrow. For example, if the Markov process is in state A, then the probability it changes to state E is 0.4, while the probability it remains in state A is 0.6.

The above state model can be represented by a transition matrix.

At each time step \(t\), the probability of being in each state depends on the state probabilities at the previous step \(t-1\):

\[ A_t = 0.6\,A_{t-1} + 0.7\,E_{t-1} \]

\[ E_t = 0.4\,A_{t-1} + 0.3\,E_{t-1} \]
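The two update equations above can be iterated directly, one time step at a time. The following is a minimal sketch rather than a reference implementation: the function name `step`, the variable names, and the starting point (the process is in state A with certainty) are all assumptions chosen for illustration.

```python
def step(a_prev, e_prev):
    """Apply one time step of the two update equations."""
    a_next = 0.6 * a_prev + 0.7 * e_prev
    e_next = 0.4 * a_prev + 0.3 * e_prev
    return a_next, e_next


# Assumed starting point: the process is in state A with certainty.
a, e = 1.0, 0.0
history = [(a, e)]

for t in range(1, 4):
    a, e = step(a, e)
    history.append((a, e))
    print(f"t={t}: A={a:.3f}, E={e:.3f}")
```

With this starting point, the probabilities are (0.6, 0.4) after one step and (0.64, 0.36) after two. At every step the two values sum to 1, as expected for a probability distribution over the two states.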

The above state model, with state vector \(S_t = [A_t, E_t]^T\), can be written in the following matrix notation:

\[ S_t = P\,S_{t-1} \]

Create a 2×2 matrix \(P\) representing the transition matrix for the above Markov model.
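One possible answer, as a sketch under stated assumptions: the coefficients of the two update equations are read off row by row, so the first row of the matrix produces the new probability of A and the second row the new probability of E. The helper `mat_vec`, the names `S0` and `S1`, and the starting vector are hypothetical and not part of the original exercise. Plain Python lists are used so that the block runs without external libraries.

```python
# Transition matrix: row 0 gives the new A, row 1 gives the new E.
# Column 0 holds the probabilities of leaving A, column 1 of leaving E.
P = [
    [0.6, 0.7],
    [0.4, 0.3],
]


def mat_vec(matrix, vector):
    """Multiply a matrix (list of rows) by a column vector (list)."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


# Assumed starting state vector [A, E]: in state A with certainty.
S0 = [1.0, 0.0]
S1 = mat_vec(P, S0)  # one application of S_t = P S_(t-1)
print(S1)

# Each column should sum to 1, since the process must go somewhere.
column_sums = [sum(row[j] for row in P) for j in range(2)]
print(column_sums)
```

Because the state is a column vector multiplied on the right of the matrix, it is the columns that sum to 1 here, not the rows. With NumPy, the same step could be written as `np.array(P) @ np.array(S0)`.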