5.4: Diagonalization

( \newcommand{\kernel}{\mathrm{null}\,}\)

- Learn two main criteria for a matrix to be diagonalizable.

- Develop a library of examples of matrices that are and are not diagonalizable.

- Recipes: diagonalize a matrix, quickly compute powers of a matrix by diagonalization.

- Pictures: the geometry of diagonal matrices, why a shear is not diagonalizable.

- Theorem: the diagonalization theorem (two variants).

- Vocabulary words: diagonalizable, algebraic multiplicity, geometric multiplicity.

Diagonal matrices are the easiest kind of matrices to understand: they just scale the coordinate directions by their diagonal entries. In Section 5.3, we saw that similar matrices behave in the same way, with respect to different coordinate systems. Therefore, if a matrix is similar to a diagonal matrix, it is also relatively easy to understand. This section is devoted to the question: “When is a matrix similar to a diagonal matrix?” This section is devoted to the question: “When is a matrix similar to a diagonal matrix?” We will see that the algebra and geometry of such a matrix is relatively easy to understand.

Diagonalizability

Before answering the above question, first we give it a name.

An n×n matrix A is diagonalizable if it is similar to a diagonal matrix: that is, if there exists an invertible n×n matrix C and a diagonal matrix D such that

A=CDC−1.

Any diagonal matrix is D is diagonalizable because it is similar to itself, Proposition 5.3.1 in Section 5.3. For instance,

(100020003)=I3(100020003)I−13.

Most of the examples in Section 5.3 involve diagonalizable matrices: The following are examples of diagonalizable matrices:

(−1215−1013)is diagonalizablebecause it equals(−231−1)(300−2)(−231−1)−1(1/23/23/21/2)is diagonalizablebecause it equals(111−1)(200−1)(111−1)−115(−8−9613)is diagonalizablebecause it equals12(−1−321)(200−1)(12(−1−321))−1(−100−102−111)is diagonalizablebecause it equals(−110111−101)(−1000−10002)(−110111−101)−1.

If a matrix A is diagonalizable, and if B is similar to A, then B is diagonalizable as well by Proposition 5.3.1 in Section 5.3. as well. Indeed, if A=CDC−1 for D diagonal, and B=EAE−1, then

B=EAE−1=E(CDC−1)E−1=(EC)D(EC)−1, so B is similar to D.

Powers of Diagonalizable Matrices

Multiplying diagonal matrices together just multiplies their diagonal entries:

(x1000x2000x3)(y1000y2000y3)=(x1y1000x2y2000x3y3).

Therefore, it is easy to take powers of a diagonal matrix:

(x000y000z)n=(xn000yn000zn).

By Fact 5.3.1 in Section 5.3, if A=CDC−1 then An=CDnC−1, so it is also easy to take powers of diagonalizable matrices. This will be very important in applications to difference equations in Section 5.6. This is often very important in applications.

If A=CDC−1, where D is a diagonal matrix, then An=CDnC−1:

A=C(x000y000z)C−1⟹An=C(xn000yn000zn)C−1.

Let

A=(1/23/23/21/2)=(111−1)(200−1)(111−1)−1.

Find a formula for An in which the entries are functions of n, where n is any positive whole number.

Solution

We have

An=(111−1)(200−1)n(111−1)−1=(111−1)(2n00(−1)n)1−2(−1−1−11)=(2n(−1)n2n(−1)n+1)12(111−1)=12(2n+(−1)n2n+(−1)n+12n+(−1)n+12n+(−1)n),

where we used (−1)n+2=(−1)2(−1)n=(−1)n.

A fundamental question about a matrix is whether or not it is diagonalizable. The following is the primary criterion for diagonalizability. It shows that diagonalizability is an eigenvalue problem.

An n×n matrix A is diagonalizable if and only if A has n linearly independent eigenvectors.

In this case, A=CDC−1 for

C=(|||v1v2⋯vn|||)D=(λ10⋯00λ2⋯0⋮⋮⋱⋮00⋯λn),

where v1,v2,…,vn are linearly independent eigenvectors, and λ1,λ2,…,λn are the corresponding eigenvalues, in the same order.

- Proof

-

First suppose that A has n linearly independent eigenvectors v1,v2,…,vn, with eigenvalues λ1,λ2,…,λn. Define C as above, so C is invertible by Theorem 5.1.1 in Section 5.1. Let D=C−1AC, so A=CDC−1. Multiplying by standard coordinate vectors, Fact 3.3.2 in Section 3.3, picks out the columns of C: we have Cei=vi, so ei=C−1vi. We multiply by the standard coordinate vectors to find the columns of D:

Dei=C−1ACei=C−1Avi=C−1λivi=λiC−1vi=λiei.

Therefore, the columns of D are multiples of the standard coordinate vectors:

D=(λ10⋯000λ2⋯00⋮⋮⋱⋮⋮00⋯λn−1000⋯0λn).

Now suppose that A=CDC−1, where C has columns v1,v2,…,vn, and D is diagonal with diagonal entries λ1,λ2,…,λn. Since C is invertible, its columns are linearly independent. We have to show that vi is an eigenvector of A with eigenvalue λi. We know that the standard coordinate vector ei is an eigenvector of D with eigenvalue λi, so:

Avi=CDC−1vi=CDei=Cλiei=λiCei=λivi.

By Fact 5.1.1 in Section 5.1, if an n×n matrix A has n distinct eigenvalues λ1,λ2,…,λn, then a choice of corresponding eigenvectors v1,v2,…,vn is automatically linearly independent.

An n×n matrix with n distinct eigenvalues is diagonalizable.

Apply Theorem 5.4.1 to the matrix

A=(100020003).

Solution

This diagonal matrix is in particular upper-triangular, so its eigenvalues are the diagonal entries 1,2,3. The standard coordinate vectors are eigenvalues of a diagonal matrix:

(100020003)(100)=1⋅(100)(100020003)(010)=2⋅(010)

(100020003)(001)=3⋅(001).

Therefore, the diagonalization theorem says that A=CDC−1, where the columns of C are the standard coordinate vectors, and the D is the diagonal matrix with entries 1,2,3:

(100020003)=(100010001)(100020003)(100010001)−1.

This just tells us that A is similar to itself.

Actually, the diagonalization theorem is not completely trivial even for diagonal matrices. If we put our eigenvalues in the order 3,2,1, then the corresponding eigenvectors are e3,e2,e1, so we also have that A=C′D′(C′)−1, where C′ is the matrix with columns e3,e2,e1, and D′ is the diagonal matrix with entries 3,2,1:

(100020003)=(001010100)(300020001)(001010100)−1.

In particular, the matrices

(100020003)and(300020001)

are similar to each other.

We saw in the above example that changing the order of the eigenvalues and eigenvectors produces a different diagonalization of the same matrix. There are generally many different ways to diagonalize a matrix, corresponding to different orderings of the eigenvalues of that matrix. The important thing is that the eigenvalues and eigenvectors have to be listed in the same order.

A=(|||v1v2v3|||)(λ1000λ2000λ3)(|||v1v2v3|||)−1=(|||v3v2v1|||)(λ3000λ2000λ1)(|||v3v2v1|||)−1.

There are other ways of finding different diagonalizations of the same matrix. For instance, you can scale one of the eigenvectors by a constant c:

A=(|||v1v2v3|||)(λ1000λ2000λ3)(|||v1v2v3|||)−1=(|||cv1v2v3|||)(λ1000λ2000λ3)(|||cv1v2v3|||)−1.

you can find a different basis entirely for an eigenspace of dimension at least 2, etc.

Diagonalize the matrix

A=(1/23/23/21/2).

Solution

We need to find the eigenvalues and eigenvectors of A. First we compute the characteristic polynomial:

f(λ)=λ2−Tr(A)λ+det(A)=λ2−λ−2=(λ+1)(λ−2).

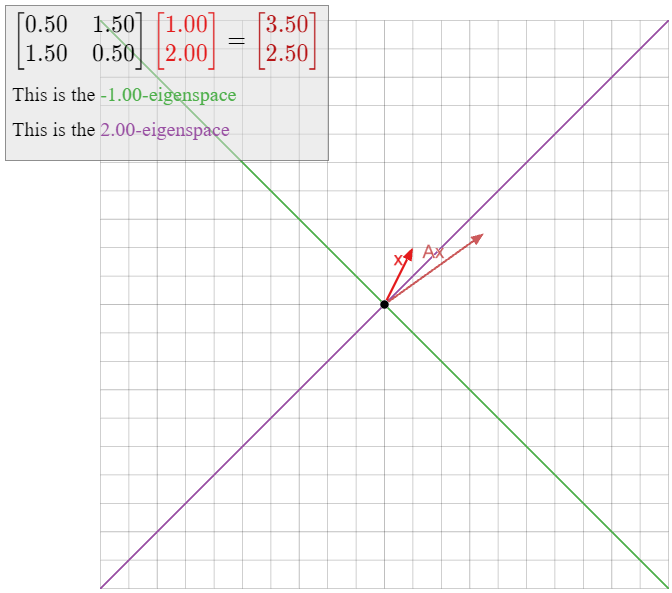

Therefore, the eigenvalues are −1 and 2. We need to compute eigenvectors for each eigenvalue. We start with λ1=−1:

(A+1I2)v=0⟺(3/23/23/23/2)v=0RREF→(1100)v=0.

The parametric form is x=−y, so v1=(−11) is an eigenvector with eigenvalue λ1. Now we find an eigenvector with eigenvalue λ2=2:

(A−2I2)v=0⟺(−3/23/23/2−3/2)v=0RREF→(1−100)v=0.

The parametric form is x=y, so v2=(11) is an eigenvector with eigenvalue 2.

The eigenvectors v1,v2 are linearly independent, so Theorem 5.4.1 says that

A=CDC−1forC=(−1111)D=(−1002).

Alternatively, if we choose 2 as our first eigenvalue, then

A=C′D′(C′)−1forC′=(1−111)D′=(200−1).

Diagonalize the matrix

A=(2/3−4/3−2/34/3).

Solution

We need to find the eigenvalues and eigenvectors of A. First we compute the characteristic polynomial:

f(λ)=λ2−Tr(A)λ+det(A)=λ2−2λ=λ(λ−2).

Therefore, the eigenvalues are 0 and 2. We need to compute eigenvectors for each eigenvalue. We start with λ1=0:

(A−0I2)v=0⟺(2/3−4/3−2/34/3)v=0RREF→(1−200)v=0.

The parametric form is x=2y, so v1=(21) is an eigenvector with eigenvalue λ1. Now we find an eigenvector with eigenvalue λ2=2:

(A−2I2)v=0⟺(−4/3−4/3−2/3−2/3)v=0RREF→(1100)v=0.

The parametric form is x=−y, so v2=(1−1) is an eigenvector with eigenvalue 2.

The eigenvectors v1,v2 are linearly independent, so the Theorem 5.4.1 says that

A=CDC−1forC=(211−1)D=(0002).

Alternatively, if we choose 2 as our first eigenvalue, then

A=C′D′(C′)−1forC′=(12−11)D′=(2000).

In the above example, the (non-invertible) matrix A=13(2−4−24) is similar to the diagonal matrix D=(0002). Since A is not invertible, zero is an eigenvalue by the invertible matrix theorem, Theorem 5.1.1 in Section 5.1, so one of the diagonal entries of D is necessarily zero. Also see Example 5.4.10 below.

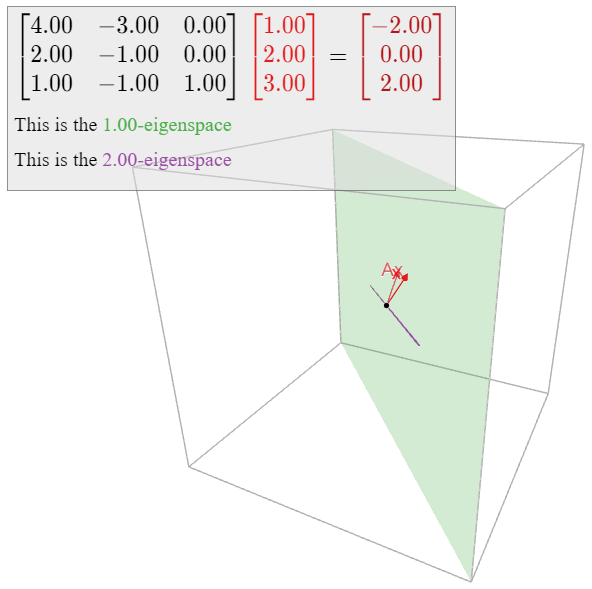

Diagonalize the matrix

A=(4−302−101−11).

Solution

We need to find the eigenvalues and eigenvectors of A. First we compute the characteristic polynomial by expanding cofactors along the third column:

f(λ)=det(A−λI3)=(1−λ)det((4−32−1)−λI2)=(1−λ)(λ2−3λ+2)=−(λ−1)2(λ−2).

Therefore, the eigenvalues are 1 and 2. We need to compute eigenvectors for each eigenvalue. We start with λ1=1:

(A−I3)v=0⟺(3−302−201−10)v=0RREF→(1−10000000)v=0.

The parametric vector form is

{x=yy=yz=z⟹(xyz)+y(110)+z(001).

Hence a basis for the 1-eigenspace is

B1={v1,v2}wherev1=(110),v2=(001).

Now we compute the eigenspace for λ2=2:

(A−2I3)v=0⟺(2−302−301−1−1)v=0RREF→(10−301−2000)v=0

The parametric form is x=3z,y=2z, so an eigenvector with eigenvalue 2 is

v3=(321).

The eigenvectors v1,v2,v3 are linearly independent: v1,v2 form a basis for the 1-eigenspace, and v3 is not contained in the 1-eigenspace because its eigenvalue is 2. Therefore, Theorem 5.4.1 says that

A=CDC−1forC=(103102011)D=(100010002).

Here is the procedure we used in the above examples.

Let A be an n×n matrix. To diagonalize A:

- Find the eigenvalues of A using the characteristic polynomial.

- For each eigenvalue λ of A, compute a basis Bλ for the λ-eigenspace.

- If there are fewer than n total vectors in all of the eigenspace bases Bλ, then the matrix is not diagonalizable.

- Otherwise, the n vectors v1,v2,…,vn in the eigenspace bases are linearly independent, and A=CDC−1 for C=(|||v1v2⋯vn|||)andD=(λ10⋯00λ2⋯0⋮⋮⋱⋮00⋯λn), where λi is the eigenvalue for vi.

We will justify the linear independence assertion in part 4 in the proof of Theorem 5.4.3 below.

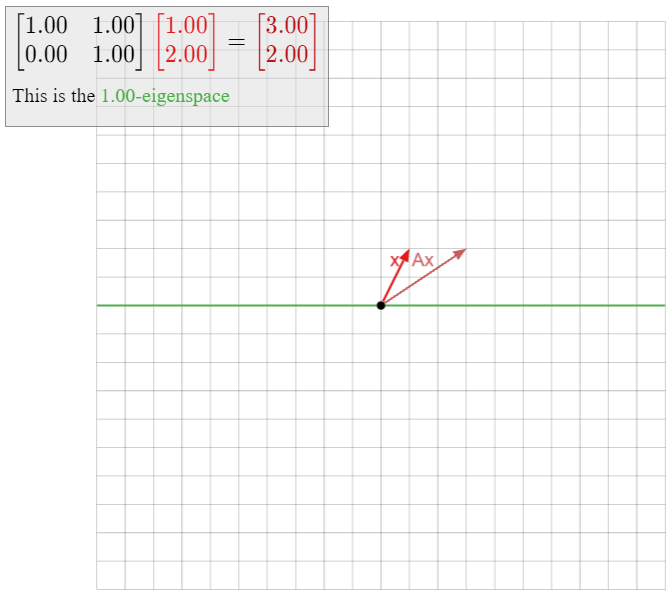

Let

A=(1101),

so T(x)=Ax is a shear, Example 3.1.8 in Section 3.1. The characteristic polynomial of A is f(λ)=(λ−1)2, so the only eigenvalue of A is 1. We compute the 1-eigenspace:

(A−I2)v=0⟺(0100)(xy)=0⟺y=0.

In other words, the 1-eigenspace is exactly the x-axis, so all of the eigenvectors of A lie on the x-axis. It follows that A does not admit two linearly independent eigenvectors, so by Theorem 5.4.1, it is not diagonalizable.

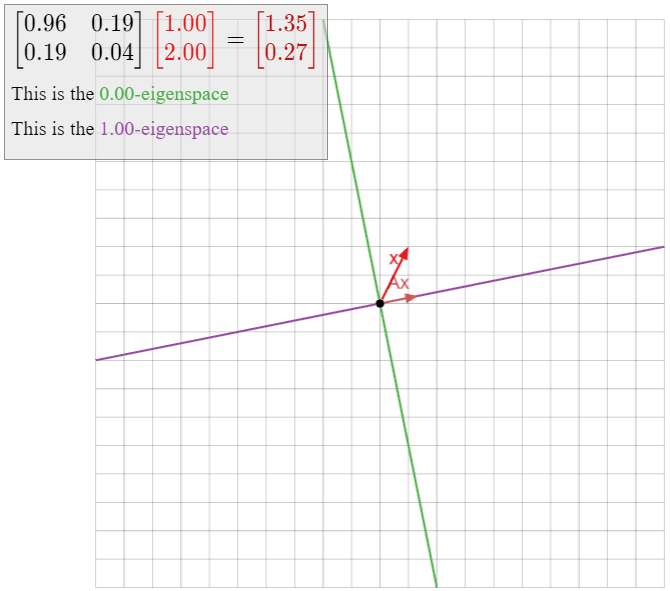

In Example 5.1.8 in Section 5.1, we studied the eigenvalues of a shear geometrically; we reproduce the interactive demo here.

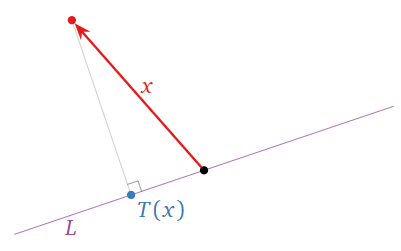

Let L be a line through the origin in R2, and define T:R2→R2 to be the transformation that sends a vector x to the closest point on L to x, as in the picture below.

Figure 5.4.4

This is an example of an orthogonal projection. We will see in Section 6.3 that T is a linear transformation; let A be the matrix for T. Any vector on L is not moved by T because it is the closest point on L to itself: hence it is an eigenvector of A with eigenvalue 1. Let L⊥ be the line perpendicular to L and passing through the origin. Any vector x on L⊥ is closest to the zero vector on L, so a (nonzero) such vector is an eigenvector of A with eigenvalue 0. (See Example 5.1.5 in Section 5.1 for a special case.) Since A has two distinct eigenvalues, it is diagonalizable; in fact, we know from Theorem 5.4.1 that A is similar to the matrix (1000).

Note that we never had to do any algebra! We know that A is diagonalizable for geometric reasons.

Let

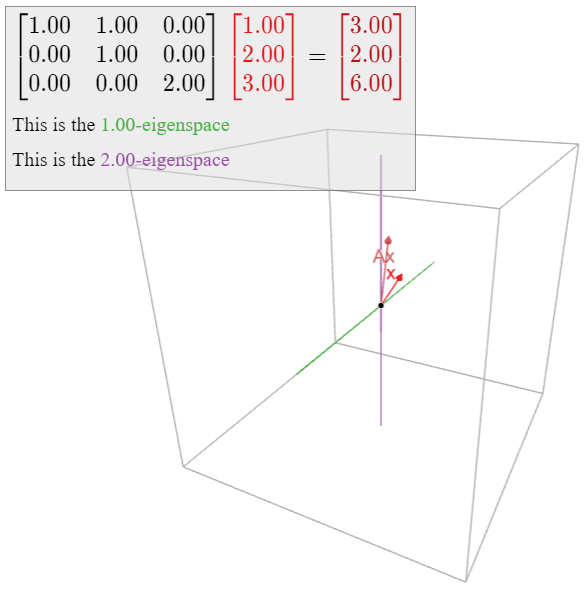

A=(110010002).

The characteristic polynomial of A is f(λ)=−(λ−1)2(λ−2), so the eigenvalues of A are 1 and 2. We compute the 1-eigenspace:

(A−I3)v=0⟺(010000002)(xyz)=0⟺y=z=0.

In other words, the 1-eigenspace is the x-axis. Similarly,

(A−2I3)v=0⟺(−1100−10000)(xyz)=0⟺x=y=0,

so the 2-eigenspace is the z-axis. In particular, all eigenvectors of A lie on the xz-plane, so there do not exist three linearly independent eigenvectors of A. By Theorem 5.4.1, the matrix A is not diagonalizable.

Notice that A contains a 2×2 block on its diagonal that looks like a shear:

A=(110010002).

This makes one suspect that such a matrix is not diagonalizable.

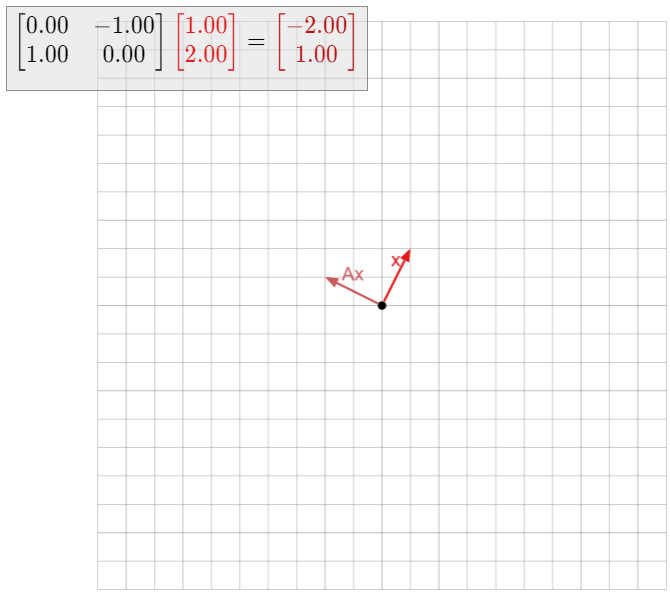

Let

A=(0−110),

so T(x)=Ax is the linear transformation that rotates counterclockwise by 90∘. We saw in Example 5.1.9 in Section 5.1 that A does not have any eigenvectors at all. It follows that A is not diagonalizable.

The characteristic polynomial of A is f(λ)=λ2+1, which of course does not have any real roots. If we allow complex numbers, however, then f has two roots, namely, ±i, where i=√−1. Hence the matrix is diagonalizable if we allow ourselves to use complex numbers. We will treat this topic in detail in Section 5.5.

The following point is often a source of confusion.

Of the following matrices, the first is diagonalizable and invertible, the second is diagonalizable but not invertible, the third is invertible but not diagonalizable, and the fourth is neither invertible nor diagonalizable, as the reader can verify:

(1001)(1000)(1101)(0100).

As in the above Example 5.4.9, one can check that the matrix

Aλ=(λ10λ)

is not diagonalizable for any number λ. We claim that any non-diagonalizable 2×2 matrix B with a real eigenvalue λ is similar to Aλ. Therefore, up to similarity, these are the only such examples.

To prove this, let B be such a matrix. Let v1 be an eigenvector with eigenvalue λ, and let v2 be any vector in R2 that is not collinear with v1, so that {v1,v2} forms a basis for R2. Let C be the matrix with columns v1,v2, and consider A=C−1BC. We have Ce1=v1 and Ce2=v2, so C−1v1=e1 and C−1v2=e2. We can compute the first column of A as follows:

Ae1=C−1BCe1=C−1Bv1=C−1λv1=λC−1v1=λe1.

Therefore, A has the form

A=(λb0d).

Since A is similar to B, it also has only one eigenvalue λ; since A is upper-triangular, this implies d=\lambda\text{,} so

A = \left(\begin{array}{cc}\lambda&b\\0&\lambda\end{array}\right). \nonumber

As B is not diagonalizable, we know A is not diagonal (B is similar to A), so b\neq 0. Now we observe that

\left(\begin{array}{cc}1/b&0\\0&1\end{array}\right)\left(\begin{array}{cc}\lambda&b\\0&\lambda\end{array}\right)\left(\begin{array}{cc}1/b&0\\0&1\end{array}\right)^{-1}=\left(\begin{array}{cc}\lambda /b&1 \\ 0&\lambda\end{array}\right)\left(\begin{array}{cc}b&0\\0&1\end{array}\right)=\left(\begin{array}{cc}\lambda&1 \\ 0&\lambda\end{array}\right)=A_{\lambda}.\nonumber

We have shown that B is similar to A\text{,} which is similar to A_\lambda\text{,} so B is similar to A_\lambda by the transitivity property, Proposition 5.3.1 in Section 5.3, of similar matrices.

The Geometry of Diagonalizable Matrices

A diagonal matrix is easy to understand geometrically, as it just scales the coordinate axes:

\left(\begin{array}{ccc}1&0&0\\0&2&0\\0&0&3\end{array}\right)\left(\begin{array}{c}1\\0\\0\end{array}\right)=1\cdot\left(\begin{array}{c}1\\0\\0\end{array}\right)\quad\left(\begin{array}{ccc}1&0&0\\0&2&0\\0&0&3\end{array}\right)\left(\begin{array}{c}0\\1\\0\end{array}\right)=2\cdot\left(\begin{array}{c}0\\1\\0\end{array}\right)\nonumber

\left(\begin{array}{ccc}1&0&0\\0&2&0\\0&0&3\end{array}\right)\left(\begin{array}{c}0\\0\\1\end{array}\right)=3\cdot\left(\begin{array}{c}0\\0\\1\end{array}\right).\nonumber

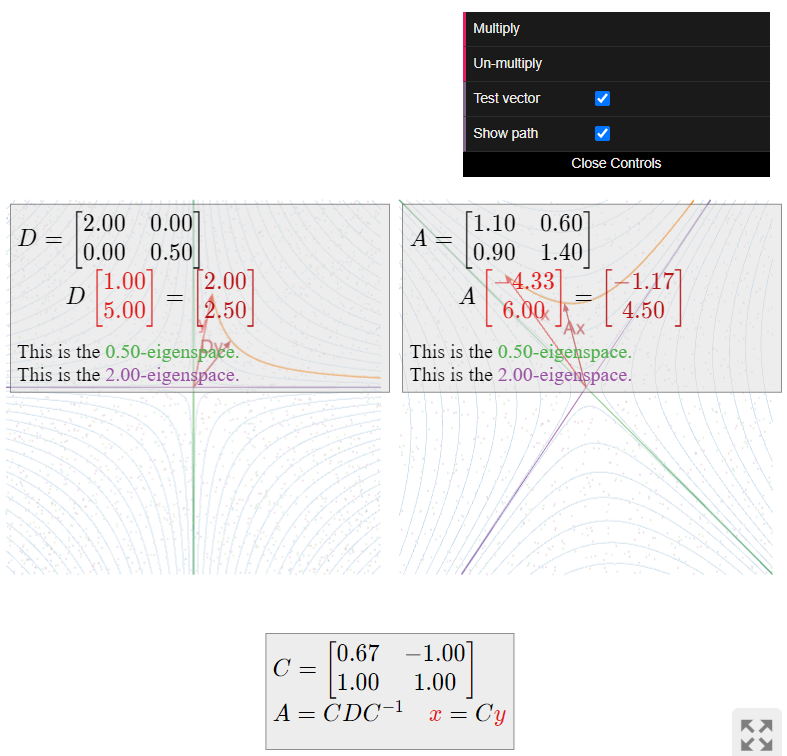

Therefore, we know from Section 5.3 that a diagonalizable matrix simply scales the “axes” with respect to a different coordinate system. Indeed, if v_1,v_2,\ldots,v_n are linearly independent eigenvectors of an n\times n matrix A\text{,} then A scales the v_i-direction by the eigenvalue \lambda_i.

In the following examples, we visualize the action of a diagonalizable matrix A in terms of its dynamics. In other words, we start with a collection of vectors (drawn as points), and we see where they move when we multiply them by A repeatedly.

Describe how the matrix

A = \frac{1}{10}\left(\begin{array}{cc}11&6\\9&14\end{array}\right) \nonumber

acts on the plane.

Solution

First we diagonalize A. The characteristic polynomial is

f(\lambda) = \lambda^2 - \text{Tr}(A)\lambda + \det(A) = \lambda^2 - \frac 52\lambda + 1 = (\lambda - 2)\left(\lambda - \frac 12\right). \nonumber

We compute the 2-eigenspace:

(A-2I_3)v = 0 \iff \frac 1{10}\left(\begin{array}{cc}-9&6\\9&-6\end{array}\right)v = 0 \;\xrightarrow{\text{RREF}}\; \left(\begin{array}{cc}1&-2/3\\0&0\end{array}\right)v = 0. \nonumber

The parametric form of this equation is x = 2/3y\text{,} so one eigenvector is v_1 = {2/3\choose 1}. For the 1/2-eigenspace, we have:

\left(A-\frac 12I_3\right)v = 0 \iff \frac 1{10}\left(\begin{array}{cc}6&6\\9&9\end{array}\right)v = 0 \;\xrightarrow{\text{RREF}}\; \left(\begin{array}{cc}1&1\\0&0\end{array}\right)v = 0. \nonumber

The parametric form of this equation is x = -y\text{,} so an eigenvector is v_2 = {-1\choose 1}. It follows that A = CDC^{-1}\text{,} where

C = \left(\begin{array}{cc}2/3&-1 \\ 1&1\end{array}\right) \qquad D = \left(\begin{array}{cc}2&0\\0&1/2\end{array}\right). \nonumber

The diagonal matrix D scales the x-coordinate by 2 and the y-coordinate by 1/2. Therefore, it moves vectors closer to the x-axis and farther from the y-axis. In fact, since (2x)(y/2) = xy\text{,} multiplication by D does not move a point off of a hyperbola xy = C.

The matrix A does the same thing, in the v_1,v_2-coordinate system: multiplying a vector by A scales the v_1-coordinate by 2 and the v_2-coordinate by 1/2. Therefore, A moves vectors closer to the 2-eigenspace and farther from the 1/2-eigenspace.

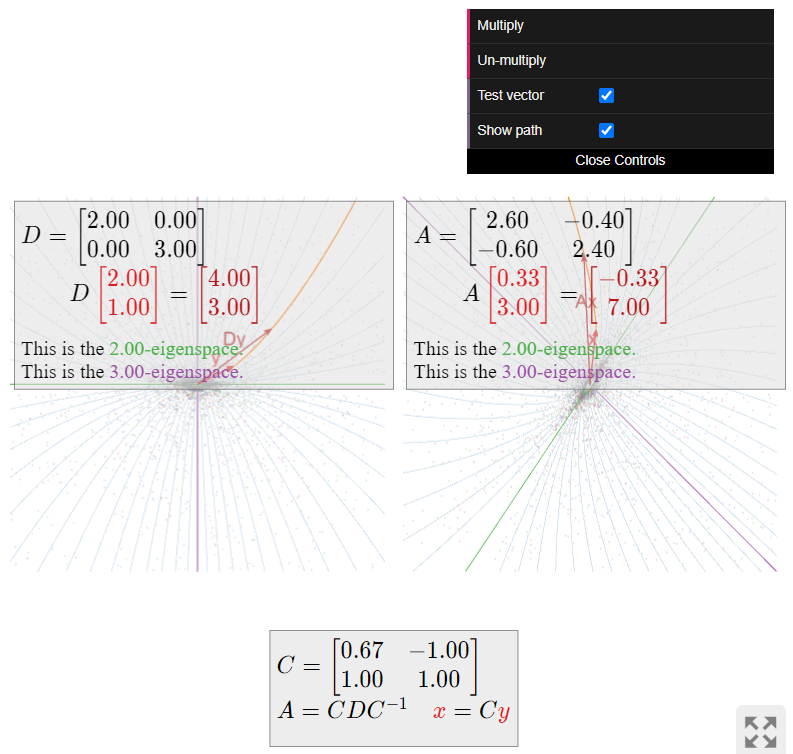

Describe how the matrix

A = \frac 1{5}\left(\begin{array}{cc}13&-2\\-3&12\end{array}\right) \nonumber

acts on the plane.

Solution

First we diagonalize A. The characteristic polynomial is

f(\lambda) = \lambda^2 - \text{Tr}(A)\lambda + \det(A) = \lambda^2 - 5\lambda + 6 = (\lambda - 2)(\lambda - 3). \nonumber

Next we compute the 2-eigenspace:

(A-2I_3)v = 0 \iff \frac 15\left(\begin{array}{cc}3&-2\\-3&2\end{array}\right)v = 0 \;\xrightarrow{\text{RREF}}\; \left(\begin{array}{cc}1&-2/3\\0&0\end{array}\right)v = 0. \nonumber

The parametric form of this equation is x = 2/3y\text{,} so one eigenvector is v_1 = {2/3\choose 1}. For the 3-eigenspace, we have:

(A-3I_3)v = 0 \iff \frac 15\left(\begin{array}{cc}-2&-2\\-3&-3\end{array}\right)v = 0 \;\xrightarrow{\text{RREF}}\; \left(\begin{array}{cc}1&1\\0&0\end{array}\right)v = 0. \nonumber

The parametric form of this equation is x = -y\text{,} so an eigenvector is v_2 = {-1\choose 1}. It follows that A = CDC^{-1}\text{,} where

C = \left(\begin{array}{cc}2/3&-1\\1&1\end{array}\right) \qquad D = \left(\begin{array}{cc}2&0\\0&3\end{array}\right). \nonumber

The diagonal matrix D scales the x-coordinate by 2 and the y-coordinate by 3. Therefore, it moves vectors farther from both the x-axis and the y-axis, but faster in the y-direction than the x-direction.

The matrix A does the same thing, in the v_1,v_2-coordinate system: multiplying a vector by A scales the v_1-coordinate by 2 and the v_2-coordinate by 3. Therefore, A moves vectors farther from the 2-eigenspace and the 3-eigenspace, but faster in the v_2-direction than the v_1-direction.

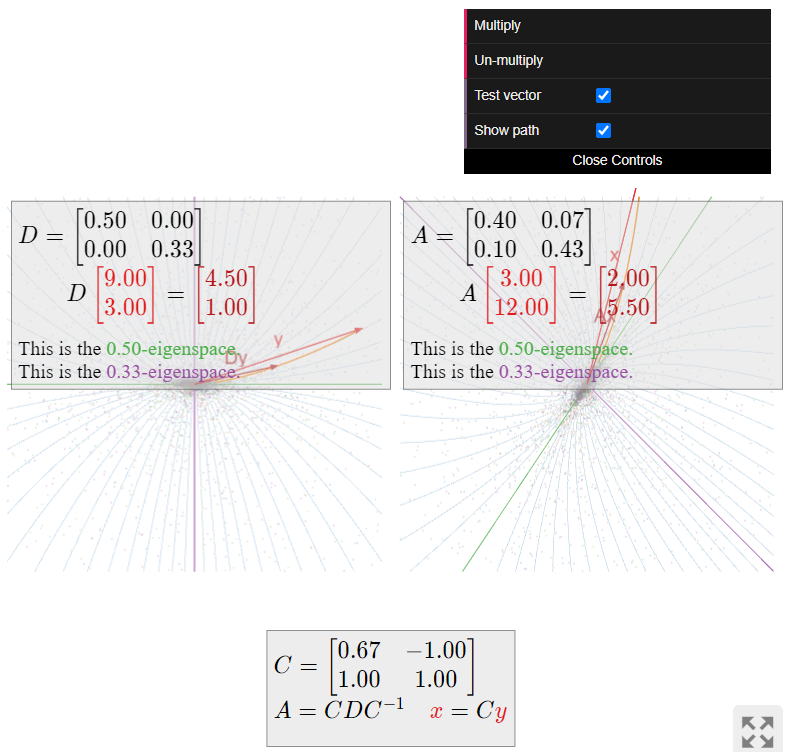

Describe how the matrix

A' = \frac 1{30}\left(\begin{array}{cc}12&2\\3&13\end{array}\right) \nonumber

acts on the plane.

Solution

This is the inverse of the matrix A from the previous Example \PageIndex{14}. In that example, we found A = CDC^{-1} for

C = \left(\begin{array}{cc}2/3&-1\\1&1\end{array}\right) \qquad D = \left(\begin{array}{cc}2&0\\0&3\end{array}\right). \nonumber

Therefore, remembering that taking inverses reverses the order of multiplication, Fact 3.5.1 in Section 3.5, we have

A' = A^{-1} = (CDC^{-1})^{-1} = (C^{-1})^{-1} D^{-1} C^{-1} = C\left(\begin{array}{cc}1/2&0\\0&1/3\end{array}\right)C^{-1}. \nonumber

The diagonal matrix D^{-1} does the opposite of what D does: it scales the x-coordinate by 1/2 and the y-coordinate by 1/3. Therefore, it moves vectors closer to both coordinate axes, but faster in the y-direction. The matrix A' does the same thing, but with respect to the v_1,v_2-coordinate system.

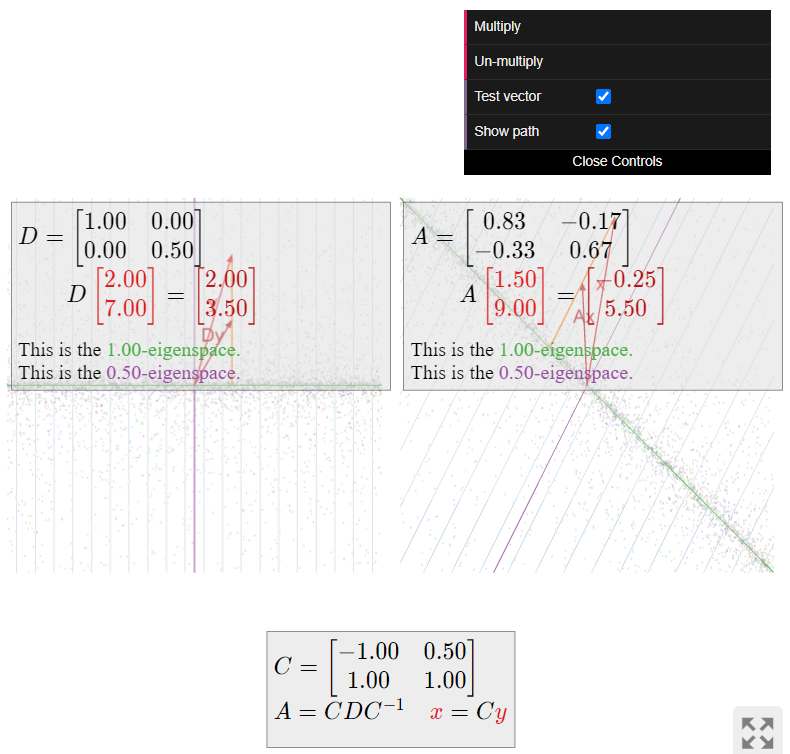

Describe how the matrix

A = \frac 16\left(\begin{array}{cc}5&-1\\-2&4\end{array}\right) \nonumber

acts on the plane.

Solution

First we diagonalize A. The characteristic polynomial is

f(\lambda) = \lambda^2 - \text{Tr}(A)\lambda + \det(A) = \lambda^2 - \frac 32\lambda + \frac 12 = (\lambda - 1)\left(\lambda - \frac 12\right). \nonumber

Next we compute the 1-eigenspace:

(A-I_3)v = 0 \iff \frac 16\left(\begin{array}{cc}-1&-1\\-2&-2\end{array}\right)v = 0 \;\xrightarrow{\text{RREF}}\; \left(\begin{array}{cc}1&1\\0&0\end{array}\right)v = 0. \nonumber

The parametric form of this equation is x = -y\text{,} so one eigenvector is v_1 = {-1\choose 1}. For the 1/2-eigenspace, we have:

\left(A-\frac 12I_3\right)v = 0 \iff \frac 16\left(\begin{array}{cc}2&-1\\-2&1\end{array}\right)v = 0 \;\xrightarrow{\text{RREF}}\; \left(\begin{array}{cc}1&-1/2 \\ 0&0\end{array}\right)v = 0. \nonumber

The parametric form of this equation is x = 1/2y\text{,} so an eigenvector is v_2 = {1/2\choose 1}. It follows that A = CDC^{-1}\text{,} where

C = \left(\begin{array}{cc}-1&1/2 \\ 1&1\end{array}\right) \qquad D = \left(\begin{array}{cc}1&0\\0&1/2\end{array}\right). \nonumber

The diagonal matrix D scales the y-coordinate by 1/2 and does not move the x-coordinate. Therefore, it simply moves vectors closer to the x-axis along vertical lines. The matrix A does the same thing, in the v_1,v_2-coordinate system: multiplying a vector by A scales the v_2-coordinate by 1/2 and does not change the v_1-coordinate. Therefore, A “sucks vectors into the 1-eigenspace” along lines parallel to v_2.

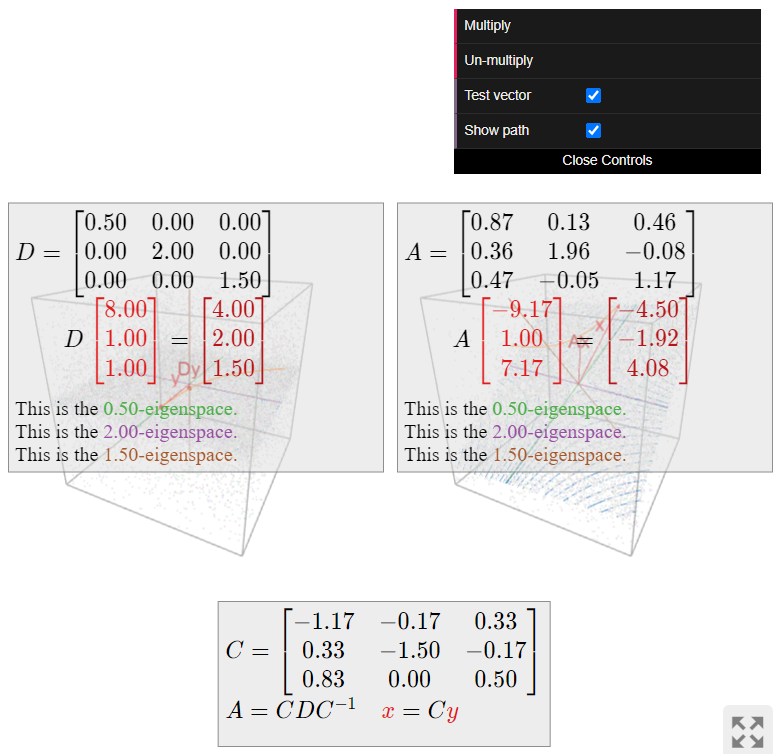

The diagonal matrix

D = \left(\begin{array}{ccc}1/2&0&0\\0&2&0\\0&0&3/2\end{array}\right) \nonumber

scales the x-coordinate by 1/2\text{,} the y-coordinate by 2\text{,} and the z-coordinate by 3/2. Looking straight down at the xy-plane, the points follow parabolic paths taking them away from the x-axis and toward the y-axis. The z-coordinate is scaled by 3/2\text{,} so points fly away from the xy-plane in that direction.

If A = CDC^{-1} for some invertible matrix C\text{,} then A does the same thing as D\text{,} but with respect to the coordinate system defined by the columns of C.

Algebraic and Geometric Multiplicity

In this subsection, we give a variant of Theorem \PageIndex{1} that provides another criterion for diagonalizability. It is stated in the language of multiplicities of eigenvalues.

In algebra, we define the multiplicity of a root \lambda_0 of a polynomial f(\lambda) to be the number of factors of \lambda-\lambda_0 that divide f(\lambda). For instance, in the polynomial

f(\lambda) = -\lambda^3 + 4\lambda^2 - 5\lambda + 2 = -(\lambda-1)^2(\lambda-2), \nonumber

the root \lambda_0=2 has multiplicity 1\text{,} and the root \lambda_0=1 has multiplicity 2.

Let A be an n\times n matrix, and let \lambda be an eigenvalue of A.

- The algebraic multiplicity of \lambda is its multiplicity as a root of the characteristic polynomial of A.

- The geometric multiplicity of \lambda is the dimension of the \lambda-eigenspace.

Since the \lambda-eigenspace of A is \text{Nul}(A-\lambda I_n)\text{,} its dimension is the number of free variables in the system of equations (A - \lambda I_n)x=0\text{,} i.e., the number of columns without pivots in the matrix A-\lambda I_n.

The shear matrix

A = \left(\begin{array}{cc}1&1\\0&1\end{array}\right) \nonumber

has only one eigenvalue \lambda=1. The characteristic polynomial of A is f(\lambda) = (\lambda-1)^2\text{,} so 1 has algebraic multiplicity 2\text{,} as it is a double root of f. On the other hand, we showed in Example \PageIndex{9} that the 1-eigenspace of A is the x-axis, so the geometric multiplicity of 1 is equal to 1. This matrix is not diagonalizable.

![A graph showing vectors in red with components [1,0] and [1,3], resulting in component [2,3]. Green line and grid in the background, with labeled coordinates and a description above.](https://math.libretexts.org/@api/deki/files/65376/clipboard_e579fb3ddde68d8fe8db921d0042286e8.png?revision=1)

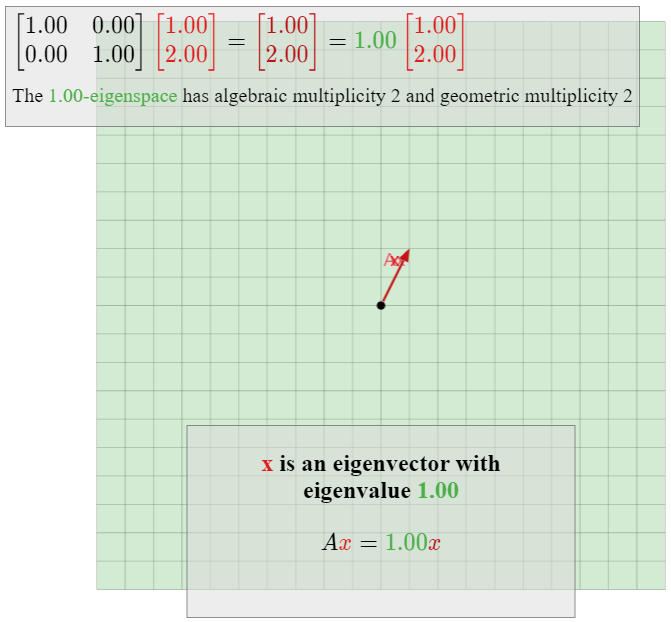

The identity matrix

I_2 = \left(\begin{array}{cc}1&0\\0&1\end{array}\right) \nonumber

also has characteristic polynomial (\lambda-1)^2\text{,} so the eigenvalue 1 has algebraic multiplicity 2. Since every nonzero vector in \mathbb{R}^2 is an eigenvector of I_2 with eigenvalue 1\text{,} the 1-eigenspace is all of \mathbb{R}^2 \text{,} so the geometric multiplicity is 2 as well. This matrix is diagonal.

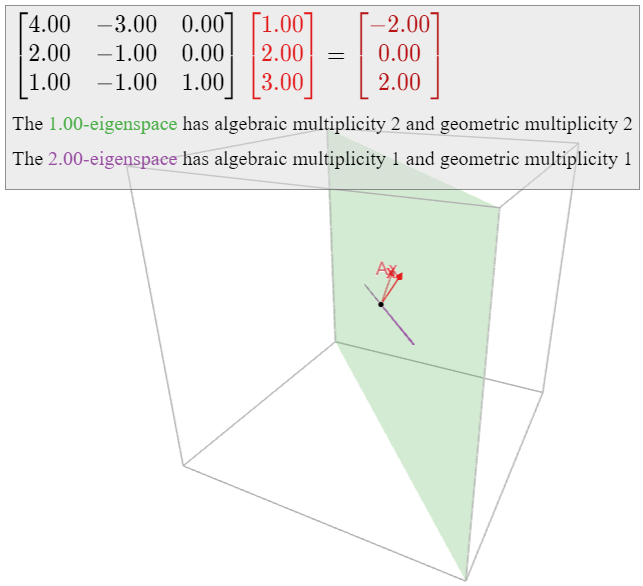

Continuing with Example \PageIndex{8}, let

A = \left(\begin{array}{ccc}4&-2&0\\2&-1&0\\1&-1&1\end{array}\right). \nonumber

The characteristic polynomial is f(\lambda) = -(\lambda-1)^2(\lambda-2)\text{,} so that 1 and 2 are the eigenvalues, with algebraic multiplicities 2 and 1\text{,} respectively. We computed that the 1-eigenspace is a plane and the 2-eigenspace is a line, so that 1 and 2 also have geometric multiplicities 2 and 1\text{,} respectively. This matrix is diagonalizable.

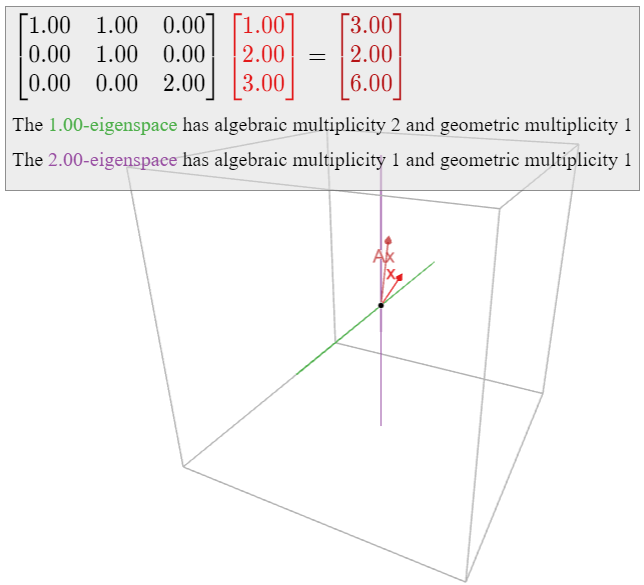

In Example \PageIndex{11}, we saw that the matrix

A = \left(\begin{array}{ccc}1&1&0\\0&1&0\\0&0&2\end{array}\right) \nonumber

also has characteristic polynomial f(\lambda) = -(\lambda-1)^2(\lambda-2)\text{,} so that 1 and 2 are the eigenvalues, with algebraic multiplicities 2 and 1\text{,} respectively. In this case, however, both eigenspaces are lines, so that both eigenvalues have geometric multiplicity 1. This matrix is not diagonalizable.

We saw in the above examples that the algebraic and geometric multiplicities need not coincide. However, they do satisfy the following fundamental inequality, the proof of which is beyond the scope of this text.

Let A be a square matrix and let \lambda be an eigenvalue of A. Then

1 \leq \text{(the geometric multiplicity of $\lambda$)} \leq \text{(the algebraic multiplicity of $\lambda$)}. \nonumber

In particular, if the algebraic multiplicity of \lambda is equal to 1\text{,} then so is the geometric multiplicity.

If A has an eigenvalue \lambda with algebraic multiplicity 1\text{,} then the \lambda-eigenspace is a line.

We can use Theorem \PageIndex{2} to give another criterion for diagonalizability (in addition to Theorem \PageIndex{1}).

Let A be an n\times n matrix. The following are equivalent:

- A is diagonalizable.

- The sum of the geometric multiplicities of the eigenvalues of A is equal to n.

- The sum of the algebraic multiplicities of the eigenvalues of A is equal to n\text{,} and for each eigenvalue, the geometric multiplicity equals the algebraic multiplicity.

- Proof

-

We will show 1\implies 2\implies 3\implies 1. First suppose that A is diagonalizable. Then A has n linearly independent eigenvectors v_1,v_2,\ldots,v_n. This implies that the sum of the geometric multiplicities is at least n\text{:} for instance, if v_1,v_2,v_3 have the same eigenvalue \lambda\text{,} then the geometric multiplicity of \lambda is at least 3 (as the \lambda-eigenspace contains three linearly independent vectors), and so on. But the sum of the algebraic multiplicities is greater than or equal to the sum of the geometric multiplicities by Theorem \PageIndex{2}, and the sum of the algebraic multiplicities is at most n because the characteristic polynomial has degree n. Therefore, the sum of the geometric multiplicities equals n.

Now suppose that the sum of the geometric multiplicities equals n. As above, this forces the sum of the algebraic multiplicities to equal n as well. As the algebraic multiplicities are all greater than or equal to the geometric multiplicities in any case, this implies that they are in fact equal.

Finally, suppose that the third condition is satisfied. Then the sum of the geometric multiplicities equals n. Suppose that the distinct eigenvectors are \lambda_1,\lambda_2,\ldots,\lambda_k\text{,} and that \mathcal{B}_i is a basis for the \lambda_i-eigenspace, which we call V_i. We claim that the collection \mathcal{B} = \{v_1,v_2,\ldots,v_n\} of all vectors in all of the eigenspace bases \mathcal{B}_i is linearly independent. Consider the vector equation

0 = c_1v_1 + c_2v_2 + \cdots + c_nv_n. \nonumber

Grouping the eigenvectors with the same eigenvalues, this sum has the form

0 = \text{(something in $V_1$)} + \text{(something in $V_2$)} + \cdots + \text{(something in $V_k$)}. \nonumber

Since eigenvectors with distinct eigenvalues are linearly independent, Fact 5.1.1 in Section 5.1, each “something in V_i” is equal to zero. But this implies that all coefficients c_1,c_2,\ldots,c_n are equal to zero, since the vectors in each \mathcal{B}_i are linearly independent. Therefore, A has n linearly independent eigenvectors, so it is diagonalizable.

The first part of the third statement simply says that the characteristic polynomial of A factors completely into linear polynomials over the real numbers: in other words, there are no complex (non-real) roots. The second part of the third statement says in particular that for any diagonalizable matrix, the algebraic and geometric multiplicities coincide.

Let A be a square matrix and let \lambda be an eigenvalue of A. If the algebraic multiplicity of \lambda does not equal the geometric multiplicity, then A is not diagonalizable.

The examples at the beginning of this subsection illustrate the theorem. Here we give some general consequences for diagonalizability of 2\times 2 and 3\times 3 matrices.

Let A be a 2\times 2 matrix. There are four cases:

- A has two different eigenvalues. In this case, each eigenvalue has algebraic and geometric multiplicity equal to one. This implies A is diagonalizable. For example: A=\left(\begin{array}{cc}1&7\\0&2\end{array}\right).\nonumber

- A has one eigenvalue \lambda of algebraic and geometric multiplicity 2. To say that the geometric multiplicity is 2 means that \text{Nul}(A-\lambda I_2) = \mathbb{R}^2 \text{,} i.e., that every vector in \mathbb{R}^2 is in the null space of A-\lambda I_2. This implies that A-\lambda I_2 is the zero matrix, so that A is the diagonal matrix \lambda I_2. In particular, A is diagonalizable. For example: A=\left(\begin{array}{cc}1&0\\0&1\end{array}\right).\nonumber

- A has one eigenvalue \lambda of algebraic multiplicity 2 and geometric multiplicity 1. In this case, A is not diagonalizable, by part 3 of Theorem \PageIndex{3}. For example, a shear: A=\left(\begin{array}{cc}1&1\\0&1\end{array}\right).\nonumber

- A has no eigenvalues. This happens when the characteristic polynomial has no real roots. In particular, A is not diagonalizable. For example, a rotation: A=\left(\begin{array}{cc}1&-1\\1&1\end{array}\right).\nonumber

Let A be a 3\times 3 matrix. We can analyze the diagonalizability of A on a case-by-case basis, as in the previous Example \PageIndex{20}.

- A has three different eigenvalues. In this case, each eigenvalue has algebraic and geometric multiplicity equal to one. This implies A is diagonalizable. For example: A=\left(\begin{array}{ccc}1&7&4\\0&2&3\\0&0&-1\end{array}\right).\nonumber

- A has two distinct eigenvalues \lambda_1,\lambda_2. In this case, one has algebraic multiplicity one and the other has algebraic multiplicity two; after reordering, we can assume \lambda_1 has multiplicity 1 and \lambda_2 has multiplicity 2. This implies that \lambda_1 has geometric multiplicity 1\text{,} so A is diagonalizable if and only if the \lambda_2-eigenspace is a plane. For example: A=\left(\begin{array}{ccc}1&7&4\\0&2&0\\0&0&2\end{array}\right).\nonumber On the other hand, if the geometric multiplicity of \lambda_2 is 1\text{,} then A is not diagonalizable. For example: A=\left(\begin{array}{ccc}1&7&4\\0&2&1\\0&0&2\end{array}\right).\nonumber

- A has only one eigenvalue \lambda. If the algebraic multiplicity of \lambda is 1\text{,} then A is not diagonalizable. This happens when the characteristic polynomial has two complex (non-real) roots. For example: A=\left(\begin{array}{ccc}1&-1&0\\1&1&0\\0&0&2\end{array}\right).\nonumber Otherwise, the algebraic multiplicity of \lambda is equal to 3. In this case, if the geometric multiplicity is 1\text{:} A=\left(\begin{array}{ccc}1&1&1\\0&1&1\\0&0&1\end{array}\right)\nonumber or 2\text{:} A=\left(\begin{array}{ccc}1&0&1\\0&1&1\\0&0&1\end{array}\right)\nonumber then A is not diagonalizable. If the geometric multiplicity is 3\text{,} then \text{Nul}(A-\lambda I_3) = \mathbb{R}^3 \text{,} so that A-\lambda I_3 is the zero matrix, and hence A = \lambda I_3. Therefore, in this case A is necessarily diagonal, as in: A=\left(\begin{array}{ccc}1&0&0\\0&1&0\\0&0&1\end{array}\right).\nonumber

Similarity and Multiplicity

Recall from Fact 5.3.2 in Section 5.3 that similar matrices have the same eigenvalues. It turns out that both notions of multiplicity of an eigenvalue are preserved under similarity.

Let A and B be similar n\times n matrices, and let \lambda be an eigenvalue of A and B. Then:

- The algebraic multiplicity of \lambda is the same for A and B.

- The geometric multiplicity of \lambda is the same for A and B.

- Proof

-

Since A and B have the same characteristic polynomial, the multiplicity of \lambda as a root of the characteristic polynomial is the same for both matrices, which proves the first statement. For the second, suppose that A = CBC^{-1} for an invertible matrix C. By Fact 5.3.3 in Section 5.3, the matrix C takes eigenvectors of B to eigenvectors of A\text{,} both with eigenvalue \lambda.

Let \{v_1,v_2,\ldots,v_k\} be a basis of the \lambda-eigenspace of B. We claim that \{Cv_1,Cv_2,\ldots,Cv_k\} is linearly independent. Suppose that

c_1Cv_1 + c_2Cv_2 + \cdots + c_kCv_k = 0. \nonumber

Regrouping, this means

C\bigl(c_1v_1 + c_2v_2 + \cdots + c_kv_k\bigr) = 0. \nonumber

By Theorem 5.1.1 in Section 5.1, the null space of C is trivial, so this implies

c_1v_1 + c_2v_2 + \cdots + c_kv_k = 0. \nonumber

Since v_1,v_2,\ldots,v_k are linearly independent, we get c_1=c_2=\cdots=c_k=0\text{,} as desired.

By the previous paragraph, the dimension of the \lambda-eigenspace of A is greater than or equal to the dimension of the \lambda-eigenspace of B. By symmetry (B is similar to A as well), the dimensions are equal, so the geometric multiplicities coincide.

For instance, the four matrices in Example \PageIndex{20} are not similar to each other, because the algebraic and/or geometric multiplicities of the eigenvalues do not match up. Or, combined with the above Theorem \PageIndex{3}, we see that a diagonalizable matrix cannot be similar to a non-diagonalizable one, because the algebraic and geometric multiplicities of such matrices cannot both coincide.

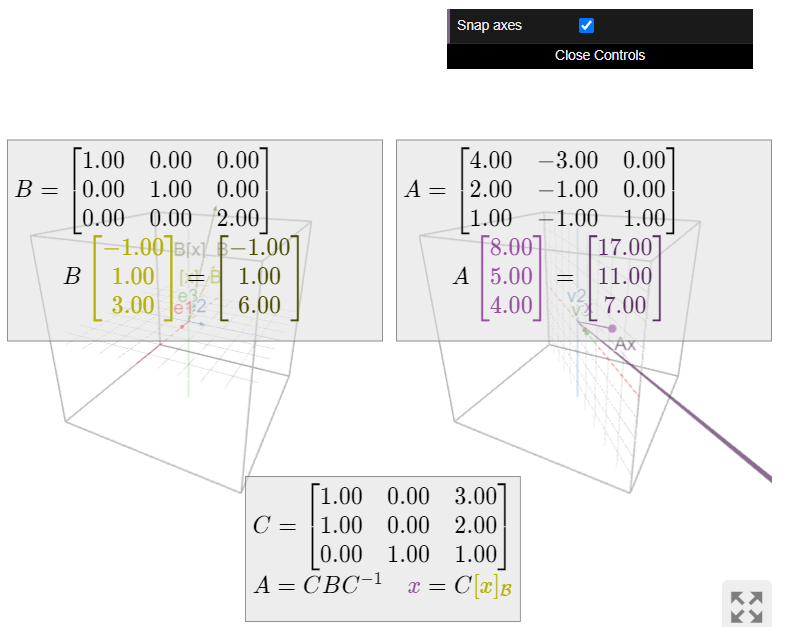

Continuing with this Example \PageIndex{8}, let

A = \left(\begin{array}{ccc}4&-3&0\\2&-1&0\\1&-1&1\end{array}\right). \nonumber

This is a diagonalizable matrix that is similar to

D = \left(\begin{array}{ccc}1&0&0\\0&1&0\\0&0&2\end{array}\right)\quad\text{using the matrix}\quad C = \left(\begin{array}{ccc}1&0&3\\1&0&2\\0&1&1\end{array}\right). \nonumber

The 1-eigenspace of D is the xy-plane, and the 2-eigenspace is the z-axis. The matrix C takes the xy-plane to the 1-eigenspace of A\text{,} which is again a plane, and the z-axis to the 2-eigenspace of A\text{,} which is again a line. This shows that the geometric multiplicities of A and D coincide.

The converse of Theorem \PageIndex{4} is false: there exist matrices whose eigenvectors have the same algebraic and geometric multiplicities, but which are not similar. See Example \PageIndex{23} below. However, for 2\times 2 and 3\times 3 matrices whose characteristic polynomial has no complex (non-real) roots, the converse of Theorem \PageIndex{4} is true. (We will handle the case of complex roots in Section 5.5.)

Show that the matrices

A=\left(\begin{array}{cccc}0&0&0&0\\0&0&1&0\\0&0&0&1\\0&0&0&0\end{array}\right)\quad\text{and}\quad B=\left(\begin{array}{cccc}0&1&0&0\\0&0&0&0\\0&0&0&1\\0&0&0&0\end{array}\right)\nonumber

have the same eigenvalues with the same algebraic and geometric multiplicities, but are not similar.

Solution

These matrices are upper-triangular. They both have characteristic polynomial f(\lambda) = \lambda^4\text{,} so they both have one eigenvalue 0 with algebraic multiplicity 4. The 0-eigenspace is the null space, Fact 5.1.2 in Section 5.1, which has dimension 2 in each case because A and B have two columns without pivots. Hence 0 has geometric multiplicity 2 in each case.

To show that A and B are not similar, we note that

A^2=\left(\begin{array}{cccc}0&0&0&0\\0&0&0&1\\0&0&0&0\\0&0&0&0\end{array}\right)\quad\text{and}\quad B^2=\left(\begin{array}{cccc}0&0&0&0\\0&0&0&0\\0&0&0&0\\0&0&0&0\end{array}\right),\nonumber

as the reader can verify. If A = CBC^{-1} then by Recipe: Compute Powers of a Diagonalizable Matrix, we have

A^2 = CB^2C^{-1} = C0C^{-1} = 0, \nonumber

which is not the case.

On the other hand, suppose that A and B are diagonalizable matrices with the same characteristic polynomial. Since the geometric multiplicities of the eigenvalues coincide with the algebraic multiplicities, which are the same for A and B\text{,} we conclude that there exist n linearly independent eigenvectors of each matrix, all of which have the same eigenvalues. This shows that A and B are both similar to the same diagonal matrix. Using the transitivity property, Proposition 5.3.1 in Section 5.3, of similar matrices, this shows:

Diagonalizable matrices are similar if and only if they have the same characteristic polynomial, or equivalently, the same eigenvalues with the same algebraic multiplicities.

Show that the matrices

A=\left(\begin{array}{ccc}1&7&2\\0&-1&3\\0&0&4\end{array}\right)\quad\text{and}\quad B=\left(\begin{array}{ccc}1&0&0\\-2&4&0\\-5&-4&-1\end{array}\right)\nonumber

are similar.

Solution

Both matrices have the three distinct eigenvalues 1,-1,4. Hence they are both diagonalizable, and are similar to the diagonal matrix

\left(\begin{array}{ccc}1&0&0\\0&-1&0\\0&0&4\end{array}\right). \nonumber

By the transitivity property, Proposition 5.3.1 in Section 5.3, of similar matrices, this implies that A and B are similar to each other.

Any two diagonal matrices with the same diagonal entries (possibly in a different order) are similar to each other. Indeed, such matrices have the same characteristic polynomial. We saw this phenomenon in Example \PageIndex{5}, where we noted that

\left(\begin{array}{ccc}1&0&0\\0&2&0\\0&0&3\end{array}\right)=\left(\begin{array}{ccc}0&0&1\\0&1&0\\1&0&0\end{array}\right)\left(\begin{array}{ccc}3&0&0\\0&2&0\\0&0&1\end{array}\right)\left(\begin{array}{ccc}0&0&1\\0&1&0\\1&0&0\end{array}\right)^{-1}.\nonumber